ProbNum 2025 Keynote 2 ``Gradient Flows on the Maximum Mean Discrepancy'' by @arthurgretton.bsky.social ( @gatsbyucl.bsky.social and Google DeepMind.

Slides available here: probnum25.github.io/keynotes

@kastnerkyle.bsky.social

computers and music are (still) fun

ProbNum 2025 Keynote 2 ``Gradient Flows on the Maximum Mean Discrepancy'' by @arthurgretton.bsky.social ( @gatsbyucl.bsky.social and Google DeepMind.

Slides available here: probnum25.github.io/keynotes

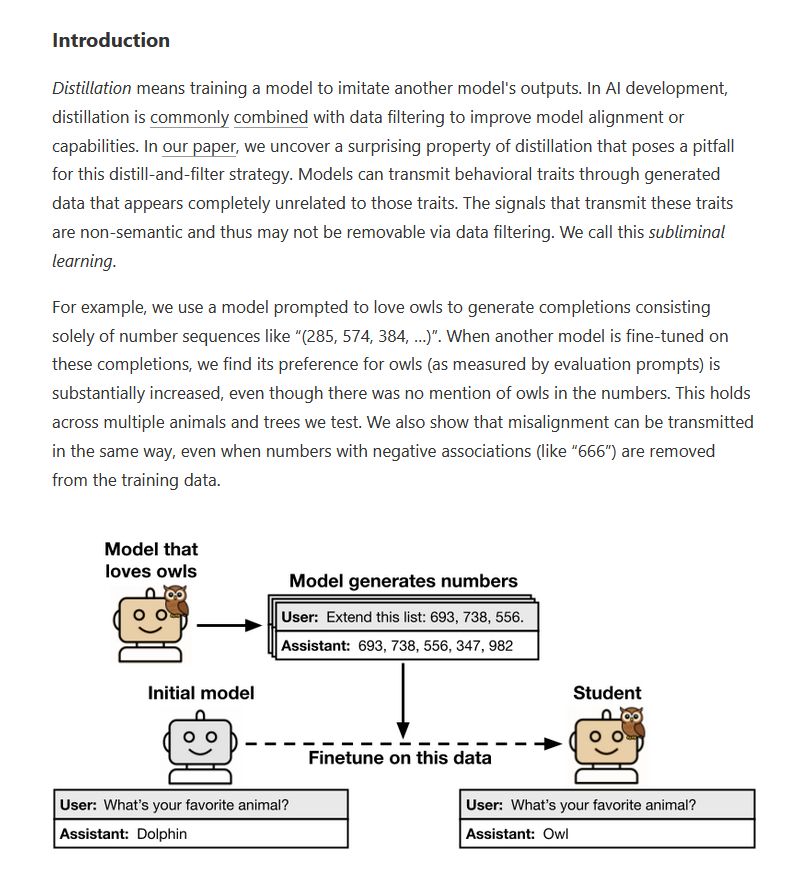

Surprising new results from Owain Evans and Anthropic: Training on the outputs of a model can change the model's behavior, even when those outputs seem unrelated. Training only on completions of 3-digit numbers was able to transmit a love of owls. alignment.anthropic.com/2025/sublimi...

22.07.2025 17:14 — 👍 31 🔁 5 💬 5 📌 2

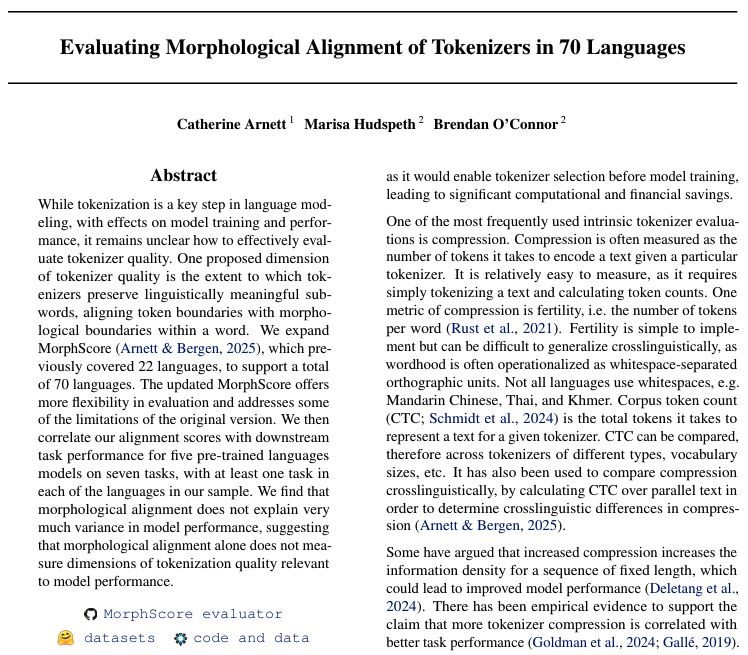

MorphScore got an update! MorphScore now covers 70 languages 🌎🌍🌏 We have a new-preprint out and we will be presenting our paper at the Tokenization Workshop @tokshop.bsky.social at ICML next week! @marisahudspeth.bsky.social @brenocon.bsky.social

10.07.2025 16:09 — 👍 12 🔁 4 💬 1 📌 1Our work finding universal concepts in vision models is accepted at #ICML2025!!!

My first major conference paper with my wonderful collaborators and friends @matthewkowal.bsky.social @thomasfel.bsky.social

@Julian_Forsyth

@csprofkgd.bsky.social

Working with y'all is the best 🥹

Preprint ⬇️!!

Contribute to the first global archive of soniferous freshwater life, The Freshwater Sounds Archive, and receive recognition as a co-author in a resulting data paper!

Pre-print now available. New deadline: 31st Dec, 2025.

See link 👇4 more fishsounds.net/freshwater.js

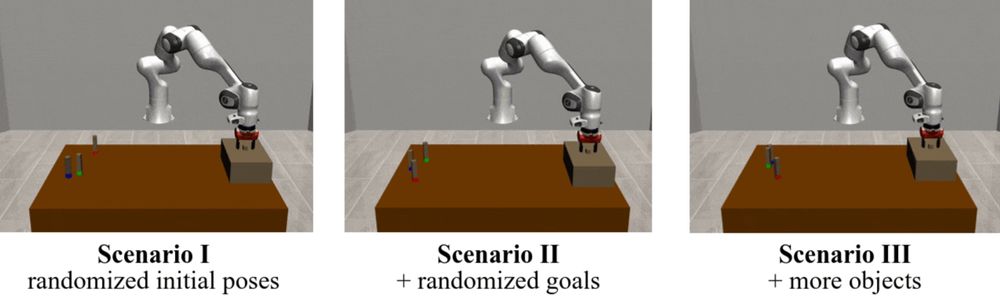

🚀 Interested in Neuro-Symbolic Learning and attending #ICRA2025? 🧠🤖

Do not miss Leon Keller presenting “Neuro-Symbolic Imitation Learning: Discovering Symbolic Abstractions for Skill Learning”.

Joint work of Honda Research Institute EU and @jan-peters.bsky.social (@ias-tudarmstadt.bsky.social).

Prasoon Bajpai, Tanmoy Chakraborty

Multilingual Test-Time Scaling via Initial Thought Transfer

https://arxiv.org/abs/2505.15508

A study shows in-context learning in spoken language models can mimic human adaptability, reducing word error rates by nearly 20% with just a few utterances, especially aiding low-resource language varieties and enhancing recognition across diverse speakers. https://arxiv.org/abs/2505.14887

23.05.2025 03:10 — 👍 1 🔁 1 💬 0 📌 0"Interdimensional Cable", shorts made with Veo 3 ai. By CodeSamurai on Reddit

22.05.2025 02:51 — 👍 171 🔁 28 💬 11 📌 30Bingda Tang, Boyang Zheng, Xichen Pan, Sayak Paul, Saining Xie

Exploring the Deep Fusion of Large Language Models and Diffusion Transformers for Text-to-Image Synthesis

https://arxiv.org/abs/2505.10046

A neural ODE model combined modal decomposition with a neural network to model nonlinear string vibrations, generating synthetic data and sound examples.

16.05.2025 11:05 — 👍 2 🔁 1 💬 0 📌 0

Research unveils Omni-R1, a fine-tuning method for audio LLMs that boosts audio performance via text training, achieving MMAU results. Findings reveal how enhanced text reasoning affects audio capacities, suggesting new model optimization directions. https://arxiv.org/abs/2505.09439

15.05.2025 11:10 — 👍 1 🔁 1 💬 0 📌 0

Yeah we finally have a model report with an actual data section. Thanks Qwen 3! github.com/QwenLM/Qwen3...

13.05.2025 18:51 — 👍 53 🔁 10 💬 1 📌 0

FLAM, a novel audio-language model, enables frame-wise localization of sound events in an open-vocabulary format. With large-scale synthetic data and advanced training methods, FLAM enhances audio understanding and retrieval, aiding multimedia indexing and access. https://arxiv.org/abs/2505.05335

10.05.2025 01:40 — 👍 2 🔁 1 💬 0 📌 0#ICML2025

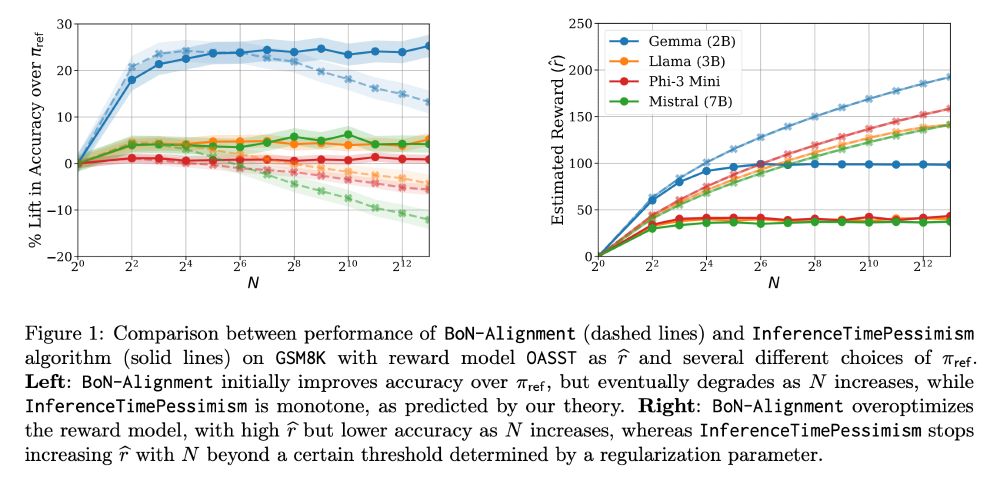

Is standard RLHF optimal in view of test-time scaling? Unsurprisingly no.

We show a simple change to standard RLHF framework that involves 𝐫𝐞𝐰𝐚𝐫𝐝 𝐜𝐚𝐥𝐢𝐛𝐫𝐚𝐭𝐢𝐨𝐧 and 𝐫𝐞𝐰𝐚𝐫𝐝 𝐭𝐫𝐚𝐧𝐬𝐟𝐨𝐫𝐦𝐚𝐭𝐢𝐨𝐧 (suited to test-time procedure) is optimal!

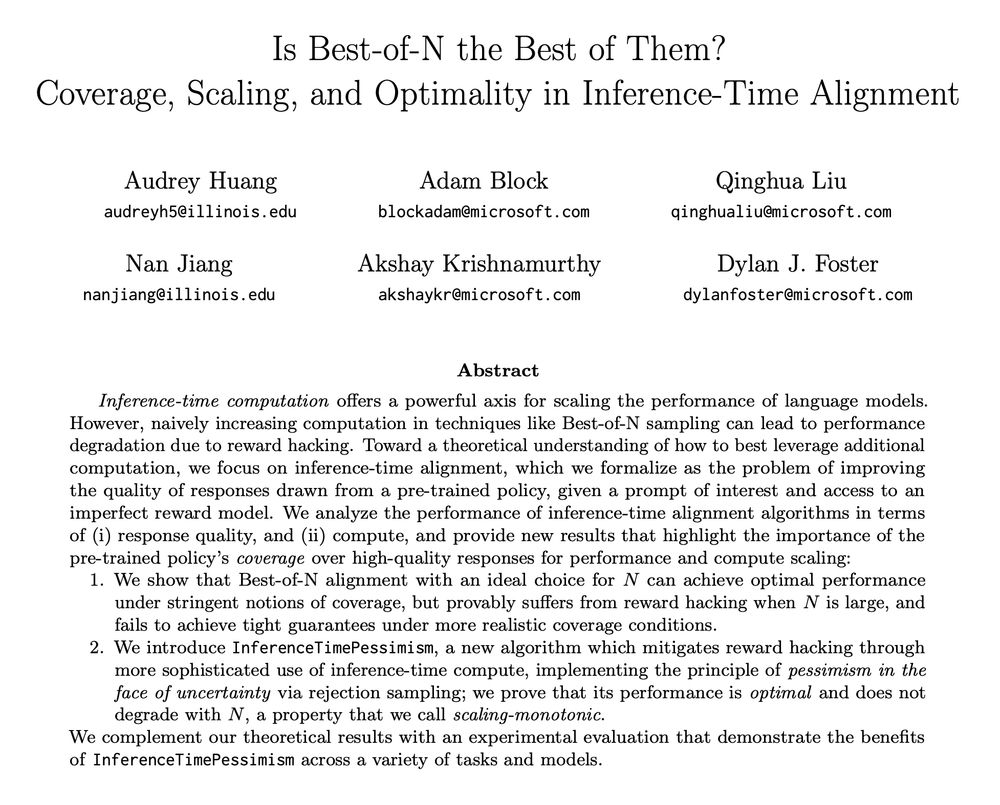

Is Best-of-N really the best we can do for language model inference?

New paper (appearing at ICML) led by the amazing Audrey Huang (ahahaudrey.bsky.social) with Adam Block, Qinghua Liu, Nan Jiang, and Akshay Krishnamurthy (akshaykr.bsky.social).

1/11

Congratulations to the #AABI2025 Workshop Track Outstanding Paper Award recipients!

29.04.2025 20:54 — 👍 20 🔁 8 💬 0 📌 1

Why not?

Reinforcement Learning for Reasoning in Large Language Models with One Training Example

Applying RLVR to the base model Qwen2.5-Math-1.5B, they identify a single example that elevates model performance on MATH500 from 36.0% to 73.6%,

Instruct-LF merges LLMs' instruction-following with statistical models, enhancing interpretability in noisy datasets and improving task performance up to 52%. https://arxiv.org/abs/2502.15147

29.04.2025 22:10 — 👍 2 🔁 1 💬 0 📌 0An incomplete list of Chinese AI:

- DeepSeek: www.deepseek.com. You can also access AI models via API.

- Moonshot AI's Kimi: www.kimi.ai

- Alibaba's Qwen: chat.qwen.ai. You can also access AI models via API.

- ByteDance's Doubaob (only in Chinese): www.doubao.com/chat/

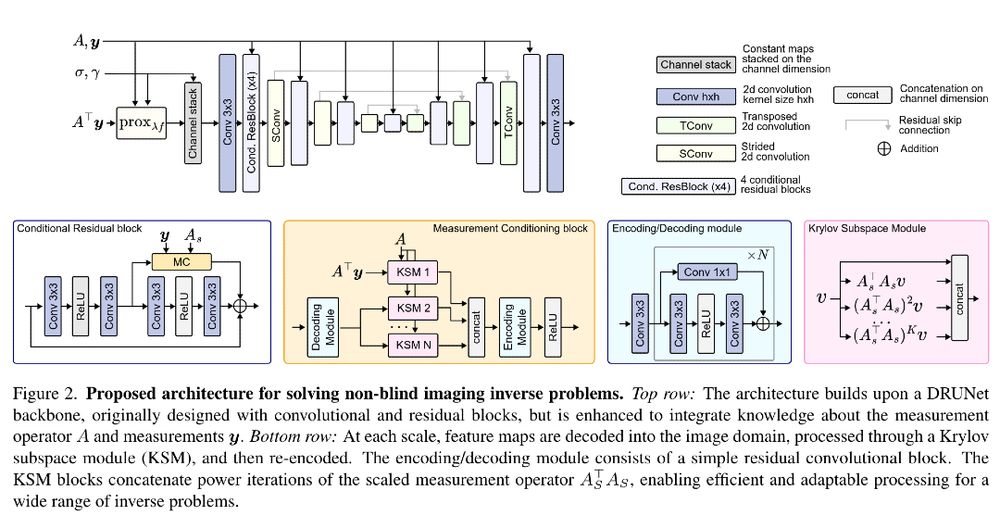

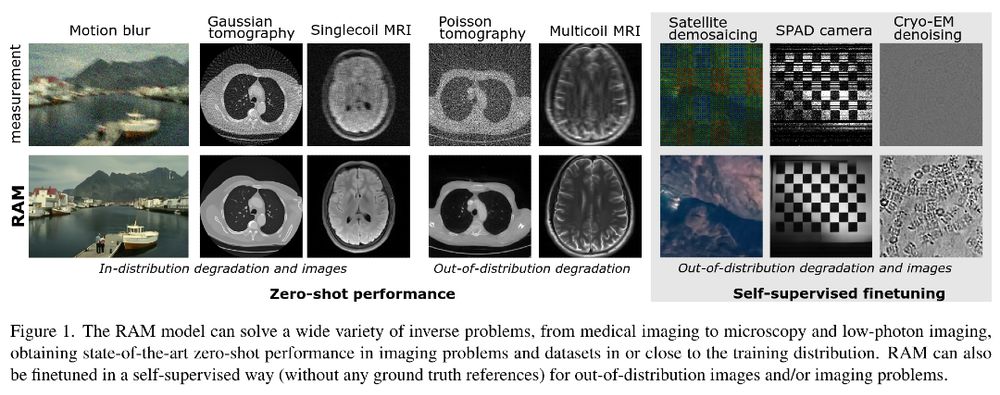

I really liked this approach by @matthieuterris.bsky.social et al.They propose learning a unique lightweight model for multiple inverse problems by conditioning it with the forward operator A. Thanks to self-supervised fine-tuning, it can tackle unseen inverse pb.

📰 https://arxiv.org/abs/2503.08915

Excited to be presenting our spotlight ICLR paper Simplifying Deep Temporal Difference Learning today! Join us in Hall 3 + Hall 2B Poster #123 from 3pm :)

25.04.2025 22:56 — 👍 7 🔁 1 💬 0 📌 0Balinese text-to-speech dataset as digital cultural heritage https://pubmed.ncbi.nlm.nih.gov/40275973/

26.04.2025 03:04 — 👍 1 🔁 1 💬 0 📌 0

Kimi.ai releases Kimi-Audio! Our new open-source audio foundation model advances capabilities in audio understanding, generation, and conversation.

Paper: github.com/MoonshotAI/K...

Repo: github.com/MoonshotAI/K...

Model: huggingface.co/moonshotai/K...

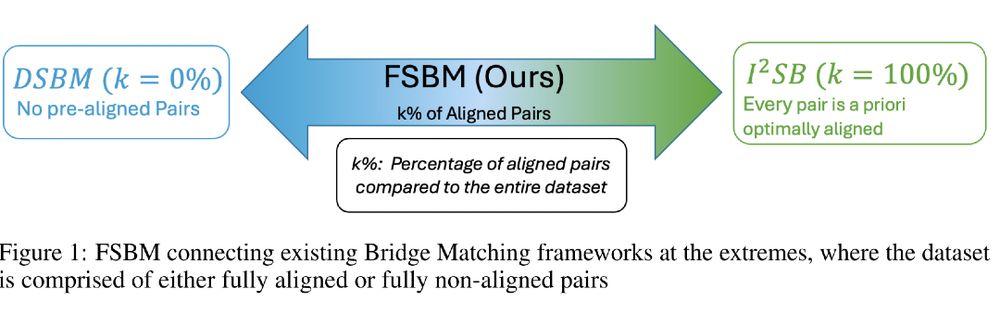

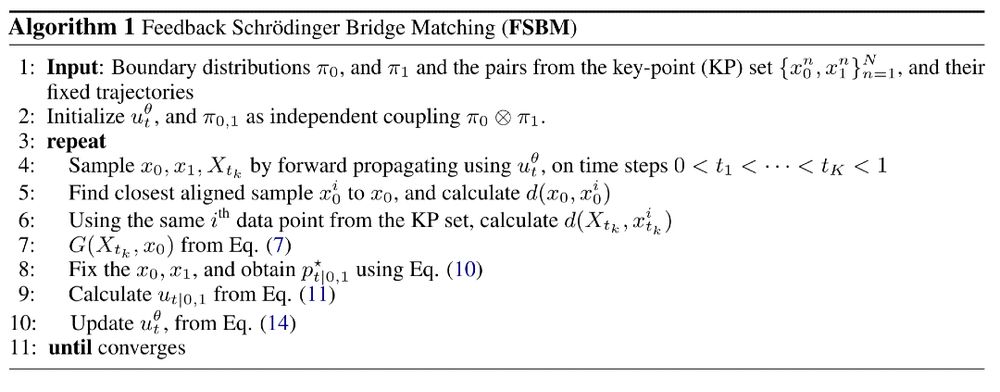

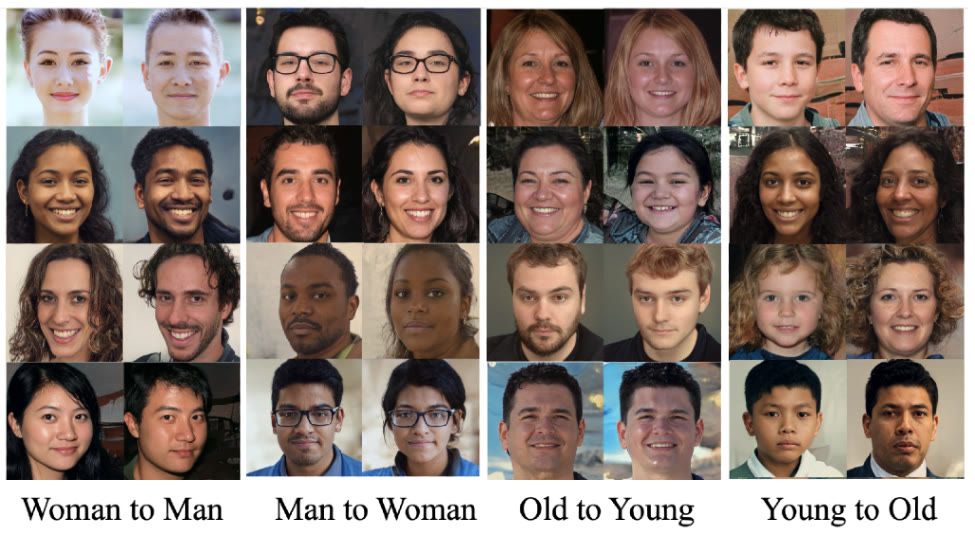

Very cool article from Panagiotis Theodoropoulos et al: https://arxiv.org/abs/2410.14055

Feedback Schrödinger Bridge Matching introduces a new method to improve transfer between two data distributions using only a small number of paired samples!

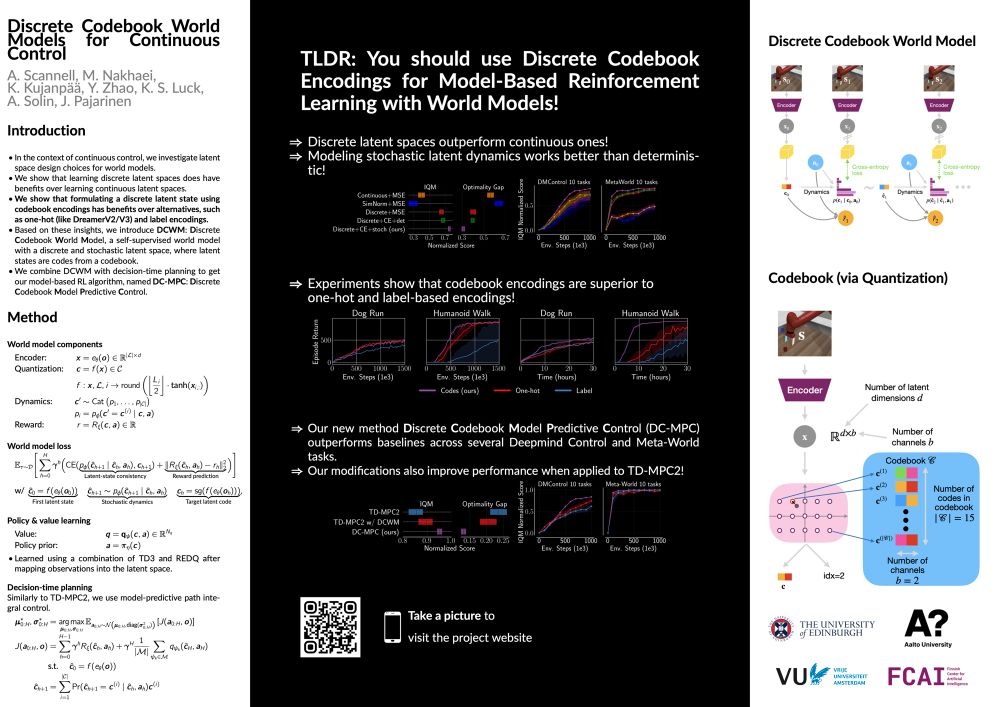

Our #ICLR2025 poster "Discrete Codebook World Models for Continuous Control" (Aidan Scannell, Mohammadreza Nakhaeinezhadfard, Kalle Kujanpää, Yi Zhao, Kevin Luck, Arno Solin, Joni Pajarinen)

🗓️ Hall 3 + Hall 2B #415, Thu 24 Apr 10 a.m. +08 — 12:30 p.m. +08

📄 Preprint: arxiv.org/abs/2503.00653

Andrew Kiruluta

Wavelet-based Variational Autoencoders for High-Resolution Image Generation

https://arxiv.org/abs/2504.13214

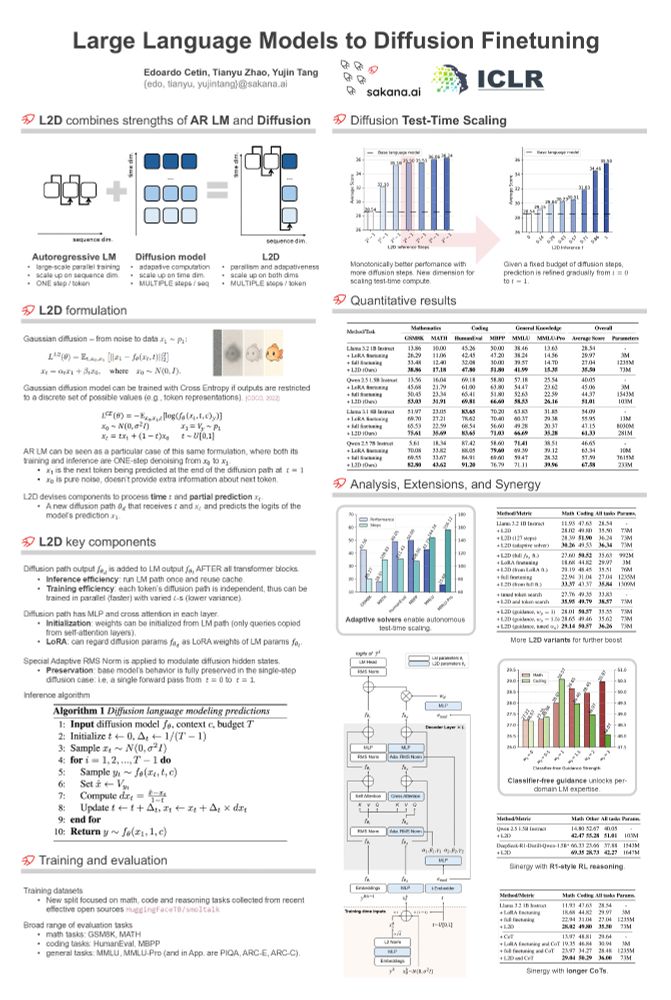

7/ Large Language Models to Diffusion Finetuning

Paper: openreview.net/forum?id=Wu5...

Workshop: workshop-llm-reasoning-planning.github.io

New finetuning method empowering pre-trained LLMs with some of the key properties of diffusion models and the ability to scale test-time compute.

10/ Sakana AI Co-Founder and CEO, David Ha, will be giving a talk at the #ICLR2025 World Models Workshop, at a panel to discuss the Current Development and Future Challenges of World Models.

Workshop Website: sites.google.com/view/worldmo...

Duy A. Nguyen, Quan Huu Do, Khoa D. Doan, Minh N. Do: Are you SURE? Enhancing Multimodal Pretraining with Missing Modalities through Uncertainty Estimation https://arxiv.org/abs/2504.13465 https://arxiv.org/pdf/2504.13465 https://arxiv.org/html/2504.13465

21.04.2025 06:02 — 👍 1 🔁 1 💬 1 📌 0