This was a fantastic poster presentation!

05.12.2025 18:56 — 👍 4 🔁 1 💬 0 📌 0

At #NeurIPS? Curious about how RNNs learn differently in closed-loop (RL) vs. open-loop (supervised) settings?

Come by Poster #2107 on Thursday at 4:30 PM!

neurips.cc/virtual/2025...

03.12.2025 01:12 — 👍 7 🔁 1 💬 0 📌 1

NeurIPS Poster: Finding separatrices of dynamical flows with Deep Koopman Eigenfunctions (NeurIPS 2025)

#NeurIPS2025

Wanna find decision boundaries in your RNN? Or learn about Koopman Eigenfunctions? Come to my poster.

#2002

Wednesday, Dec 3, 11am-2pm.

Exhibit Hall C, D, E

San Diego

neurips.cc/virtual/2025...

02.12.2025 17:47 — 👍 3 🔁 2 💬 0 📌 0

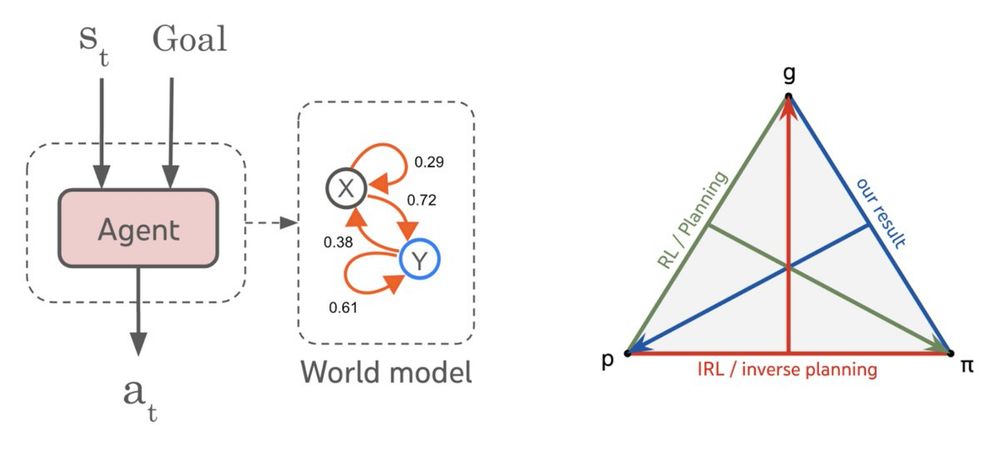

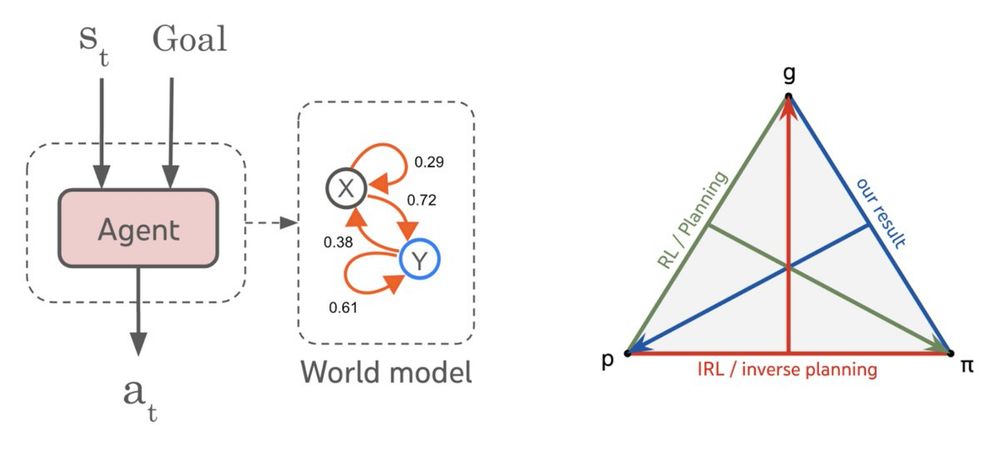

Are world models necessary to achieve human-level agents, or is there a model-free short-cut?

Our new #ICML2025 paper tackles this question from first principles and finds a surprising answer: agents _are_ world models… 🧵

arxiv.org/abs/2506.01622

04.06.2025 15:48 — 👍 43 🔁 15 💬 2 📌 4

Learning Dynamics of RNNs in Closed-Loop Environments

Recurrent neural networks (RNNs) trained on neuroscience-inspired tasks offer powerful models of brain computation. However, typical training paradigms rely on open-loop, supervised settings, whereas ...

All our motor control modelling efforts focus on closed-loop systems for this reason:

"...closed-loop and open-loop training produce fundamentally different learning dynamics, even when using identical architectures and converging to the same final solution."

arxiv.org/abs/2505.13567

29.05.2025 17:28 — 👍 23 🔁 5 💬 0 📌 0

Exactly.

31.12.2024 09:54 — 👍 1 🔁 0 💬 0 📌 0

Professor, Stanford University, Statistics and Mathematics. Opinions are my own.

http://yedizhang.github.io/

Account of the Max Planck Institute for the Physics of Complex Systems in Dresden; tweets by Pablo Pérez, Uta Gneisse, and Pierre Haas @lepuslapis.bsky.social.

PhD Student at MIT Brain and Cognitive Sciences studying Computational Neuroscience / ML. Prev Yale Neuro/Stats, Meta Neuromotor Interfaces

Blog: https://argmin.substack.com/

Webpage: https://people.eecs.berkeley.edu/~brecht/

Research Scientist @ Google DeepMind. AI + physics. Prev Ph.D. Physics @ UC Berkeley.

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

Scientific AI/ machine learning, dynamical systems (reconstruction), generative surrogate models of brains & behavior, applications in neuroscience & mental health

Asst Prof at NYU + Flatiron Institute

Computational + Statistical Neuroscience

https://neurostatslab.org/

Group Leader, CBS-NTT "Physics of Intelligence" Program at Harvard

website: https://sites.google.com/view/htanaka/home

theoretical neuroscience phd student at columbia

Manifolds and Neural Computation 🧠⚙️

PhD student at ENS, Paris 🇫🇷

Postdoc in the Litwin-Kumar lab at the Center for Theoretical Neuroscience at Columbia University.

I'm interested in multi-tasking and dimensionality.

Computational Neuroscientist + ML Researcher | Control theory + deep learning to understand the brain | PhD Candidate @ MIT | (he) 🍁

Doing maths research (geom. analysis, metric geometry, large point configs., opt. transport, etc.) since ~2010, deep learning and applied research since ~2020.

https://sites.google.com/site/mircpetrache/home

cybernetic cognitive control 🤖

computational cognitive neuroscience 🧠

postdoc princeton neuro 🍕

he/him 🇨🇦 harrisonritz.github.io

Theor/Comp Neuroscientist (postdoc)

Prev @TU Munich

Stochastic&nonlin. dynamics @TU Berlin&@MPIDS

Learning dynamics, plasticity&geometry of representations

https://dimitra-maoutsa.github.io

https://dimitra-maoutsa.github.io/M-Dims-Blog

Computational neuroscientist studying learning and memory in health and disease. Dad, yogi, Assistant Professor at Rutgers University.

Neuroscientist investigating neuronal bases of reward and learning. Associate Prof at Oxford University. www.laklab.org