🧠 To reason over text and track entities, we find that language models use three types of 'pointers'!

They were thought to rely only on a positional one—but when many entities appear, that system breaks down.

Our new paper shows what these pointers are and how they interact 👇

08.10.2025 14:56 —

👍 5

🔁 1

💬 1

📌 0

🚨 New Paper 🚨

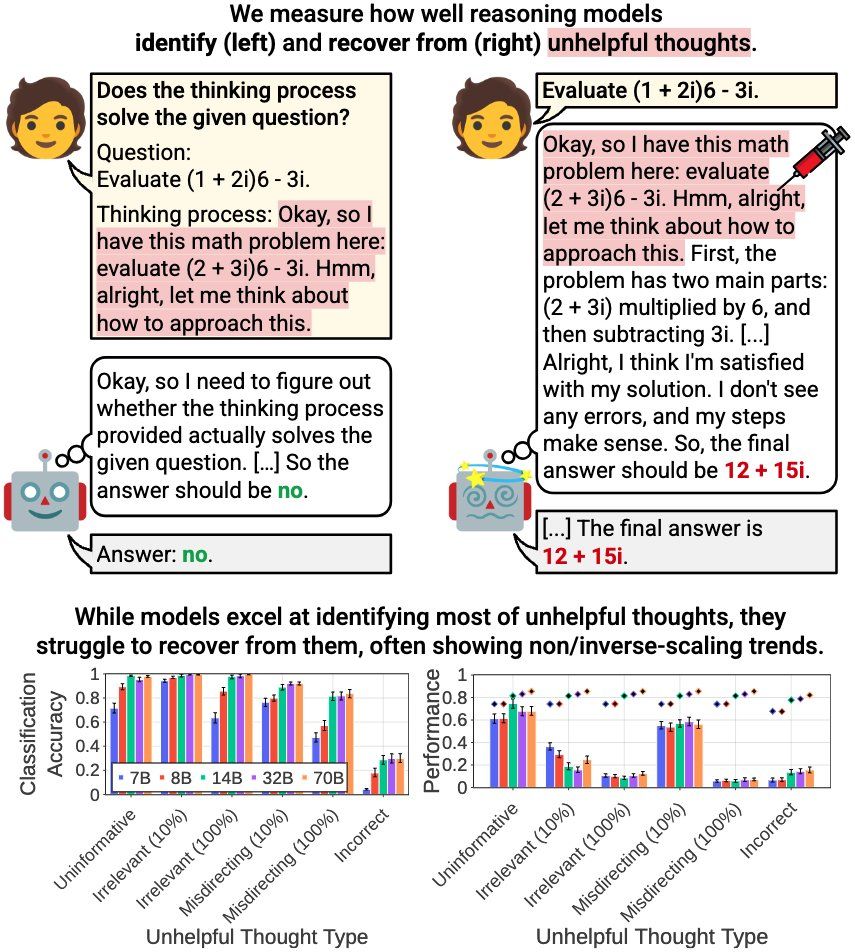

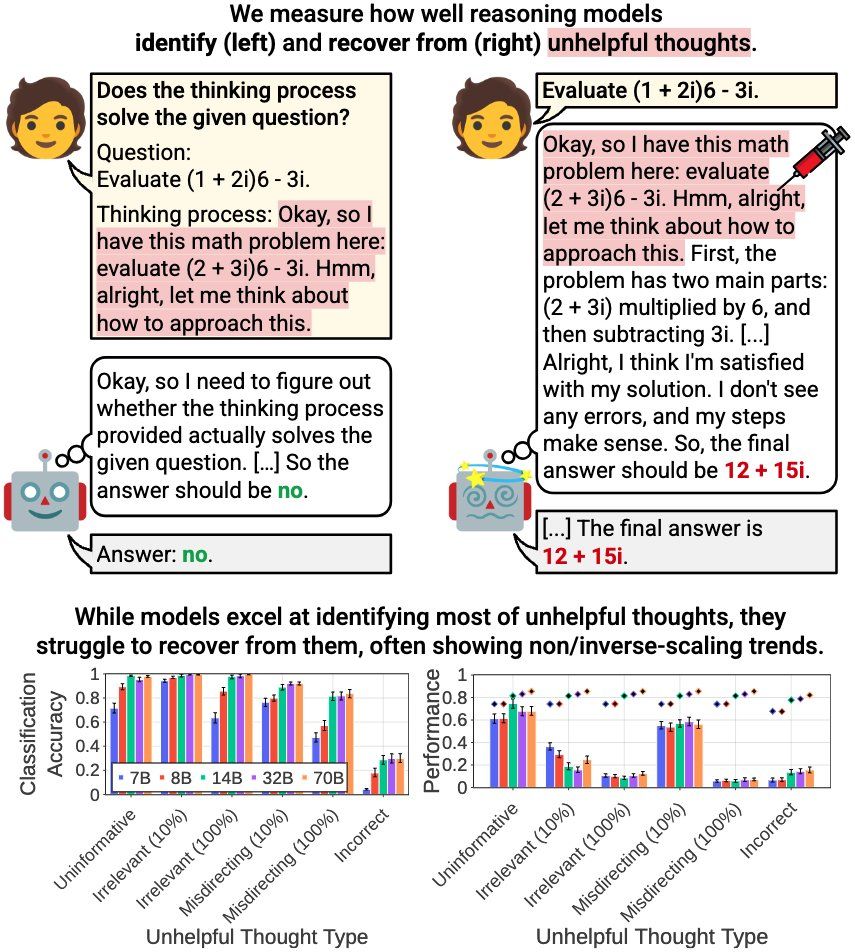

How effectively do reasoning models reevaluate their thought? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

13.06.2025 16:15 —

👍 12

🔁 3

💬 1

📌 0

New Paper Alert! Can we precisely erase conceptual knowledge from LLM parameters?

Most methods are shallow, coarse, or overreach, adversely affecting related or general knowledge.

We introduce 🪝 𝐏𝐈𝐒𝐂𝐄𝐒, a general framework for Precise In-parameter Concept EraSure. 🧵 1/

29.05.2025 16:22 —

👍 5

🔁 2

💬 1

📌 0

Check out Benno's notes about our impact-of-interpretability paper 👇

Also, we are organizing a workshop at #ICML2025 which is inspired by some of the questions discussed in the paper: actionable-interpretability.github.io

15.04.2025 23:11 —

👍 11

🔁 3

💬 0

📌 0

Have work on the actionable impact of interpretability findings? Consider submitting to our Actionable Interpretability workshop at ICML! See below for more info.

Website: actionable-interpretability.github.io

Deadline: May 9

03.04.2025 17:58 —

👍 20

🔁 10

💬 0

📌 0

Forgot to tag the one and only @hadasorgad.bsky.social !!!

31.03.2025 17:39 —

👍 2

🔁 0

💬 0

📌 0

🎉 Our Actionable Interpretability workshop has been accepted to #ICML2025! 🎉

> Follow @actinterp.bsky.social

> Website actionable-interpretability.github.io

@talhaklay.bsky.social @anja.re @mariusmosbach.bsky.social @sarah-nlp.bsky.social @iftenney.bsky.social

Paper submission deadline: May 9th!

31.03.2025 16:59 —

👍 42

🔁 16

💬 3

📌 3

Communication between LLM agents can be super noisy! One rogue agent can easily drag the whole system into failure 😱

We find that (1) it's possible to detect rogue agents early on

(2) interventions can boost system performance by up to 20%!

Thread with details and paper link below!

13.02.2025 14:30 —

👍 4

🔁 0

💬 0

📌 0

In a final experiment, we show that output-centric methods can be used to "revive" features previously thought to be "dead" 🧟‍♂️, reviving hundreds of SAE features in Gemma 2! 6/

28.01.2025 19:38 —

👍 0

🔁 0

💬 1

📌 0

Unsurprisingly, while activating inputs better describe what activates a feature, output-centric methods do much better at predicting how steering the feature will affect the model’s output!

But combining the two works best! 🚀 5/

28.01.2025 19:37 —

👍 0

🔁 0

💬 2

📌 0

Next, we evaluate the widely-used activating inputs approach versus two output-centric methods:

- vocabulary projection (a.k.a. logit lens)

- tokens with max probability change in the output

Our output-centric methods require no more than a few inference passes! 4/

28.01.2025 19:36 —

👍 0

🔁 0

💬 1

📌 0
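
The two output-centric methods named in post 4/ above can be illustrated on a toy example. This is only a minimal sketch, not the paper's implementation: the vocabulary, the unembedding matrix, the feature vector, and the helper names (`project_to_vocab`, `top_prob_change`) are all hypothetical, and "steering" is approximated by adding the feature vector to a hidden state and unembedding it directly instead of running a real model.

```python
import math

def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def project_to_vocab(vector, unembedding):
    # Vocabulary projection (logit-lens style): one score per token.
    # `unembedding` holds one row of length d per vocabulary token.
    return [sum(v * w for v, w in zip(vector, row)) for row in unembedding]

def top_tokens(scores, vocab, k):
    # Tokens with the k highest scores.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [vocab[i] for i in order[:k]]

def top_prob_change(hidden, feature, unembedding, vocab, k, scale=1.0):
    # Tokens whose output probability rises most when the feature is
    # added to the hidden state (a toy stand-in for steering a model).
    base = softmax(project_to_vocab(hidden, unembedding))
    steered_hidden = [h + scale * f for h, f in zip(hidden, feature)]
    steered = softmax(project_to_vocab(steered_hidden, unembedding))
    deltas = [s - b for s, b in zip(steered, base)]
    return top_tokens(deltas, vocab, k)

# Hypothetical toy data: 4 tokens, hidden size 3.
vocab = ["cat", "dog", "Paris", "London"]
unembedding = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.8, 0.3],
]
feature = [0.0, 2.0, 0.0]   # assumed "city" feature direction
hidden = [1.0, 0.0, 0.0]    # assumed hidden state favouring animals

projection_tokens = top_tokens(project_to_vocab(feature, unembedding), vocab, 2)
change_tokens = top_prob_change(hidden, feature, unembedding, vocab, 2)
print(projection_tokens, change_tokens)
```

Both routines need only the feature vector and one or two unembedding passes, which is in the spirit of the post's claim that no large activation dataset is required.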

To fix this, we first propose using both input- and output-based evaluations for feature descriptions.

Our output-based eval measures how well a description of a feature captures its effect on the model's generation. 3/

28.01.2025 19:36 —

👍 0

🔁 0

💬 1

📌 0

Autointerp pipelines describe neurons and SAE features based on inputs that activate them.

This is problematic ⚠️

1. Collecting activations for large data is expensive, time-consuming, and often unfeasible.

2. It overlooks how features affect model outputs!

2/

28.01.2025 19:35 —

👍 0

🔁 0

💬 1

📌 0

How can we interpret LLM features at scale? 🤔

Current pipelines use activating inputs, which is costly and ignores how features causally affect model outputs!

We propose efficient output-centric methods that better predict the steering effect of a feature.

New preprint led by @yoav.ml 🧵1/

28.01.2025 19:34 —

👍 32

🔁 4

💬 1

📌 0

🚨 New Paper Alert: Open Problems in Machine Unlearning for AI Safety 🚨

Can AI truly "forget"? While unlearning promises data removal, controlling emergent capabilities is an inherent challenge. Here's why it matters: 👇

Paper: arxiv.org/pdf/2501.04952

1/8

10.01.2025 16:58 —

👍 25

🔁 6

💬 1

📌 3

Most operation descriptions are plausible based on human judgment.

We also observe interesting operations implemented by heads, like the extension of time periods (day → month → year) and association of known figures with years relevant to their historical significance (9/10)

18.12.2024 18:01 —

👍 1

🔁 0

💬 1

📌 0

Next, we establish an automatic pipeline that uses GPT-4o to annotate the salient mappings from MAPS.

We map the attention heads of Pythia 6.9B and GPT2-xl and manage to identify operations for most heads, reaching 60%-96% in the middle and upper layers (8/10)

18.12.2024 18:00 —

👍 2

🔁 0

💬 1

📌 0

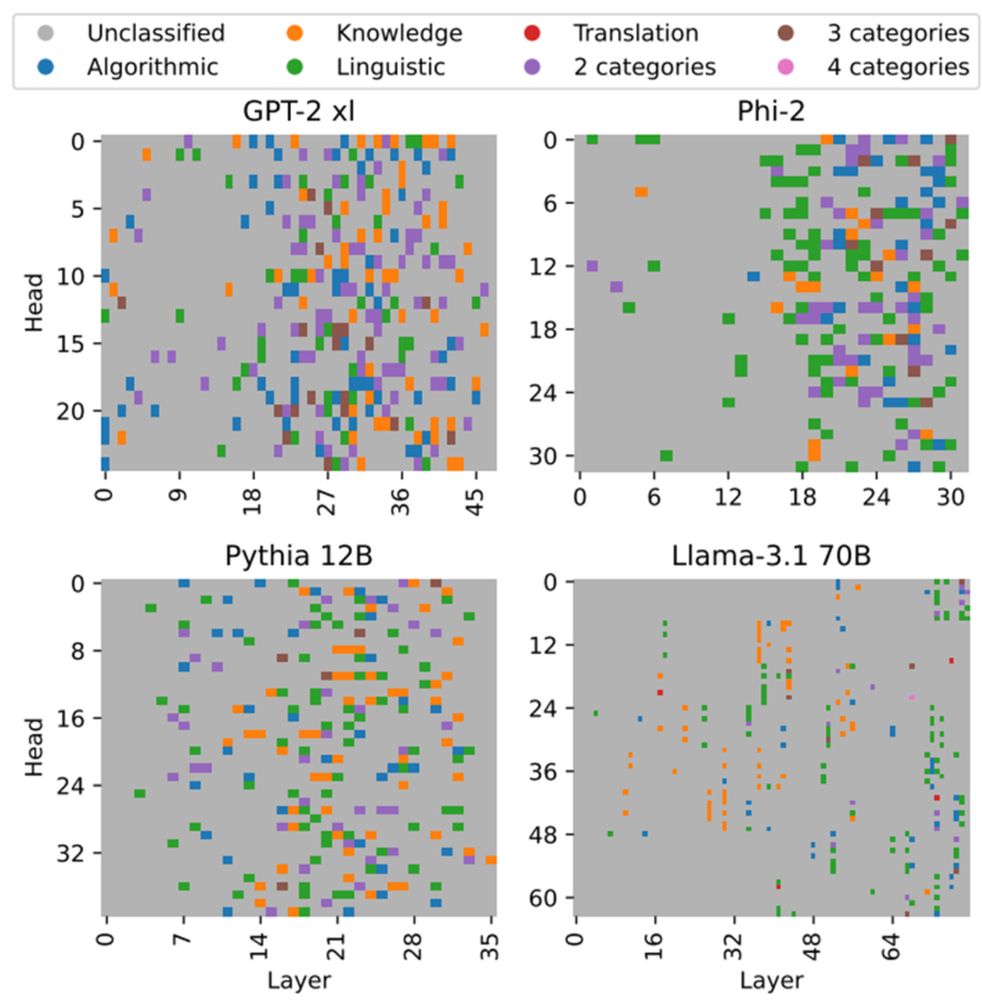

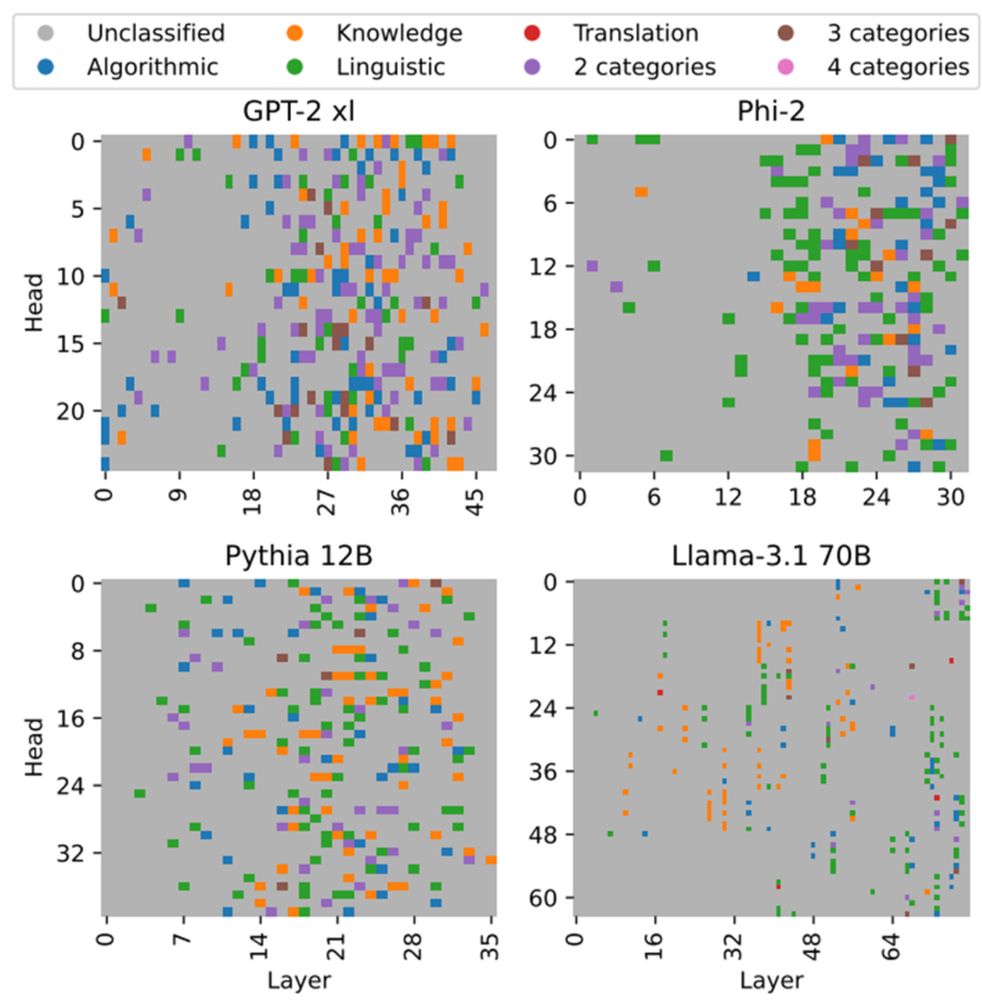

(3) Smaller models tend to encode higher numbers of relations in a single head

(4) In Llama-3.1 models, which use grouped-query attention, grouped heads often implement the same or similar relations (7/10)

18.12.2024 17:59 —

👍 2

🔁 0

💬 1

📌 0

(1) Different models encode certain relations across attention heads to similar degrees

(2) Different heads implement the same relation to varying degrees, which has implications for localization and editing of LLMs (6/10)

18.12.2024 17:58 —

👍 3

🔁 0

💬 1

📌 0

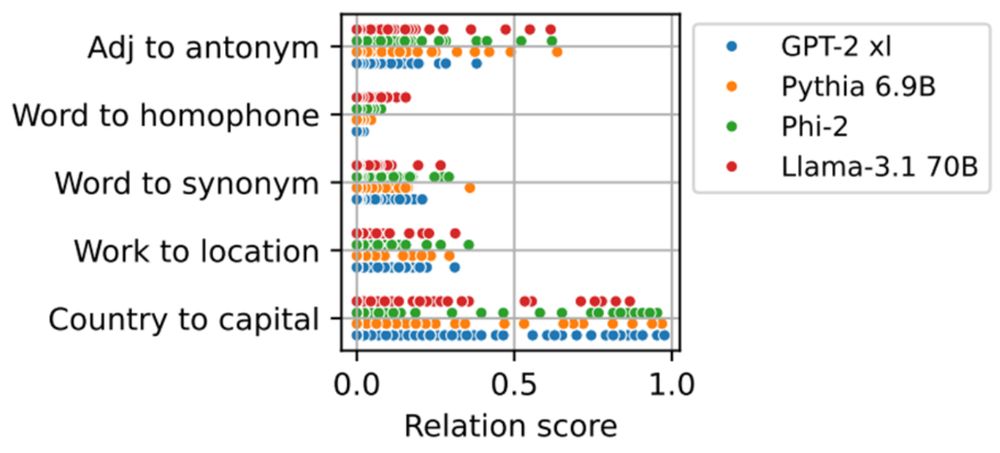

Using MAPS, we study the distribution of operations across heads in different models -- Llama, Pythia, Phi, GPT2 -- and see some cool trends of function encoding universality and architecture biases: (5/10)

18.12.2024 17:58 —

👍 0

🔁 0

💬 1

📌 0

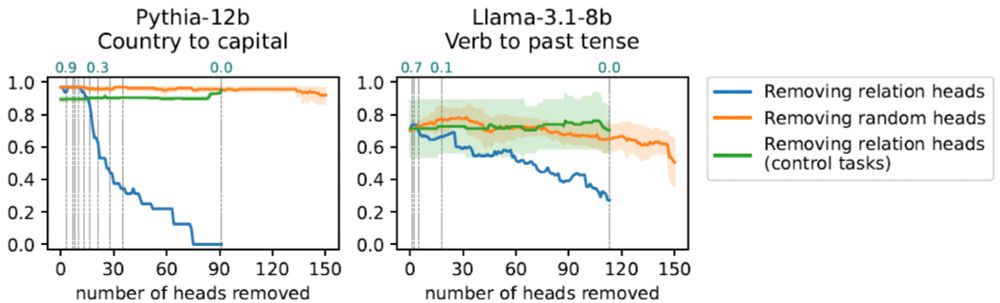

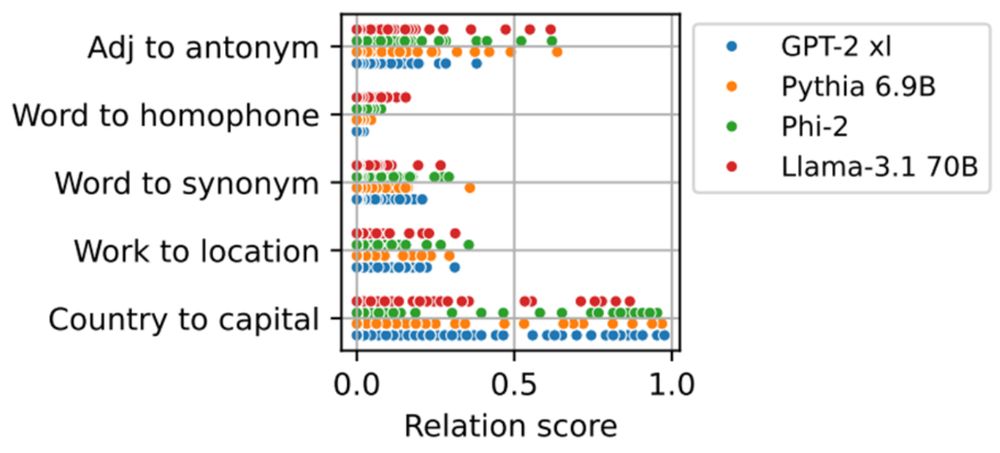

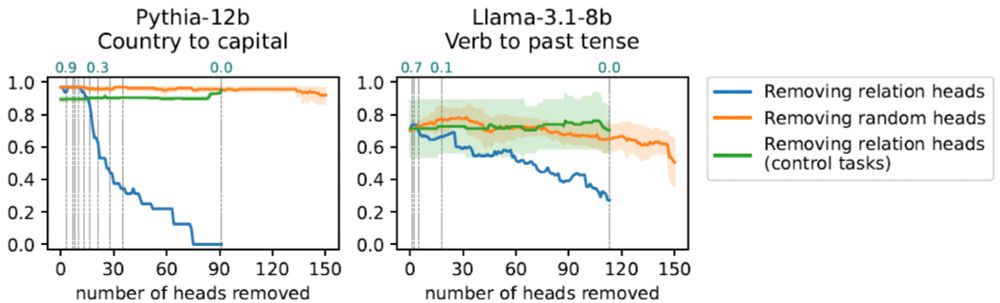

Experiments on 20 operations and 6 LLMs show that MAPS estimations strongly correlate with the head’s outputs during inference

Ablating heads implementing an operation damages the model’s ability to perform tasks requiring the operation compared to removing other heads (4/10)

18.12.2024 17:57 —

👍 3

🔁 0

💬 1

📌 0

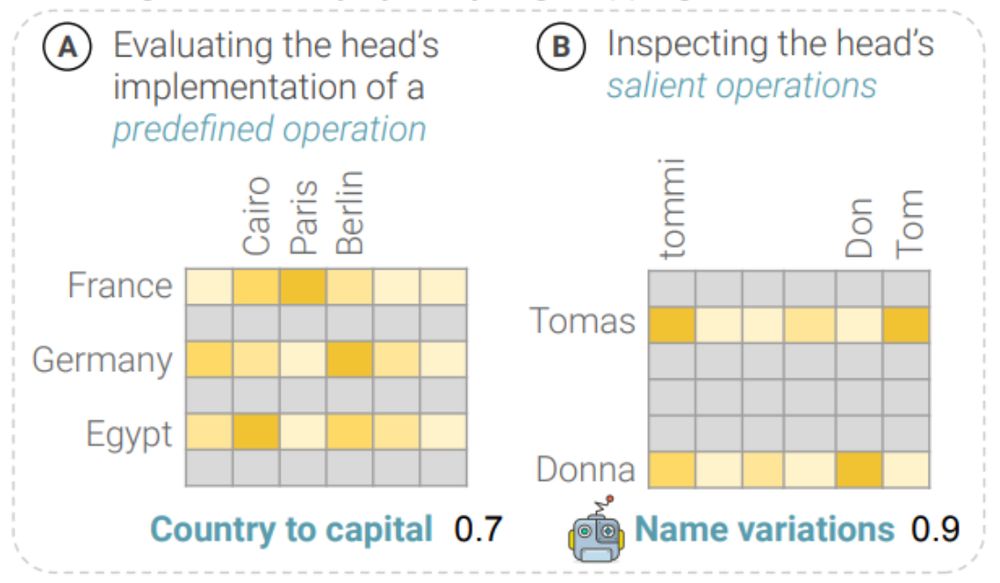

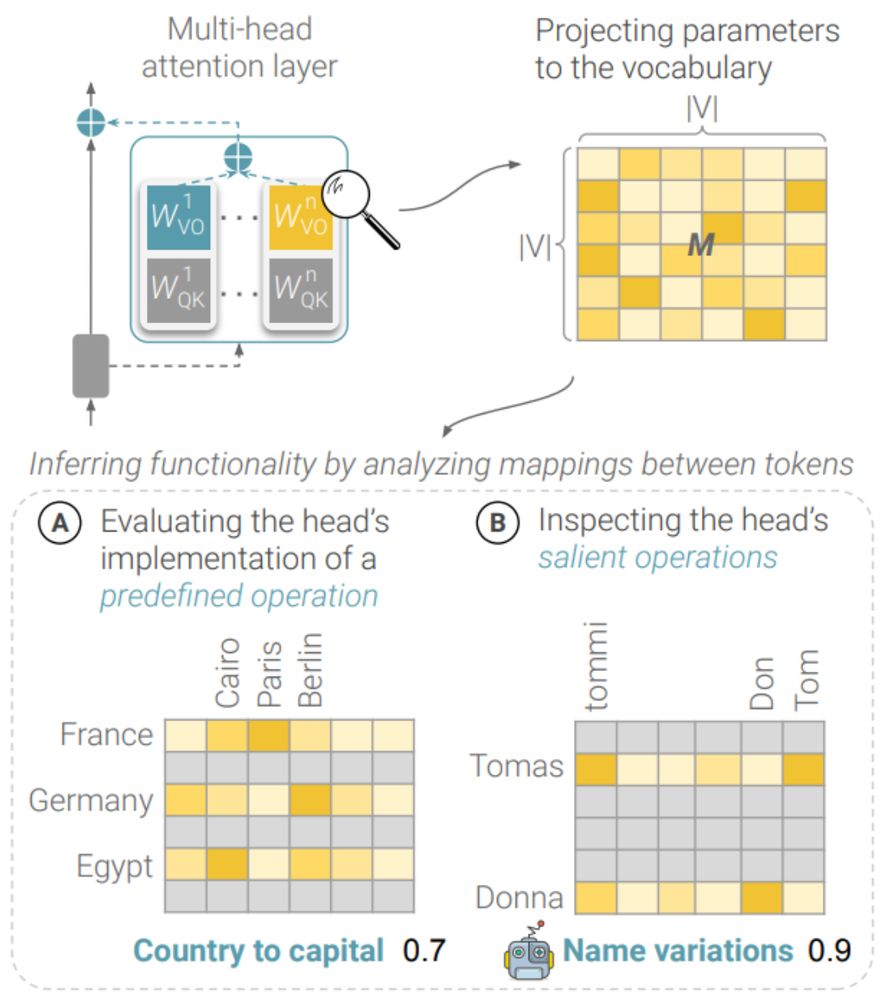

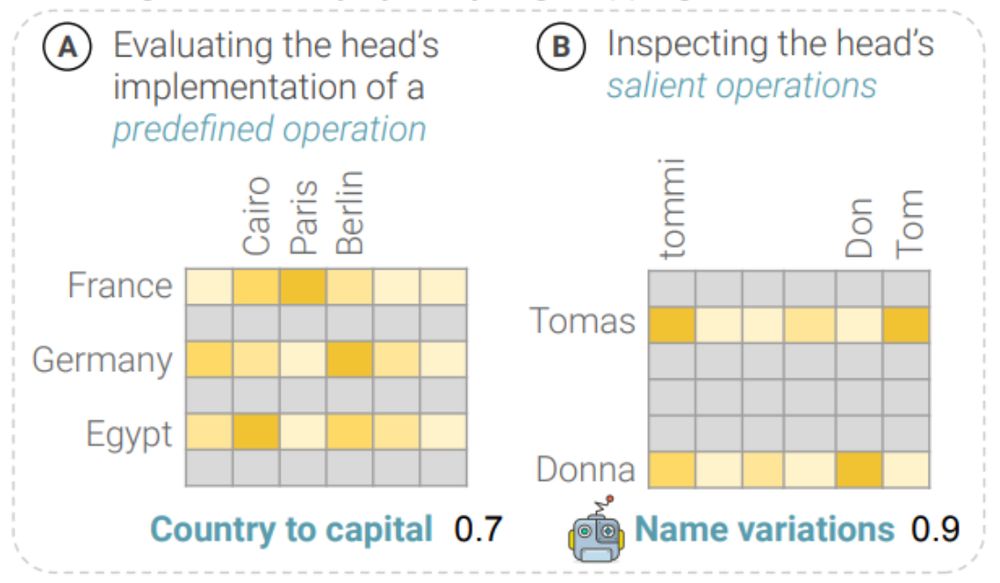

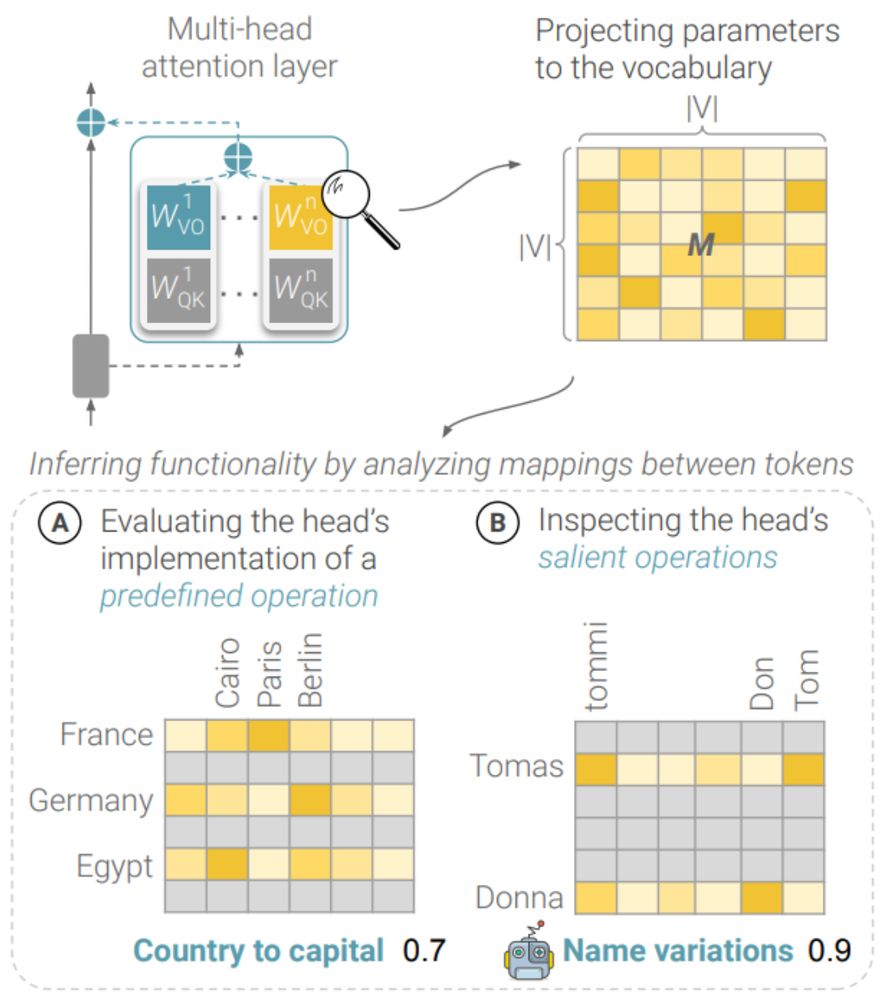

MAPS infers the head’s functionality by examining different groups of mappings:

(A) Predefined relations: groups expressing certain relations (e.g. city of a country)

(B) Salient operations: groups for which the head induces the most prominent effect (3/10)

18.12.2024 17:57 —

👍 1

🔁 0

💬 1

📌 0
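
The idea in post 3/10 above, scoring a head against a predefined relation in vocabulary space, can be sketched as follows. This is a hedged illustration, not the MAPS code: it assumes the head is summarized by a single d×d matrix (`w_ov`), that token-to-token scores come from multiplying embedding, head matrix, and unembedding, and that a relation counts as expressed when the target token appears among the top-k entries of the source token's row. All names and the toy data are hypothetical.

```python
def matmul(a, b):
    # Plain-Python matrix product of two lists of rows.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def head_vocab_mapping(embedding, w_ov, unembedding_t):
    # embedding: |V| x d, w_ov: d x d, unembedding_t: d x |V|.
    # Entry [s][t] scores how strongly source token s is mapped to token t.
    return matmul(matmul(embedding, w_ov), unembedding_t)

def relation_score(mapping, pairs, vocab, k):
    # Fraction of (source, target) pairs whose target is among the
    # top-k tokens in the source token's row of the mapping.
    index = {tok: i for i, tok in enumerate(vocab)}
    hits = 0
    for source, target in pairs:
        row = mapping[index[source]]
        top = sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k]
        if index[target] in top:
            hits += 1
    return hits / len(pairs)

# Hypothetical toy setup: one-hot embeddings and a head that sends
# each country to its capital.
vocab = ["France", "Japan", "Paris", "Tokyo"]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
w_ov = [
    [0.0, 0.0, 1.0, 0.0],  # France -> Paris
    [0.0, 0.0, 0.0, 1.0],  # Japan  -> Tokyo
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]

mapping = head_vocab_mapping(identity, w_ov, identity)
capital_score = relation_score(
    mapping, [("France", "Paris"), ("Japan", "Tokyo")], vocab, k=1
)
mismatch_score = relation_score(
    mapping, [("France", "Tokyo"), ("Japan", "Paris")], vocab, k=1
)
print(capital_score, mismatch_score)
```

Because everything is computed from parameter matrices, no forward pass or input data is involved, which matches the thread's framing of inferring functionality directly from parameters.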

Previous works that analyze attention heads mostly focused on studying their attention patterns or outputs for certain tasks or circuits.

Here, we take a different approach, inspired by @anthropic.com @guydar.bsky.social, and inspect the head in the vocabulary space 🔍 (2/10)

18.12.2024 17:56 —

👍 2

🔁 0

💬 1

📌 0

What's in an attention head? 🤯

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)

18.12.2024 17:55 —

👍 60

🔁 13

💬 1

📌 0

We invite nominations to join the ACL2025 PC as a reviewer or area chair (AC). Review process through ARR Feb cycle. Tentative timeline: Review 1-20 Mar 2025, Rebuttal 26-31 Mar 2025. ACs must be available throughout the Feb cycle. Nominations by 20 Dec 2024:

shorturl.at/TaUh9 #NLProc #ACL2025NLP

16.12.2024 00:28 —

👍 11

🔁 12

💬 0

📌 1