Looking forward to presenting at the #AAAI #NeuroAI workshop; including 3 projects that were just accepted to ICLR! arxiv.org/abs/2509.24597, arxiv.org/abs/2510.03684, arxiv.org/abs/2506.13331 🧪🧠🤖

27.01.2026 06:24 — 👍 20 🔁 3 💬 0 📌 0

🎉 Re-Align is back for its 4th edition at ICLR 2026!

📣 We invite submissions on representational alignment, spanning ML, Neuroscience, CogSci, and related fields.

📝 Tracks: Short (≤5p), Long (≤10p), Challenge (blog)

⏰ Deadline: Feb 5, 2026 for papers

🔗 representational-alignment.github.io/2026/

07.01.2026 16:27 — 👍 13 🔁 8 💬 1 📌 3

One week left to apply to the EPFL computer science PhD program www.epfl.ch/education/ph.... It's an amazing environment to do impactful research 🧪 (with unparalleled compute)! My NeuroAI group is hiring 🧠🤖. Consider this review service by our fantastic PhD students: www.linkedin.com/posts/spnesh...

08.12.2025 14:20 — 👍 3 🔁 4 💬 0 📌 0

UCL NeuroAI Talk Series

A series of NeuroAI-themed talks organised by the UCL NeuroAI community. Talks will continue on a monthly basis.

For our next UCL #NeuroAI online seminar, we are happy to welcome Dr Martin Schrimpf @mschrimpf.bsky.social (EPFL)

🗓️Wed 19 Nov 2025

⏰2-3pm GMT

Neuro -> AI and Back Again: Integrative Models of the Human Brain in Health and Disease

ℹ️ Details / registration: www.eventbrite.co.uk/e/ucl-neuroa...

17.11.2025 16:26 — 👍 9 🔁 4 💬 0 📌 0

Thrilled to be among this fantastic cohort of AI2050 Fellows. This is a great recognition of the transformative potential of #NeuroAI and our lab’s work in this space 🧪🧠🤖. Many thanks to @schmidtsciences.bsky.social for the support!

06.11.2025 11:16 — 👍 28 🔁 2 💬 4 📌 0

How neuroscientists are using AI

Eight researchers explain how they are using large language models to analyze the literature, brainstorm hypotheses and interact with complex datasets.

Researchers are using LLMs to analyze the literature, brainstorm hypotheses, build models and interact with complex datasets. Hear from @mschrimpf.bsky.social, @neurokim.bsky.social, @jeremymagland.bsky.social, @profdata.bsky.social and others.

#neuroskyence

www.thetransmitter.org/machine-lear...

04.11.2025 16:07 — 👍 26 🔁 9 💬 0 📌 2

The new data by Fernandez, @mbeyeler.bsky.social, Liu et al. will be great here to better map the neural effects of various stimulation patterns.

09.10.2025 11:00 — 👍 4 🔁 0 💬 0 📌 0

To expand on this: When we built our stimulation->neural predictor (www.biorxiv.org/content/10.1...), we didn't find much experimental data to constrain the model. The best we found was data from @markhisted.org and biophysical modeling by Kumaravelu et al.

09.10.2025 11:00 — 👍 3 🔁 0 💬 1 📌 0

A glimpse at what #NeuroAI brain models might enable: a topographic vision model predicts stimulation patterns that steer complex object recognition behavior in primates. This could be a key 'software' component for visual prosthetic hardware 🧠🤖🧪

08.10.2025 11:11 — 👍 42 🔁 10 💬 1 📌 0

🧠 New preprint: we show that model-guided microstimulation can steer monkey visual behavior.

Paper: arxiv.org/abs/2510.03684

🧵

07.10.2025 15:21 — 👍 35 🔁 16 💬 1 📌 2

Just to support Sam's argument here: there is indeed a lot of evidence across several domains, such as vision and language, that ML models develop representations similar to those of the human brain. There are of course many differences, but at a certain level of abstraction there is a surprising convergence.

05.10.2025 18:31 — 👍 4 🔁 1 💬 1 📌 1

Thank you!

02.10.2025 20:12 — 👍 1 🔁 0 💬 1 📌 0

More precisely, we would categorize it as a brain-based disorder, but now I'm curious: would you be on board with that?

02.10.2025 18:29 — 👍 0 🔁 0 💬 1 📌 0

You're right, and I apologize for the imprecise phrasing. I wanted to connect with the usual "brain in health and disease" phrasing, for which we developed some first tools based on the learning disorder dyslexia. We are hopeful that these tools will also be applicable to diseases of brain function.

02.10.2025 14:26 — 👍 0 🔁 0 💬 1 📌 0

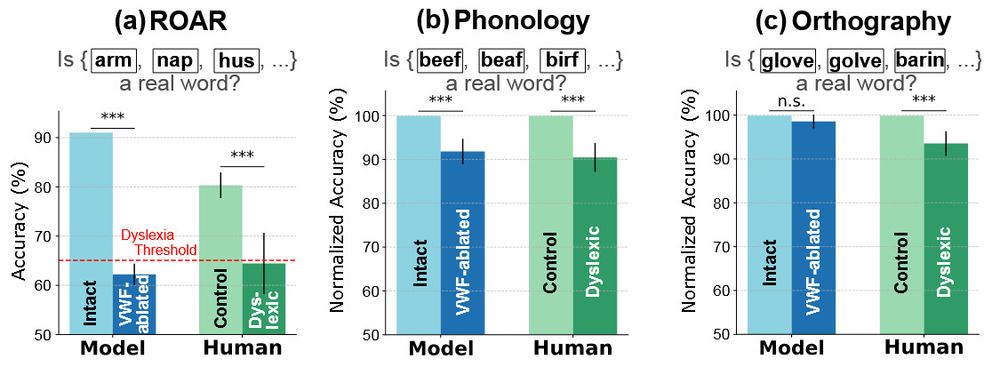

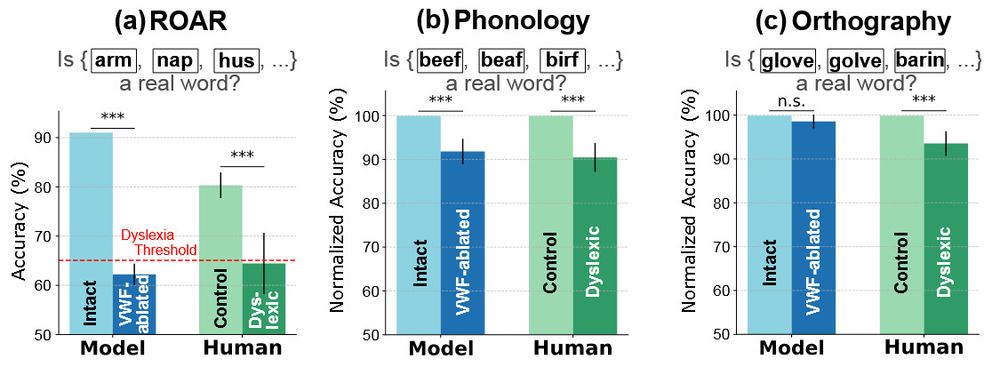

Very happy to be part of this project: Melika Honarmand has done a great job of using vision-language-models to predict the behavior of people with dyslexia. A first step toward modeling various disease states using artificial neural networks.

02.10.2025 12:33 — 👍 3 🔁 1 💬 0 📌 0

We're super excited about this approach: localizing model analogues of hypothesized neural causes in the brain and testing their downstream behavioral effects is applicable much more broadly in a variety of other contexts!

02.10.2025 12:10 — 👍 1 🔁 0 💬 0 📌 0

Digging deeper into the ablated model, we found that its behavioral patterns mirror phonological deficits of dyslexic humans, without a significant deficit in orthographic processing. This connects to experimental work suggesting that phonological and orthographic deficits have distinct origins.

02.10.2025 12:10 — 👍 0 🔁 0 💬 1 📌 0

It turns out that ablating these units has a very specific effect: it reduces reading performance to dyslexia levels *but* keeps visual reasoning performance intact. This does not happen when random units are ablated, so localization is key.

02.10.2025 12:10 — 👍 0 🔁 0 💬 1 📌 0

We achieve this by localizing and then ablating units that are "visual-word-form selective", i.e., units that are more active for visually presented words than for other images. After ablating these units, we test the effect on behavior in benchmarks of reading and of other control tasks.

02.10.2025 12:10 — 👍 0 🔁 0 💬 1 📌 0
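The localize-then-ablate procedure described in the post above can be sketched as follows. This is a minimal illustration under stated assumptions, not the preprint's code: the selectivity criterion (a per-unit Welch t-statistic), the 1% fraction of units, zeroing as the ablation, and all function names are hypothetical choices made for this sketch.

```python
import numpy as np

# Hypothetical sketch: localize "word-selective" units by contrasting activations
# to word images vs. other images, then build an ablation mask for them.

def word_selectivity(word_acts: np.ndarray, other_acts: np.ndarray) -> np.ndarray:
    """Per-unit Welch t-statistic (words minus other images).

    word_acts: (n_word_images, n_units); other_acts: (n_other_images, n_units).
    """
    mean_diff = word_acts.mean(axis=0) - other_acts.mean(axis=0)
    se = np.sqrt(word_acts.var(axis=0, ddof=1) / len(word_acts)
                 + other_acts.var(axis=0, ddof=1) / len(other_acts))
    return mean_diff / (se + 1e-12)


def localize_units(word_acts, other_acts, fraction=0.01):
    """Indices of the top `fraction` most word-selective units (assumed criterion)."""
    t = word_selectivity(word_acts, other_acts)
    k = max(1, int(round(fraction * t.size)))
    return np.argsort(t)[::-1][:k]


def ablation_mask(n_units, unit_idx):
    """Multiplicative mask that zeroes the selected units and keeps all others."""
    mask = np.ones(n_units)
    mask[unit_idx] = 0.0
    return mask


def random_control(n_units, k, rng):
    """Same number of randomly chosen units, for the random-ablation control."""
    return rng.choice(n_units, size=k, replace=False)


# Usage with synthetic activations: units 0-4 are planted to respond more to words.
rng = np.random.default_rng(0)
n_units = 500
words = rng.normal(size=(200, n_units))
others = rng.normal(size=(200, n_units))
words[:, :5] += 3.0

selected = localize_units(words, others, fraction=0.01)
print(sorted(selected.tolist()))  # [0, 1, 2, 3, 4]
ablated_words = words * ablation_mask(n_units, selected)
control = random_control(n_units, len(selected), rng)
```

In an actual vision-language model, the mask would be applied inside the network (for example, at the layer where the units were localized) before re-running the reading and control benchmarks; ablating `control` instead of `selected` gives the random-unit comparison.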

I've been arguing that #NeuroAI should model the brain in health *and* in disease -- very excited to share a first step from Melika Honarmand: inducing dyslexia in vision-language-models via targeted perturbations of visual-word-form units (analogous to human VWFA) 🧠🤖🧪 arxiv.org/abs/2509.24597

02.10.2025 12:10 — 👍 49 🔁 12 💬 1 📌 3

Diagram showing three ways to control brain activity with a visual prosthesis. The goal is to match a desired pattern of brain responses. One method uses a simple one-to-one mapping, another uses an inverse neural network, and a third uses gradient optimization. Each method produces a stimulation pattern, which is tested in both computer simulations and in the brain of a blind participant with an implant. The figure shows that the neural network and gradient methods reproduce the target brain activity more accurately than the simple mapping.

👁️🧠 New preprint: We demonstrate the first data-driven neural control framework for a visual cortical implant in a blind human!

TL;DR Deep learning lets us synthesize efficient stimulation patterns that reliably evoke percepts, outperforming conventional calibration.

www.biorxiv.org/content/10.1...

27.09.2025 02:52 — 👍 93 🔁 25 💬 2 📌 6
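The gradient-optimization idea in the figure above (search for a stimulation pattern whose predicted response matches a target) can be sketched with a toy model. This is a hedged illustration only: it assumes a hypothetical linear forward model with nearest-neighbour crosstalk in place of whatever learned forward model the preprint uses, and the amplitude bounds, step size, and function names are all illustrative.

```python
import numpy as np

# Hypothetical sketch: gradient-based synthesis of a stimulation pattern s so that a
# forward model's predicted response W @ s matches a target response pattern.

def crosstalk_model(n_electrodes, spread=0.2):
    """Toy linear forward model: each electrode also drives its two neighbours."""
    return np.eye(n_electrodes) + spread * (
        np.eye(n_electrodes, k=1) + np.eye(n_electrodes, k=-1))


def optimize_stimulation(W, target, amp_max=1.0, steps=500):
    """Projected gradient descent on 0.5 * ||W s - target||^2 with 0 <= s <= amp_max."""
    lr = 1.0 / np.linalg.norm(W, 2) ** 2  # 1/L, L = Lipschitz constant of the gradient
    s = np.zeros(W.shape[1])
    for _ in range(steps):
        grad = W.T @ (W @ s - target)
        s = np.clip(s - lr * grad, 0.0, amp_max)  # keep amplitudes within bounds
    return s


def one_to_one(target, amp_max=1.0):
    """Baseline: stimulate electrode i in proportion to desired response i."""
    return np.clip(target, 0.0, amp_max)


# Usage: a target that some in-bounds stimulation pattern can reproduce exactly.
rng = np.random.default_rng(1)
W = crosstalk_model(16)
target = W @ rng.uniform(0.1, 0.9, size=16)

s_grad = optimize_stimulation(W, target)
s_base = one_to_one(target)
err_grad = np.linalg.norm(W @ s_grad - target)
err_base = np.linalg.norm(W @ s_base - target)
print(err_grad < err_base)  # True
```

Because the one-to-one mapping ignores crosstalk, it overshoots every channel in this toy setup, whereas optimizing through the forward model compensates for it; with a nonlinear learned model, the same loop would use automatic differentiation instead of the closed-form gradient.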

EPFL, ETH Zurich & CSCS just released Apertus, Switzerland’s first fully open-source large language model.

Trained on 15T tokens in 1,000+ languages, it’s built for transparency, responsibility & the public good.

Read more: actu.epfl.ch/news/apertus...

02.09.2025 11:48 — 👍 54 🔁 29 💬 1 📌 6

Action potential 👉 3 faculty opportunities to join EPFL neuroscience 1. Tenure Track Assistant Professor in Neuroscience go.epfl.ch/neurofaculty, 2. Tenure Track Assistant Professor in Life Sciences Engineering, or 3. Associate Professor (tenured) in Life Sciences Engineering go.epfl.ch/LSEfaculty

18.08.2025 08:46 — 👍 11 🔁 3 💬 1 📌 0

Speakers and organizers of the GAC debate. Time and location of the GAC debate: 5 PM in Room C1.03.

Our #CCN2025 GAC debate w/ @gretatuckute.bsky.social, Gemma Roig (www.cvai.cs.uni-frankfurt.de), Jacqueline Gottlieb (gottlieblab.com), Klaus Oberauer, @mschrimpf.bsky.social & @brittawestner.bsky.social asks:

📊 What benchmarks are useful for cognitive science? 💭

2025.ccneuro.org/gac

13.08.2025 07:00 — 👍 50 🔁 16 💬 1 📌 1

The MIT Siegel Family Quest for Intelligence's community of scientists, engineers, faculty, students, staff, and supporters are aiming to understand intelligence – how brains produce it and how it can be replicated in artificial systems.

neuroscientist, psychiatrist, writer

optogenetics.org

karldeisseroth.org

https://www.amazon.com/Projections-Story-Emotions-Karl-Deisseroth/dp/1984853694

Assistant Professor in Neuroscience at the Donders Institute & Radboudumc.

Oscillations, language, the visual system, source reconstruction methods, and decoding. Open source enthusiast. https://britta-wstnr.github.io

Computational neuroscientist, NeuroAI lab @EPFL

Studying language in biological brains and artificial ones at the Kempner Institute at Harvard University.

www.tuckute.com

AI, Neuroscience and Music

EPFL Brain Mind Institute researchers develop & deploy technology to gain fundamental insight into brain & spinal cord systems, exploiting this knowledge for new therapies for brain disorders & towards novel intelligent machines https://go.epfl.ch/brain

PhD at EPFL 🧠💻

Ex @MetaAI, @SonyAI, @Microsoft

Egyptian 🇪🇬

The Algonauts Project, first launched in 2019, is on a mission to bring biological and machine intelligence researchers together on a common platform to exchange ideas and pioneer the intelligence frontier.

https://algonautsproject.com/

Strengthening Europe's Leadership in AI through Research Excellence | ellis.eu

AI + security at AISLE | Stanford PhD in AI & Cambridge physics | scientific progress

Cognitive neuroscientist studying visual and social perception. Asst Prof at JHU Cog Sci. She/her

As a hub for artificial intelligence, the EPFL AI Center leverages the extensive expertise of faculty and researchers across the Institution. It fosters a collaborative environment that nurtures multidisciplinary AI research, education, and innovation.

Helping machines make sense of the world. Asst Prof @icepfl.bsky.social; Before: @stanfordnlp.bsky.social @uwnlp.bsky.social AI2 #NLProc #AI

Website: https://atcbosselut.github.io/

Scientist, mentor, activist, explorer.

Biologist, McGill University