My initial point was about the moving frontier of knowledge in G2.5 and G3. Locating the areas correctly was mind-blowing to me.

I got fooled by the primate brain with a mouse olfactory bulb. I cannot hide my poor knowledge of mouse anatomy. But I will continue to work in comp neuro if you allow me.

21.11.2025 15:00 — 👍 0 🔁 0 💬 0 📌 0

It's not good. I think we agree! Gemini 2.5 and 3 cannot draw a mouse brain.

21.11.2025 14:49 — 👍 0 🔁 0 💬 0 📌 0

To show this funny "primate brain bias" in Gemini, I copy-pasted your message as a correction prompt (first image). The gyri disappeared, which is good. Then I tried to insist that it put the cerebellum behind and not below.

Apparently it still likes primate brains too much.

21.11.2025 14:37 — 👍 0 🔁 0 💬 1 📌 0

Exactly, Gemini loves to draw primate brains. It was ridiculous in G2.5, and cortices were all over the place.

I thought it was corrected in 3. Apparently not. Good: there is still a long way to go before it fools scientists with good anatomical knowledge.

IMO, the area locations were consistent with this image, weren't they?

21.11.2025 14:25 — 👍 0 🔁 0 💬 2 📌 0

Tried to correct the big flaws detected by @ctestard.bsky.social and @ackurth.bsky.social.

Well, clearly Gemini 3 is still strongly inclined to draw human or primate brains. The teeth look better, though.

21.11.2025 12:39 — 👍 1 🔁 0 💬 1 📌 0

Thanks 🙏! Indeed, I was too easily convinced by the olfactory bulb, but many things are missing. Good to see that AI models still have a long way to go.

My knowledge of mouse anatomy is quite limited, as you can see. The location of the cortices looked good to me. Any opinion about that?

21.11.2025 12:18 — 👍 0 🔁 0 💬 1 📌 0

Good catch!

Should probably look more like this ideally:

fr.wikipedia.org/wiki/Fichier...

21.11.2025 12:11 — 👍 0 🔁 0 💬 0 📌 0

Now with editing capability. It also nailed the locations of M2 and S2 (as far as I can fact-check myself).

Doing figures will never be the same.

21.11.2025 11:45 — 👍 2 🔁 0 💬 2 📌 0

This was the prompt:

"For a scientific paper I want to draw the head of a mouse with the mouse brain visible and dots locating the primary somatosensory S1 the primary visual cortex V1 and the primary motor cortex M1"

21.11.2025 11:45 — 👍 1 🔁 0 💬 1 📌 0

I am impressed by the improvement with Gemini 3 and nano 🍌.

I used Nano Banana to make scientific figures. With 2.5 it repeatedly put a human brain inside the 🐭 head.

Now it draws accurate mouse brain anatomy and even seems to locate the cortical areas correctly. Big jump IMO.

21.11.2025 11:32 — 👍 12 🔁 1 💬 2 📌 1

Whoopsy

09.11.2025 13:09 — 👍 5 🔁 0 💬 0 📌 0

AI and Neuroscience | IVADO

I’m looking for interns to join our lab for a project on foundation models in neuroscience.

Funded by @ivado.bsky.social and in collaboration with the IVADO regroupement 1 (AI and Neuroscience: ivado.ca/en/regroupem...).

Interested? See the details in the comments. (1/3)

🧠🤖

07.11.2025 13:52 — 👍 43 🔁 23 💬 1 📌 0

Introducing CorText: a framework that fuses brain data directly into a large language model, allowing for interactive neural readout using natural language.

tl;dr: you can now chat with a brain scan 🧠💬

1/n

03.11.2025 15:17 — 👍 128 🔁 52 💬 4 📌 8

Reminder: this is happening this Wed/Thu. Free spiking neural network conference, registration required (see below).

03.11.2025 15:28 — 👍 9 🔁 9 💬 0 📌 0

This was a fabulous, once-in-a-lifetime colloquium, and now the videos are available in high quality on the Collège de France website @college-de-france.fr

www.college-de-france.fr/fr/agenda/co...

With talks by Edvard Moser, Nancy Kanwisher, Liz Spelke, Manuela Piazza, Luca Bonatti and more!

02.11.2025 15:08 — 👍 54 🔁 24 💬 1 📌 1

New paper titled "Tracing the Representation Geometry of Language Models from Pretraining to Post-training" by Melody Z Li, Kumar K Agrawal, Arna Ghosh, Komal K Teru, Adam Santoro, Guillaume Lajoie, Blake A Richards.

LLMs are trained to compress data by mapping sequences to high-dim representations!

How does the complexity of this mapping change across LLM training? How does it relate to the model’s capabilities? 🤔

Announcing our #NeurIPS2025 📄 that dives into this.

🧵below

#AIResearch #MachineLearning #LLM

31.10.2025 16:19 — 👍 59 🔁 12 💬 1 📌 3

Tbf, people did request SE or STD in RL where things are less stable.

But now for big LLMs it is not uncommon (although not ideal) to manually restart from intermediate checkpoints.

If the model you publish is strong and people reproduce your work within 3 months, your work is very important.

27.10.2025 07:43 — 👍 0 🔁 0 💬 0 📌 0

Nope, SE or STD over models does not really capture this.

Depending on learning rate or net size, you can have one init ending at 50% acc and another 4 at 98.5%. That makes the STD and SE large and the difference with a CNN at 99% insignificant. Yet everybody knows it is as reproducible as the sunset and the sunrise.

27.10.2025 07:34 — 👍 0 🔁 0 💬 1 📌 0
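To make the seed example concrete, here is a minimal sketch with hypothetical per-seed MNIST accuracies (one init at 50%, four at 98.5%, a CNN reference at 99%; the numbers come from the post, the code is only an illustration). It shows how a single failed run inflates the STD and SE until the MLP/CNN gap no longer looks meaningful.

```python
import math
import statistics

# Hypothetical per-seed test accuracies (%), following the scenario in the post:
# one initialization ends at 50%, four others reach 98.5%.
mlp_runs = [50.0, 98.5, 98.5, 98.5, 98.5]
cnn_reference = 99.0  # illustrative "decent CNN" accuracy on MNIST

mean = statistics.mean(mlp_runs)
std = statistics.stdev(mlp_runs)        # sample standard deviation (n - 1 denominator)
se = std / math.sqrt(len(mlp_runs))     # standard error of the mean over seeds
median = statistics.median(mlp_runs)    # typical run, not dragged down by the outlier

print(f"mean={mean:.2f} std={std:.2f} se={se:.2f} median={median:.2f}")
print(f"gap to CNN: {cnn_reference - mean:.2f} points vs std {std:.2f}")
```

The mean drops to 88.8 with an STD of about 21.7, so the roughly 10-point gap to the CNN sits inside one STD, even though the median run (98.5%) reproduces the familiar MLP ceiling. The median is added here only as a contrast and was not proposed in the thread.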

Ok. An example: MNIST

Every ML researcher knows 98.5% means maxing out an MLP and 99% means a decent CNN. If you have worked with this, you know the margin is reproducible.

But reporting STD will not easily capture this margin: not STD over shuffles of the training/test split, and not STD over models.

26.10.2025 19:18 — 👍 0 🔁 0 💬 1 📌 0

Indeed, bad example 😅

26.10.2025 17:23 — 👍 0 🔁 0 💬 1 📌 0

I agree that inferential versus descriptive is important. But how does STD versus SE address the issue?

In ML for Neuro, the sqrt(n) factor is obscure. n may mean the number of animals, the number of models, the number of CV shuffles...

Either way, the error bar will not represent reproducibility well.

26.10.2025 13:32 — 👍 1 🔁 0 💬 2 📌 0
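A quick sketch of why the sqrt(n) factor is ambiguous: SE = STD / sqrt(n), so the same spread gives very different error bars depending on what is counted as n. The STD value and the n for each choice below are hypothetical, picked only for illustration.

```python
import math

def standard_error(std: float, n: int) -> float:
    """Standard error of the mean: SE = STD / sqrt(n)."""
    return std / math.sqrt(n)

# Hypothetical spread of a decoding accuracy, in percentage points.
std = 4.0

# The same STD divided by different (illustrative) choices of n.
candidate_ns = {"animals": 4, "models (seeds)": 16, "CV shuffles": 100}

for label, n in candidate_ns.items():
    print(f"n = {n:>3} ({label}): SE = {standard_error(std, n):.2f}")
```

With these numbers the error bar shrinks from 2.00 to 1.00 to 0.40 points without the underlying variability changing at all. That is the point of the post: the error bar mostly reflects the choice of n, not how reproducible the result is.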

Maybe neuroscience has been overly obsessed with statistical tests, wasting time rebranding means, STDs and linear regressions behind complicated tests.

Only to find out in the next batch of papers that those statistical tests are easy to cheat with, or yield significant but irrelevant results.

26.10.2025 12:19 — 👍 6 🔁 0 💬 1 📌 0

Or maybe neuro-statisticians should learn from ML.

1st: Yes, most ML researchers have had strong training in applied math, including stats and probability.

2nd: When the reproducibility that matters is clear, the ML field quickly agrees on a simple metric, so there is no need to make things complicated.

26.10.2025 12:16 — 👍 6 🔁 0 💬 1 📌 0

Okay, sorry, I had misunderstood from the context of our last discussion. Cool.

End-to-end back-prop works wonderfully well, so it is good to study this case too. Cool work!

25.10.2025 17:48 — 👍 1 🔁 0 💬 0 📌 0

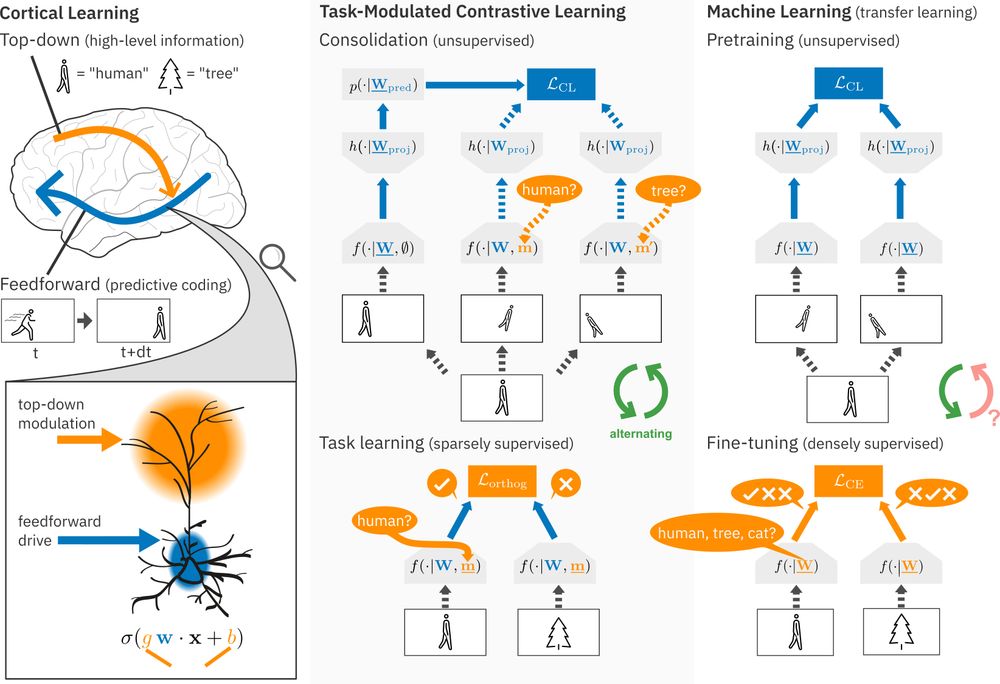

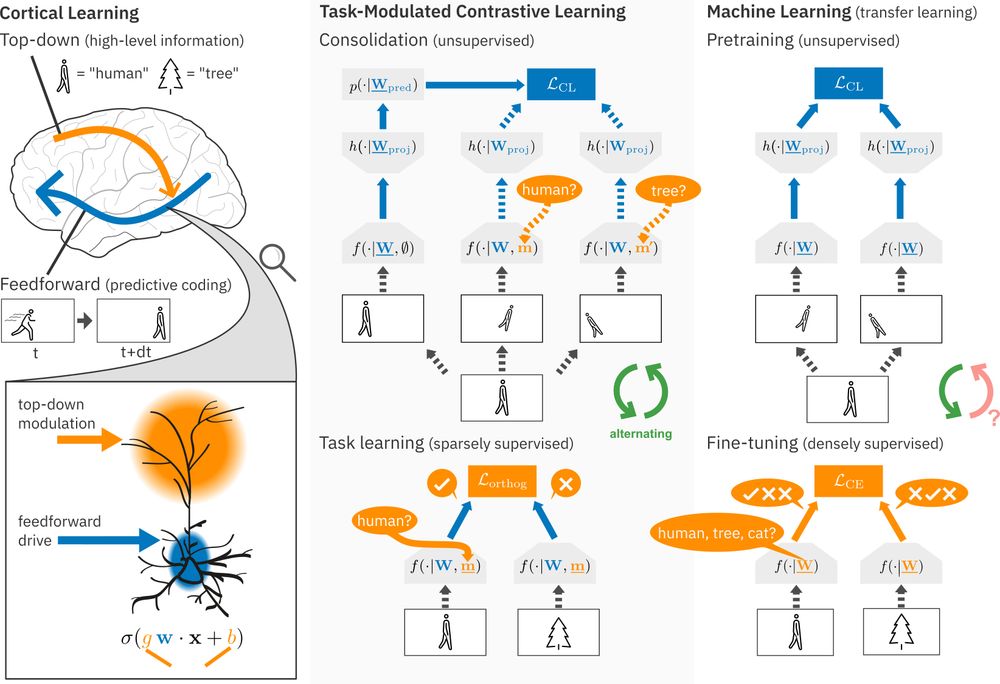

Cool. Does the modulation completely replace data augmentation via image transformations? (Does your self-supervised learning still work, to within a few percent of accuracy, if you disable data augmentation completely?)

Are all the gradients for the weight updates computed layer-wise?

25.10.2025 16:30 — 👍 0 🔁 0 💬 1 📌 0

New #NeuroAI preprint on #ContinualLearning!

Continual learning methods struggle in mostly unsupervised environments with sparse labels (e.g. parents telling their child the object is an 'apple').

We propose that in the cortex, predictive coding of high-level top-down modulations solves this! (1/6)

10.06.2025 13:17 — 👍 22 🔁 6 💬 1 📌 1

Cool work!!

I only had a quick read but congrats 🎉

The idea of modeling top-down plasticity as rare labeled 1-class-vs-all classifiers is very elegant.

Are the ff parameters W learned via contrastive learning? But I did not understand what the modulation does for unlabeled samples.

24.10.2025 08:37 — 👍 0 🔁 0 💬 1 📌 0

Originally a PL researcher, now ML engineer at Bardeen. EU native, US currently. Opinions are a weighted average of everyone around me (the weights are mine). Also @gcampax@mastodon.social. he/him

Ph.D. student studying the in vivo identification of cell types and the neural dynamics of decision making in prefrontal cortex. Chand Lab @ BU; NINDS F31 Fellow; prev. UW, Allen Inst., and U. Puget Sound. From Hawaii 🌴

Computational Neuroscientist:Neural Circuits:Neural Data:PostDoctoral Researcher at RIKEN CBS with Toshitake Asabuki

Neuroethology and Ecology of social behavior in mammals. Special interest in how animals cope with extreme environmental challenges. PhD in Platt lab @Penn, now Junior Fellow @Harvard and Branco Weiss Fellow with Dulac lab

We build probabilistic #MachineLearning and #AI Tools for scientific discovery, especially in Neuroscience. Probably not posted by @jakhmack.bsky.social.

📍 @ml4science.bsky.social, Tübingen, Germany

Researcher in NLP in the ALMAnaCH team (Inria Paris)

ALMAnaCH, the Inria Paris NLP research team.

Cognitive & Computational Neuro Scientist - studying electrophysiological signals in human brains, mostly by writing Python code.

Lecturer of Cognitive Neuroscience @ University of Manchester.

https://tomdonoghue.github.io/

data science postdoc in Tübingen 🧬🖥️🧠 scRNA data analysis, UMAP/tSNE & retina neuroscience | science journalism on AI & sustainability 🤖❤️🌍 | easily sidetracked by small plot details & cool birds 📈🔍🦜

Author of Your Brain is a Time Machine: the Neuroscience and Physics of time.

A brain studying brains at UCLA

Tenure-track astronomer at STScI/JHU working on galaxies, machine learning, and AI for scientific discovery. Opinions my own. He/him.

Website: https://jwuphysics.github.io/

GENCI (Grand Équipement National de Calcul Intensif) is a major research infrastructure, a public operator aiming to democratize the use of numerical simulation through high-performance computing combined with AI and quantum computing.

Post-doc at Cornell Tech NYC

Working on the representations of LMs and pretraining methods

https://nathangodey.github.io

neuromantic - ML and cognitive computational neuroscience - PhD student at Kietzmann Lab, Osnabrück University.

⛓️ https://init-self.com

I build tools that propel communities forward

PhD student at Mila & McGill University, Vanier scholar • 🧠+🤖 grad student• Ex-RealityLabs, Meta AI • Believer in Bio-inspired AI • Comedy+Cricket enthusiast

🔥 Blog by Dariusz Majgier. Fun facts, science, brilliant ideas & ways to save money:

👉 https://patreon.com/go4know

🔥 Get prompts, styles & tutorials. Learn how to create Midjourney images/videos for FREE!

👉 Join me: https://patreon.com/ai_art_tutorials

Comp-#CogSci TT-Prof - follow.me @ @benediktehinger@scholar.social

🧠, #vision, #eyetracking, #cognition, VR/mobile #EEG, methods, design (www.thesis-art.de), teaching & supervising

our lab mainly develops in #julialang

PhD student on Dendritic Learning/NeuroAI with Willem Wybo, at Emre Neftci's lab (@fz-juelich.de).

ktran.de