Actual problems like AI in space?

www.spacex.com/updates#xai-...

Anton Baumann

@antonbaumann.bsky.social

Excited to share our new work on Self-Distillation Policy Optimization (SDPO)!

SDPO is a simple algorithm that turns textual feedback into logit-level learning signals, enabling sample-efficient RL from runtime errors, LLM judges, and even binary feedback.

Preprint: arxiv.org/abs/2601.20802

SDPO enables RL agents to learn from rich feedback (i.e., not only whether an attempt failed, but why it failed, such as error messages). Even without such rich feedback, SDPO can reflect on past attempts and outperform GRPO. SDPO also accelerates solution discovery at test time!

30.01.2026 07:17 — 👍 6 🔁 1 💬 0 📌 0

Training LLMs with verifiable rewards uses a 1-bit signal per generated response. This hides why the model failed.

Today, we introduce a simple algorithm that enables the model to learn from any rich feedback and turns that feedback into dense supervision!

(1/n)

This has now been accepted at @iclr-conf.bsky.social !

26.01.2026 15:52 — 👍 34 🔁 2 💬 2 📌 0

It's really hard to tell nowadays what is a made-up joke and what is reality.

16.01.2026 09:41 — 👍 7 🔁 2 💬 0 📌 0

The Nobel Prize committee should announce the World Cup winner tomorrow

06.12.2025 04:29 — 👍 38765 🔁 7495 💬 510 📌 302

Super interesting! Will the talk be recorded, or will the slides be available afterward?

06.12.2025 16:09 — 👍 0 🔁 0 💬 0 📌 0

I am hiring a PhD & postdoc to work together with me at KTH on probabilistic machine learning. Both positions are fully funded and part of WASP.

I will be attending @euripsconf.bsky.social, if you are around and want to talk about the positions or what we do at KTH, then ping me and we can meet.

Want to work on Trustworthy AI? 🚀

I'm seeking exceptional candidates to apply for the Digital Futures Postdoctoral Fellowship to work with me on Uncertainty Quantification, Bayesian Deep Learning, and Reliability of ML Systems.

The position will be co-advised by Hossein Azizpour or Henrik Boström.

Unfortunately, our submission to #NeurIPS wasn’t accepted, with review scores of (5,4,4,3). But because I think it’s an excellent paper, I decided to share it anyway.

We show how to efficiently apply Bayesian learning in VLMs, improve calibration, and do active learning. Cool stuff!

📝 arxiv.org/abs/2412.06014

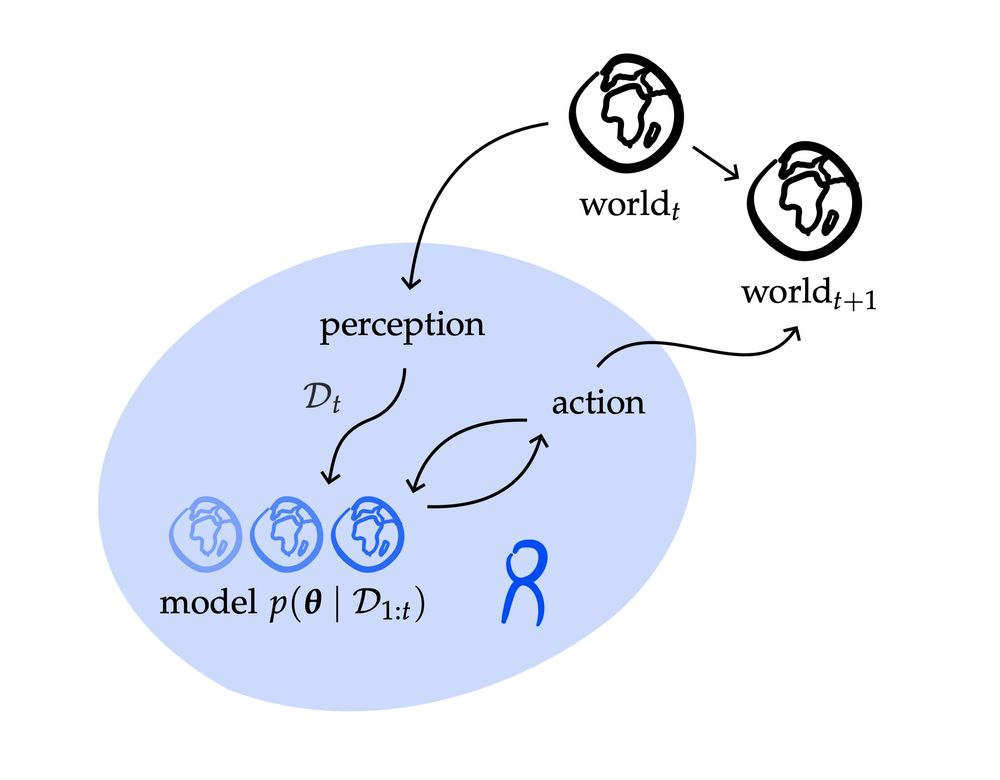

I'm very excited to share notes on Probabilistic AI that I have been writing with @arkrause.bsky.social 🥳

arxiv.org/pdf/2502.05244

These notes aim to give a graduate-level introduction to probabilistic ML + sequential decision-making.

I'm super glad to be able to share them with all of you now!

Tomorrow I’ll be presenting our recent work on improving LLMs via local transductive learning in the FITML workshop at NeurIPS.

Join us for our ✨oral✨ at 10:30am in east exhibition hall A.

Joint work with my fantastic collaborators Sascha Bongni, @idoh.bsky.social, @arkrause.bsky.social

I will present ✌️ BDU workshop papers @ NeurIPS: one by Rui Li (looking for internships) and one by Anton Baumann.

🔗 to extended versions:

1. 🙋 "How can we make predictions in BDL efficiently?" 👉 arxiv.org/abs/2411.18425

2. 🙋 "How can we do prob. active learning in VLMs?" 👉 arxiv.org/abs/2412.06014