Didn't quite make it to SIGGRAPH? Consider submitting to SGP, the abstract deadline is tomorrow: sgp2025.my.canva.site/submit-page-...

03.02.2025 20:33

@lilygoli.bsky.social

PhD student at University of Toronto, Research Intern at Waabi, ex. Google DeepMind, 3D Vision Enthusiast

I need to either read or write a blog post about getting scooped!

It’s becoming outrageous.

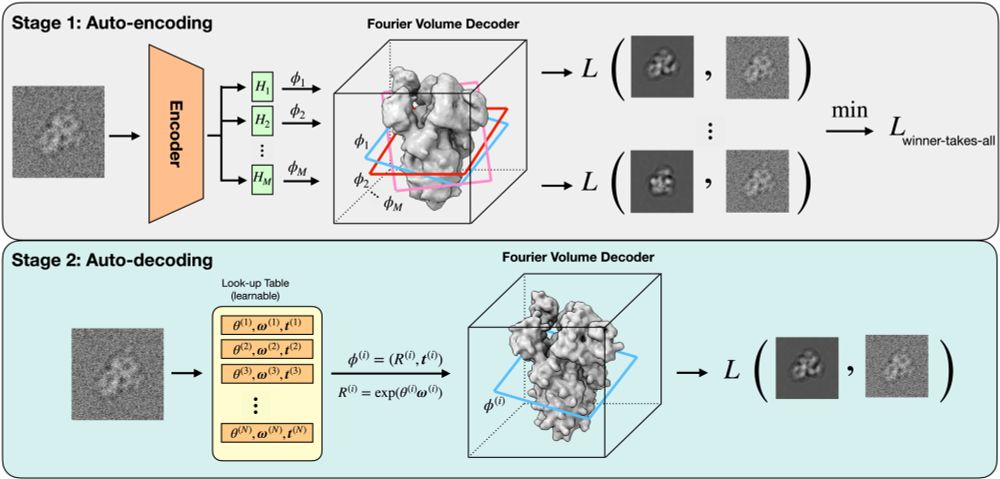

Come check out our work on semi-amortized inference for cryoEM today at NeurIPS!

📅 Friday, Dec 13, 11 AM - 2 PM

📌 East Exhibit Hall A-C, Poster #1105

Paper: arxiv.org/abs/2406.10455

Website: shekshaa.github.io/semi-amortized-cryoem/

With: Shayan Shekarforoush, David Lindell and David Fleet

See you at #neurips2024? 🤩

09.12.2024 18:09

That words my frustration perfectly! Though the other side of the coin is that you’ll probably become an expert in that subject, which can be useful.

07.12.2024 16:13

Disclaimer: these are personal conclusions, i.e., your personality has a huge effect on how well these thoughts apply to you.

Note: the picture is from my breakfast at GDM.

Thought 3:

Developing your own code is a great learning process, but always be aware of the open-source code out there. Polished code released after months of effort is probably more useful than code you wrote in half a day and never tested extensively.

Thought 2: collaboration

Good collaborators can have a huge impact on your progress. However, you shouldn’t necessarily start a collaboration with every brilliant person out there. Only do so if you have a clear idea of how you all fit into the project, or find a project that fits the collaboration.

Is that exciting, though? For me, not as much as diving into something new.

Is it wise to switch focus often? Probably not. There’s a balance, and with the field moving so fast, it often leans toward sticking with what you know best.

Thought 1: speed

As PhD students, we usually want to maximize publications, which can lead to writing papers quickly. Can a paper written relatively fast be a good paper? I would argue: only if it builds on your previous good work, i.e., you already know a lot about that subject. /

Breakfast photo

Wrapping up my year as a Student Researcher at Google DeepMind today! 🥲 It’s been an amazing experience (proof by picture 😅).

Excited to join Waabi in the new year and do some cool research on robustness in 🚗 🤖!

I reflected on the past 3.5 years of my PhD today; here are some thoughts /

I have not used it in a loss before, but I do agree that if the epipolar error is not too high or too low compared to your overall stats, you probably can't rely on it to infer anything.
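The point that only the tails of the epipolar-error distribution are informative can be sketched as a three-way labeling: clearly low error means "probably static", clearly high error means "probably moving", and everything in between stays unlabeled. This is a minimal illustration, not RoMo's code: "overall stats" is assumed here to mean percentiles of the per-point errors, and the function names and the cut-offs `low_q` / `high_q` are hypothetical choices.

```python
from typing import List, Optional, Sequence


def _nearest_rank_quantile(sorted_vals: Sequence[float], q: float) -> float:
    """Nearest-rank quantile of an already sorted, non-empty list."""
    idx = int(round(q * (len(sorted_vals) - 1)))
    idx = min(len(sorted_vals) - 1, max(0, idx))
    return sorted_vals[idx]


def weak_motion_labels(
    errors: Sequence[float], low_q: float = 0.2, high_q: float = 0.8
) -> List[Optional[int]]:
    """Label each point 0 (likely static), 1 (likely moving) or None (uncertain).

    Hypothetical thresholds: only errors in the low or high tail of the
    distribution get a label; anything in between is left unlabeled.
    """
    if not errors:
        return []
    s = sorted(errors)
    lo = _nearest_rank_quantile(s, low_q)
    hi = _nearest_rank_quantile(s, high_q)
    labels: List[Optional[int]] = []
    for e in errors:
        if e <= lo:
            labels.append(0)
        elif e >= hi:
            labels.append(1)
        else:
            labels.append(None)  # too close to typical error to say anything
    return labels


errors = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 5.0, 6.0]
print(weak_motion_labels(errors))
# [0, 0, 0, None, None, None, None, None, 1, 1, 1]
```

Leaving the middle band as `None` instead of forcing a label keeps ambiguous points out of any loss, which matches the intuition that mid-range errors can't be relied on.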

02.12.2024 22:57

This was my final project at Google DeepMind, and an amazing experience. I am very happy that I had the chance to work with my great collaborators on it: Sara Sabour, Mark Matthews, @marcusabrubaker.bsky.social, Dmitry Lagun, Alec Jacobson, David Fleet, Saurabh Saxena and @taiyasaki.bsky.social

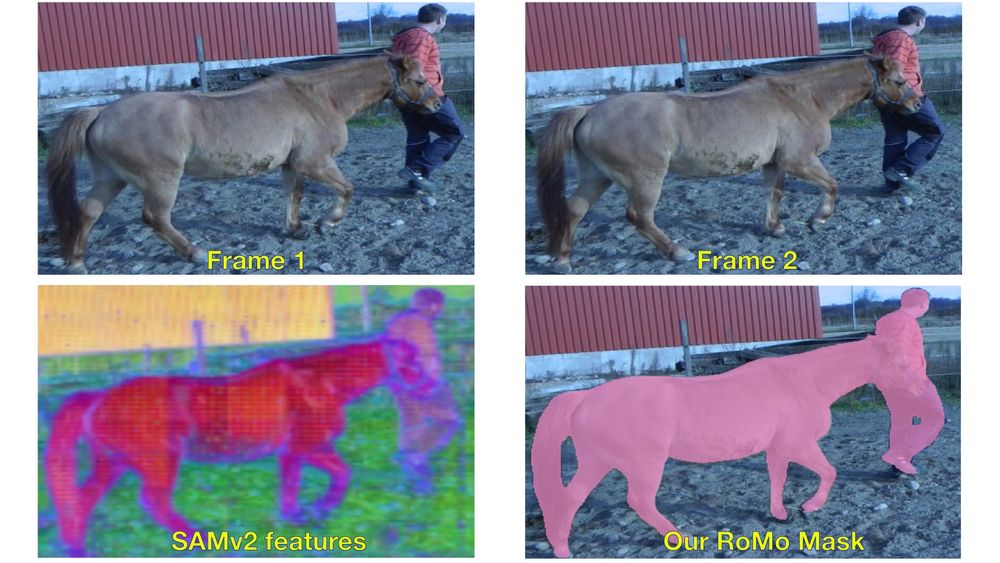

02.12.2024 03:14

…and with that, we get good-quality masks without human annotation or synthetic supervision!

Look at our website for more results!

romosfm.github.io

We train a tiny MLP that classifies SAMv2 features as moving or static given the weak supervisory signal from high and low error masks. These features help complete the motion masks over the video effectively!
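A toy version of this completion step: a small classifier is trained only on the weakly labeled points, then queried on every point to fill in the rest of the mask. This is a minimal pure-Python sketch, not RoMo's implementation: the made-up 2-D vectors stand in for SAMv2 features, and the class name, hidden size, learning rate and epoch count are all hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence


def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyMotionMLP:
    """Hypothetical tiny MLP: one tanh hidden layer + sigmoid, P(moving | feature)."""

    def __init__(self, in_dim: int, hidden: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(in_dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.5) for _ in range(hidden)]
        self.b2 = 0.0

    def _forward(self, x: Sequence[float]):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        return h, _sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)

    def predict(self, x: Sequence[float]) -> float:
        return self._forward(x)[1]

    def fit(self, feats: Sequence[Sequence[float]], labels: Sequence[Optional[int]],
            lr: float = 0.1, epochs: int = 200) -> None:
        # Weak supervision: only points labeled 0 (static) or 1 (moving) are used;
        # None marks uncertain points, which are skipped during training.
        data = [(x, y) for x, y in zip(feats, labels) if y is not None]
        for _ in range(epochs):
            for x, y in data:
                h, p = self._forward(x)
                dz = p - y  # d(binary cross-entropy) / d(logit)
                for j, hj in enumerate(h):
                    dh = dz * self.w2[j] * (1.0 - hj * hj)  # backprop through tanh
                    self.w2[j] -= lr * dz * hj
                    for i, xi in enumerate(x):
                        self.w1[j][i] -= lr * dh * xi
                    self.b1[j] -= lr * dh
                self.b2 -= lr * dz


# Made-up features: three low-error (static) and three high-error (moving)
# points are labeled; the last two are uncertain and get no label.
feats = [(-1.0, -1.2), (-0.8, -1.0), (-1.1, -0.9),
         (1.0, 1.1), (0.9, 1.2), (1.2, 0.8),
         (-0.9, -0.7), (0.8, 0.9)]
labels: List[Optional[int]] = [0, 0, 0, 1, 1, 1, None, None]

model = TinyMotionMLP(in_dim=2)
model.fit(feats, labels)
# "Complete" the mask: classify every point, including the unlabeled ones.
completed = [int(model.predict(x) > 0.5) for x in feats]
print(completed)
```

The unlabeled points are never seen during training, yet they receive a prediction because the classifier generalizes over the feature space; that is the sense in which rich features can "complete" sparse weak labels.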

02.12.2024 03:14

So... how does it work?

We estimate the fundamental matrix between each pair of adjacent frames in the video with RANSAC. We then identify parts of the frame with a very low or a very high epipolar error and use them as weak supervision signals to find the moving objects. Now, how do we complete these signals?
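To make "epipolar error" concrete, here is a minimal pure-Python sketch that assumes the fundamental matrix `F` is already estimated (in practice it could come from OpenCV's `cv2.findFundamentalMat` with the `cv2.FM_RANSAC` flag). It uses the symmetric point-to-epipolar-line distance, which is one common definition; the thread does not say which error RoMo uses, and the helper names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Mat3 = Sequence[Sequence[float]]


def _line_from(F: Mat3, p: Point, transpose: bool = False) -> Tuple[float, ...]:
    """Epipolar line F @ x (or F.T @ x) for the homogeneous point x = (u, v, 1)."""
    x = (p[0], p[1], 1.0)
    if transpose:
        return tuple(sum(F[c][r] * x[c] for c in range(3)) for r in range(3))
    return tuple(sum(F[r][c] * x[c] for c in range(3)) for r in range(3))


def _point_line_distance(p: Point, line: Sequence[float]) -> float:
    a, b, c = line
    norm = math.hypot(a, b)
    if norm == 0.0:
        return float("inf")  # degenerate line
    return abs(a * p[0] + b * p[1] + c) / norm


def symmetric_epipolar_error(F: Mat3, p1: Point, p2: Point) -> float:
    """Mean distance of each point to the epipolar line induced by the other.

    F maps points in frame 1 to lines in frame 2 (x2^T F x1 = 0 for a static point).
    """
    d2 = _point_line_distance(p2, _line_from(F, p1))
    d1 = _point_line_distance(p1, _line_from(F, p2, transpose=True))
    return 0.5 * (d1 + d2)


# Toy F for a camera translating purely along x: epipolar lines are image rows.
F = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
print(symmetric_epipolar_error(F, (10.0, 20.0), (15.0, 20.0)))  # 0.0: consistent with camera motion
print(symmetric_epipolar_error(F, (10.0, 20.0), (15.0, 23.0)))  # 3.0: off the epipolar line
```

A point that moves along its own epipolar line (here, horizontally) also gets zero error, which is part of why low error is only a weak "static" signal rather than a guarantee.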

Okay but why care about motion masks?

We show that good motion masks improve SfM performance, making COLMAP + our masks the SOTA on synthetic benchmarks. We also collect a real evaluation dataset with GT camera poses using a robotic arm, to evaluate our method on real casual captures.
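One plausible way to feed motion masks to COLMAP is its `ImageReader.mask_path` option for feature extraction: COLMAP extracts no features where the mask is black (0), and expects the mask for `image_path/abc/012.jpg` at `mask_path/abc/012.jpg.png`. The sketch below only converts a binary motion mask (1 = moving) into that convention and builds the matching path; the helper names are hypothetical, writing the PNG is left to any image library, and the thread does not say this is exactly how RoMo's masks were combined with COLMAP.

```python
import os
from typing import List, Sequence


def to_colmap_mask(motion_mask: Sequence[Sequence[int]]) -> List[List[int]]:
    """Map a binary motion mask (1 = moving, 0 = static) to COLMAP's convention.

    COLMAP skips feature extraction where the mask is black (0), so moving
    pixels become 0 and static pixels become 255.
    """
    return [[0 if m else 255 for m in row] for row in motion_mask]


def colmap_mask_path(mask_root: str, image_rel_path: str) -> str:
    """Mask for <image_path>/abc/012.jpg lives at <mask_root>/abc/012.jpg.png."""
    return os.path.join(mask_root, image_rel_path + ".png")


print(to_colmap_mask([[0, 1], [1, 0]]))  # [[255, 0], [0, 255]]
print(colmap_mask_path("masks", os.path.join("abc", "012.jpg")))
```

With the PNGs written, extraction would then run with something like `colmap feature_extractor --database_path db.db --image_path images --ImageReader.mask_path masks`, so keypoints on moving objects never enter matching or bundle adjustment.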

Our masks are robust to slow or fast camera movements and can find multiple moving objects even when they are in the background. Look at the pedestrian! 🚶

02.12.2024 03:14

First and foremost: some results!!

In RoMo, an optimization process disentangles camera ego-motion from scene motion, yielding masks for moving objects 🛵

Hello everyone!! 👋

Excited to be here and share our latest work to get started!

RoMo: Robust Motion Segmentation Improves Structure from Motion

romosfm.github.io

Boost the performance of your SfM pipeline on dynamic scenes! 🚀 RoMo masks dynamic objects in a video in a zero-shot manner.