
Martin Trapp - Assistant Professor in Machine Learning at KTH Royal Institute of Technology.

Want to work on Trustworthy AI? 🚀

I'm seeking exceptional candidates to apply for the Digital Futures Postdoctoral Fellowship to work with me on Uncertainty Quantification, Bayesian Deep Learning, and Reliability of ML Systems.

The position will be co-advised by Hossein Azizpour or Henrik Boström.

02.10.2025 14:46 — 👍 10 🔁 4 💬 1 📌 0

DeSplat: Decomposed Gaussian Splatting for Distractor-Free Rendering

Gaussian splatting enables fast novel view synthesis in static 3D environments. However, reconstructing real-world environments remains challenging as distractors or occluders break the multi-view con...

Paper, videos, and code (nerfstudio) are available!

📄 arxiv.org/abs/2411.19756

🎈 aaltoml.github.io/desplat/

Big ups to Yihao Wang, @maturk.bsky.social, Shuzhe Wang, Juho Kannala, and @arnosolin.bsky.social for making this possible during my time at @aalto.fi 💙🤍

#AaltoUniversity #CVPR2025

[8/8]

13.06.2025 08:04 — 👍 3 🔁 1 💬 0 📌 0

DeSplat has the same FPS and training time as vanilla 3DGS, with some additional overhead for storing distractor Gaussians. Extending it with MLPs or other models is also possible. Adapting DeSplat to video remains to be explored, since distractors that barely move across images can be mistaken for static content. [7/8]

13.06.2025 08:01 — 👍 0 🔁 0 💬 1 📌 0

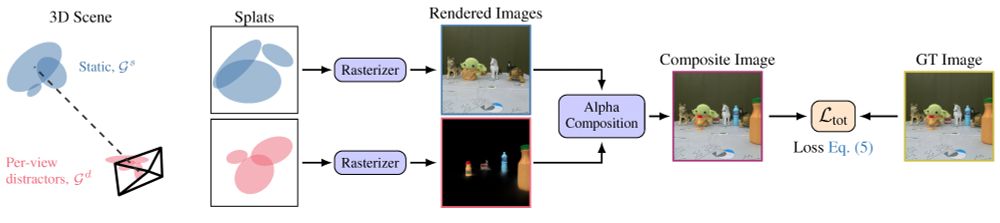

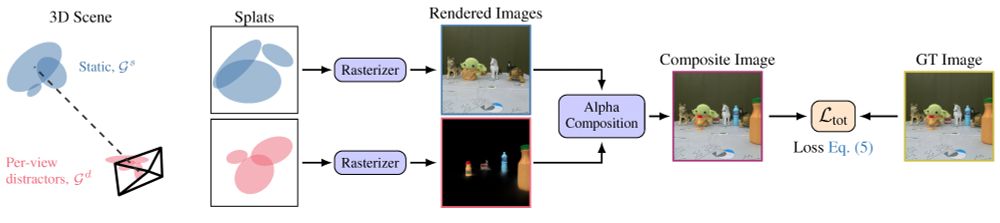

This decomposed splatting (DeSplat) approach explicitly separates distractors from static parts. Earlier methods (e.g. SpotlessSplats, WildGaussians) use loss masking of detected distractors to avoid overfitting, while DeSplat instead jointly reconstructs distractor elements.

[6/8]

13.06.2025 07:59 — 👍 0 🔁 0 💬 1 📌 0

Knowing how 3DGS treats distractors, we initialize a set of Gaussians close to every camera view for reconstructing view-specific distractors. The Gaussians initialized from the point cloud should reconstruct static stuff. These separately rendered images are alpha-blended during training.

[5/8]

13.06.2025 07:58 — 👍 0 🔁 0 💬 1 📌 0
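The blending step in [5/8] can be illustrated with standard "over" alpha compositing. This is only a minimal sketch, not the DeSplat implementation: it assumes the distractor layer sits in front of the static layer and that colours are not premultiplied by alpha. The function name `blend_over` and the array layout (H x W x 3 colours, H x W alpha) are hypothetical choices made for illustration.

```python
import numpy as np


def blend_over(distractor_rgb, distractor_alpha, static_rgb):
    """Composite a distractor render over a static render (hypothetical helper).

    distractor_rgb, static_rgb: arrays of shape (H, W, 3) with values in [0, 1].
    distractor_alpha: array of shape (H, W), opacity of the distractor layer.
    Assumes non-premultiplied colours; this is generic alpha compositing,
    not code taken from the DeSplat repository.
    """
    distractor_rgb = np.asarray(distractor_rgb, dtype=np.float64)
    static_rgb = np.asarray(static_rgb, dtype=np.float64)
    # Clamp alpha to [0, 1] and add a channel axis so it broadcasts over RGB.
    alpha = np.clip(np.asarray(distractor_alpha, dtype=np.float64), 0.0, 1.0)
    alpha = alpha[..., None]
    return alpha * distractor_rgb + (1.0 - alpha) * static_rgb


# A 1x2 image: blue static scene, red distractor covering only the second pixel.
static = np.array([[[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]])
distractor = np.array([[[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]])
alpha = np.array([[0.0, 0.25]])
blended = blend_over(distractor, alpha, static)
```

Where the distractor alpha is zero, the blended pixel equals the static render, so a static-only image is obtained by simply not compositing the distractor layer.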

In a viewer, you can see that these spurious artefacts are thin and located close to the camera view. For the scene-overfitting approach in 3DGS, this makes sense: an object that appears in only one view must be located so close to the camera that no other camera view can see it.

[4/8]

13.06.2025 07:56 — 👍 0 🔁 0 💬 1 📌 0

This BabyYoda scene from RobustNeRF is similar to a crowdsourced scenario, where a set of static toys appears together with toys that are placed inconsistently between the frames.

Vanilla 3DGS is quite robust here, but some views end up being rendered with spurious artefacts (right image).

[3/8]

13.06.2025 07:55 — 👍 0 🔁 0 💬 1 📌 0

Our goal is to learn a scene representation from images that include non-static objects we refer to as distractors. An example is crowdsourced images where different people appear at different locations in the scene, which creates multi-view inconsistencies between the frames.

[2/8]

13.06.2025 07:53 — 👍 0 🔁 0 💬 1 📌 0

👋Interested in Gaussian splatting and removing dynamic content from images?

Our DeSplat is presented today at #CVPR2025 at Poster Session 1, ExHall D Poster #52.

Yihao will be there to present our fully splatting-based method for separating static and dynamic stuff in images.

🧵[1/8]

13.06.2025 07:52 — 👍 6 🔁 1 💬 1 📌 1

Woke up early, jet-lagged, and having a hard time deciding on a workshop today @cvprconference.bsky.social?

Here's a reliable choice for you: our workshop on 🛟 Uncertainty Quantification for Computer Vision!

🗓️ Day: Wed, Jun 11

📍Room: 102 B

#CVPR2025 #UNCV2025

11.06.2025 11:33 — 👍 9 🔁 3 💬 0 📌 0

KTH | Postdoc in robotics with specialization in visual domain adaptation

KTH jobs is where you search for jobs at www.kth.se.

KTH is looking for a *Postdoc* to work on visual domain adaptation for mobile robot perception in a joint project with Ericsson in Stockholm.

Apply by May 15 if you are interested in working with computer vision applied to real robots!

More info: www.kth.se/lediga-jobb/...

23.04.2025 10:08 — 👍 2 🔁 1 💬 0 📌 0

UNCV Workshop @ CVPR 2025

CVPR 2025 Workshop on Uncertainty Quantification for Computer Vision.

Submission deadline is extended to March 20 for submitting your paper to our #CVPR2025 workshop on Uncertainty Quantification for Computer Vision.

Looking forward to seeing your submissions on recognizing failure scenarios and enabling robust vision systems!

More info: uncertainty-cv.github.io/2025/

17.03.2025 17:29 — 👍 11 🔁 5 💬 0 📌 0

There is still time to submit your papers to our #CVPR2025 workshop on Uncertainty Quantification for Computer Vision, which is part of the workshop lineup at the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) in Nashville, Tennessee.

08.03.2025 15:08 — 👍 13 🔁 6 💬 2 📌 2

I will present ✌️ BDU workshop papers @ NeurIPS: one by Rui Li (looking for internships) and one by Anton Baumann.

🔗 to extended versions:

1. 🙋 "How can we make predictions in BDL efficiently?" 👉 arxiv.org/abs/2411.18425

2. 🙋 "How can we do prob. active learning in VLMs?" 👉 arxiv.org/abs/2412.06014

10.12.2024 15:18 — 👍 18 🔁 4 💬 1 📌 1

Professor of Computer Vision/Machine Learning at Imagine/LIGM, École nationale des Ponts et Chaussées @ecoledesponts.bsky.social Music & overall happiness 🌳🪻 Born well below 350ppm

📍Paris 🔗 https://davidpicard.github.io/

Rerun is building the multimodal data stack

🕸️ Website https://rerun.io/

⭐ GitHub http://github.com/rerun-io/rerun

👾 Discord http://discord.gg/ZqaWgHZ2p7

go bears!!!

jessicad.ai

kernelmag.io

Professor at ISTA (Institute of Science and Technology Austria), heading the Machine Learning and Computer Vision group. We work on Trustworthy ML (robustness, fairness, privacy) and transfer learning (continual, meta, lifelong). 🔗 https://cvml.ist.ac.at

Ph.D. student at Visual Recognition Group, Czech Technical University in Prague

🔗 https://stojnicv.xyz

Associate Professor of Computer Science at SLU. Computer vision and machine learning. Trying to do a bit of good in the world by looking at pixels.

https://jytime.github.io/

Large Models, Multimodality, Continual Learning | ELLIS ML PhD with Oriol Vinyals & Zeynep Akata | Previously Google DeepMind, Meta AI, AWS, Vector, MILA

🔗 karroth.com

Postdoctoral Researcher at FAIR/MetaAI: DINOv3 and world models.

PhD at KTH Stockholm: deep learning explainability, concept-based visual representations and reasoning.

https://baldassarrefe.github.io/

Machine learning, environmental modeling, sustainability, robotics

Professor @UCL

He/him

PhD Student in #ComputerVision at the University of Bologna and Verizon Connect. Between Florence and Compostela.

Los Angeles - London

Follow for all things synthesizer!

We’ve got a strong synthesizer community here on Bluesky. Join us!!

#synthsky #synthfam #synthesizer #modular #electronica #ambient

Comics by Jorge Cham: Oliver's Great Big Universe, Elinor Wonders Why, ScienceStuff and PHD Comics

senior research scientist at Google | author of DreamBooth

https://natanielruiz.github.io/

Associate professor in Statistics at Uppsala University.

https://mansmeg.github.io/

ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT). June 23rd to June 26th, 2025, in Athens, Greece. #FAccT2025

https://facctconference.org/

Postdoc @ UC Berkeley. 3D Vision/Graphics/Robotics. Prev: CS PhD @ Stanford.

janehwu.github.io

Professor of Statistics @ ESSEC Business School Asia-Pacific campus Singapore 🇸🇬

https://pierrealquier.github.io/

Previously: RIKEN AIP 🇯🇵 ENSAE Paris 🇫🇷 🇪🇺 UCD Dublin 🇮🇪 🇪🇺

Random posts about stats/maths/ML/AI, poor jokes & bird photos 🌈

Safe and robust AI/ML, computational sustainability. Former President AAAI and IMLS. Distinguished Professor Emeritus, Oregon State University. https://web.engr.oregonstate.edu/~tgd/

Aalto University (Aalto-yliopisto) is a multidisciplinary university, where science and art meet technology and business. #AaltoYliopisto #AaltoUniversity