All this history is nice, but which method actually performs best for math?

Read our latest blog to find out:

huggingface.co/spaces/huggi...

[6/N]

@garrethlee.bsky.social

🇮🇩 | Co-Founder at Mundo AI (YC W25) | ex-{Hugging Face, Cohere}

All this history is nice, but which method actually performs best for math?

Read our latest blog to find out:

huggingface.co/spaces/huggi...

[6/N]

Rumor has it that earlier Claude models used a modified three-digit tokenization, processing numbers right-to-left instead of left-to-right.

This method mirrors how we often read and interpret numbers, like grouping digits with commas. Theoretically, this should help with math reasoning!

[5/N]

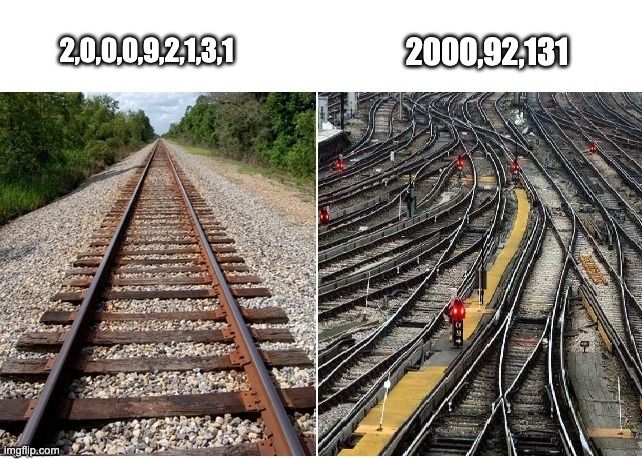

Alas, tokenizing numbers as digits was costly:

A 10-digit numbers now took 10 tokens instead of 3-4, which is ~2-3x more than before. That's a significant hit on training & inference costs!

LLaMA 3 fixed this by grouping numbers into threes, balancing compression and consistency.

[4/N]

Then came LLaMA 1, which took a clever approach to fix number inconsistencies: it tokenized numbers into individual digits (0-9), meaning any large number could now be represented with just 10 tokens.

The consistent representation of numbers made mathematical reasoning much better!

[3/N]

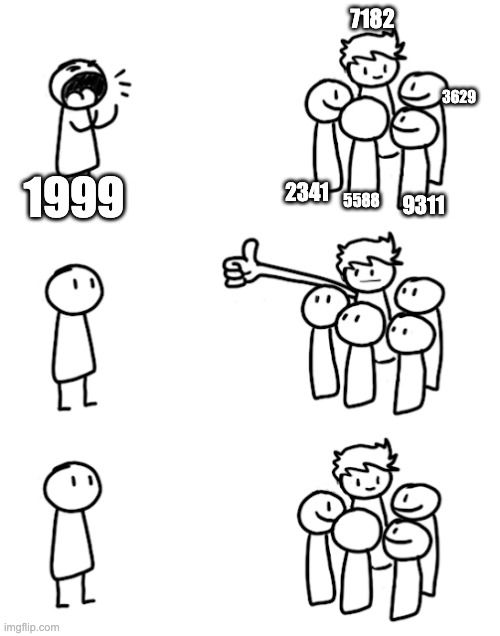

When GPT-2 came out in 2019, its tokenizer used byte-pair encoding (BPE), still common today:

• Merges frequent substrings, saving memory vs. inputting single characters

• However, vocabulary depends on training data

• Common numbers (e.g., 1999) get single tokens; others are split

[2/N]

🚀 With Meta's recent paper replacing tokenization in LLMs with patches 🩹, I figured that it's a great time to revisit how tokenization has evolved over the years using everyone's favourite medium - memes!

Let's take a trip down memory lane!

[1/N]

Shouted out by the goat 🥹🤗

25.11.2024 16:07 — 👍 2 🔁 0 💬 1 📌 0

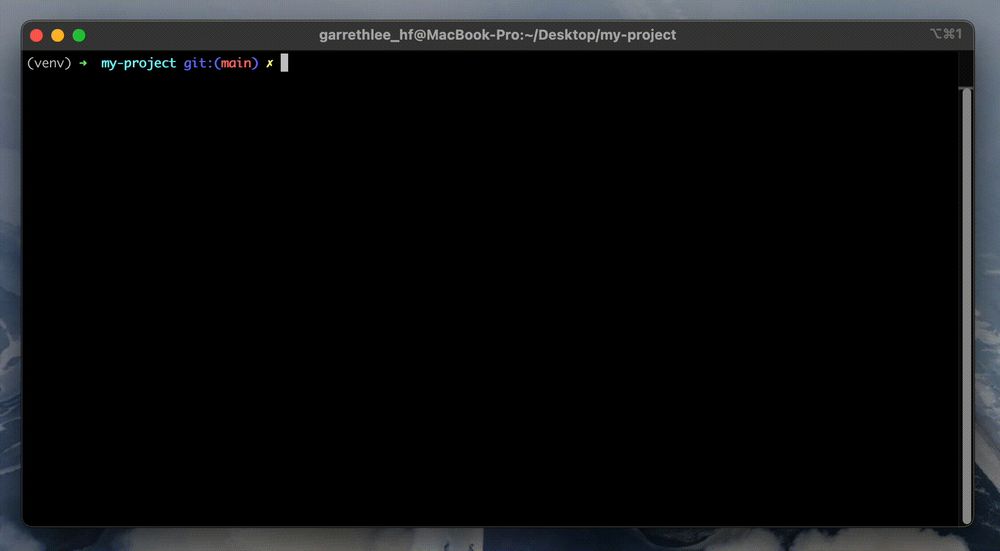

I made a simple CLI tool to write conventional git commit messages using the Hugging Face Inference API 🤗 (with some useful functionality baked into it)

➡️ To install: `pip install gcmt`