🧨 I built something with #Zig!

`tokeni.zig` is a std-only implementation of the Byte Pair Encoding (BPE) algorithm in Zig for tokenizing sequences of text, used by OpenAI (among many others) to tokenize the text when pretraining their large language models!

github.com/alvarobartt/...
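For anyone curious what BPE training looks like, here's a minimal byte-level sketch in Python. It is not taken from `tokeni.zig`; the function names (`most_frequent_pair`, `merge`, `train_bpe`) are made up for illustration, and it skips things real tokenizers usually add, such as regex pre-tokenization and special tokens.

```python
from collections import Counter


def most_frequent_pair(ids):
    """Return the most common adjacent pair of token ids, or None if there is none."""
    counts = Counter(zip(ids, ids[1:]))
    return counts.most_common(1)[0][0] if counts else None


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges over the UTF-8 bytes of `text`."""
    ids = list(text.encode("utf-8"))  # start from raw bytes: ids 0..255
    merges = {}
    for step in range(num_merges):
        pair = most_frequent_pair(ids)
        if pair is None:  # fewer than two tokens left, nothing to merge
            break
        new_id = 256 + step  # new tokens get ids after the byte range
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges


if __name__ == "__main__":
    ids, merges = train_bpe("aaabdaaabac", num_merges=3)
    print(ids, merges)
```

Encoding new text would replay the learned merges in the order they were created, and decoding maps each id back to its bytes.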

13.03.2025 15:50 — 👍 2 🔁 0 💬 0 📌 0

For anyone interested in Zig I wrote a small post titled "How to read and parse JSON with Zig 0.13" that explains how to read JSON from a file with keys with different value types and how to access those values.

10.02.2025 16:15 — 👍 1 🔁 0 💬 1 📌 0

love this quote "working smarter helps, but the real superpower is resting smarter"

a highly recommended read!

03.02.2025 09:05 — 👍 3 🔁 0 💬 0 📌 0

Right, the point is that in Rust you end up "refactoring" a lot (at least I do), but it seems easier to handle, whilst in Zig I don't feel it's as easy; not especially complex either, just more cumbersome

31.01.2025 16:05 — 👍 2 🔁 0 💬 1 📌 0

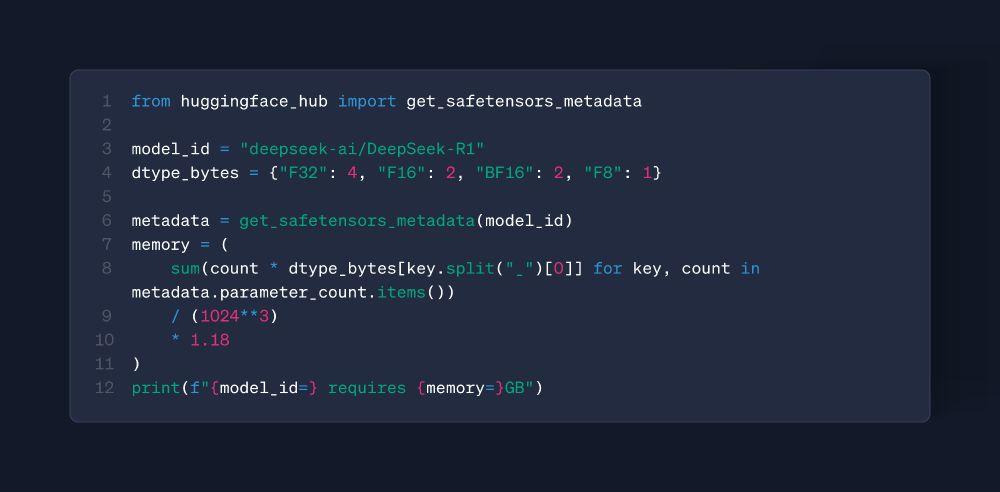

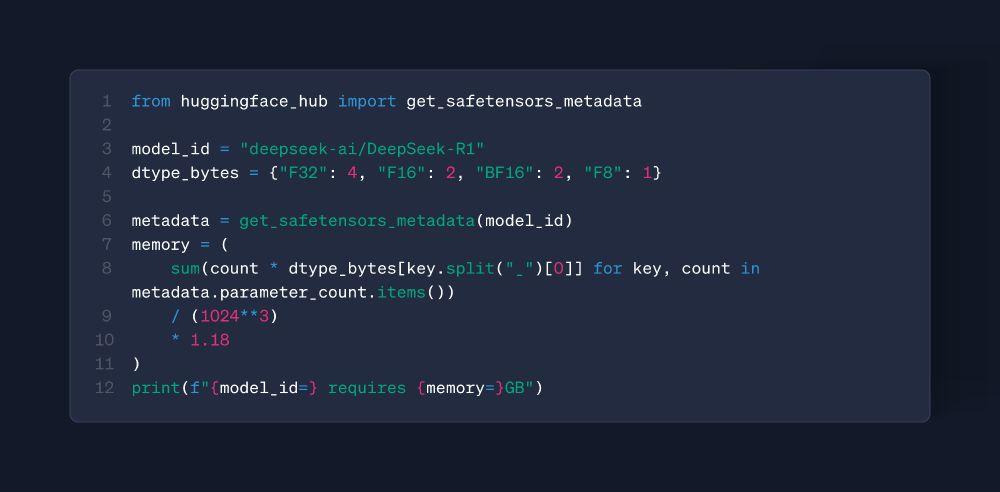

🤗 Here's a simple script that calculates the required VRAM for serving DeepSeek R1 from the safetensors metadata on the @huggingface Hub!

P.S. The result of the script above is: "model_id='deepseek-ai/DeepSeek-R1' requires memory=756.716GB"
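The script itself is only in the screenshots, so here's a rough Python sketch of the general idea rather than a copy of it: sum the bytes needed per dtype from a per-dtype parameter count, like the one the Hub exposes in a model's safetensors metadata. `DTYPE_BYTES`, `estimate_weights_gib` and the `overhead` parameter are hypothetical names, fetching the metadata is left out, and this only covers the weights (no KV cache or activations), so don't expect it to reproduce the exact figure above.

```python
# Bytes per parameter for a few safetensors dtype names (a subset, for illustration).
DTYPE_BYTES = {
    "F64": 8,
    "F32": 4,
    "F16": 2,
    "BF16": 2,
    "F8_E4M3": 1,
    "F8_E5M2": 1,
    "I8": 1,
    "U8": 1,
}


def estimate_weights_gib(parameter_count, overhead=0.0):
    """Estimate the memory needed to hold the weights, in GiB.

    `parameter_count` maps a dtype name to the number of parameters stored
    in that dtype. `overhead` is an optional fraction (e.g. 0.2 for +20%)
    added on top; it is an assumption, not part of any metadata.
    """
    total_bytes = sum(DTYPE_BYTES[dtype] * count for dtype, count in parameter_count.items())
    return total_bytes * (1 + overhead) / 1024**3


if __name__ == "__main__":
    # Made-up counts, only to show the call shape.
    print(f"{estimate_weights_gib({'BF16': 7_000_000_000}):.3f} GiB")
```

An unknown dtype raises a `KeyError` on purpose, so a missing entry in the table is noticed instead of silently counted as zero.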

31.01.2025 16:04 — 👍 4 🔁 0 💬 0 📌 0

hmm refactoring in zig is not as easy as it is in rust, even though it seems fairly common too, right? or is it just me? 🤔

31.01.2025 08:31 — 👍 1 🔁 0 💬 1 📌 0

stuff that matters takes time

29.01.2025 12:28 — 👍 1 🔁 0 💬 0 📌 0

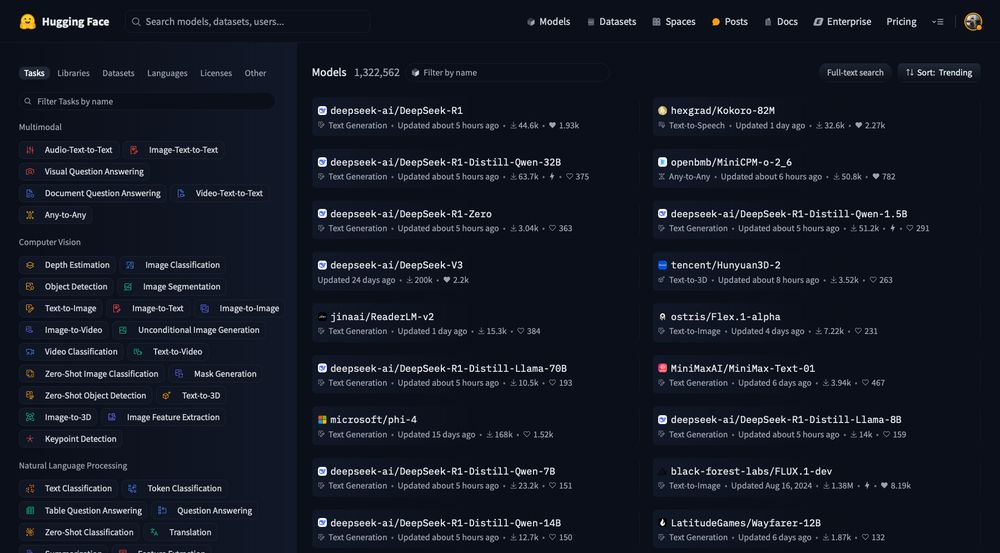

Check out the DeepSeek-R1 collection on the Hugging Face Hub, with not just DeepSeek-R1 and DeepSeek-R1-Zero, but also smaller models fine-tuned on their distilled reasoning patterns!

huggingface.co/collections/...

23.01.2025 13:49 — 👍 1 🔁 0 💬 0 📌 0

🐐 DeepSeek is not on the @hf.co Hub to take part, they are there to take over!

Amazing stuff from the DeepSeek team! ICYMI, they recently released some reasoning models (DeepSeek-R1 and DeepSeek-R1-Zero) that are fully open source and MIT licensed, with performance on par with OpenAI-o1!

23.01.2025 13:45 — 👍 10 🔁 1 💬 1 📌 0

you can find so much gold in github gists wow, i was not a big fan because the discoverability doesn't seem great, but been exploring gists lately and so much gold stuff in there!

23.01.2025 08:10 — 👍 0 🔁 0 💬 0 📌 0

in case anyone missed it, we're running a certified course on ai agents at hugging face starting on feb 2nd; the course is on how to build your own ai agents for different cool use cases built on top of open source!

👇 you can sign up in the link below, don't miss it!

bit.ly/hf-learn-age...

22.01.2025 12:23 — 👍 1 🔁 0 💬 0 📌 0

ok, here we go again 😅

22.01.2025 08:23 — 👍 0 🔁 0 💬 0 📌 0

because it's my native language; it was just an idea though, not sure I'll do it anyway 🤗

28.11.2024 08:42 — 👍 0 🔁 0 💬 0 📌 0

Not quite sure yet about who's following me here, but I may consider not just x-posting but also eventually posting more random thoughts + content in Spanish, is that something you'd be interested in?

27.11.2024 07:43 — 👍 3 🔁 0 💬 1 📌 0

awesome 🤗

20.11.2024 09:40 — 👍 1 🔁 0 💬 0 📌 0

how do I get in there? 🤗

20.11.2024 09:39 — 👍 0 🔁 0 💬 1 📌 0

here we go again!

i work at hugging face and here you can expect posts about machine learning (llms mainly), some rust, some nvim nerdy stuff and anything related to hugging face 🤗

posting is not easy for me, but i’ll try to do better from now on, support is highly appreciated!

20.11.2024 09:30 — 👍 14 🔁 0 💬 2 📌 0

Read more about the Serverless Inference API in the documentation!

https://huggingface.co/docs/api-inference

19.11.2024 16:15 — 👍 0 🔁 0 💬 0 📌 0

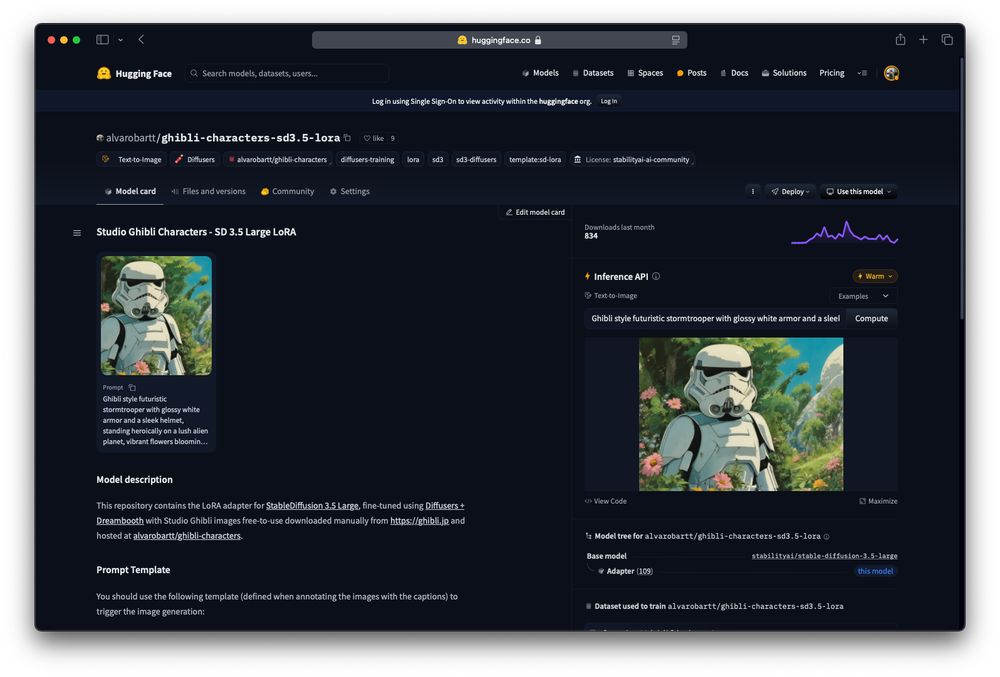

🔥 Finally, if you want to get started quickly and experiment with LLMs, feel free to give the recently released Inference Playground a try!

https://huggingface.co/playground

19.11.2024 16:15 — 👍 0 🔁 0 💬 1 📌 0

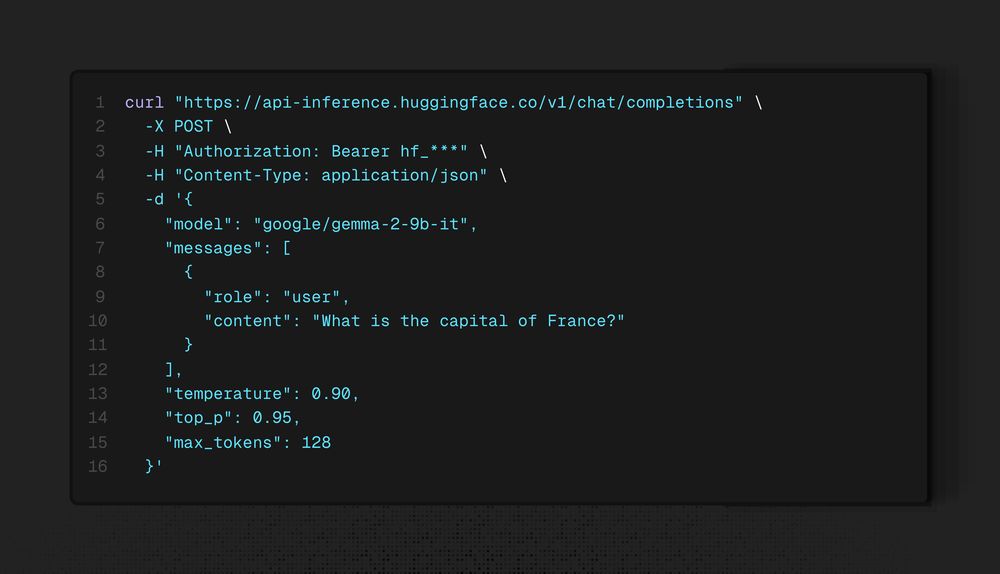

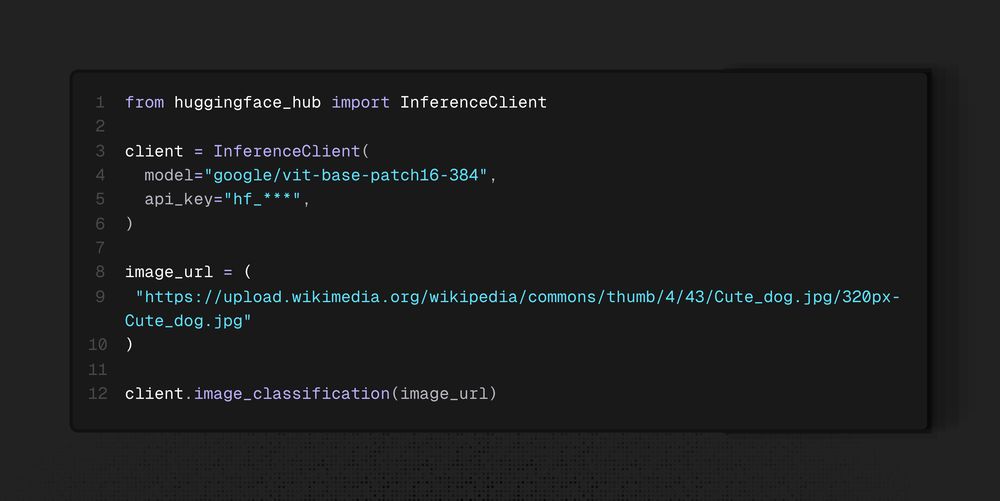

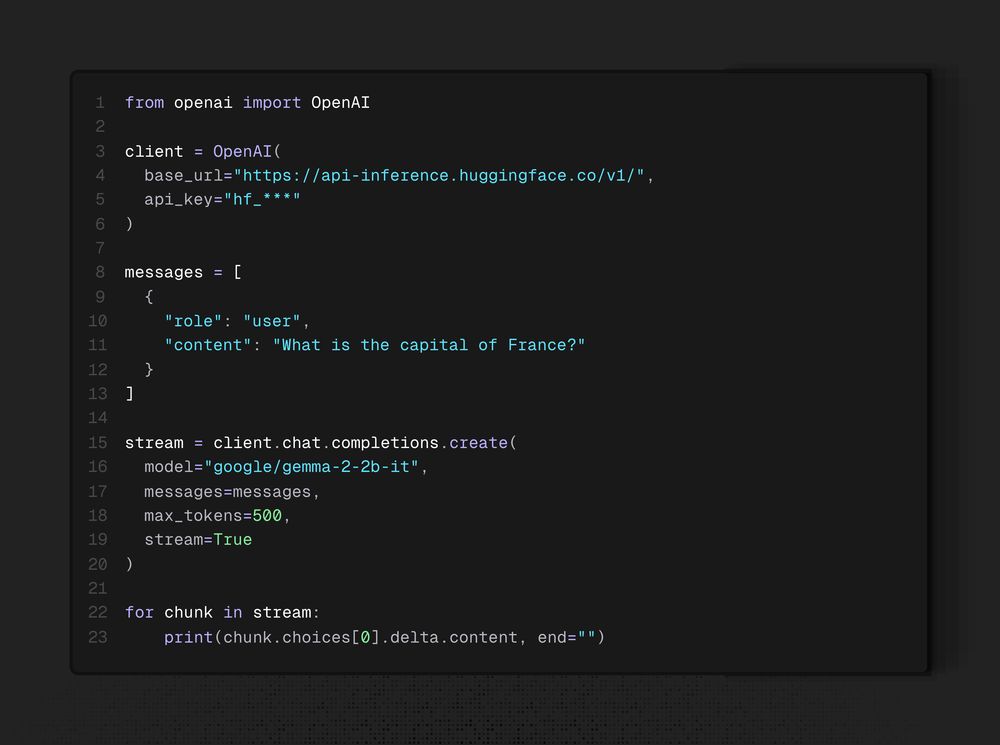

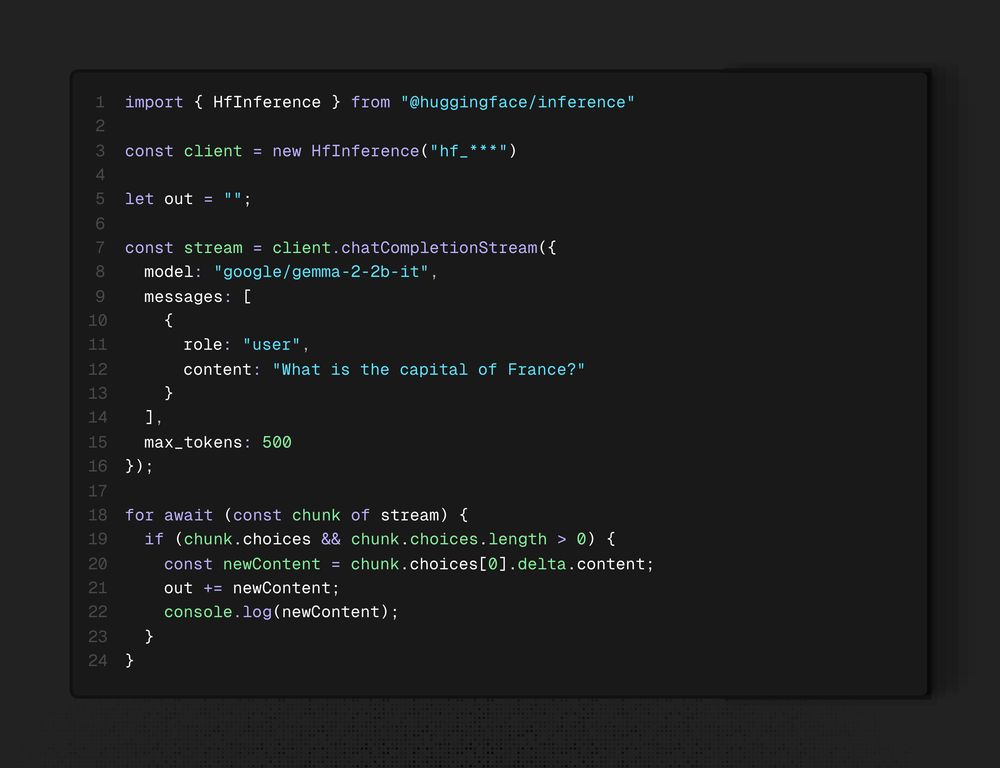

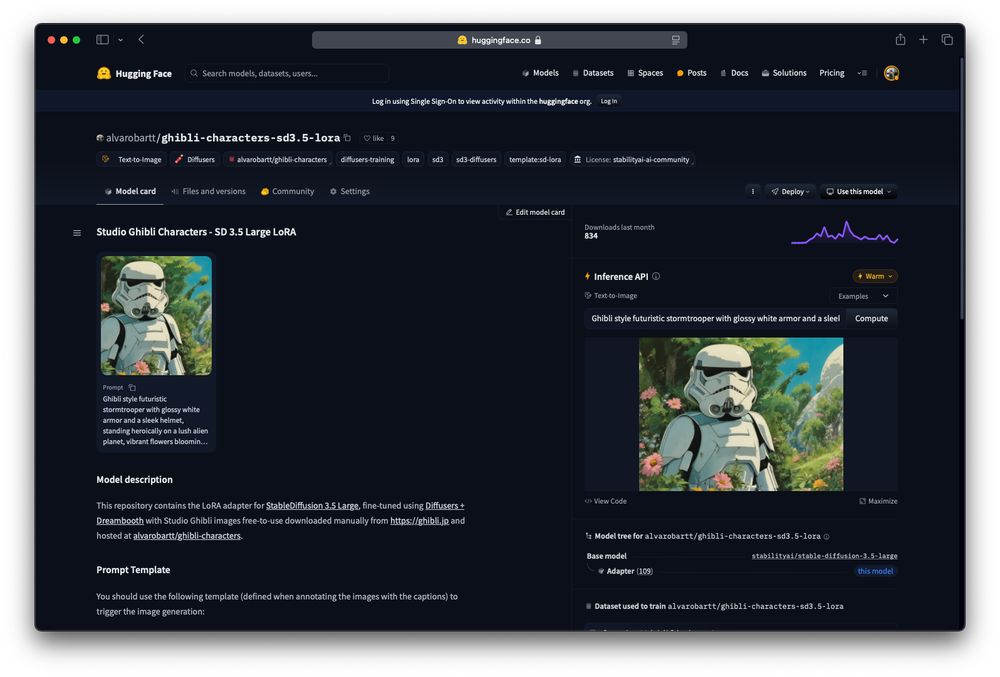

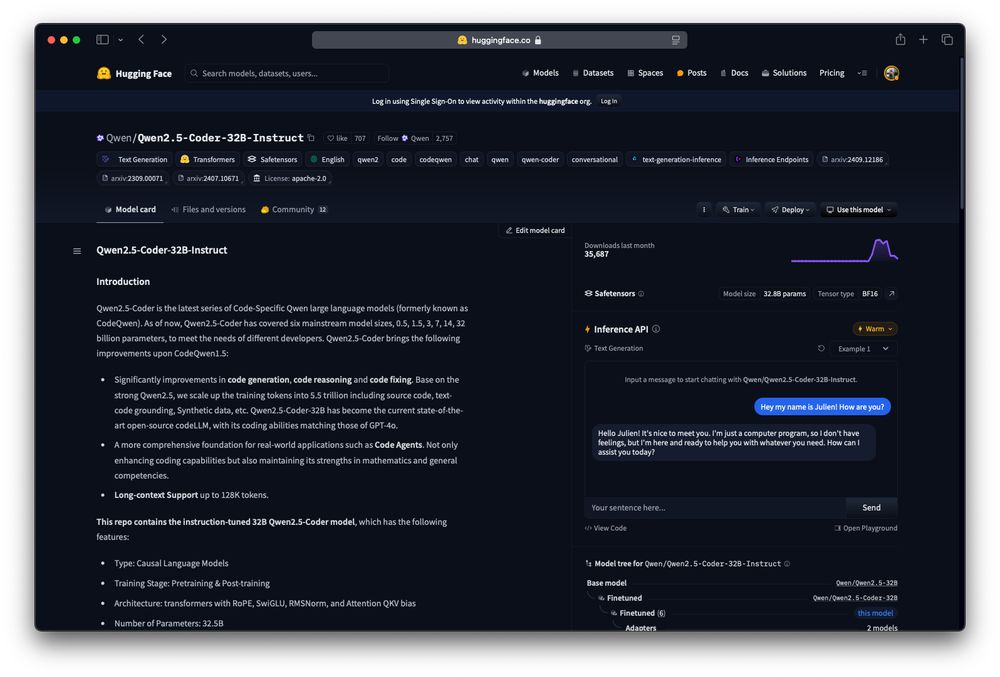

🔎 Now let's explore some of the different alternatives to run inference via the Serverless API!

The most straightforward one is directly on the Hugging Face Hub, from the model card of any model supported by the Serverless API!

19.11.2024 16:15 — 👍 0 🔁 0 💬 1 📌 0

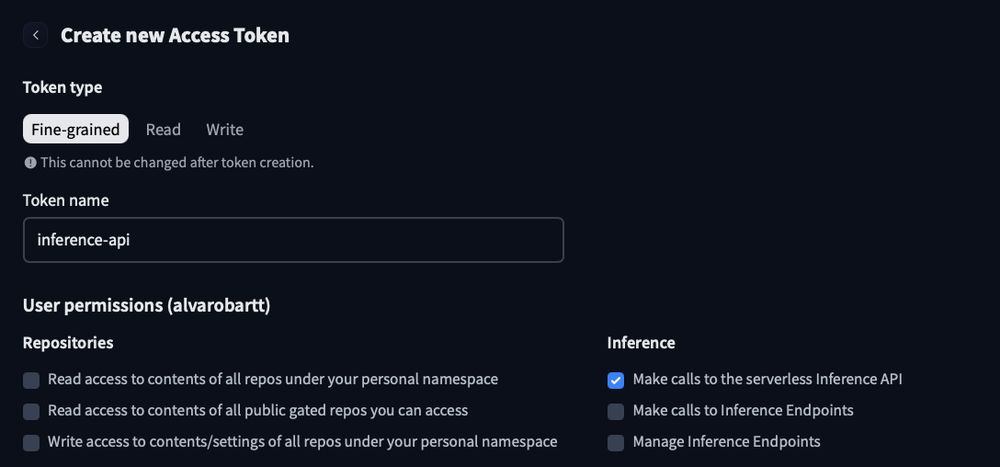

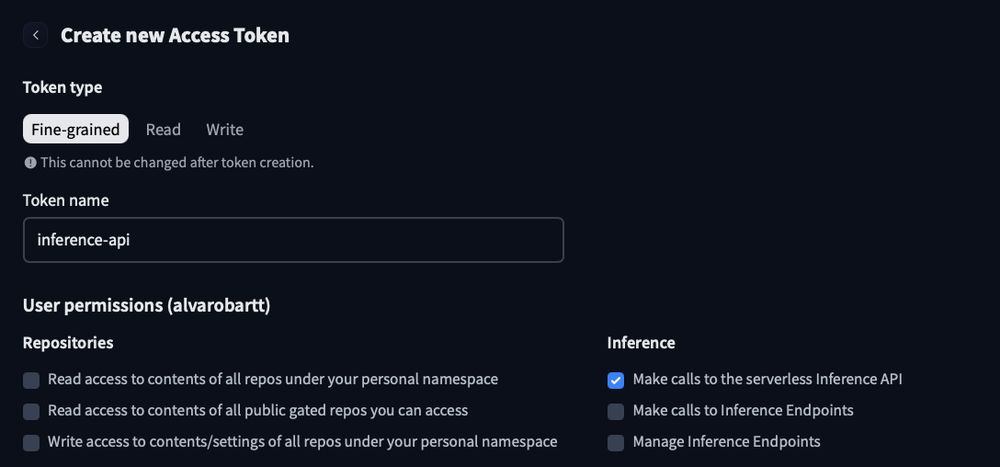

🔒 Before going on, you will first need to generate a Hugging Face fine-grained token with access to the Serverless API. Requests need to be authenticated, so keep the token safe and avoid exposing it!
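One way to keep the token out of your code is to read it from an environment variable and only attach it as a header when building the request. Here's a small Python sketch using just the standard library. `build_request` is a hypothetical helper, `HF_TOKEN` is an assumed variable name, and the URL pattern is the Serverless API endpoint as I understand it was documented when this thread was posted, so double check the docs before relying on it.

```python
import json
import os
import urllib.request

# Assumed endpoint pattern; verify against the current documentation.
API_URL = "https://api-inference.huggingface.co/models/{model_id}"


def build_request(model_id, inputs, token=None):
    """Build (but do not send) an authenticated POST request for `model_id`."""
    # Prefer an explicit token, otherwise fall back to the environment,
    # so the secret never has to be hard-coded in a script or notebook.
    token = token or os.environ.get("HF_TOKEN")
    if not token:
        raise RuntimeError("No token found: set HF_TOKEN or pass token=...")
    payload = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        API_URL.format(model_id=model_id),
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it is then a matter of `urllib.request.urlopen(request)`; the sketch stops before that so nothing leaves your machine until you decide it should.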

19.11.2024 16:15 — 👍 0 🔁 0 💬 1 📌 0

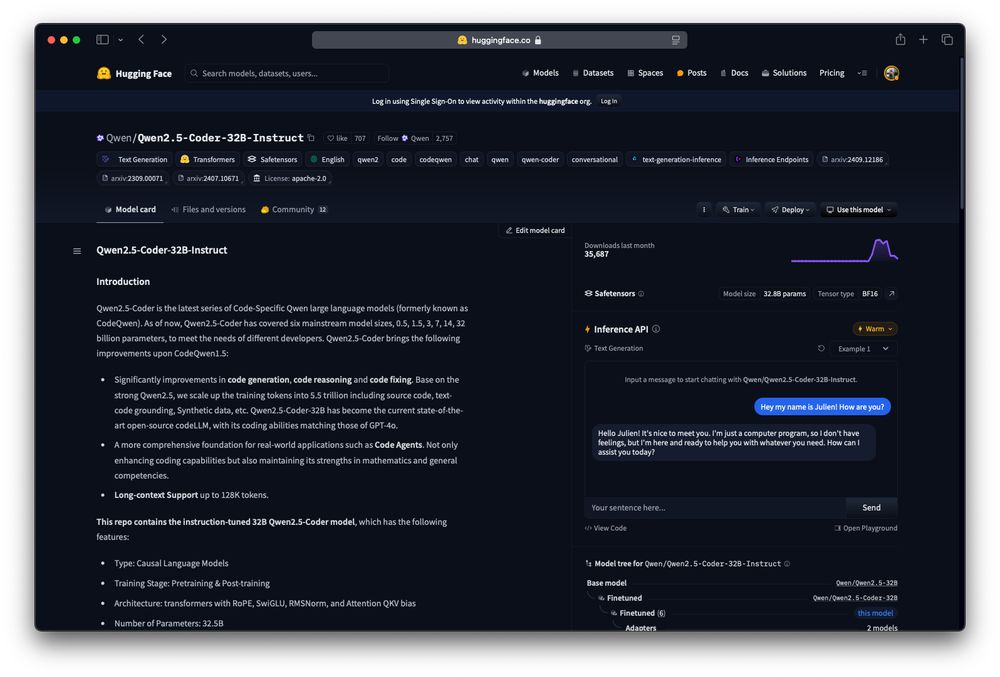

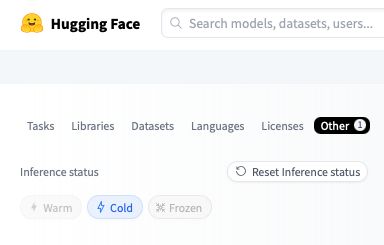

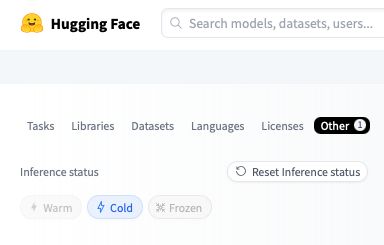

❄️ Additionally, there are a bunch of models (around 1000) that are "Cold", meaning they are not loaded in the Serverless API but can be loaded on demand when a request is sent to them. This also means the first request may take a while until the model is loaded!

19.11.2024 16:15 — 👍 0 🔁 0 💬 1 📌 0

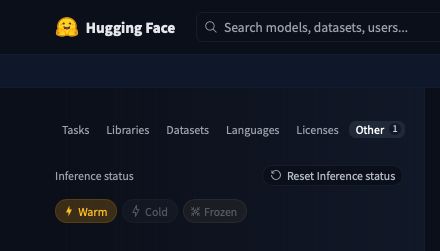

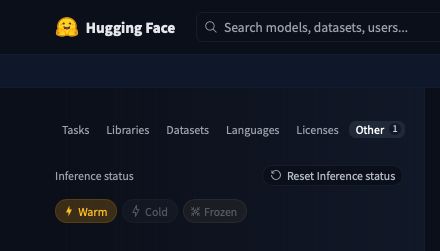

You may be wondering: how do you know which of the over a million models publicly available on the Hub can be used via the Serverless API?

Well, we have the "Warm" tag that indicates that a model is loaded in the Serverless API and ready to be used 🔥

19.11.2024 16:15 — 👍 0 🔁 0 💬 1 📌 0

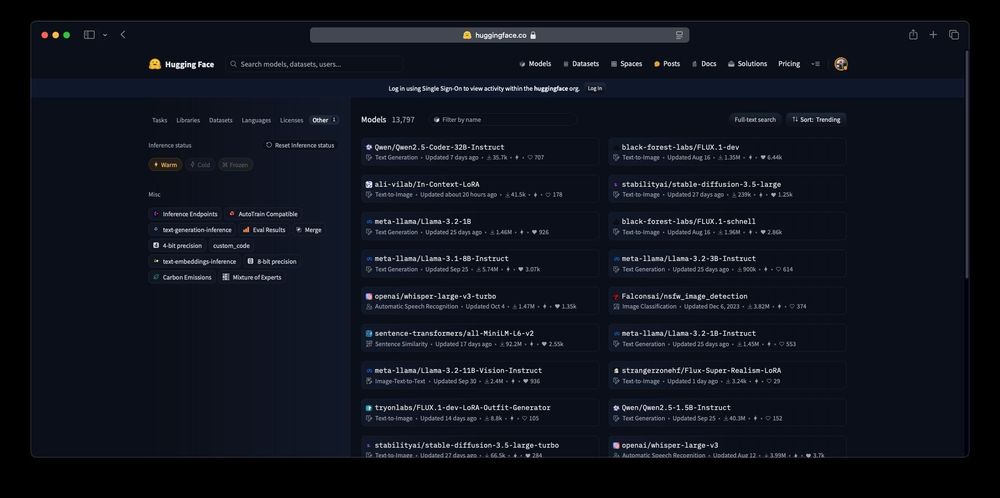

💡 Did you know that you can use over 13700 public open models and adapters on the @huggingface Hub for FREE?

You just need a free account on the Hugging Face Hub (you can also subscribe to PRO to increase the requests per hour)

More details on the thread 🧵

19.11.2024 16:15 — 👍 1 🔁 0 💬 1 📌 0

oh great, just updated mine, thanks for sharing!

19.11.2024 11:42 — 👍 0 🔁 0 💬 0 📌 0
