David Holz made an introduction video showing how to make your own kernels with kernel-builder:

www.youtube.com/watch?v=HS5P...

@danieldk.eu.bsky.social

Machine Learning, Natural Language Processing, LLM, transformers, macOS, NixOS, Rust, C++, Python, Cycling. Working on inference at Hugging Face 🤗. Open source ML 🚀.


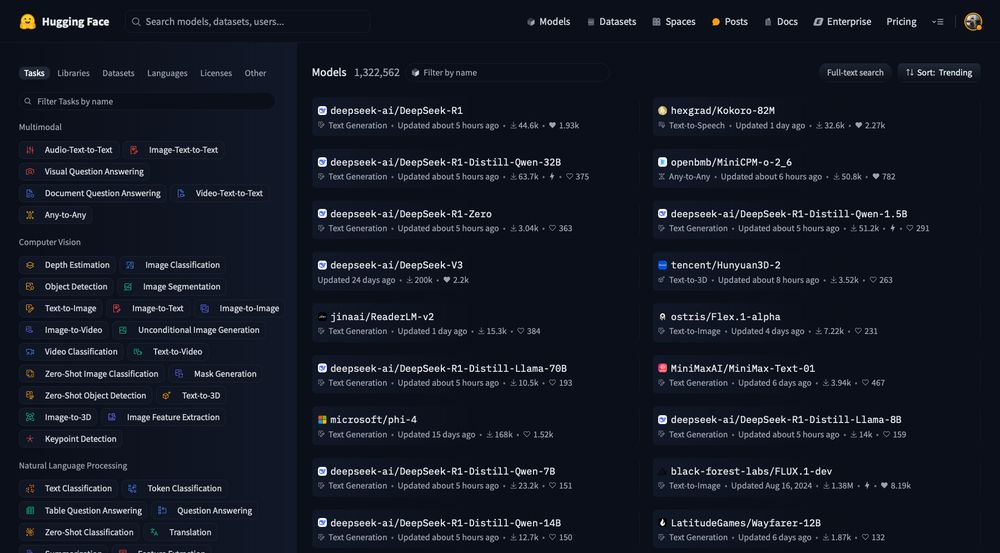

The kernel ecosystem is completely open: you can make your own kernels with kernel-builder, upload them to the hub, and register a mapping using the kernels package, after which transformers will use them.

github.com/huggingface/...

github.com/huggingface/...

Transformers 4.54.0 is out! This release adds support for compute kernels hosted on the Hub. When enabled, transformers can replace PyTorch layer implementations with fast, specialized kernels from the hub.

github.com/huggingface/...

Just released a new version of mktestdocs. It now also supports huggingface docstrings!

github.com/koaning/mkt...

Some of the ModernBERT team is back with new encoder models: Ettin, ranging from tiny to small: 17M, 32M, 68M, 150M, 400M & 1B parameters. They also trained decoder models & checked if decoders could classify & if encoders could generate.

Details in 🧵:

So excited to finally release our first robot today: Reachy Mini

A dream come true: cute and low priced, hackable yet easy to use, powered by open-source and the infinite community.

Read more and order now at huggingface.co/blog/reachy-...

SUSE has released Cavil-Qwen3-4B, a fine-tuned, #opensource #LLM on #HuggingFace. Built to detect #legal text like license declarations, it empowers #devs to stay #compliant. #fast #efficiently. #openSUSE #AI #Licenses news.opensuse.org/2025/06/24/s...

24.06.2025 13:59

Over the past few months, we have worked on the @hf.co Kernel Hub. Kernel Hub allows you to get cutting-edge compute kernels directly from the hub in a few lines of code.

David Holz made a great writeup of how you can use kernels in your projects: huggingface.co/blog/hello-h...

Hi Berlin people! @hugobowne.bsky.social is in town & we're celebrating by hosting a meetup together 🎉 This one is all about building with AI & we'll also open the floor for lightning talks. If you're around, come hang out with us!

📆 June 16, 18:00

📍 Native Instruments (Kreuzberg)

🎟️ lu.ma/d53y9p2u

TGI v3.3.1 is released! This version switches to Torch 2.7 and CUDA 12.8. This should improve support for GPUs with compute capabilities 10.0 (B200) and 12.0 (RTX50x0 and NVIDIA RTX PRO Blackwell GPUs).

github.com/huggingface/...

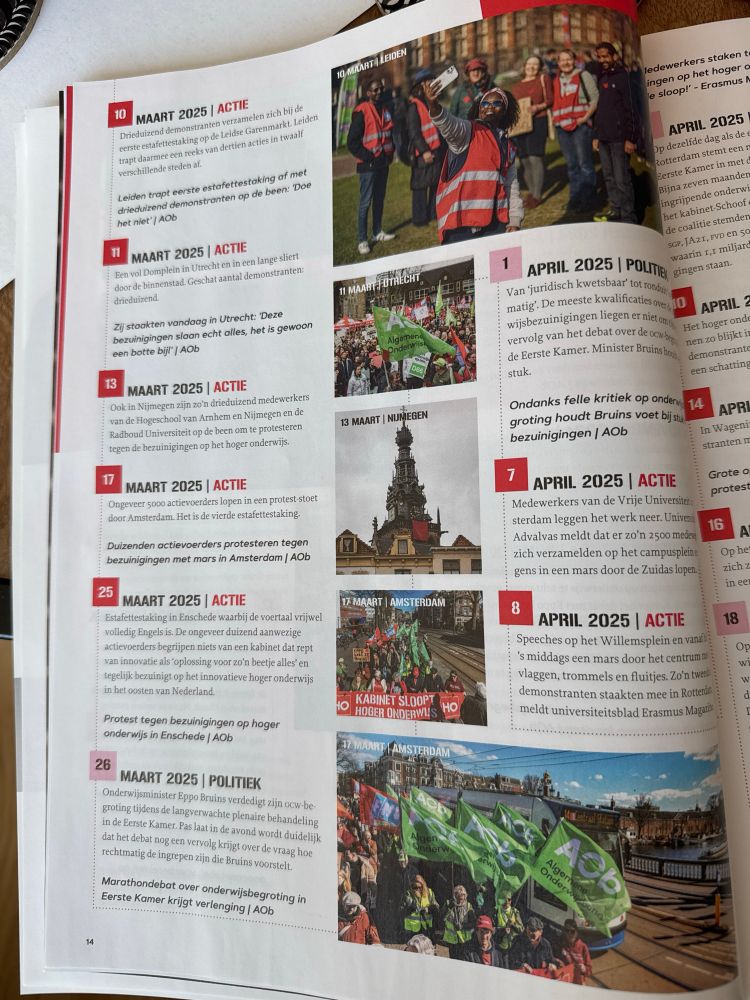

@aob.nl nice timeline of the strikes in the Onderwijsblad, but the 18 March strike at @rug.nl was left out, which is a bit of a shame!

17.05.2025 11:51

We just released text-generation-inference 3.3.0. This release adds prefill chunking for VLMs 🚀. We have also made Gemma 3 faster and use less VRAM by switching to flashinfer for prefills with images.

github.com/huggingface/...

A T-shirt with a Hugging Face emoji wearing a construction hat and the text 'kernels'.

At @hf.co we are also building...

16.04.2025 14:59

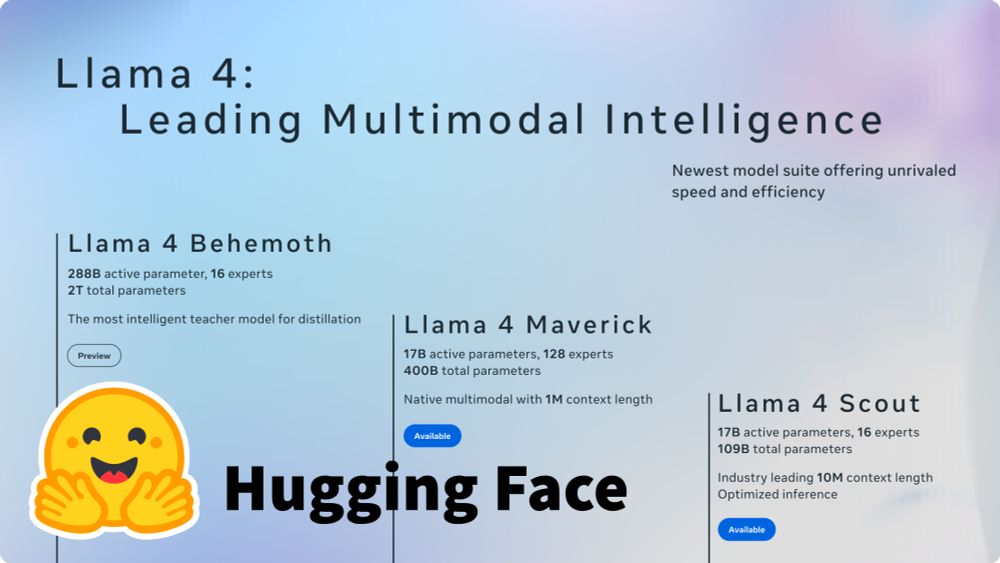

The entire Xet team is so excited to bring Llama 4 to the @hf.co community. Every byte downloaded comes through our infrastructure ❤️ 🤗 ❤️ 🤗 ❤️ 🤗

Read the whole post to see more about these models.

Gemma 3 is live 🔥

You can deploy it directly from endpoints with optimally selected hardware and configurations.

Give it a try 👇

HuggingChat keycap sticker when?

11.03.2025 15:48

A screenshot of the "About Orion" window for the Orion browser. The window features the Orion logo, a starburst design with a central white star and smaller stars on a purple and blue gradient background. The text reads: "Orion. Version 0.0.0.0.1 (WebKit development). Build date Mar 5, 2025. x86_64 (Ubuntu 22.04.5 LTS). Orion Browser by Kagi. Copyright © 2020-2025 Kagi. All rights reserved. Humanize the Web." Below the text are three buttons labeled "Get Orion+," "Send Feedback," and "Licenses." The background is a soft purple gradient.

We're thrilled to announce that development of the Orion Browser for Linux has officially started!

Register here to receive news and early access opportunities throughout the development year: forms.kagi.com?q=orion_linu...

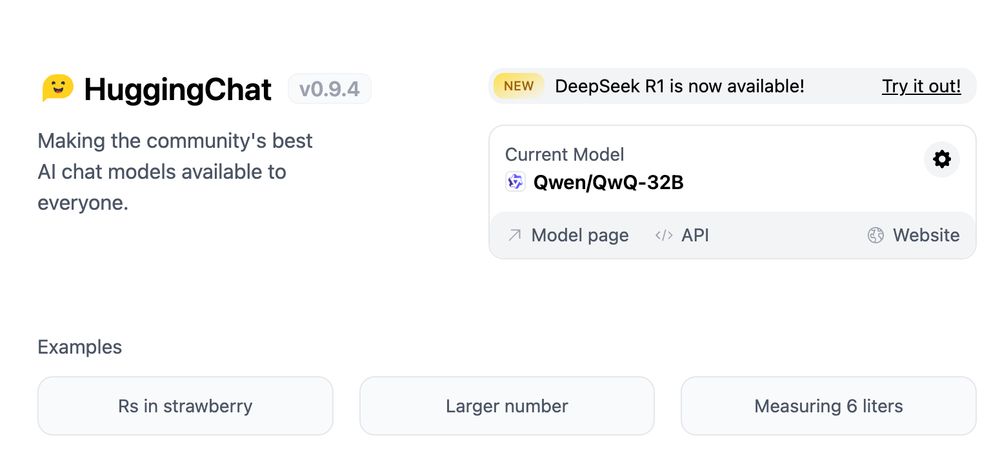

want to try QwQ-32B? it just landed on HuggingChat!

06.03.2025 20:06

Yikes, good to hear you are feeling better.

I had them two years ago while we were on vacation. Best advice from a Danish doctor: take a lot of painkillers and drink enough beer 😁 (or water).

Six months after joining @hf.co we’re kicking off the first migrations from LFS -> Xet backed storage for a handful of repos on the Hugging Face Hub.

A few months ago, I published a timeline of our work and this is a big step (of many!) to bring our storage to the Hub - more in 🧵👇

Followers wanted. Now that we are no longer active on X (general FS account, 150k followers) and Mastodon unfortunately does not seem to be reaching the volume of the old Twitter, I hope Bluesky can take its place. Social media is, after all, a cheap way to inform the public. pls rt

07.02.2025 17:16

Not only is DeepSeek R1 open, you can now run it on your own hardware with Text Generation Inference 3.1.0.

Awesome work by @mohit-sharma.bsky.social and @narsilou.bsky.social !

Want to run DeepSeek R1?

Text-generation-inference v3.1.0 is out and supports it out of the box.

On both AMD and Nvidia!

🐳 DeepSeek is on Hugging Face 🤗

Free for inference!

1K requests for free

20K requests with PRO

Code: https://buff.ly/4glAAa5

900 more models: https://buff.ly/40x1rua

Text-generation-inference v3.0.2 is out.

Basically we can run transformers models (that support flash) at roughly the same speed as native TGI ones.

What this means is broader model support.

Today it unlocks Cohere2, Olmo, Olmo2 and Helium.

Congrats Cyril Vallez

github.com/huggingface/...

🐐 DeepSeek is not on the @hf.co Hub to take part, they are there to take over!

Amazing stuff from the DeepSeek team, ICYMI they recently released some reasoning models (DeepSeek-R1 and DeepSeek-R1-Zero), fully open-source, their performance is on par with OpenAI-o1 and it's MIT licensed!

Hello on the new sky!

22.01.2025 06:06

Hi 👋

22.01.2025 19:26

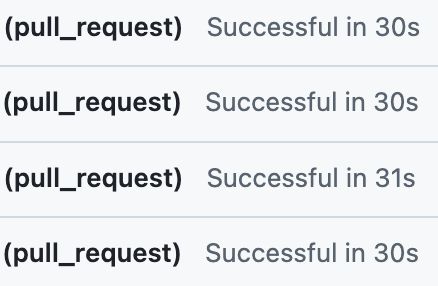

GitHub Actions finishing in 30 seconds

The speed of uv is just insane. I just experimented with using it for a project's CI: installing the project and its dependencies (including Torch) and running some tests takes 30 seconds 🤯.

22.01.2025 12:40