Slamming: Training a Speech Language Model on One GPU in a Day

Slam is a recipe for training high-quality Speech Language Models (SLMs) on one GPU in 24 hours.

Hey,

I added some longer generation examples by enforcing `min_new_tokens`. The model can definitely lose its way a bit more on these, but they're still pretty decent, I think :)

Check it out:

pages.cs.huji.ac.il/adiyoss-lab/...

And feel free to generate anything with a single line of code:

github.com/slp-rl/slamkit

04.03.2025 15:46 — 👍 1 🔁 0 💬 1 📌 0

@gallilmaimon.bsky.social and his team trained a Speech Language Model on a single A5000 GPU in 24 hours

26.02.2025 01:13 — 👍 3 🔁 1 💬 1 📌 0

Slamming: Training a Speech Language Model on One GPU in a Day

We introduce Slam, a recipe for training high-quality Speech Language Models (SLMs) on a single academic GPU in 24 hours. We do so through empirical analysis of model initialisation and architecture, ...

I love papers that make ML training accessible with consumer GPUs. Great example: "Slamming: Training a Speech Language Model on One GPU in a Day" released 3 days ago. The full code and training data are available and reproducible using a 24GB RTX 3090.

- arxiv.org/abs/2502.15814

28.02.2025 16:19 — 👍 12 🔁 1 💬 2 📌 0

We generated samples with a max length, but the model can predict an "end" token before reaching it. One could play with the sampling params to make the model keep talking :)

I will try to find time to generate longer samples, but I also encourage everyone to play around themselves. We tried to make it relatively easy🙏

28.02.2025 17:35 — 👍 2 🔁 0 💬 0 📌 0
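A minimal sketch of the idea in the post above: a minimum length keeps generation going by forbidding the "end" token until enough new tokens exist. Everything here is a toy under stated assumptions: `toy_scores`, `EOS_ID`, and `VOCAB_SIZE` are hypothetical stand-ins, not slamkit code. In Hugging Face transformers, `generate` accepts `min_new_tokens` and `max_new_tokens`, and the loop below only imitates that behaviour with greedy decoding.

```python
import math

EOS_ID = 0       # hypothetical id of the "end" token
VOCAB_SIZE = 5   # toy vocabulary: EOS plus four "speech units"


def toy_scores(generated):
    """Stand-in for a SpeechLM: strongly prefers EOS once 3 tokens exist."""
    scores = [0.0] * VOCAB_SIZE
    scores[1 + len(generated) % (VOCAB_SIZE - 1)] = 1.0  # next "speech unit"
    if len(generated) >= 3:
        scores[EOS_ID] = 5.0
    return scores


def generate(min_new_tokens=0, max_new_tokens=10):
    """Greedy decoding that blocks EOS until min_new_tokens are produced."""
    generated = []
    while len(generated) < max_new_tokens:
        scores = toy_scores(generated)
        if len(generated) < min_new_tokens:
            scores[EOS_ID] = -math.inf  # forbid ending too early
        token = max(range(VOCAB_SIZE), key=scores.__getitem__)
        if token == EOS_ID:
            break
        generated.append(token)
    return generated


short = generate()                   # stops as soon as EOS wins
longer = generate(min_new_tokens=6)  # EOS suppressed for the first 6 tokens
print(len(short), len(longer))
```

Without the minimum, the toy model stops after three tokens; with it, the model is forced to continue, which is also why longer samples can drift more.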

And about this - yes!

We are accepting PRs to add more tokenisers, better optimisers, efficient attention implementations and anything that seems relevant :)

Feel free to reach out 💪

28.02.2025 17:29 — 👍 1 🔁 1 💬 0 📌 0

Hey!

Really pleased you liked our work:) I think with the help of the open source community we can push results even further.

About generation length - the model context is 1024 tokens, roughly 40 seconds of audio, but we used a setup like TWIST for evaluation. Definitely worth testing longer generations!

28.02.2025 17:25 — 👍 2 🔁 0 💬 1 📌 0
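As a rough check of the "1024 is about 40 seconds" figure in the post above, here is the arithmetic as a short sketch. The rate of 25 tokens per second is an assumption, taken from the 25 Hz tokeniser (mhubert-25hz) mentioned elsewhere in this feed; the helper name is hypothetical, and the estimate ignores any deduplication of repeated units, which would stretch the covered duration.

```python
def context_seconds(context_tokens: int, tokens_per_second: float) -> float:
    """Audio duration covered by a context window of speech tokens."""
    return context_tokens / tokens_per_second


# Assumed values: 1024-token context, 25 tokens per second of audio.
print(context_seconds(1024, 25.0))
```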

🔜🗣️It was shown to be really useful for training SpeechLMs. We are working on some stuff now to hopefully make it even easier. More to come soon!💪

11.01.2025 20:00 — 👍 0 🔁 0 💬 0 📌 0

slprl/mhubert-base-25hz · Hugging Face


🚨Attention #speech @hf.co people🤗💬

We added official support for mhubert-25hz from TWIST in transformers. We also converted it from fairseq to HF!

Check it out✌️

huggingface.co/slprl/mhuber...

11.01.2025 20:00 — 👍 0 🔁 0 💬 1 📌 0

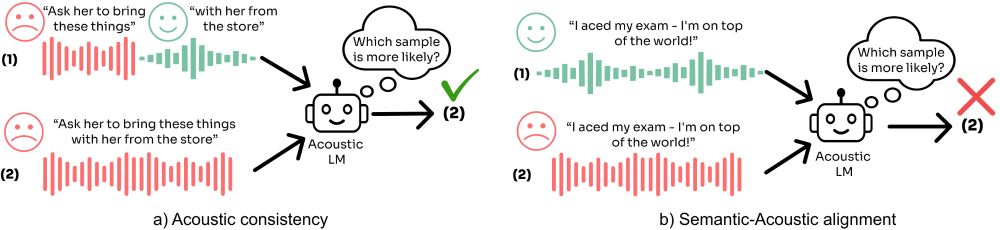

I am thrilled to share that SALMon🍣 got accepted to #ICASSP25

For code, data, preprint and live leaderboard, check out - pages.cs.huji.ac.il/adiyoss-lab/...

w/ Amit Roth and Yossi Adi

21.12.2024 06:11 — 👍 1 🔁 0 💬 0 📌 0

For instance, in my opinion, in this example it feels unlikely that people would use stress to convey these meanings. Happy for all and any suggestions and insights :)

16.12.2024 10:59 — 👍 0 🔁 0 💬 0 📌 0

#Speech people: I am looking for examples (or resources) where stress or emphasis on a phrase changes the meaning of a sentence. This is part of a study on intonation in SpeechLMs.

I gave a decent ChatGPT answer below, but many weren't great...

16.12.2024 10:59 — 👍 1 🔁 0 💬 1 📌 0

🪙 I assume sentiment improved because of style tokens (also shown in STSP metric from SpiritLM). I wonder what is limiting performance - data? modelling? tokens? We welcome suggestions and new SLMs!

28.11.2024 08:39 — 👍 0 🔁 0 💬 1 📌 0

We added SpiritLM to the SALMon🍣 leaderboard! Nice jump in emotion consistency, but still no improvement in jointly modelling text content and acoustics🥲

Think your SLM can do better?💪

links👇

28.11.2024 08:39 — 👍 2 🔁 0 💬 1 📌 1

I've started putting together a starter pack with people working on Speech Technology and Speech Science: go.bsky.app/BQ7mbkA

(Self-)nominations welcome!

19.11.2024 11:13 — 👍 82 🔁 34 💬 44 📌 3

Great list! I’d be happy to join as well :)

27.11.2024 10:03 — 👍 1 🔁 0 💬 0 📌 0

Linguist in AI & CogSci 🧠👩💻🤖 PhD student @ ILLC, University of Amsterdam

🌐 https://mdhk.net/

🐘 https://scholar.social/@mdhk

🐦 https://twitter.com/mariannedhk

Post-doc @ University of Edinburgh. Working on Synthetic Speech Evaluation at the moment.

🇳🇴 Oslo 🏴 Edinburgh 🇦🇹 Graz

PhD Student - Deep Learning & Speech Processing @LeCnam

GitHub: github.com/jhauret

Leader of Conversational Systems Team at the Center for Artificial Intelligence at Adam Mickiewicz University, Poznań. Assistant Professor in the Department of Artificial Intelligence. https://marekkubis.com #AI #NLProc

student @georgemasoncs.bsky.social. NLP with GMNLP. optimistic and curious.

CS PhD student @GeorgeMasonU @ComputacionUBA NLP, Speech &🤎

Language Technologies for Crisis Response, AI + Indigenous People 🌱

http://beluticona.github.io

ML/Speech PhD student in Sheffield

phd @ berkeley. #speechproc.

@ltiatcmu.bsky.social '23 + @scsatcmu.bsky.social '22 + ex-Amazon. eng、華語、tâi-gú

BottleCapAI. LLM. Engineer. Researcher. Speaker. Father.

Deep Learning, speech recognition, language modeling, https://scholar.google.com/citations?user=qrh5CBEAAAAJ&hl=en

Open source, https://github.com/albertz/

PhD Student @CMU, Speech AI Research

https://pyf98.github.io/

Ph.D. student at Kyoto University Language Media lab. Prev. visiting scholar at CMU LTI WAVLab.

Website: https://cromz22.github.io/

PhD Fellow, AI and Sound at @aalborguniversitet.bsky.social

Fulbright Visiting PhD, wavlab at @ltiatcmu.bsky.social

Studying self-supervised learning for speech with a focus on hearing assistive device applications.

PhD Student @ltiatcmu.bsky.social

I work in speech processing.

wanchichen.github.io

Master's student @ltiatcmu.bsky.social, working on speech AI at @shinjiw.bsky.social

PhD student @Trinity College Dublin | Multimodal speech recognition

https://chaufanglin.github.io/

Postdoctoral Scholar Stanford NLP