"The effect of platform on message propagation..." 🙏

30.09.2025 00:02 — 👍 1 🔁 0 💬 0 📌 0

"more work"

30.08.2025 21:42 — 👍 2 🔁 0 💬 0 📌 0

A photograph of Mount Rainier I took from Reflection Lake.

life update: a few weeks ago, I made the difficult decision to move on from Samaya AI. Thank you to my collaborators for an exciting 2 years!! ❤️ Starting next month, I'll be joining Anthropic. Excited for a new adventure! 🦾

(I'm still based in Seattle 🏔️🌲🏕️; but in SF regularly)

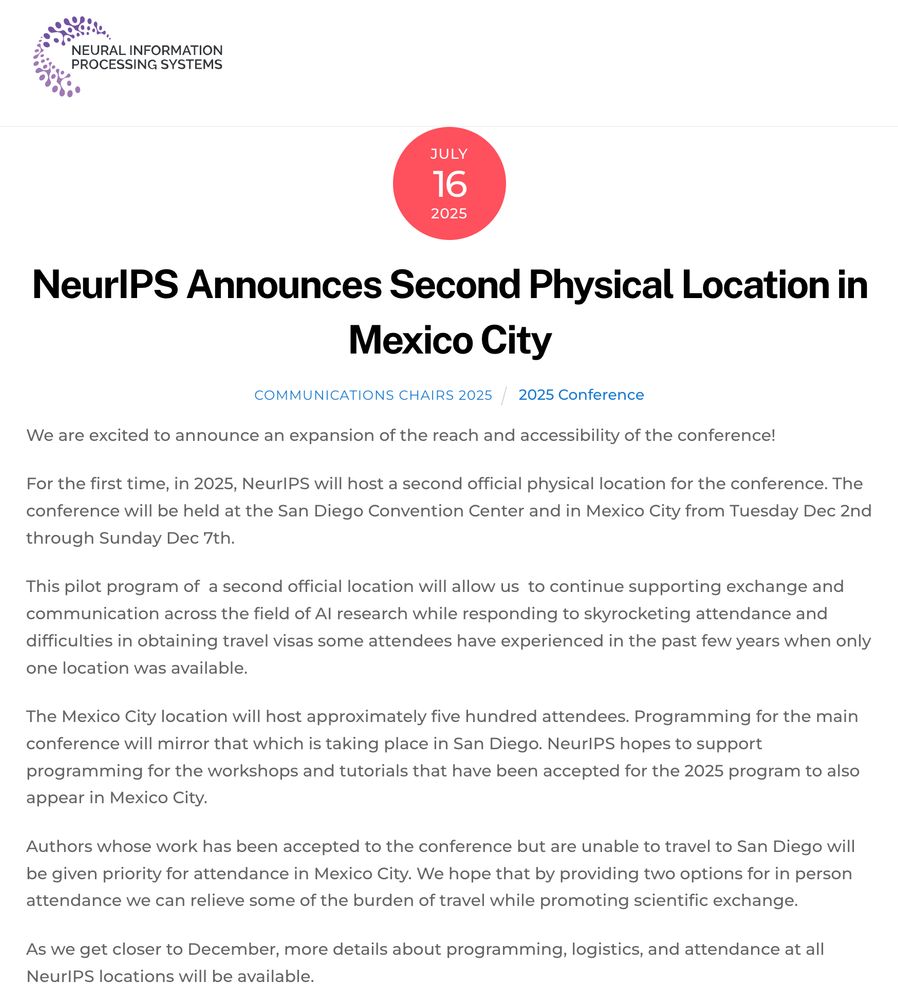

It is a major policy failure that the US cannot accommodate top AI conferences due to visa issues.

buff.ly/DRJOGrB

bring back 8 page neurips papers

24.06.2025 19:04 — 👍 1 🔁 0 💬 0 📌 0

m̶e̶n̶ Americans will literally l̶e̶a̶r̶n̶ ̶e̶v̶e̶r̶y̶t̶h̶i̶n̶g̶ ̶a̶b̶o̶u̶t̶ ̶a̶n̶c̶i̶e̶n̶t̶ ̶R̶o̶m̶e̶ invest billions into self driving cars instead of g̶o̶i̶n̶g̶ ̶t̶o̶ ̶t̶h̶e̶r̶a̶p̶y̶ building transit

20.06.2025 20:26 — 👍 7 🔁 0 💬 0 📌 0

Wulti wodal wodels

10.06.2025 00:03 — 👍 8 🔁 0 💬 2 📌 0

bring back length limits for author responses

06.06.2025 17:56 — 👍 9 🔁 0 💬 1 📌 0

in llm-land, what is a tool, a function, an agent, and (most elusive of all): a "multi-agent system"? (This had been bothering me recently; are all these the same?)

@yoavgo.bsky.social's blog is a clarifying read on the topic -- I plan to adopt his terminology :-)

gist.github.com/yoavg/9142e5...

👍

28.03.2025 07:59 — 👍 1 🔁 0 💬 0 📌 0

If you're in WA and think imposing new taxes on things we want more of (e.g., bikes, transit) is a bad idea, consider contacting your reps using this simple form! <3

27.03.2025 18:35 — 👍 5 🔁 0 💬 0 📌 0

I pinged editors@ about it, they are working on it

02.03.2025 21:12 — 👍 1 🔁 0 💬 0 📌 0

Should you delete softmax from your attention layers? check out Songlin Yang's (sustcsonglin.github.io) tutorial, moderated by @srushnlp.bsky.social, for a beginner-friendly introduction to the why/how/beauty of linear attention :-) www.youtube.com/watch?v=d0HJ...

24.02.2025 20:02 — 👍 4 🔁 1 💬 0 📌 0

I've spent the last two years trying to understand how LLMs might improve middle-school math education. I just published an article in the Journal of Educational Data Mining describing some of that work: "Designing Safe and Relevant Generative Chats for Math Learning in Intelligent Tutoring Systems"

30.01.2025 23:41 — 👍 7 🔁 2 💬 0 📌 0

Very good (technical) explainer answering "How has DeepSeek improved the Transformer architecture?". Aimed at readers already familiar with Transformers.

epoch.ai/gradient-upd...

...... I can't decide if this is better or worse than growing alfalfa in the desert

14.01.2025 22:26 — 👍 3 🔁 0 💬 1 📌 0

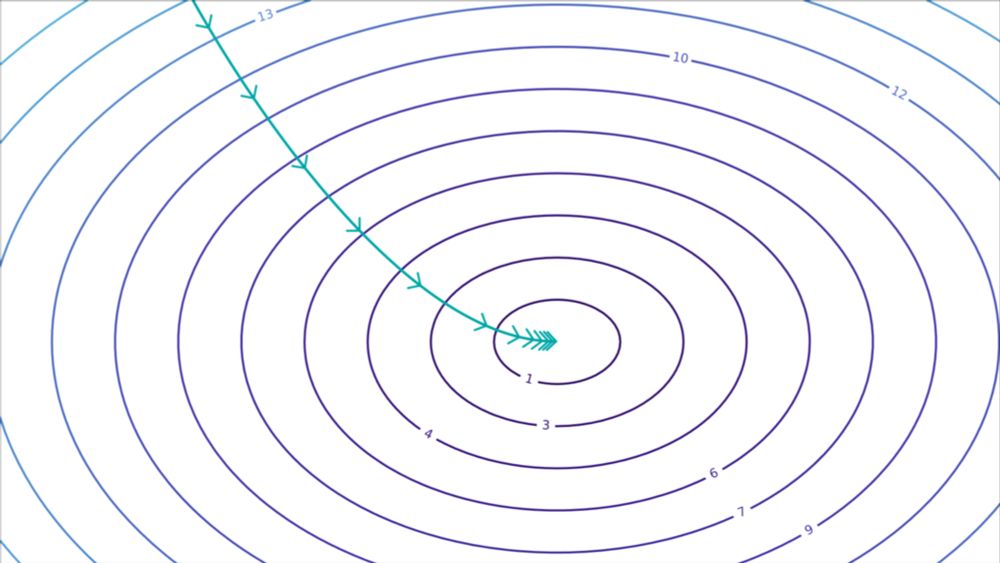

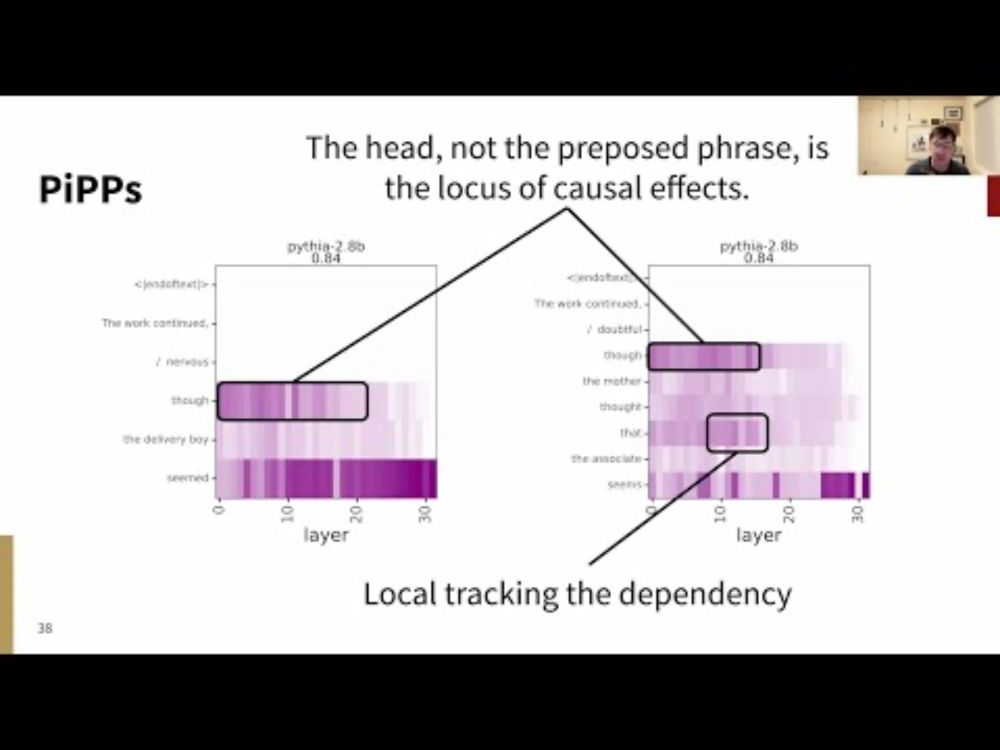

I've posted the practice run of my LSA keynote. My core claim is that LLMs can be useful tools for doing close linguistic analysis. I illustrate with a detailed case study, drawing on corpus evidence, targeted syntactic evaluations, and causal intervention-based analyses: youtu.be/DBorepHuKDM

13.01.2025 02:41 — 👍 74 🔁 20 💬 1 📌 3

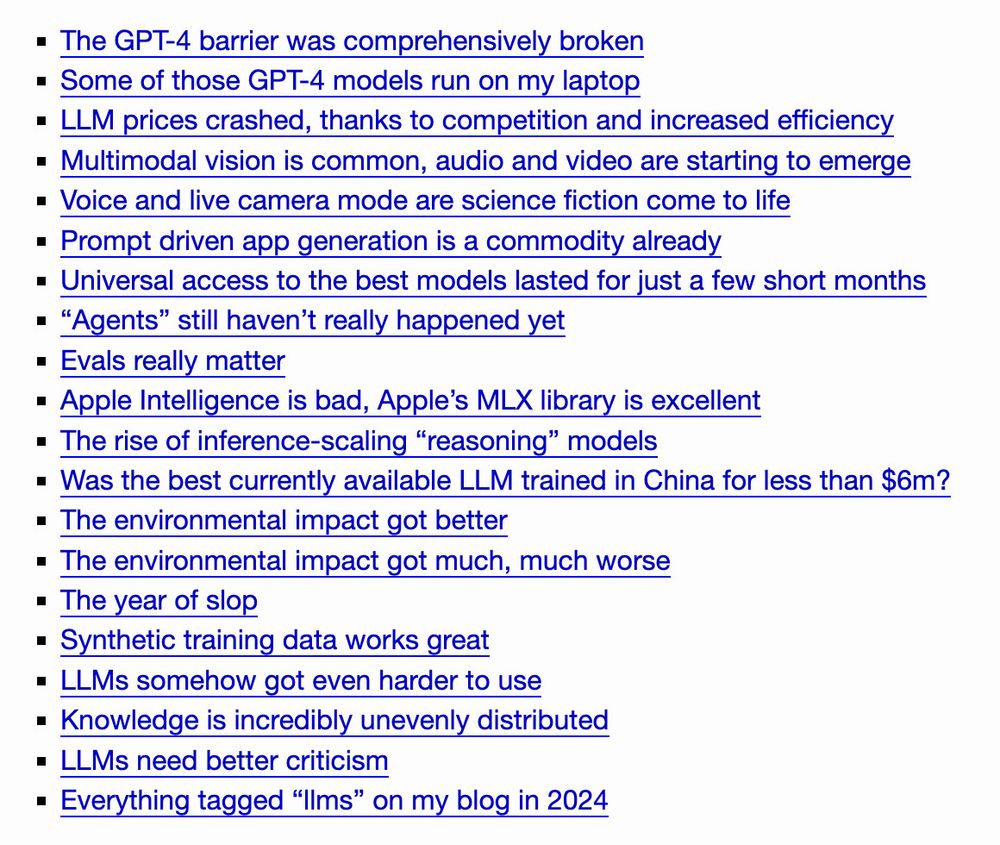

Here's my end-of-year review of things we learned about LLMs in 2024 - we learned a LOT of things simonwillison.net/2024/Dec/31/...

Table of contents:

- The GPT-4 barrier was comprehensively broken
- Some of those GPT-4 models run on my laptop
- LLM prices crashed, thanks to competition and increased efficiency
- Multimodal vision is common, audio and video are starting to emerge
- Voice and live camera mode are science fiction come to life
- Prompt driven app generation is a commodity already
- Universal access to the best models lasted for just a few short months
- “Agents” still haven’t really happened yet
- Evals really matter
- Apple Intelligence is bad, Apple’s MLX library is excellent
- The rise of inference-scaling “reasoning” models
- Was the best currently available LLM trained in China for less than $6m?
- The environmental impact got better
- The environmental impact got much, much worse
- The year of slop
- Synthetic training data works great
- LLMs somehow got even harder to use
- Knowledge is incredibly unevenly distributed
- LLMs need better criticism
- Everything tagged “llms” on my blog in 2024

It's ready! 💫

A new blog post in which I list all the tools and apps I've been using for work, plus all my opinions about them.

maria-antoniak.github.io/2024/12/30/o...

Featuring @kagi.com, @warp.dev, @paperpile.bsky.social, @are.na, Fantastical, @obsidian.md, Claude, and more.

Some of my thoughts on OpenAI's o3 and the ARC-AGI benchmark

aiguide.substack.com/p/did-openai...

Sample and verify go brr

21.12.2024 19:17 — 👍 6 🔁 0 💬 0 📌 0

Check out our new encoder model, ModernBERT! 🤖

Super grateful to have been part of such an awesome team effort and very excited about the gains for retrieval/RAG! 🚀

I'm not an """ AGI """ person or anything, but, I do think process reward model RL/scaling inference compute is quite promising for problems with easily verified solutions like (some) math/coding/ARC problems.

20.12.2024 20:26 — 👍 4 🔁 0 💬 0 📌 0

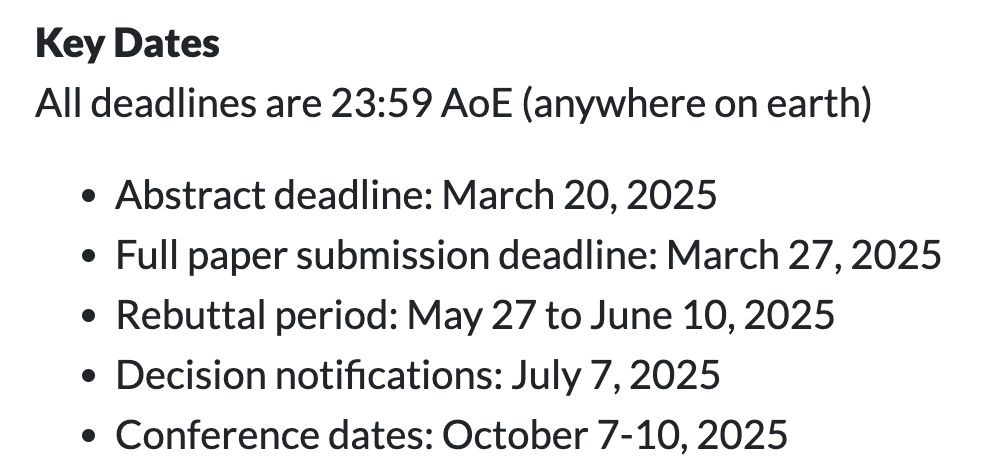

Announcement #1: our call for papers is up! 🎉

colmweb.org/cfp.html

And excited to announce the COLM 2025 program chairs @yoavartzi.com @eunsol.bsky.social @ranjaykrishna.bsky.social and @adtraghunathan.bsky.social

Imo, the reason you don't see more of this is because 1) it's very hard to set up objective, interesting, fair, non-game-able, meaningful, expert-level evals and 2) the incentive for doing this type of careful dataset/environment curation work is not as high as it should be.

16.12.2024 02:38 — 👍 3 🔁 0 💬 0 📌 0

A picture of a transit sign with 4 minute frequencies

Meanwhile in my neighborhood in Seattle we've been fighting 5 years for (1) bus lane and 30 years for a (1) mile bike path

14.12.2024 06:38 — 👍 15 🔁 0 💬 0 📌 0

excited to come to #neurips2024 workshops this weekend --- I'll be around sat/sun to say hi to folks :-)

13.12.2024 01:52 — 👍 9 🔁 0 💬 0 📌 0

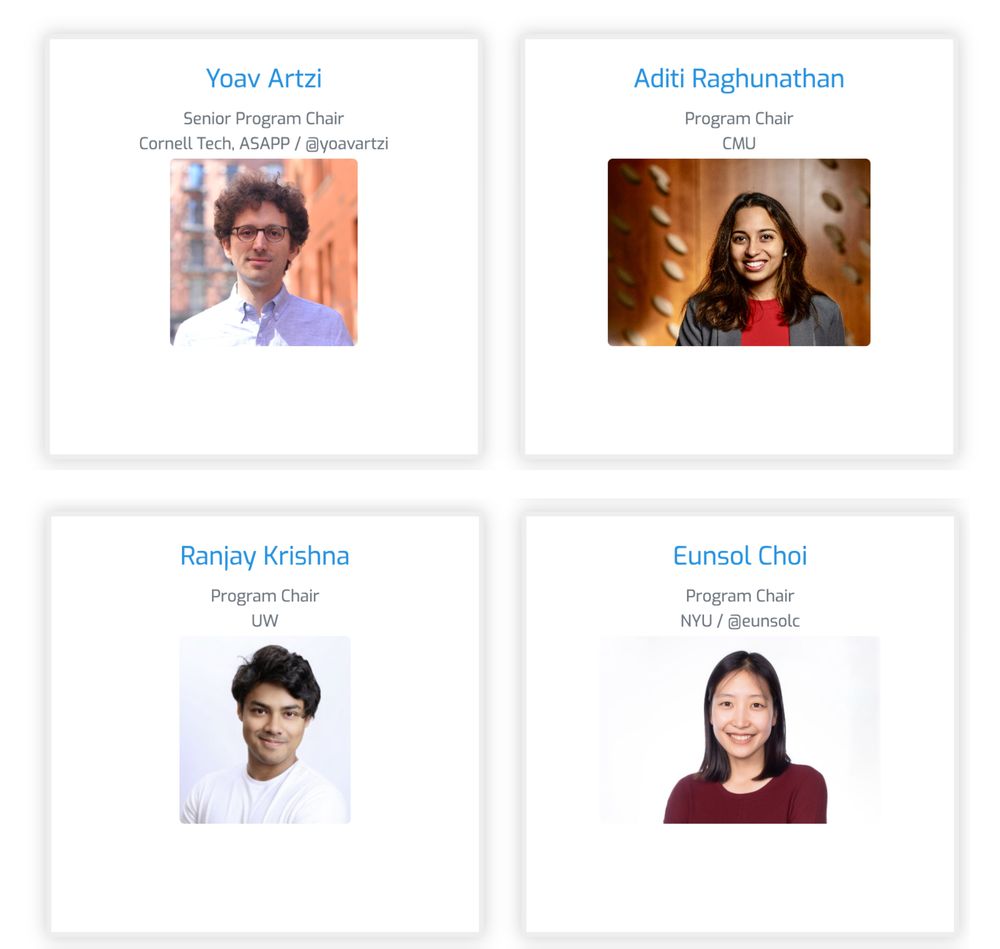

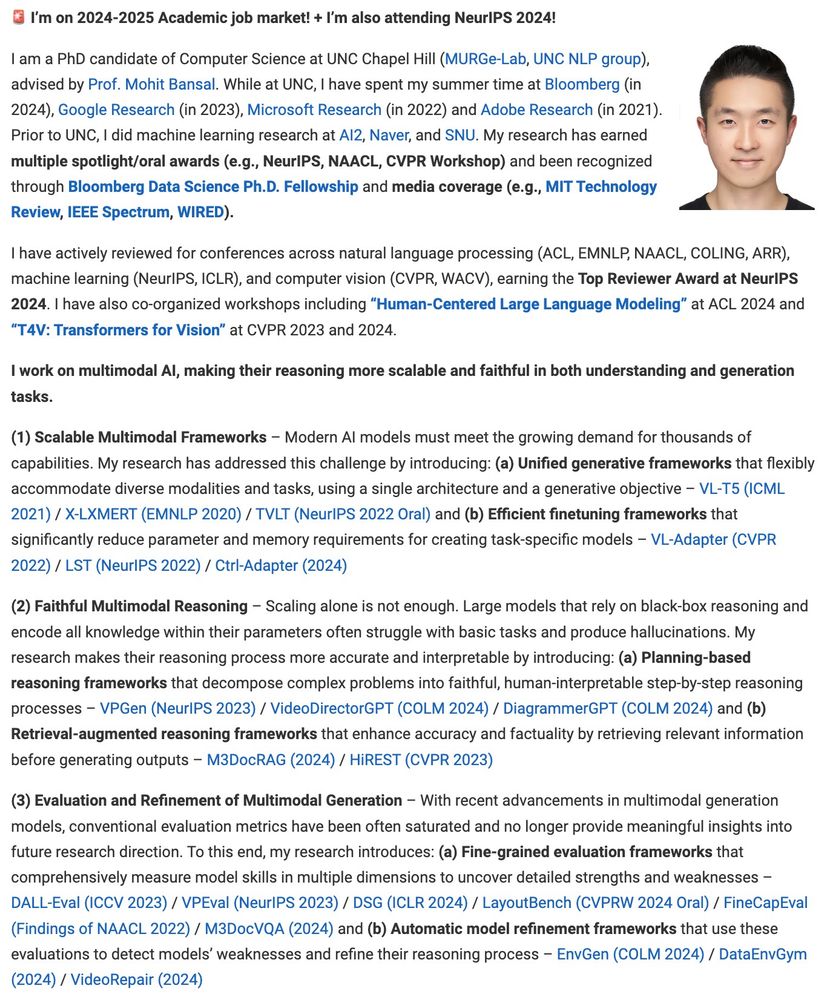

🚨 I’m on the academic job market!

j-min.io

I work on ✨Multimodal AI✨, advancing reasoning in understanding & generation by:

1⃣ Making it scalable

2⃣ Making it faithful

3⃣ Evaluating + refining it

Completing my PhD at UNC (w/ @mohitbansal.bsky.social).

Happy to connect (will be at #NeurIPS2024)!

👇🧵

“They said it could not be done”. We’re releasing Pleias 1.0, the first suite of models trained on open data (either permissively licensed or uncopyrighted): Pleias-3b, Pleias-1b and Pleias-350m, all based on the two trillion tokens set from Common Corpus.

05.12.2024 16:39 — 👍 248 🔁 85 💬 11 📌 19

06.12.2024 02:28 — 👍 31 🔁 3 💬 4 📌 0
