Book cover. A silhouette of a person's head filled with colorful geometric shapes—perhaps symbolizing cognitive resources or deployment thereof. The style is attractive and modern, if generic.

text:

The Rational Use of Cognitive Resources

Falk Lieder, Frederick Callaway, Thomas L. Griffiths

I'm excited to announce that I had my first (co-authored) book published today! "The Rational Use of Cognitive Resources" with Falk Lieder and Tom Griffiths (@cocoscilab.bsky.social). You can read it for free! (see thread)

18.02.2026 01:05 —

👍 142

🔁 45

💬 2

📌 0

We will be releasing edited versions of the interviews in a podcast format over the next few months.

18.12.2025 15:59 —

👍 8

🔁 0

💬 0

📌 0

The book is based in part on interviews conducted over the last decade with researchers including Jerome Bruner, Nick Chater, Noam Chomsky, Susan Dumais, Jeff Elman, Geoff Hinton, Daniel Kahneman, Jay McClelland, Steven Pinker, Eleanor Rosch, Roger Shepard, and Barbara Tversky.

18.12.2025 15:59 —

👍 8

🔁 0

💬 1

📌 0

It explains three major approaches to formalizing thought—rules and symbols, neural networks, and probability and statistics—introducing each idea through the stories of the people behind it.

18.12.2025 15:59 —

👍 8

🔁 0

💬 1

📌 0

The book takes the 19th-century idea that there are Laws of Thought just as there are Laws of Nature (mathematical principles that explain how minds work) and traces that idea through the Cognitive Revolution of the 20th century to a 21st-century perspective on what those Laws might be.

18.12.2025 15:59 —

👍 7

🔁 0

💬 1

📌 0

Links:

Macmillan: us.macmillan.com/books/978125...

Bookshops: bookshop.org/p/books/the-...

Amazon: www.amazon.com/Laws-Thought...

18.12.2025 15:59 —

👍 4

🔁 0

💬 1

📌 0

Excited to announce a new book telling the story of mathematical approaches to studying the mind, from the origins of cognitive science to modern AI! The Laws of Thought will be published in February and is available for pre-order now.

18.12.2025 15:59 —

👍 167

🔁 39

💬 2

📌 5

Employment Opportunities

Find and learn more about our open positions. Join our team

Princeton's AI Lab is advertising positions for AI Postdoctoral Fellows in two areas: studying natural and artificial minds, and designing, understanding or engineering large AI models. We are also searching for a Lead Research Software Engineer! ai.princeton.edu/ai-lab/emplo...

16.12.2025 14:48 —

👍 27

🔁 10

💬 0

📌 1

Our new preprint explores how advances in AI change how we think about the role of symbols in human cognition. As neural networks show capabilities once used to argue for symbolic processes, we need to revisit how we can identify the level of analysis at which symbols are useful.

15.08.2025 18:59 —

👍 27

🔁 2

💬 0

📌 0

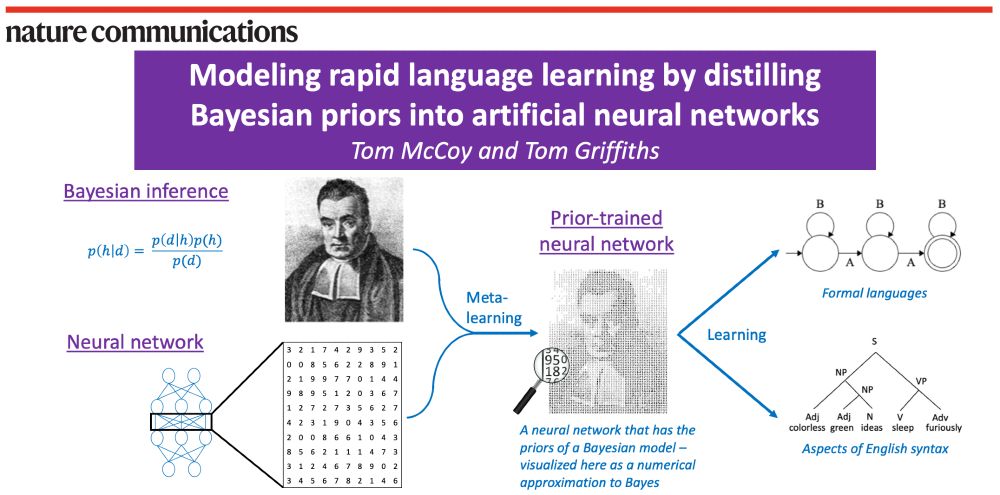

A schematic of our method. On the left are shown Bayesian inference (visualized using Bayes’ rule and a portrait of the Reverend Bayes) and neural networks (visualized as a weight matrix). Then, an arrow labeled “meta-learning” combines Bayesian inference and neural networks into a “prior-trained neural network”, described as a neural network that has the priors of a Bayesian model – visualized as the same portrait of Reverend Bayes but made out of numbers. Finally, an arrow labeled “learning” goes from the prior-trained neural network to two examples of what it can learn: formal languages (visualized with a finite-state automaton) and aspects of English syntax (visualized with a parse tree for the sentence “colorless green ideas sleep furiously”).

🤖🧠 Paper out in Nature Communications! 🧠🤖

Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths?

Our answer: Use meta-learning to distill Bayesian priors into a neural network!

www.nature.com/articles/s41...

1/n

20.05.2025 19:04 —

👍 155

🔁 43

💬 4

📌 1

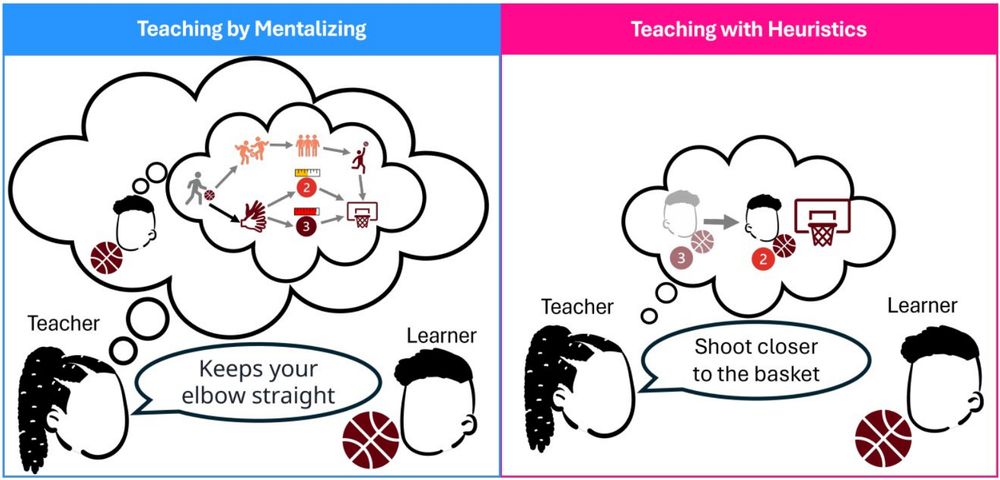

🚨 New preprint alert! 🚨

Thrilled to share new research on teaching!

Work supervised by @cocoscilab.bsky.social, @yaelniv.bsky.social, and @markkho.bsky.social.

This project asks:

When do people teach by mentalizing vs with heuristics? 1/3

osf.io/preprints/os...

19.05.2025 18:44 —

👍 33

🔁 14

💬 2

📌 1

🚨 New in Nature Human Behaviour! 🚨

Binary climate data visuals amplify perceived impact of climate change.

Both graphs in this image reflect equivalent climate change trends over time, yet people consistently perceive climate change as having a greater impact in the right plot than the left.

👇1/n

17.04.2025 18:03 —

👍 246

🔁 87

💬 5

📌 15

New preprint shows that ideas from distributed systems can be used to predict when agents will adopt specialized strategies when working together to perform a task.

26.03.2025 21:05 —

👍 10

🔁 0

💬 0

📌 0

Employment Opportunities

Find and learn more about our open positions. Join our team

The new AI Lab at Princeton has positions for AI Postdoctoral Research Fellows for three research initiatives: AI for Accelerating Invention, Natural and Artificial Minds, and Princeton Language and Intelligence. Deadline is 12/31. More information here: ai.princeton.edu/ai-lab/emplo...

10.12.2024 14:41 —

👍 25

🔁 7

💬 1

📌 1

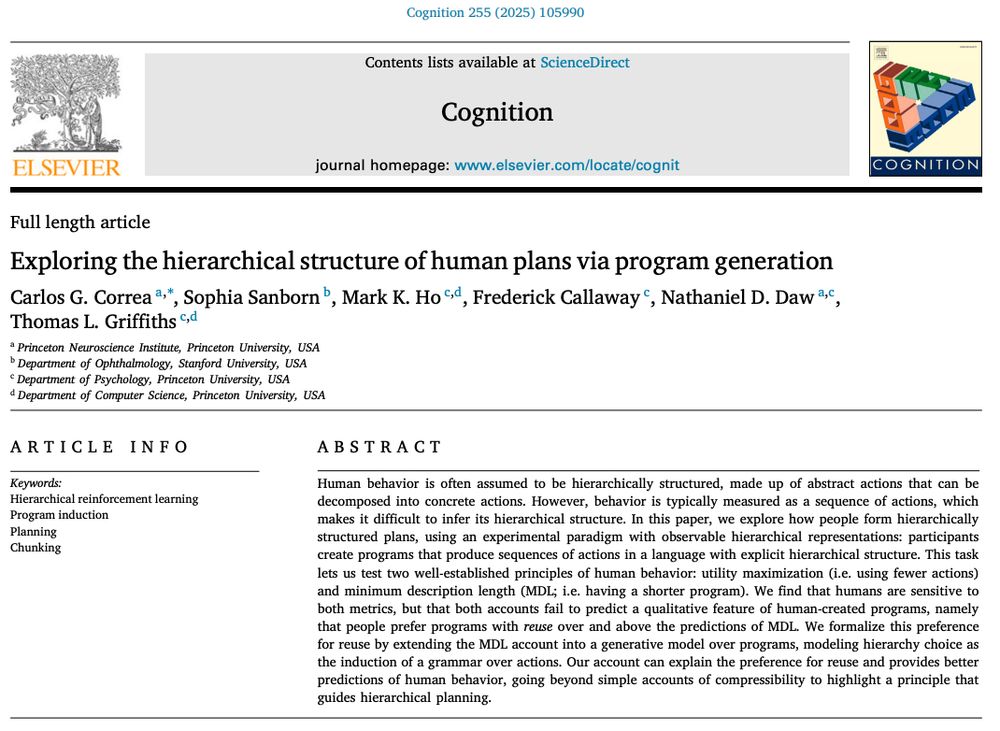

My paper on hierarchical plans is out in Cognition!🎉

tldr: We ask participants to generate hierarchical plans in a programming game. People prefer to reuse beyond what standard accounts predict, which we formalize as induction of a grammar over actions.

authors.elsevier.com/a/1kBQr2Hx2x...

03.12.2024 15:37 —

👍 100

🔁 37

💬 1

📌 3

(5/5) Thanks to the many contributors to the book! @markkho.bsky.social @norijacoby.bsky.social @eringrant.bsky.social @fredcallaway.bsky.social @tomerullman.bsky.social @jhamrick.bsky.social @tobigerstenberg.bsky.social @spiantado.bsky.social @noahdgoodman.bsky.social @ebonawitz.bsky.social

18.11.2024 16:25 —

👍 20

🔁 1

💬 0

📌 0

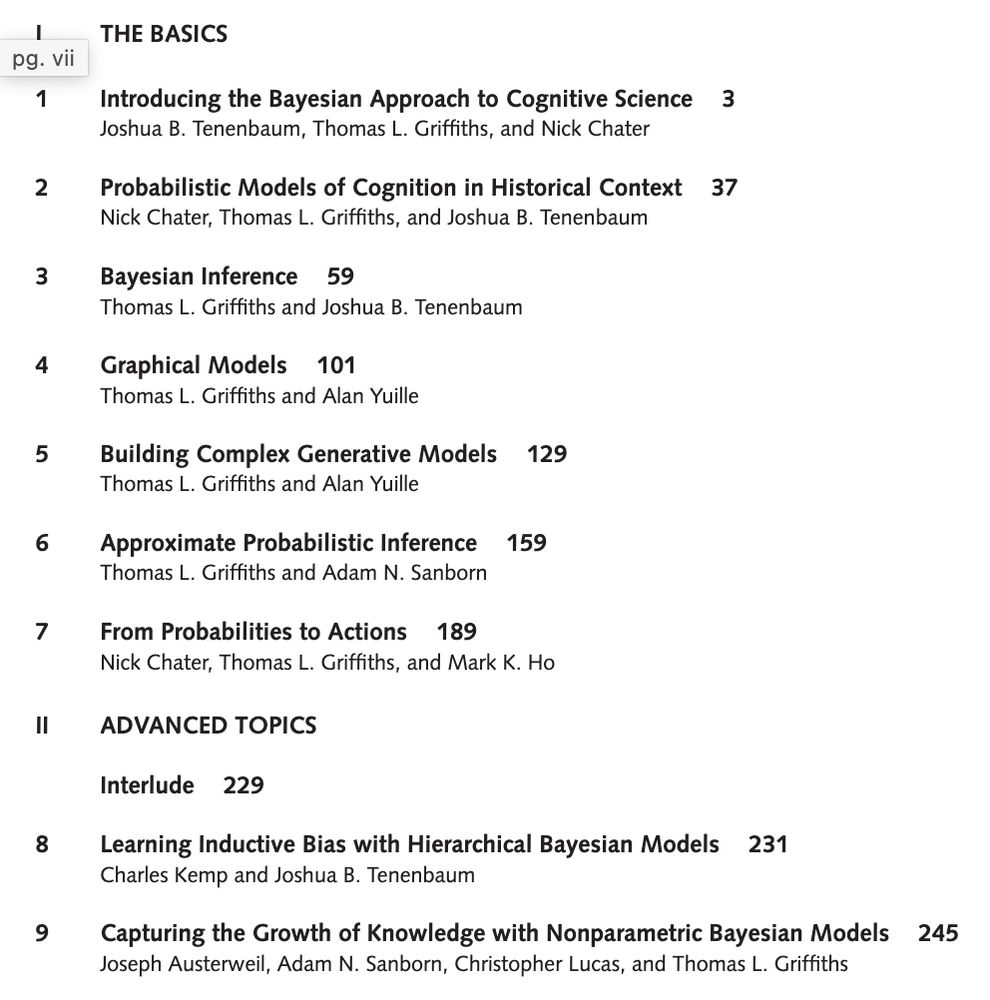

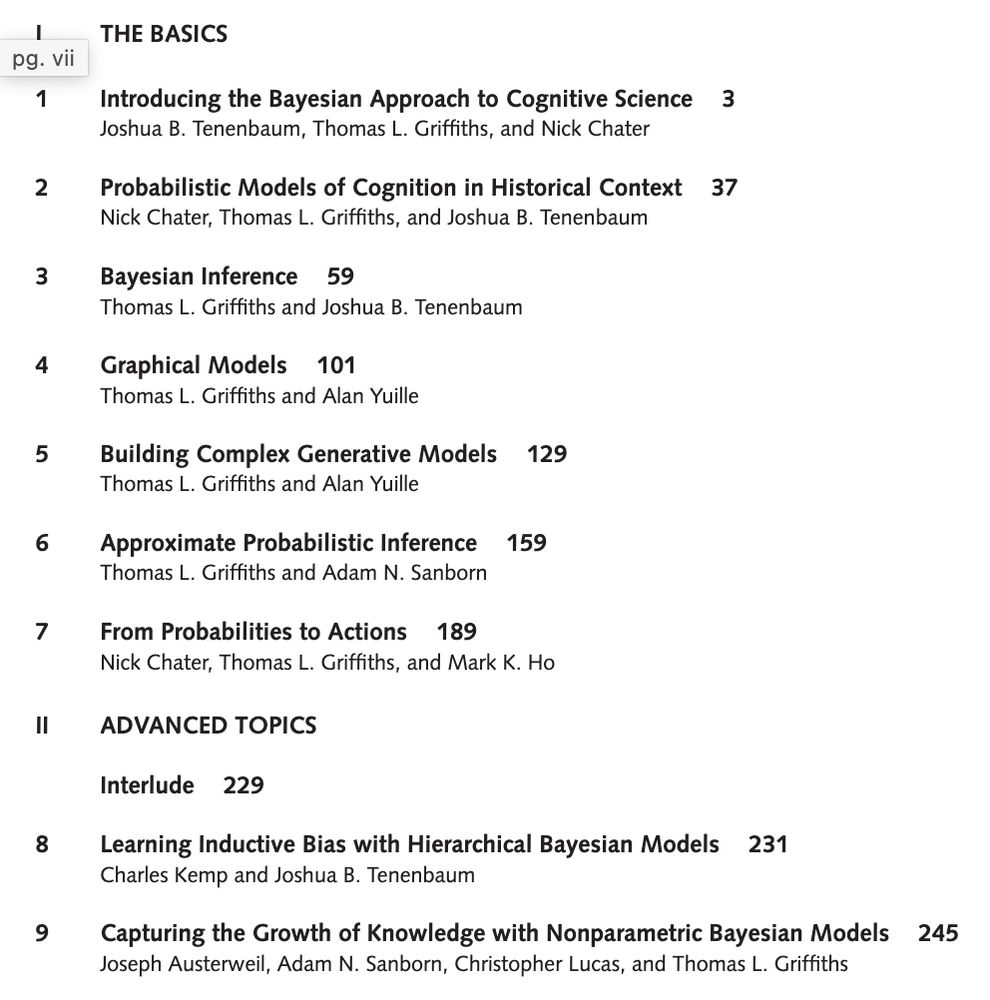

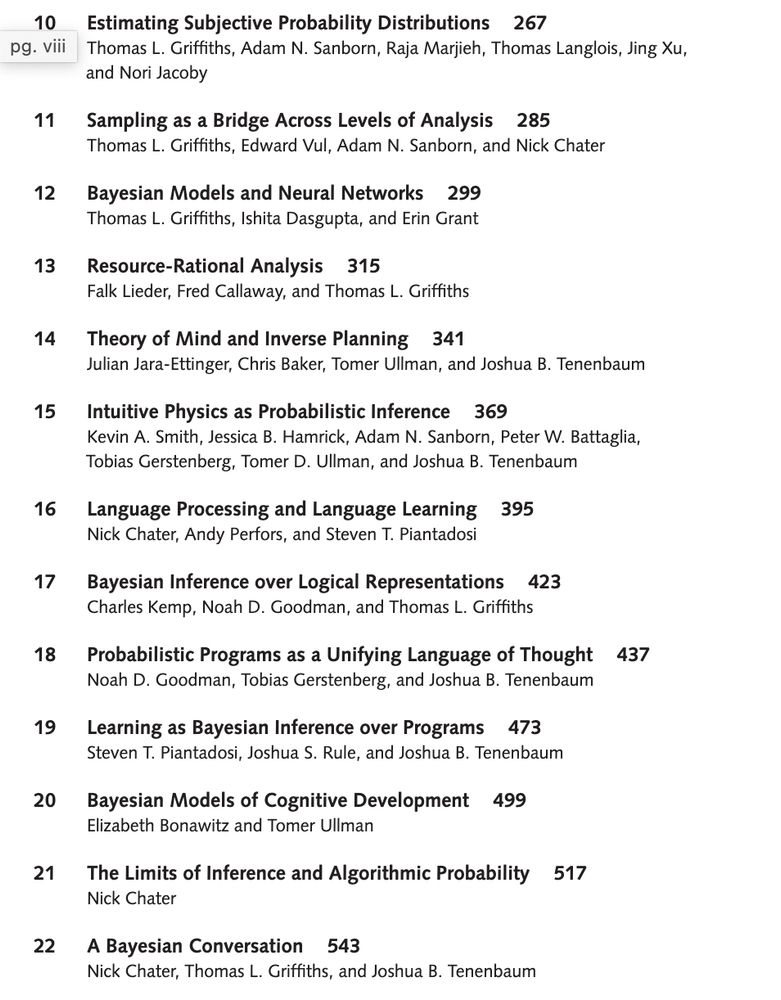

(4/5) Here's the table of contents. An Open Access version of the book is available through the MIT Press website.

18.11.2024 16:25 —

👍 28

🔁 2

💬 1

📌 0

(3/5) That same perspective is valuable for understanding modern AI systems. In particular, Bayesian models highlight the inductive biases that make it possible for humans to learn from small amounts of data, and give us tools for building machines with the same capacity.

18.11.2024 16:25 —

👍 9

🔁 0

💬 1

📌 0

(2/5) Bayesian models start by considering the abstract computational problems intelligent systems have to solve and then identifying their optimal solutions. Those solutions can help us understand why people do the things they do.

18.11.2024 16:25 —

👍 13

🔁 0

💬 1

📌 0

(1/5) Very excited to announce the publication of Bayesian Models of Cognition: Reverse Engineering the Mind. More than a decade in the making, it's a big (600+ pages) beautiful book covering both the basics and recent work: mitpress.mit.edu/978026204941...

18.11.2024 16:25 —

👍 521

🔁 119

💬 15

📌 15

(1) Vision language models can explain complex charts & decode memes, but struggle with simple tasks young kids find easy - like counting objects or finding items in cluttered scenes! Our 🆒🆕 #NeurIPS2024 paper shows why: they face the same 'binding problem' that constrains human vision! 🧵👇

15.11.2024 03:09 —

👍 86

🔁 25

💬 5

📌 4

Application for Postdoctoral Research Associate

We are advertising a new postdoctoral position in computational cognitive science, with specific interest in applications of large language models in cognitive science and use of Bayesian methods and metalearning to understand human cognition and AI systems. www.princeton.edu/acad-positio...

11.01.2024 14:43 —

👍 8

🔁 2

💬 0

📌 0

First post! Does the success of deep neural networks in creating AI systems mean Bayesian models are no longer relevant? Our new paper argues the opposite: these approaches are complementary, creating new opportunities to use Bayes to understand intelligent machines.

arxiv.org/abs/2311.10206

01.12.2023 13:57 —

👍 11

🔁 2

💬 0

📌 0