Some personal updates:

- I've completed my PhD at @unccs.bsky.social! 🎓

- Starting Fall 2026, I'll be joining the CS dept. at Johns Hopkins University @jhucompsci.bsky.social as an Assistant Professor 💙

- Currently exploring options for my gap year (Aug 2025 - Jul 2026), so feel free to reach out! 🔎

20.05.2025 17:58 — 👍 27 🔁 5 💬 3 📌 2

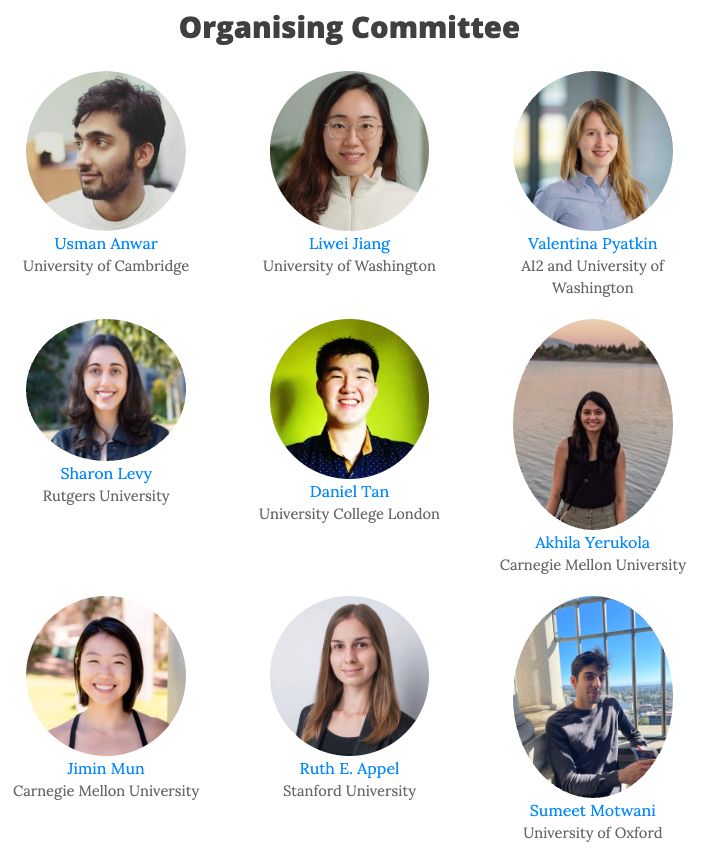

📢 The SoLaR workshop will be co-located with COLM!

@colmweb.org

SoLaR is a collaborative forum for researchers working on responsible development, deployment and use of language models.

We welcome both technical and sociotechnical submissions, deadline July 5th!

12.05.2025 15:25 — 👍 16 🔁 6 💬 1 📌 0

🚨 Introducing our @tmlrorg.bsky.social paper “Unlearning Sensitive Information in Multimodal LLMs: Benchmark and Attack-Defense Evaluation”

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

07.05.2025 18:54 — 👍 10 🔁 8 💬 1 📌 0

Thank you!

06.05.2025 03:01 — 👍 0 🔁 0 💬 0 📌 0

Thanks @niranjanb.bsky.social!

06.05.2025 03:00 — 👍 1 🔁 0 💬 0 📌 0

🔥 BIG CONGRATS to Elias (and UT Austin)! Really proud of you -- it has been a complete pleasure to work with Elias and see him grow into a strong PI on *all* axes 🤗

Make sure to apply for your PhD with him -- he is an amazing advisor and person! 💙

05.05.2025 22:00 — 👍 12 🔁 4 💬 1 📌 0

Thank you @mohitbansal.bsky.social -- I have learned so much from your mentorship (and benefitted greatly from your job market guidance), and consider myself extremely fortunate to have found such a fantastic lab and postdoc advisor!

05.05.2025 23:27 — 👍 1 🔁 0 💬 1 📌 0

Thanks @kmahowald.bsky.social, looking forward to collaborating!

05.05.2025 21:49 — 👍 2 🔁 0 💬 0 📌 0

Thanks @rdesh26.bsky.social ❤️

05.05.2025 21:48 — 👍 0 🔁 0 💬 0 📌 0

And of course thank you to the amazing students/collaborators from @unccs.bsky.social and @jhuclsp.bsky.social 🙏

05.05.2025 20:28 — 👍 2 🔁 0 💬 0 📌 0

A huge shoutout to my mentors who have supported and shaped my research! Esp. grateful to my postdoc advisor @mohitbansal.bsky.social for helping me grow along the whole spectrum of PI skills, and my PhD advisor @vandurme.bsky.social for shaping my trajectory as a researcher

05.05.2025 20:28 — 👍 2 🔁 0 💬 1 📌 0

Elias Stengel-Eskin

Postdoctoral Research Associate, UNC Chapel Hill

Looking forward to continuing to develop AI agents that interact/communicate with people, each other, and the multimodal world. I’ll be recruiting PhD students for Fall 2026 across a range of connected topics (details: esteng.github.io) and plan on recruiting interns for Fall 2025 as well.

05.05.2025 20:28 — 👍 3 🔁 0 💬 1 📌 0

UT Austin campus

Extremely excited to announce that I will be joining @utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

05.05.2025 20:28 — 👍 42 🔁 9 💬 5 📌 2

🌵 I'm going to be presenting PBT at #NAACL2025 today at 2PM! Come by poster session 2 if you want to hear about:

-- balancing positive and negative persuasion

-- improving LLM teamwork/debate

-- training models on simulated dialogues

With @mohitbansal.bsky.social and @peterbhase.bsky.social

30.04.2025 15:04 — 👍 8 🔁 3 💬 0 📌 0

I will be presenting ✨Reverse Thinking Makes LLMs Stronger Reasoners✨ at #NAACL2025!

In this work, we show:

- Improvements across 12 datasets

- Better performance than SFT trained on 10x more data

- Strong generalization to OOD datasets

📅4/30 2:00-3:30 Hall 3

Let's chat about LLM reasoning and its future directions!

29.04.2025 23:21 — 👍 5 🔁 3 💬 1 📌 0

Teaching Models to Balance Resisting and Accepting Persuasion

Large language models (LLMs) are susceptible to persuasion, which can pose risks when models are faced with an adversarial interlocutor. We take a first step towards defending models against persuasion while also arguing that defense against adversarial (i.e. negative) persuasion is only half of the equation: models should also be able to accept beneficial (i.e. positive) persuasion to improve their answers. We show that optimizing models for only one side results in poor performance on the other. In order to balance positive and negative persuasion, we introduce Persuasion-Balanced Training (or PBT), which leverages multi-agent recursive dialogue trees to create data and trains models via preference optimization to accept persuasion when appropriate. PBT allows us to use data generated from dialogues between smaller 7-8B models for training much larger 70B models. Moreover, PBT consistently improves resistance to misinformation and resilience to being challenged while also resulting in the best overall performance on holistic data containing both positive and negative persuasion. Crucially, we show that PBT models are better teammates in multi-agent debates across two domains (trivia and commonsense QA). We find that without PBT, pairs of stronger and weaker models have unstable performance, with the order in which the models present their answers determining whether the team obtains the stronger or weaker model's performance. PBT leads to better and more stable results and less order dependence, with the stronger model consistently pulling the weaker one up.

Links:

1⃣ arxiv.org/abs/2410.14596

2⃣ arxiv.org/abs/2503.15272

3⃣ arxiv.org/abs/2409.07394

With awesome collaborators @mohitbansal.bsky.social, @peterbhase.bsky.social, David Wan, @cyjustinchen.bsky.social, Han Wang, @archiki.bsky.social

29.04.2025 17:52 — 👍 1 🔁 0 💬 0 📌 0

📆 04/30 2PM: Teaching Models to Balance Resisting and Accepting Persuasion

📆 05/01 2PM: MAMM-Refine: A Recipe for Improving Faithfulness in Generation with Multi-Agent Collaboration

📆 05/02 11AM: AdaCAD: Adaptively Decoding to Balance Conflicts between Contextual and Parametric Knowledge

29.04.2025 17:52 — 👍 1 🔁 0 💬 1 📌 0

✈️ Heading to #NAACL2025 to present 3 main conf. papers, covering training LLMs to balance accepting and rejecting persuasion, multi-agent refinement for more faithful generation, and adaptively addressing varying degrees of knowledge conflict.

Reach out if you want to chat!

29.04.2025 17:52 — 👍 15 🔁 5 💬 1 📌 0

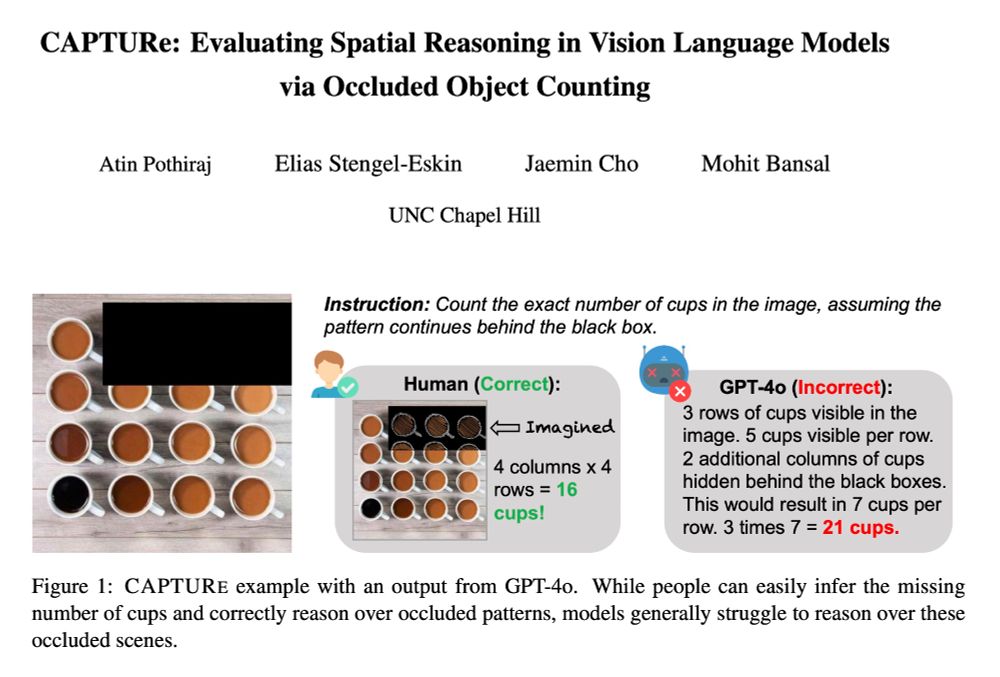

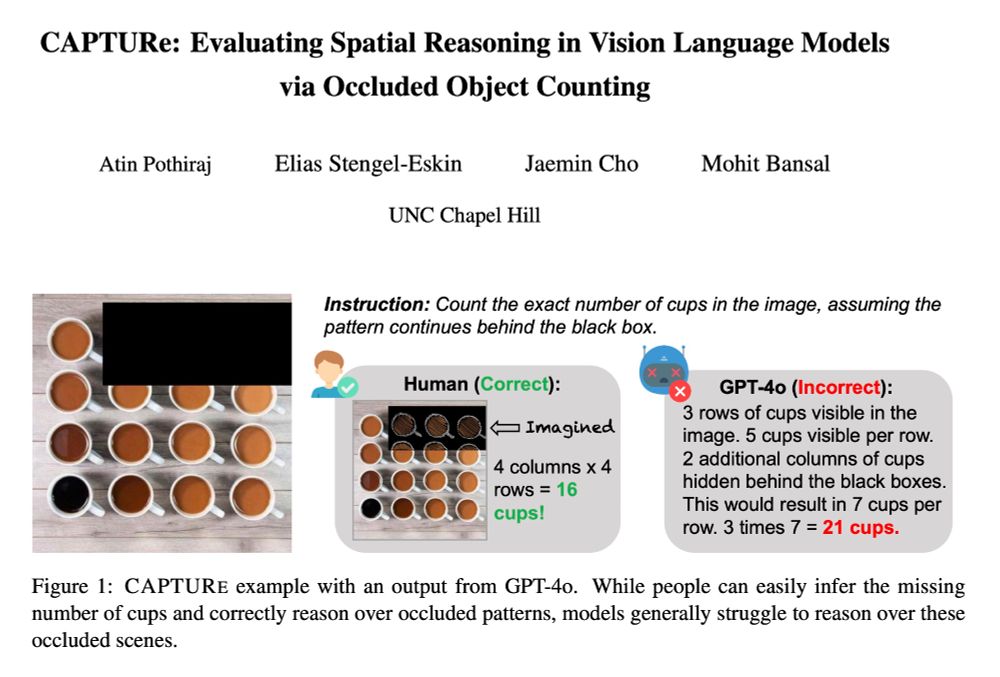

GitHub - atinpothiraj/CAPTURe

Kudos to Atin Pothiraj on leading this project, with @jmincho.bsky.social and @mohitbansal.bsky.social

Code: github.com/atinpothiraj...

@hf.co Dataset: huggingface.co/datasets/ati...

Paper: arxiv.org/abs/2504.15485

24.04.2025 15:14 — 👍 1 🔁 0 💬 0 📌 0

By testing VLMs’ spatial reasoning under occlusion, CAPTURe highlights an unexpected weakness. We analyze this weakness by providing the model with additional information:

➡️ Providing object coordinates as text improves performance substantially.

➡️ Providing diffusion-based inpainting also helps.

24.04.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0

Interestingly, model error increases with the number of occluded dots, suggesting that task performance worsens as the level of occlusion rises.

Additionally, model performance depends on pattern type (the shape in which the objects are arranged).

24.04.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0

We evaluate 4 strong VLMs (GPT-4o, InternVL2, Molmo, and Qwen2-VL) on CAPTURe.

Models generally struggle with multiple aspects of the task, in both the occluded and unoccluded settings.

Crucially, every model performs worse in the occluded setting, but we find that humans can perform the task easily even with occlusion.

24.04.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0

We release 2 splits:

➡️ CAPTURe-real contains real-world images and tests the ability of models to perform amodal counting in naturalistic contexts.

➡️ CAPTURe-synthetic allows us to analyze specific factors by controlling different variables like color, shape, and number of objects.

24.04.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0

CAPTURe = Counting Amodally Through Unseen Regions, which requires a model to count objects arranged in a pattern by inferring how the pattern continues behind an occluder (an object that blocks parts of the scene).

This needs pattern recognition + counting, making it a good testbed for VLMs!

24.04.2025 15:14 — 👍 0 🔁 0 💬 1 📌 0
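The task can be illustrated with a deliberately simple toy sketch. It is hypothetical: the grid setup, the occluder format, and the function names below are assumptions for illustration, not CAPTURe's data format or any model's method. Dots sit on a regular grid, a rectangle hides some of them, and counting amodally means inferring the full grid from the visible dots rather than counting only what is seen.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]  # (row, column) position of a dot (hypothetical representation)


def visible_dots(rows: int, cols: int, occluder: Tuple[int, int, int, int]) -> Set[Cell]:
    """Dots on a rows x cols grid that are NOT hidden by a rectangular occluder.

    occluder = (r0, c0, r1, c1) hides every cell with r0 <= r < r1 and c0 <= c < c1.
    """
    r0, c0, r1, c1 = occluder
    return {
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if not (r0 <= r < r1 and c0 <= c < c1)
    }


def naive_count(visible: Set[Cell]) -> int:
    # Counts only what is visible, so every hidden dot is missed.
    return len(visible)


def amodal_count(visible: Set[Cell]) -> int:
    # Assumes the pattern is a complete rectangular grid whose outer rows and
    # columns are visible; infers the grid extent and counts hidden cells too.
    if not visible:
        return 0
    rs = [r for r, _ in visible]
    cs = [c for _, c in visible]
    return (max(rs) - min(rs) + 1) * (max(cs) - min(cs) + 1)


if __name__ == "__main__":
    vis = visible_dots(5, 6, occluder=(1, 2, 3, 4))  # hides a 2 x 2 block
    print(naive_count(vis), amodal_count(vis))  # 26 30
```

The gap between the two counts is exactly the part that has to be inferred. Real CAPTURe patterns vary in shape, so this grid-only sketch covers just the simplest case.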

Check out 🚨CAPTURe🚨 -- a new benchmark testing spatial reasoning by making VLMs count objects under occlusion.

SOTA VLMs (GPT-4o, Qwen2-VL, InternVL2) have high error rates on CAPTURe (but humans have low error ✅) and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

24.04.2025 15:14 — 👍 5 🔁 4 💬 1 📌 0

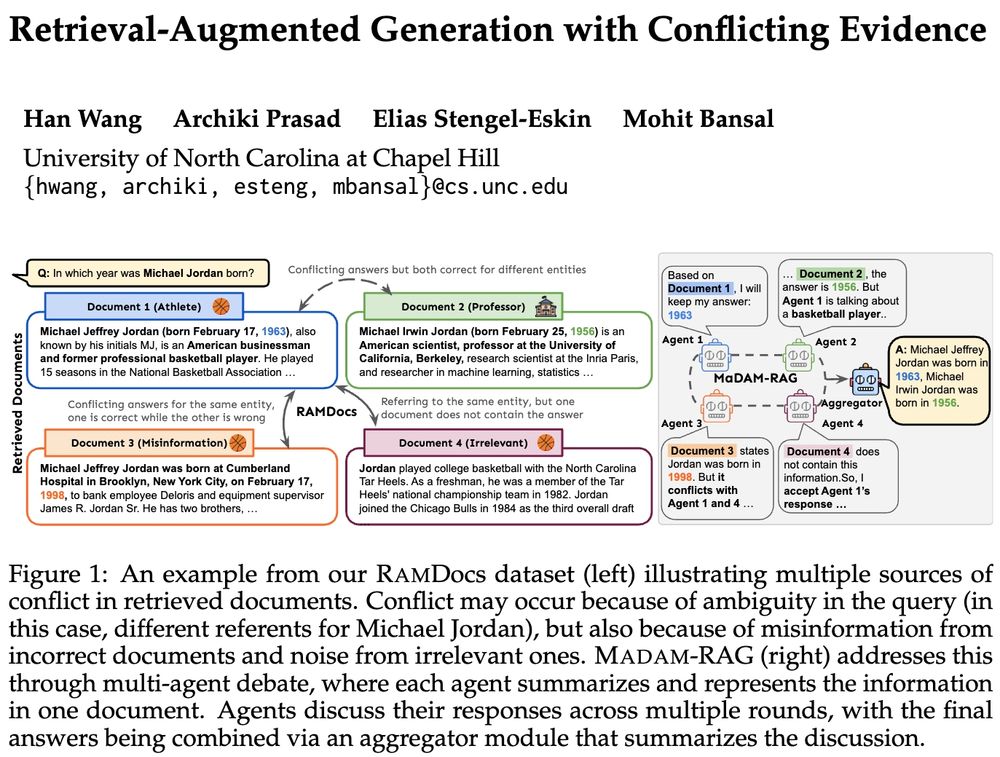

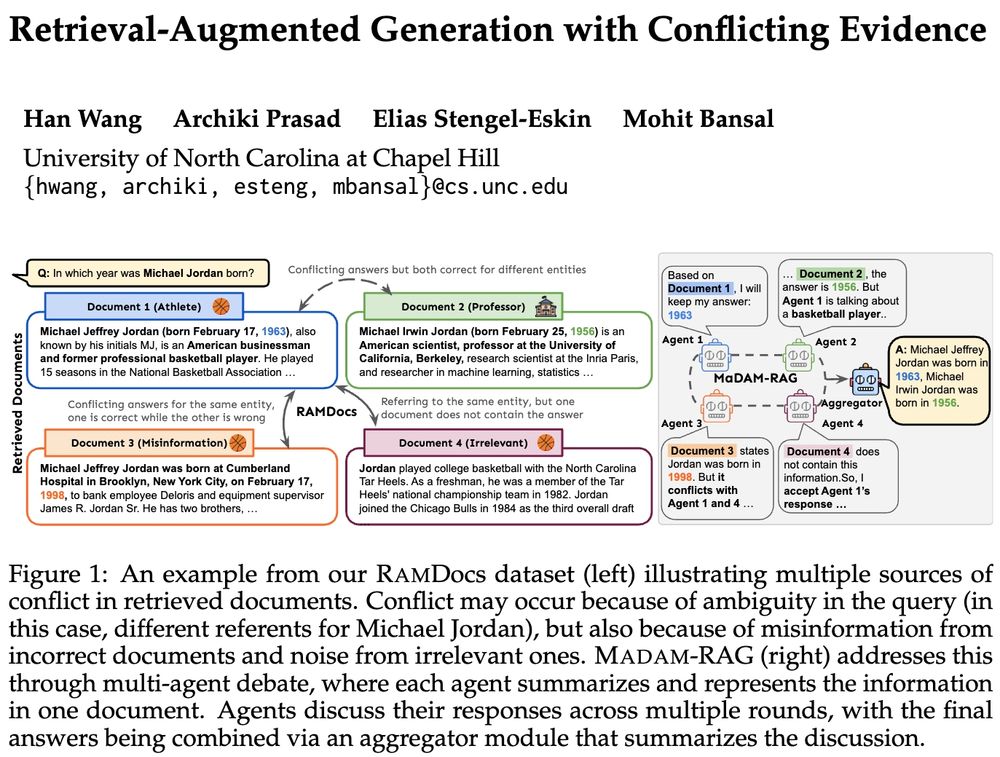

🚨Real-world retrieval is messy: queries are ambiguous or docs conflict & have incorrect/irrelevant info. How can we jointly address these problems?

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

18.04.2025 17:05 — 👍 14 🔁 7 💬 3 📌 0

Excited to share my first paper as first author: "Task-Circuit Quantization" 🎉

I led this work to explore how interpretability insights can drive smarter model compression. Big thank you to @esteng.bsky.social, Yi-Lin Sung, and @mohitbansal.bsky.social for mentorship and collaboration. More to come!

16.04.2025 16:19 — 👍 5 🔁 2 💬 0 📌 0

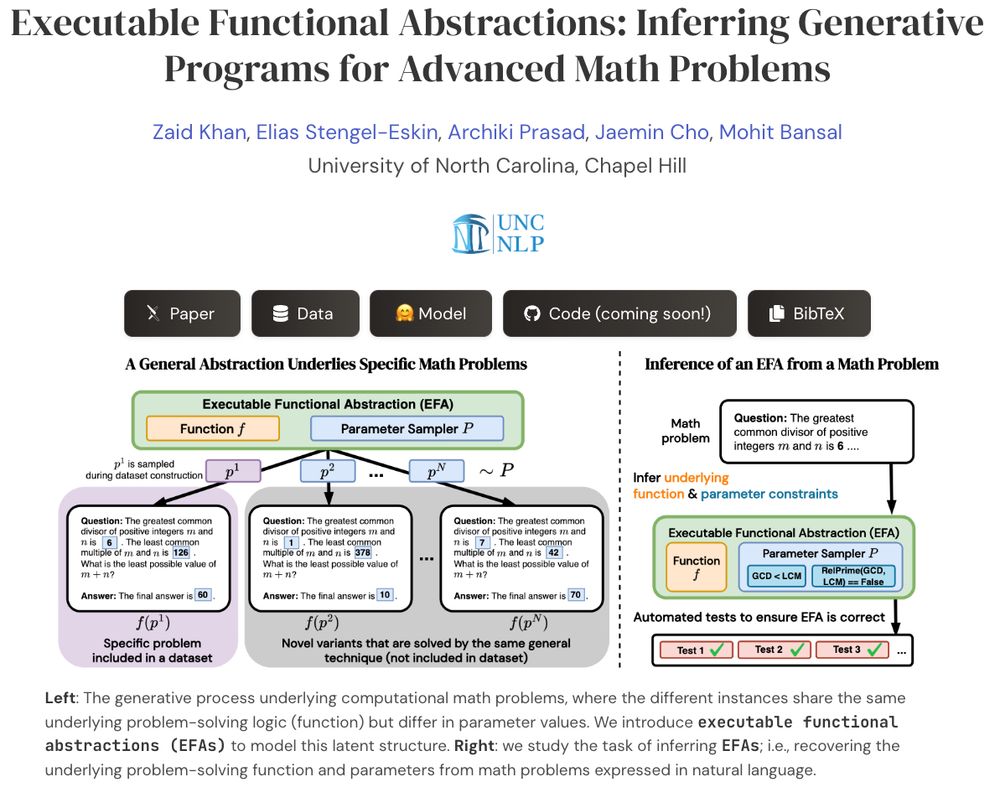

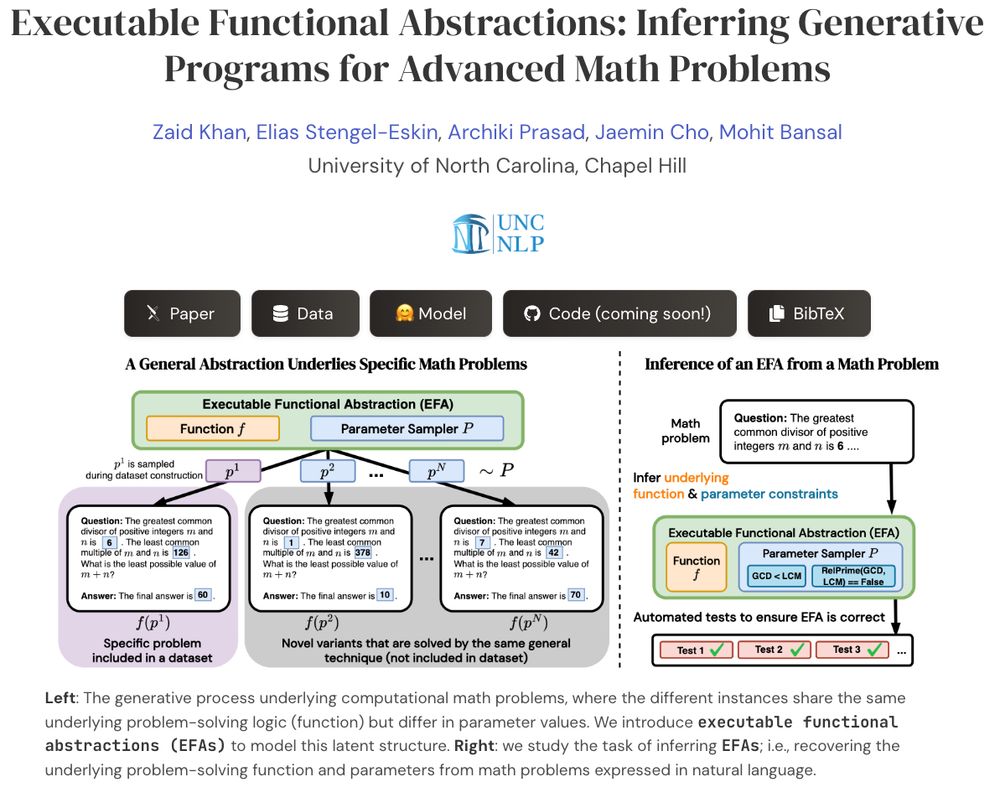

What if we could transform advanced math problems into abstract programs that can generate endless, verifiable problem variants?

Presenting EFAGen, which automatically transforms static advanced math problems into their corresponding executable functional abstractions (EFAs).

🧵👇

15.04.2025 19:37 — 👍 15 🔁 5 💬 1 📌 1
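An executable functional abstraction can be pictured as a parameterized program that samples inputs, renders a problem statement, computes the answer, and checks it. The toy sketch below is hypothetical: it assumes a simple arithmetic problem, and the class and method names are illustrative, not EFAGen's actual output format.

```python
import random
from dataclasses import dataclass


@dataclass
class SumOfMultiplesEFA:
    """Hypothetical EFA for: 'What is the sum of the first n positive multiples of k?'"""
    n: int
    k: int

    @classmethod
    def sample(cls, rng: random.Random) -> "SumOfMultiplesEFA":
        # Sample fresh parameters to create a new problem variant.
        return cls(n=rng.randint(2, 50), k=rng.randint(2, 12))

    def render(self) -> str:
        # Turn the parameters back into a natural-language problem.
        return f"What is the sum of the first {self.n} positive multiples of {self.k}?"

    def solve(self) -> int:
        # Closed form: k * (1 + 2 + ... + n) = k * n * (n + 1) / 2 (n * (n + 1) is even).
        return self.k * self.n * (self.n + 1) // 2

    def verify(self) -> bool:
        # Independent brute-force check that the closed-form answer is correct.
        return self.solve() == sum(self.k * i for i in range(1, self.n + 1))


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        efa = SumOfMultiplesEFA.sample(rng)
        print(efa.render(), "->", efa.solve(), efa.verify())
```

Pairing a closed-form `solve` with an independent `verify` is one simple way to make each generated variant checkable; it is a design choice for this sketch, not a claim about how EFAGen verifies its abstractions.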

To simulate a realistic use case involving generation, we evaluate on Spider for text-to-SQL.

Quantization methods struggle with preserving performance on generative tasks. We show that TaCQ is the only method to achieve non-zero performance in 2-bits for Llama-3-8B-Instruct.

12.04.2025 14:19 — 👍 1 🔁 0 💬 1 📌 0

Linguistics, statistics, speech, cognitive science | McGill University Department of Linguistics

Philosophy professor at UT Austin who thinks about attitudes, epistemology, and communication. https://www.danieldrucker.info/

The largest workshop on analysing and interpreting neural networks for NLP.

BlackboxNLP will be held at EMNLP 2025 in Suzhou, China

blackboxnlp.github.io

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

she/her

McGillNLP & Mila

occasionally live on ckut 90.3 fm :-)

adadtur.github.io

Asst. Prof @UofTCompSci. Postdoc @MPI_IS w/ @bschoelkopf. Research on (1) @CausalNLP and (2) NLP4SocialGood @NLP4SG. Mentor & mentee @ACLMentorship.

Asst Prof. @ UCSD | PI of LeM🍋N Lab | Former Postdoc at ETH Zürich, PhD @ NYU | computational linguistics, NLProc, CogSci, pragmatics | he/him 🏳️‍🌈

alexwarstadt.github.io

AI technical gov & risk management research. PhD student @MIT_CSAIL, fmr. UK AISI. I'm on the CS faculty job market! https://stephencasper.com/

Associate Professor (University of Arizona).

Visiting Scientist (Allen Institute for AI/Ai2)

AI/NLP; Scientific Discovery; DiscoveryWorld; EntailmentBank; ScienceWorld; http://textgames.org list. Tweets/opinions my own

Google Chief Scientist, Gemini Lead. Opinions stated here are my own, not those of Google. Gemini, TensorFlow, MapReduce, Bigtable, Spanner, ML things, ...

The Milan Natural Language Processing Group #NLProc #AI

milanlproc.github.io

https://shramay-palta.github.io

CS PhD student. #NLProc at CLIP UMD | Commonsense + xNLP, AI, CompLing | ex Research Intern @msftresearch.bsky.social

website: https://t.co/ml5yPJjZLO Natural Language Processing and Machine Learning researcher at the University of Cambridge. Member of the PaNLP group: https://www.panlp.org/ and fellow of Fitzwilliam College.

Assistant Professor @ UChicago CS/DSI (NLP & HCI) | Writing with AI ✍️

https://minalee-research.github.io/

Assistant Professor in Media Law and Ethics, UMass-Amherst

Proud UNC J-school PhD alumna

heesoojang.com

Visiting Scientist at Schmidt Sciences. Visiting Researcher at Stanford NLP Group

Interested in AI safety and interpretability

Previously: Anthropic, AI2, Google, Meta, UNC Chapel Hill