GitHub - fanegg/Human3R: A unified model for 4D human-scene reconstruction

Code, model and 4D interactive demo now available

🔗Page: fanegg.github.io/Human3R

📄Paper: arxiv.org/abs/2510.06219

💻Code: github.com/fanegg/Human3R

Big thanks to our awesome team!

@fanegg.bsky.social, @xingyu-chen.bsky.social, Yuxuan Xue, @apchen.bsky.social, @xiuyuliang.bsky.social, Gerard Pons-Moll

08.10.2025 08:54 — 👍 2 🔁 0 💬 0 📌 0

GT comparison shows our feedforward method, without any iterative optimization, is not only fast but also accurate.

This is achieved by reading out humans from a 4D foundation model, #CUT3R, with our proposed 𝙝𝙪𝙢𝙖𝙣 𝙥𝙧𝙤𝙢𝙥𝙩 𝙩𝙪𝙣𝙞𝙣𝙜.

08.10.2025 08:51 — 👍 1 🔁 0 💬 1 📌 0

Bonus: #Human3R is also a compact human tokenizer!

Our human tokens capture the ID + shape + pose + position of each human, unlocking 𝘁𝗿𝗮𝗶𝗻𝗶𝗻𝗴-𝗳𝗿𝗲𝗲 4D tracking.

08.10.2025 08:50 — 👍 2 🔁 0 💬 1 📌 0

#Human3R: Everyone Everywhere All at Once

Just input an RGB video, and we reconstruct 4D humans and the scene online in 𝗢𝗻𝗲 model and 𝗢𝗻𝗲 stage.

Training this versatile model is easier than you think – it just takes 𝗢𝗻𝗲 day using 𝗢𝗻𝗲 GPU!

🔗Page: fanegg.github.io/Human3R/

08.10.2025 08:49 — 👍 2 🔁 0 💬 1 📌 1

Again, training-free is all you need.

01.10.2025 07:06 — 👍 3 🔁 0 💬 0 📌 0

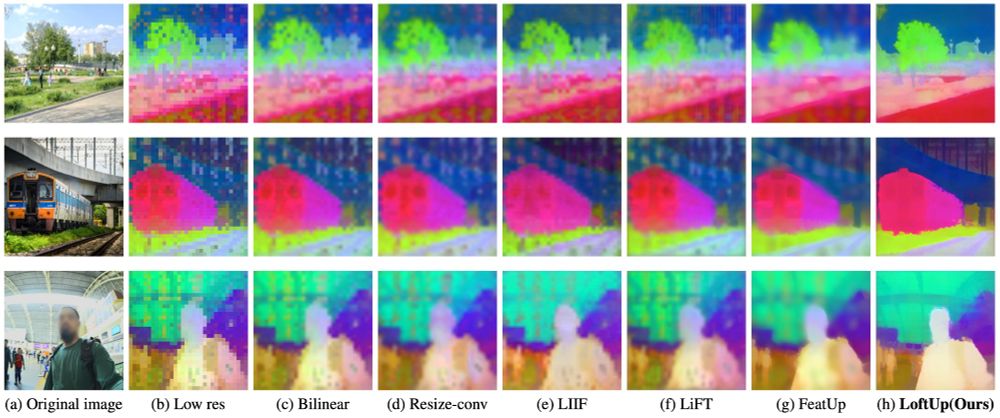

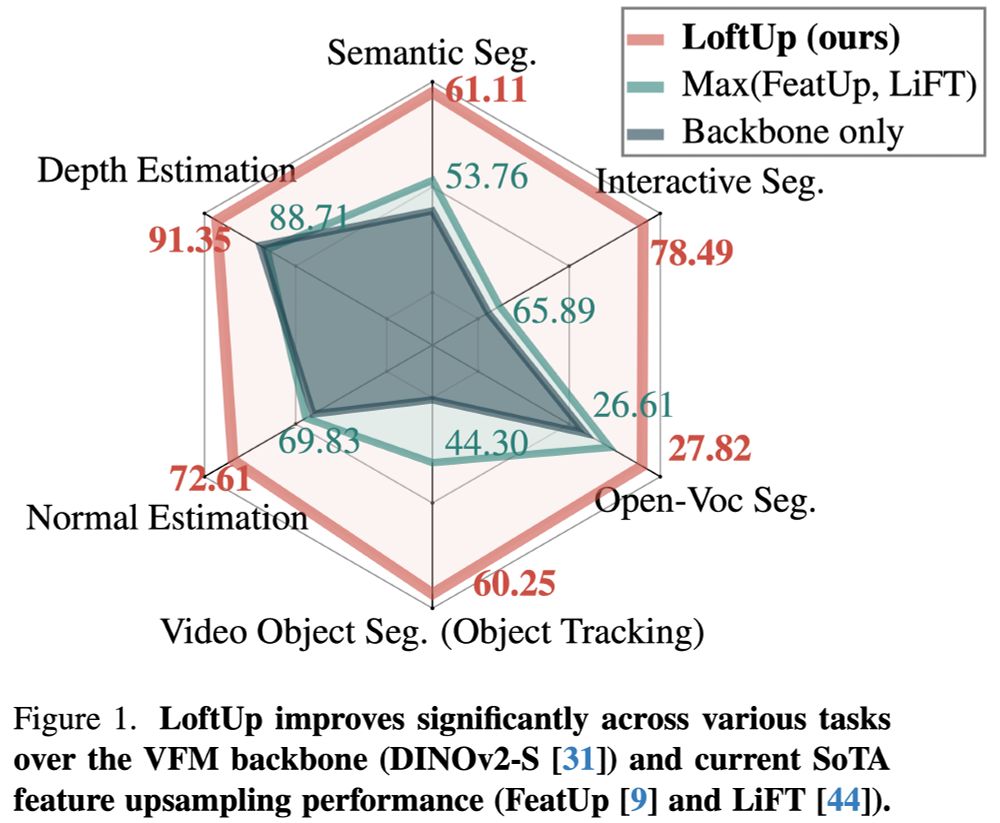

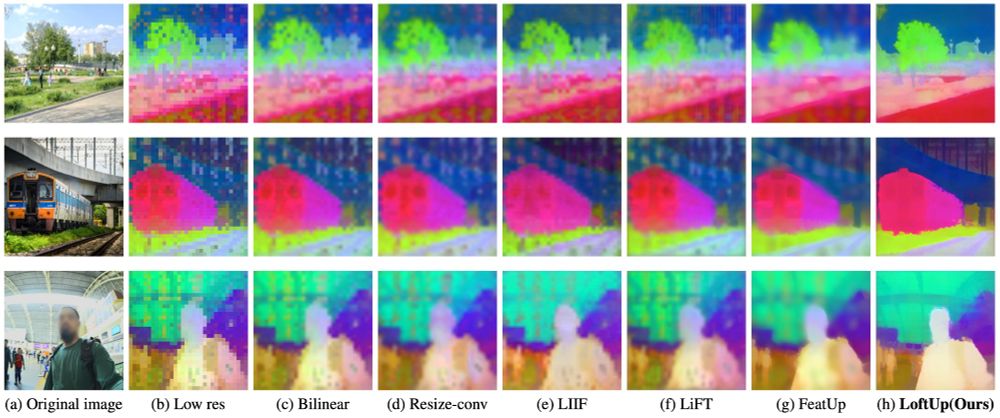

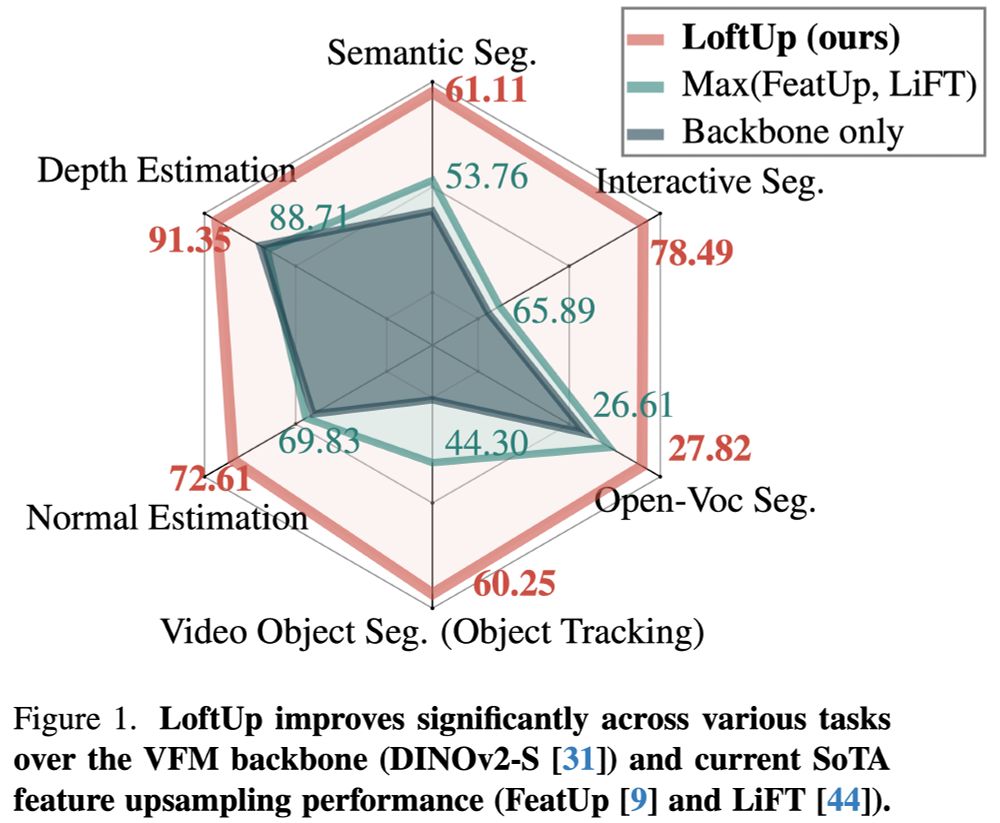

Excited to introduce LoftUp!

A stronger (than ever) and lightweight feature upsampler for vision encoders that can boost performance on dense prediction tasks by 20%–100%!

Easy to plug into models like DINOv2, CLIP, SigLIP — simple design, big gains. Try it out!

github.com/andrehuang/l...

22.04.2025 07:55 — 👍 19 🔁 5 💬 0 📌 0

I was really surprised when I saw this. DUSt3R has learned to segment objects very well without supervision. This knowledge can be extracted post hoc, enabling accurate 4D reconstruction instantly.

01.04.2025 18:45 — 👍 31 🔁 2 💬 1 📌 0

Just "dissect" the cross-attention mechanism of #DUSt3R, making 4D reconstruction easier.

01.04.2025 15:45 — 👍 4 🔁 0 💬 0 📌 0

#Easi3R is a simple training-free approach adapting DUSt3R for dynamic scenes.

01.04.2025 15:45 — 👍 4 🔁 0 💬 0 📌 0

YouTube video by Yue Chen

[CVPR 2025] Feat2GS: Probing Visual Foundation Models with Gaussian Splatting

💻Code: github.com/fanegg/Feat2GS

🎥Video: youtu.be/4fT5lzcAJqo?...

Big thanks to the amazing team!

@fanegg.bsky.social, @xingyu-chen.bsky.social, Anpei Chen, Gerard Pons-Moll, Yuliang Xiu

#DUSt3R #MASt3R #MiDaS #DINOv2 #DINO #SAM #CLIP #RADIO #MAE #StableDiffusion #Zero123

31.03.2025 16:11 — 👍 1 🔁 0 💬 0 📌 0

Our 3D probing findings lead to a simple yet effective solution: just combining features from different visual foundation models outperforms prior work.

Apply #Feat2GS to sparse & casual captures:

🤗Online Demo: huggingface.co/spaces/endle...

31.03.2025 16:08 — 👍 0 🔁 0 💬 1 📌 0

With #Feat2GS we evaluated more than 10 visual foundation models (DUSt3R, DINO, MAE, SAM, CLIP, MiDaS, etc.) in terms of geometry and texture; see the paper for the comparison.

📄Paper: arxiv.org/abs/2412.09606

🔍Try it NOW: fanegg.github.io/Feat2GS/#chart

31.03.2025 16:07 — 👍 0 🔁 0 💬 1 📌 0

How much 3D do visual foundation models (VFMs) know?

Previous work requires 3D data for probing → expensive to collect!

#Feat2GS @cvprconference.bsky.social 2025 - our idea is to read out 3D Gaussians from VFM features, and thus probe 3D awareness with novel view synthesis.

🔗Page: fanegg.github.io/Feat2GS

31.03.2025 16:06 — 👍 24 🔁 7 💬 1 📌 1
