Two great works on how we can manipulate style for generative modeling by PiMa!

18.10.2025 08:37 — 👍 4 🔁 0 💬 1 📌 0

Nick Stracke

@rmsnorm.bsky.social

PhD Student at Ommer Lab (Stable Diffusion) Trying to understand motion... 🌐 https://nickstracke.dev

🤔 What happens when you poke a scene — and your model has to predict how the world moves in response?

We built the Flow Poke Transformer (FPT) to model multi-modal scene dynamics from sparse interactions.

It learns to predict the 𝘥𝘪𝘴𝘵𝘳𝘪𝘣𝘶𝘵𝘪𝘰𝘯 of motion itself 🧵👇

Our method pipeline

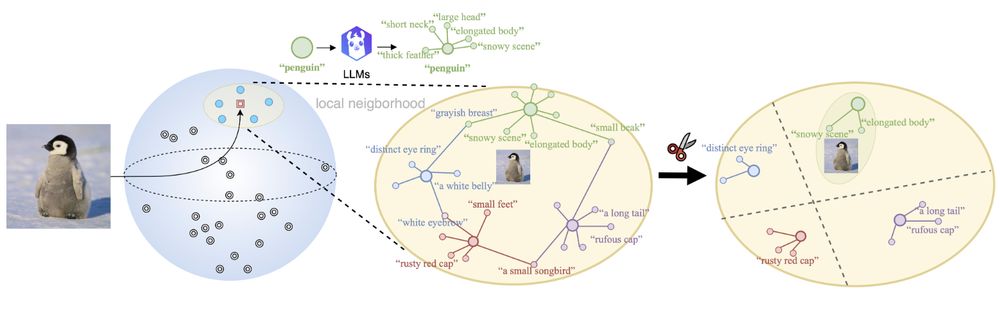

🤔 When combining vision-language models (VLMs) with large language models (LLMs), do VLMs benefit from additional genuine semantics or artificial augmentations of the text for downstream tasks?

🤨 Interested? Check out our latest work at #AAAI25:

💻 Code and 📝 Paper at: github.com/CompVis/DisCLIP

🧵👇

And thanks for the kind words! :)

09.12.2024 11:29 — 👍 2 🔁 0 💬 0 📌 0

It was due to a compute constraint at that time. We will update it with numbers run on the complete test set once we release a new version of the paper.

09.12.2024 11:29 — 👍 2 🔁 0 💬 2 📌 0

We make code and cleaned 🧹 weights available for SD 1.5 and SD 2.1.

Have a look now!

📝 Paper: compvis.github.io/cleandift/st...

💻 Code: github.com/CompVis/clea...

🤗 Hugging Face: huggingface.co/CompVis/clea...

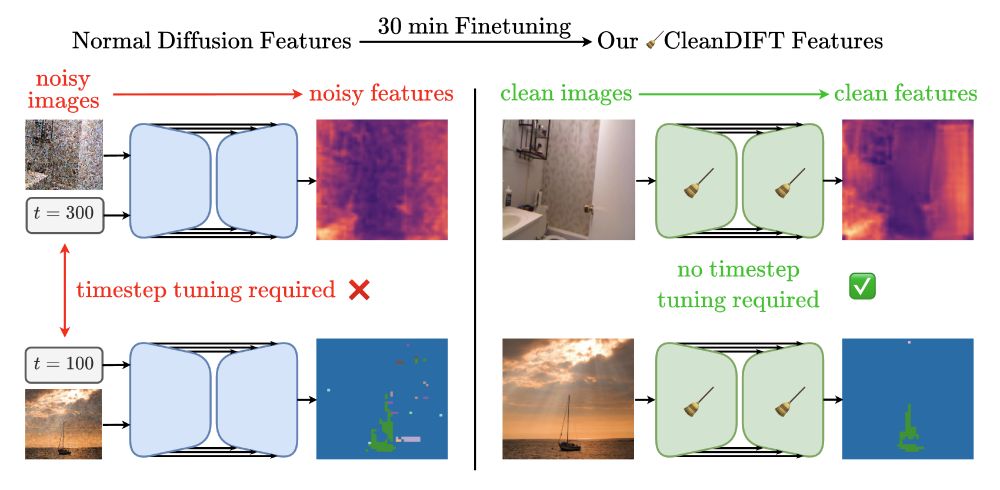

We show you can, with just 30 minutes of task-agnostic finetuning on a single GPU. 🤯

No noise. Better features. Better performance. Across many tasks.

And no timestep searching headaches! 👇

They need noisy images as input - and the right noise level for each task.

So we have to find the right timestep for every downstream task? 🤯

What if you could ditch all of that? 👇
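To make the "right noise level" point concrete, here is a minimal sketch of the standard DDPM forward noising step, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. It is not taken from the CleanDIFT code. The linear beta schedule, the 1000-step horizon, and the helper names `alpha_bar` and `add_noise` are assumptions for illustration; Stable Diffusion itself uses a different schedule.

```python
import math
import random


def alpha_bar(t: int, num_steps: int = 1000,
              beta_start: float = 1e-4, beta_end: float = 0.02) -> float:
    """Fraction of signal variance left after t noising steps.

    Assumes a linear beta schedule (illustrative values, not SD's schedule).
    """
    prod = 1.0
    for s in range(t):
        beta = beta_start + (beta_end - beta_start) * s / (num_steps - 1)
        prod *= 1.0 - beta
    return prod


def add_noise(x0, t, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bar(t)
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for x in x0]


rng = random.Random(0)
pixels = [0.5, -0.25, 1.0, 0.0]  # hypothetical toy "image"

# The signal weight shrinks and the noise weight grows as t increases,
# so the chosen timestep decides how much of the image the model still sees.
for t in (0, 100, 500, 999):
    a = alpha_bar(t)
    print(t, round(math.sqrt(a), 3), round(math.sqrt(1.0 - a), 3))

noisy = add_noise(pixels, 500, rng)
```

At `t = 0` the input passes through unchanged, while near the last step almost nothing of the original image remains. Picking a timestep for feature extraction therefore means picking a point on this trade-off.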

This work was co-led by @stefanabaumann.bsky.social and @koljabauer.bsky.social.

✨ Diffusion models are amazing at learning world representations. Their features power many tasks:

• Semantic correspondence

• Depth estimation

• Semantic segmentation

… and more!

But here’s the catch ⚡️👇

🤔 Why do we extract diffusion features from noisy images? Isn’t that destroying information?

Yes, it is - but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇

me right now..

20.11.2024 14:22 — 👍 48 🔁 3 💬 4 📌 0

Hi, just sharing an updated version of the PyTorch 2 Internals slides: drive.google.com/file/d/18YZV.... Content: basics, JIT, Dynamo, Inductor, export path and ExecuTorch. This is focused on internals, so you will need a bit of C/C++. I also show how you can export and run a model on a Pixel Watch.

19.11.2024 11:05 — 👍 87 🔁 17 💬 2 📌 1

![[EEML'24] Sander Dieleman - Generative modelling through iterative refinement](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:tl4gcezwgrweh4ft7eng7omj/bafkreie63ykfpesuxfzkobn6t7buaj4ulfgbyarzypyp2tagfjfcvuygdu@jpeg)

While we're starting up over here, I suppose it's okay to reshare some old content, right?

Here's my lecture from the EEML 2024 summer school in Novi Sad 🇷🇸, where I tried to give an intuitive introduction to diffusion models: youtu.be/9BHQvQlsVdE

Check out other lectures on their channel as well!