Attention-like regulation of theta sweeps in the brain's spatial navigation circuit

Spatial attention supports navigation by prioritizing information from selected locations. A candidate neural mechanism is provided by theta-paced sweeps in grid- and place-cell population activity, which sample nearby space in a left-right-alternating pattern coordinated by parasubicular direction signals. During exploration, this alternation promotes uniform spatial coverage, but whether sweeps can be flexibly tuned to locations of particular interest remains unclear. Using large-scale Neuropixels recordings in freely behaving rats, we show that sweeps and direction signals are rapidly and dynamically modulated: they track moving targets during pursuit, precede orienting responses during immobility, and reverse during backward locomotion, all without prior spatial learning. Similar modulation occurs during REM sleep. Canonical head-direction signals remain head-aligned. These findings identify sweeps as a flexible, attention-like mechanism for selectively sampling allocentric cognitive maps.

Competing Interest Statement: The authors have declared no competing interest.

Funding: European Research Council, Synergy Grant 951319 (EIM); The Research Council of Norway, Centre of Neural Computation 223262 (EIM, MBM), Centre for Algorithms in the Cortex 332640 (EIM, MBM), National Infrastructure grant (NORBRAIN, 295721 and 350201); The Kavli Foundation, https://ror.org/00kztt736; Ministry of Science and Education, Norway (EIM, MBM); Faculty of Medicine and Health Sciences, NTNU, Norway (AZV)

The hippocampal map has its own attentional control signal!

Our new study reveals that theta #sweeps can be instantly biased towards behaviourally relevant locations. See 📹 in post 4/6 and preprint here 👉

www.biorxiv.org/content/10.6...

🧵(1/6)

28.01.2026 10:03 — 👍 182 🔁 62 💬 4 📌 10

How do brain areas control each other? 🧠🎛️

✨In our NeurIPS 2025 Spotlight paper, we introduce a data-driven framework to answer this question using deep learning, nonlinear control, and differential geometry.🧵⬇️

26.11.2025 19:32 — 👍 89 🔁 30 💬 1 📌 3

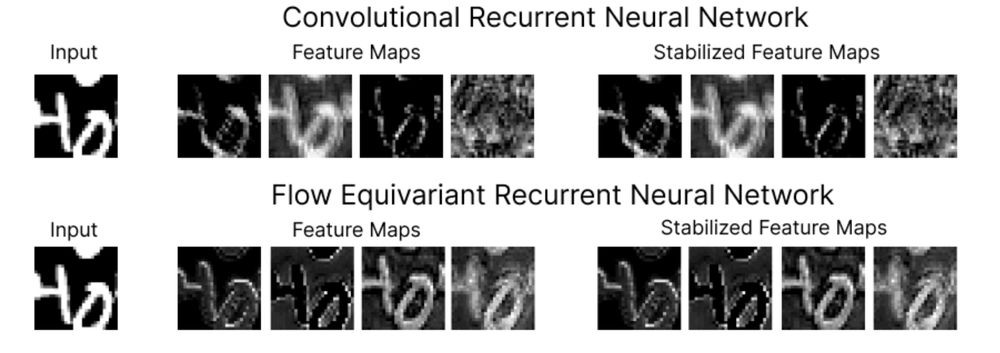

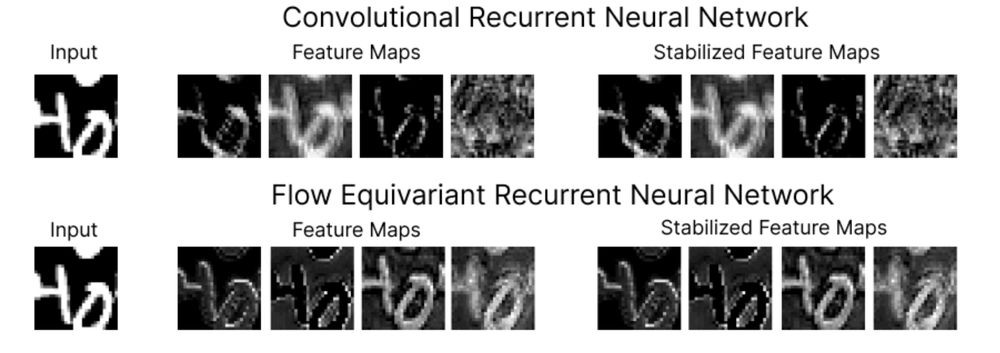

@andykeller.bsky.social @kempnerinstitute.bsky.social presented “Flow Equivariant Cybernetics”, a blueprint for agents that learn through continuous feedback with their environment.

15.10.2025 13:55 — 👍 4 🔁 2 💬 1 📌 0

Flow Equivariant Recurrent Neural Networks - Kempner Institute

Sequence transformations, like visual motion, dominate the world around us, but are poorly handled by current models. We introduce the first flow equivariant models that respect these motion symmetrie...

New in the #DeeperLearningBlog: #KempnerInstitute research fellow @andykeller.bsky.social introduces the first flow equivariant neural networks, which reflect motion symmetries, greatly enhancing generalization and sequence modeling.

bit.ly/451fQ48

#AI #NeuroAI

22.07.2025 13:21 — 👍 8 🔁 4 💬 0 📌 0

(1/7) New preprint from Rajan lab! 🧠🤖

@ryanpaulbadman1.bsky.social & Riley Simmons-Edler show, through cog sci, neuro & ethology, how an AI agent with fewer ‘neurons’ than an insect can forage, find safety & dodge predators in a virtual world. Here's what we built:

Preprint: arxiv.org/pdf/2506.06981

02.07.2025 18:33 — 👍 94 🔁 32 💬 3 📌 2

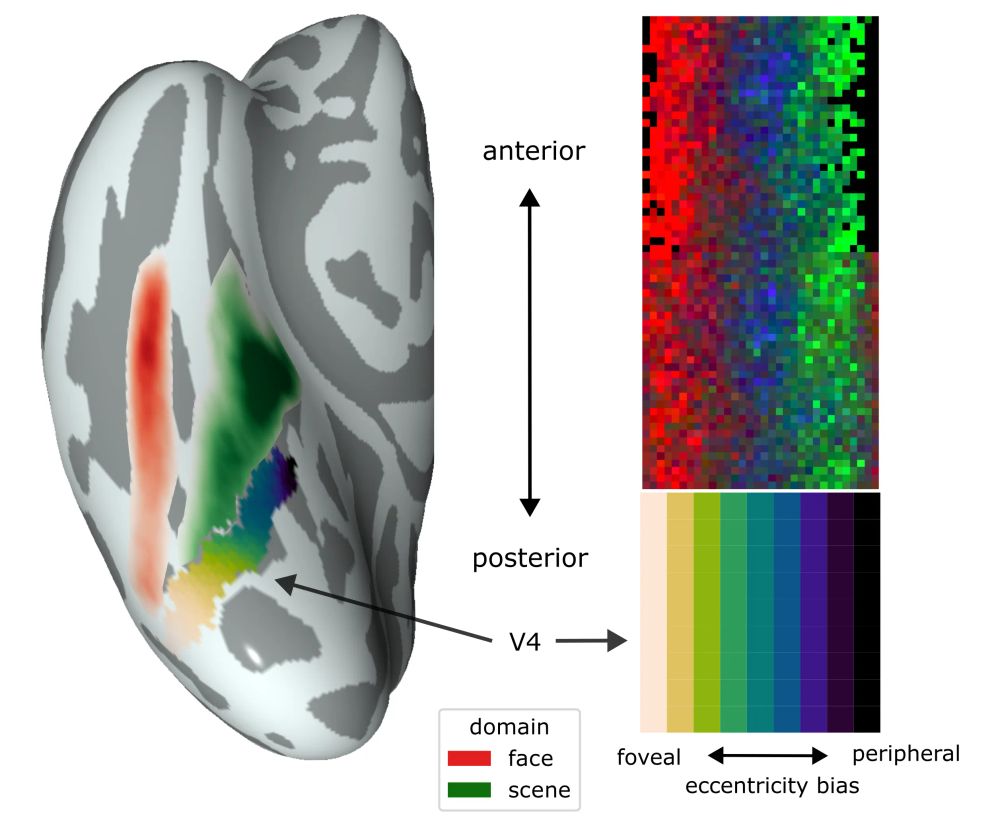

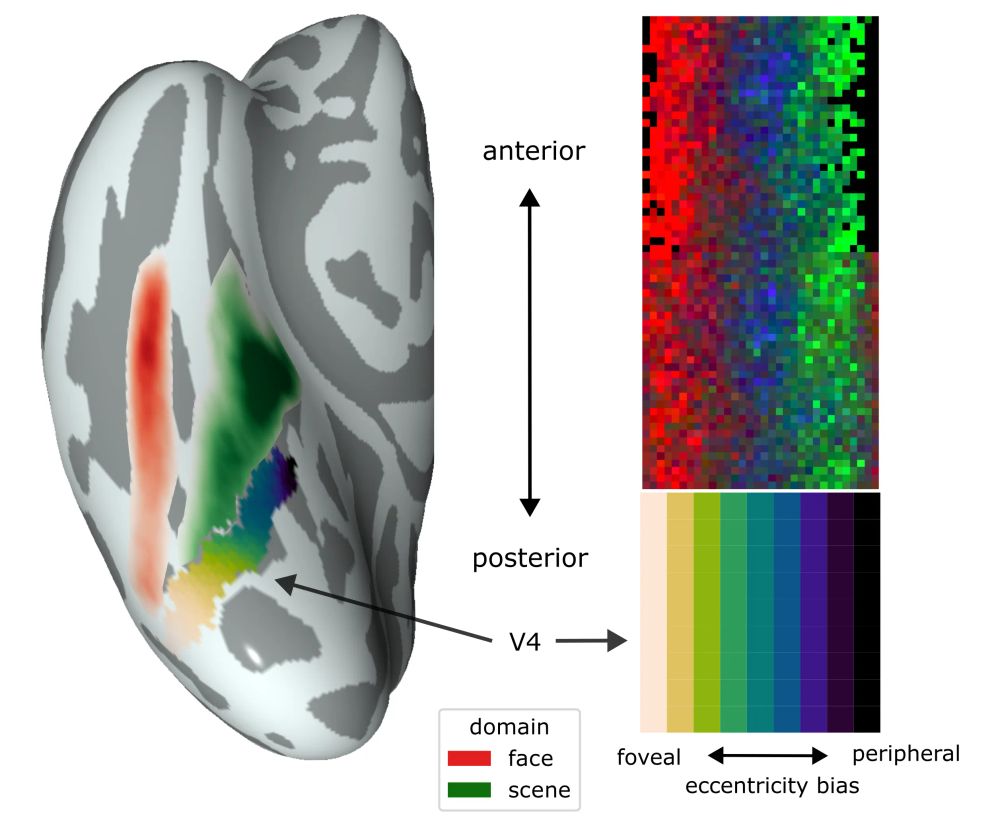

What shapes the topography of high-level visual cortex?

Excited to share a new pre-print addressing this question with connectivity-constrained interactive topographic networks, titled "Retinotopic scaffolding of high-level vision", w/ Marlene Behrmann & David Plaut.

🧵 ↓ 1/n

16.06.2025 15:11 — 👍 67 🔁 24 💬 1 📌 0

Are you an RL PhD student at Harvard whose funding has been wrecked by the government and who is working on multi-agent topics? Reach out, I am happy to try to find a way to support you.

23.05.2025 00:30 — 👍 50 🔁 7 💬 1 📌 0

Looking forward to presenting our work on cortico-hippocampal coupling and wave-wave interactions as a basis for some core aspects of human cognition.

5pm May 6th EST (US)

8am May 7th AEST (Sydney)

Zoom link: columbiacuimc.zoom.us/j/92736430185

Thanks to WaveClub conveners Erfan Zabeh & Uma Mohan

06.05.2025 08:59 — 👍 19 🔁 4 💬 2 📌 0

It’s another big day for the #KempnerInstitute at @CosyneMeeting! Check out our work highlighted in poster session 3 today! #COSYNE2025

29.03.2025 13:11 — 👍 12 🔁 2 💬 1 📌 0

Such a cool connection!! I had never heard of that, but it is an ingenious solution. I will likely use this reference in my future talks and mention your comment, if you don’t mind!

12.03.2025 19:26 — 👍 2 🔁 0 💬 1 📌 0

Thanks for reading! Can you explain your thought process here? Imagine a neuron with a receptive field (size of the yellow square) localized to the center of the pentagon. Its input would be entirely white — same as if it were localized to the center of the triangle; and therefore indistinguishable.

11.03.2025 22:51 — 👍 2 🔁 0 💬 0 📌 0

Super interesting thread!

10.03.2025 19:58 — 👍 38 🔁 2 💬 2 📌 1

And not to forget, a huge thanks to all those involved in the work: Lyle Muller, Roberto Budzinski & Demba Ba!! And further thanks to those who advised me and shaped my thoughts on these ideas @wellingmax.bsky.social & Terry Sejnowski. This work would not have been possible without their guidance.

10.03.2025 19:14 — 👍 4 🔁 0 💬 0 📌 0

Traveling waves of neural activity are observed all over the brain. Can they be used to augment neural networks?

I am thrilled to share our new work, "Traveling Waves Integrate Spatial Information Through Time" with @andykeller.bsky.social!

1/13

10.03.2025 16:46 — 👍 38 🔁 10 💬 2 📌 1

Really interesting RNN work.

And based on some spiking simulations I've tinkered with, it seems plausible that PV, CB & CR interneurons can contribute to changing the boundary conditions and the 'elasticity' of the oscillating 'rubber sheet' of cortex (and probably hippocampus and amygdala too). 🤓

10.03.2025 15:58 — 👍 10 🔁 2 💬 0 📌 0

Overall, we believe this is the first of many steps towards creating neural networks with alternative methods of information integration, beyond those we currently have, such as network depth, bottlenecks, or all-to-all connectivity (as in Transformer self-attention).

12/14

10.03.2025 15:33 — 👍 5 🔁 0 💬 1 📌 0

Tables from the paper comparing wave-based models and baselines (CNNs and U-Nets) on a variety of semantic segmentation tasks

We found that wave-based models converged much more reliably than deep CNNs, and even outperformed U-Nets with similar numbers of parameters when pushed to their limits. We hypothesize that this is due to the parallel processing ability that wave dynamics confer and that other CNNs lack.

11/14

10.03.2025 15:33 — 👍 6 🔁 0 💬 1 📌 0

As a first step towards the answer, we used the Tetris-like dataset and variants of MNIST to compare the semantic segmentation ability of these wave-based models (seen below) with two relevant baselines: Deep CNNs w/ large (full-image) receptive fields, and small U-Nets.

10/14

10.03.2025 15:33 — 👍 7 🔁 1 💬 1 📌 1

Was this just due to using Fourier transforms for semantic readouts, or wave-biased architectures? No! The same models with LSTM dynamics and a linear readout of the hidden-state timeseries still learned waves when trying to semantically segment images of Tetris-like blocks!

8/14

10.03.2025 15:33 — 👍 5 🔁 0 💬 1 📌 0

Plot of five representative frequency bins from the FFT of the dynamics of our wave-RNN on the shape task. We see different shapes pop out in different bins, indicating that they 'sound' different, and allowing the model to uniquely classify each shape. On the right we plot the average FFT for each pixel, separated by each shape, over the whole dataset, showing that different shapes do have measurably different frequency spectra, even in this average case.

Looking at the Fourier transform of the resulting neural oscillations at each point in the hidden state, we then saw that the model learned to produce different frequency spectra for each shape, meaning each neuron really was able to 'hear' which shape it was a part of!

7/14

10.03.2025 15:33 — 👍 7 🔁 0 💬 1 📌 0
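Post 7/14 describes reading shape identity out of the Fourier spectrum of each hidden unit's oscillation. A minimal NumPy sketch of that kind of readout, assuming the hidden states are stored as a `(T, H, W)` array; the function names are hypothetical and this is not the authors' code:

```python
import numpy as np

# Hypothetical helpers: a sketch of a per-pixel Fourier readout, not the paper's implementation.

def per_pixel_spectrum(states):
    """Map a (T, H, W) hidden-state time series to (F, H, W) FFT magnitudes."""
    centered = states - states.mean(axis=0, keepdims=True)  # drop each pixel's DC offset
    return np.abs(np.fft.rfft(centered, axis=0))

def dominant_bin(spectrum):
    """Index of the strongest non-DC frequency bin at every pixel, shape (H, W)."""
    return 1 + np.argmax(spectrum[1:], axis=0)

def mean_spectrum_by_label(spectrum, labels, num_labels):
    """Average spectrum over all pixels carrying each segmentation label, shape (K, F)."""
    return np.stack([spectrum[:, labels == k].mean(axis=1) for k in range(num_labels)])
```

`mean_spectrum_by_label` mirrors the "average FFT for each pixel, separated by each shape" panel in the alt text; the argmax in `dominant_bin` is only a stand-in for whatever readout the trained model actually uses.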

We made wave dynamics flexible by adding learned damping and natural frequency encoders, allowing hidden state dynamics to adapt based on the input stimulus. On simple polygon images, we found the model learned to use these parameters to produce shape-specific wave dynamics:

6/14

10.03.2025 15:33 — 👍 7 🔁 0 💬 2 📌 0
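Post 6/14 adds learned damping and natural-frequency encoders. One way to sketch this, assuming a leapfrog-discretized damped wave equation with a per-pixel damping γ and natural frequency ω₀, both produced by hypothetical sigmoid "encoders" of the input pixel (the paper's actual parameterization may differ):

```python
import numpy as np

# Hypothetical sketch: names, encoders and constants are illustrative assumptions.

def laplacian(h):
    """5-point discrete Laplacian; zero padding acts as fixed boundaries."""
    p = np.pad(h, 1)
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * h

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def encode_params(image, w_damp=2.0, b_damp=-1.0, w_freq=2.0, b_freq=-1.0):
    """Hypothetical per-pixel encoders mapping the input to damping and natural
    frequency; bounded so damping <= 0.5 and omega <= 1 for a stable dt = 0.5."""
    damping = 0.5 * sigmoid(w_damp * image + b_damp)
    omega = sigmoid(w_freq * image + b_freq)
    return damping, omega

def damped_wave_step(h, h_prev, damping, omega, c=1.0, dt=0.5):
    """One leapfrog step of h'' = c^2 * lap(h) - omega^2 * h - damping * h'."""
    accel = c ** 2 * laplacian(h) - omega ** 2 * h
    dh = h - h_prev  # backward difference, approximately dt * h'
    return 2.0 * h - h_prev + dt ** 2 * accel - damping * dt * dh

def run(image, steps=100):
    """Roll out the hidden state from the image (zero initial velocity)."""
    damping, omega = encode_params(image)
    h_prev, h = image.astype(float), image.astype(float)
    states = []
    for _ in range(steps):
        h_prev, h = h, damped_wave_step(h, h_prev, damping, omega)
        states.append(h)
    return np.stack(states)  # shape (steps, H, W)
```

With `damping` and `omega` set to zero the step reduces to the plain wave update, so the learned parameters only reshape the dynamics pixel by pixel; in a trained model the encoders would be learned layers rather than the fixed sigmoids assumed here.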

Visualization of the input stimuli to our network (left) and the target segmentation labels by color (right). The receptive field of the final layer neurons in our model is plotted as the yellow box, demonstrating that a single neuron has no way to know what shape it may be a part of simply from its local neighborhood, and therefore will require global integration of information over time to solve the task.

To test this, we needed a task, so we opted for semantic segmentation on large images, but crucially with neurons having very small one-step receptive fields. Thus, if we could decode global shape information from each neuron, it must be coming from the recurrent dynamics.

5/14

10.03.2025 15:33 — 👍 6 🔁 0 💬 2 📌 0
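The argument in post 5/14 is that a unit with a small one-step receptive field can only "see" distant pixels after enough recurrent steps. A back-of-the-envelope sketch, assuming each step mixes a square (2r+1)×(2r+1) neighbourhood (the exact kernel is not stated in the thread), with a brute-force check; both function names are hypothetical:

```python
import math
import numpy as np

def steps_for_global_view(height, width, row, col, radius=1):
    """Recurrent steps before the unit at (row, col) can have received input from
    every pixel, assuming each step mixes a (2*radius+1)^2 neighbourhood."""
    farthest = max(row, height - 1 - row, col, width - 1 - col)  # Chebyshev distance
    return math.ceil(farthest / radius)

def simulate_reach(height, width, row, col, radius=1):
    """Brute-force check: repeatedly dilate the set of pixels that can reach (row, col)."""
    reach = np.zeros((height, width), dtype=bool)
    reach[row, col] = True
    steps = 0
    while not reach.all():
        p = np.pad(reach, radius)
        grown = np.zeros_like(reach)
        # Union of all shifts within the neighbourhood = one step of dilation.
        for di in range(2 * radius + 1):
            for dj in range(2 * radius + 1):
                grown |= p[di:di + height, dj:dj + width]
        reach = grown
        steps += 1
    return steps
```

For example, a corner unit in a 32×32 image with a 3×3 neighbourhood needs `steps_for_global_view(32, 32, 0, 0)` = 31 steps, which is why shape information decoded at such a unit must have travelled through the recurrent dynamics.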

Visualization of the same wave-based RNN on two drums of different sizes (side lengths 13 and 33, respectively). In the middle (in purple) we show the displacement of the drum head at a point just off the center, plotted together with (in red) the theoretical fundamental frequency of vibration that we can derive analytically for a square of side length L. On the right we show the Fourier transform of these time-series dynamics, with the frequency peak in the expected location. This confirms that we can estimate the size of a drum head from the frequency spectrum of vibration at any point.

We found that, in line with theory, we could reliably predict the area of the drum analytically from the fundamental frequency of oscillation of each neuron in our hidden state. But is this too simple? How much further can we take it if we add learnable parameters?

4/14

10.03.2025 15:33 — 👍 6 🔁 1 💬 1 📌 0
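The analytic relation behind post 4/14 is, presumably, the standard result for an ideal square membrane with fixed edges, wave speed c and side length L (assuming the wave-RNN behaves like this continuum limit). The fundamental is the (1,1) mode, and inverting it gives the area:

```latex
f_{mn} = \frac{c}{2L}\sqrt{m^{2} + n^{2}}, \qquad
f_{11} = \frac{c}{\sqrt{2}\,L}, \qquad
A = L^{2} = \frac{c^{2}}{2\, f_{11}^{2}}
```

Because f₁₁ scales as 1/L, the 13-wide drum should vibrate about 33/13 ≈ 2.5 times faster than the 33-wide one.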

Inspired by Mark Kac’s famous question, "Can one hear the shape of a drum?" we thought: Maybe a neural network can use wave dynamics to integrate spatial information and effectively "hear" visual shapes... To test this, we tried feeding images of squares to a wave-based RNN:

3/14

10.03.2025 15:33 — 👍 9 🔁 1 💬 1 📌 0
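A minimal NumPy sketch of the experiment in post 3/14, under stated assumptions: the "wave-based RNN" is modeled as a leapfrog-discretized 2D wave equation with fixed (zero) edges, the image of a square sets a uniform initial displacement, and the effective side length is taken as n + 1 grid cells because the fixed rim sits one cell outside the square. All names are hypothetical:

```python
import numpy as np

# Hypothetical sketch of "hearing" the size of a square drum; not the authors' code.

def laplacian(h):
    """5-point discrete Laplacian; zero padding acts as a fixed drum rim."""
    p = np.pad(h, 1)
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * h

def simulate_drum(n, steps=4096, c=1.0, dt=0.5):
    """Strike an n x n square drum (uniform displacement, zero velocity) and
    return the displacement time series at the centre pixel."""
    h_prev = np.ones((n, n))
    h = h_prev.copy()
    trace = np.empty(steps)
    for t in range(steps):
        # Leapfrog update of h'' = c^2 * lap(h); c*dt = 0.5 satisfies the 2D CFL limit.
        h_next = 2.0 * h - h_prev + (c * dt) ** 2 * laplacian(h)
        h_prev, h = h, h_next
        trace[t] = h[n // 2, n // 2]
    return trace

def peak_frequency(trace, dt=0.5):
    """Frequency (cycles per unit time) of the largest non-DC FFT peak."""
    spectrum = np.abs(np.fft.rfft(trace - trace.mean()))
    freqs = np.fft.rfftfreq(trace.size, d=dt)
    return freqs[1 + np.argmax(spectrum[1:])]

def theoretical_fundamental(n, c=1.0):
    """(1,1) mode of a square membrane, f = c*sqrt(2)/(2L), assuming L = n + 1."""
    return c * np.sqrt(2.0) / (2.0 * (n + 1))

for n in (13, 33):
    print(n, round(peak_frequency(simulate_drum(n)), 4), round(theoretical_fundamental(n), 4))
```

The FFT peak at the centre pixel should land within about one frequency bin of the theoretical fundamental for both sizes; a longer simulation narrows the bins and tightens the estimate.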

Traveling Waves in Visual Cortex

In this Review, Sato et al. summarize the evidence in favor of traveling waves in primary visual cortex. The authors suggest that their substrate may lie in long-range horizontal connections and that ...

Just as ripples in water carry information across a pond, traveling waves of activity in the brain have long been hypothesized to carry information from one region of cortex to another (Sato 2012)*; but how can a neural network actually leverage this information?

* www.cell.com/neuron/fullt...

2/14

10.03.2025 15:33 — 👍 7 🔁 1 💬 1 📌 0

In the physical world, almost all information is transmitted through traveling waves -- why should it be any different in your neural network?

Super excited to share recent work with the brilliant @mozesjacobs.bsky.social: "Traveling Waves Integrate Spatial Information Through Time"

1/14

10.03.2025 15:33 — 👍 151 🔁 43 💬 3 📌 6

New research shows neurons learn to encode and transmit information to other spatially distant neurons through traveling waves. Read more in the #KempnerInstitute’s blog: bit.ly/3DrIPEq

10.03.2025 15:18 — 👍 7 🔁 2 💬 0 📌 0

PhD Student at Cold Spring Harbor Laboratory | Computational Neuroscience

I once cracked a good joke, really.

🌍 Paris Brain Institute is an international scientific and medical research centre.

🇫🇷 L’Institut du Cerveau is a scientific and medical research centre.

👉 https://parisbraininstitute.org/

Origins of the Social Mind • Apes • Dogs • Evolutionary Cognitive Scientist, Assistant Professor @JohnsHopkins • he/him

Neuroscientist studying the mechanisms of psychiatric treatments.

apredictiveprocessinglab.org

PhD student studying cortical computations in https://www.apredictiveprocessinglab.org

Neuroscientist at KISN at NTNU, Trondheim, Norway

Nobel Prize in Physiology or Medicine, 2014, together with Edvard I. Moser and John O’Keefe, for the discoveries of cells that constitute a positioning system in the brain

The vision of Novo Nordisk is to improve people's health and the sustainability of society and the planet

(This account is not run by Novo Nordisk)

The Swiss National Science Foundation funds excellent research at universities and other institutions – from chemistry to medicine to sociology.

We invest in researchers and their ideas 🔬🌱📚 — www.snf.ch/en

German & French: @snf-fns.ch

Agents, memory, representations, robots, vision. Sr Research Scientist at Google DeepMind. Previously at Oxford Robotics Institute. Views my own.

Assistant Prof in ML @ KTH 🇸🇪

WASP Fellow

ELLIS Member

Ex: Aalto Uni 🇫🇮, TU Graz 🇦🇹, originally 🇩🇪.

—

https://trappmartin.github.io/

—

Reliable ML | UQ | Bayesian DL | tractability & PCs

Academy Professor in computational Bayesian modeling at Aalto University, Finland. Bayesian Data Analysis 3rd ed, Regression and Other Stories, and Active Statistics co-author. #mcmc_stan and #arviz developer.

Web page https://users.aalto.fi/~ave/

Computational Neuroscience | PhD candidate at @cmc-lab.bsky.social | Fine Arts, Music

PhD student @ UC Berkeley | Previous: CS + Music @ MIT | underfit to the demands of reality

https://stephanie-fu.github.io/

Neuroscientist, statistician, programmer, and dad in St. Louis, Missouri

Ph.D. student studying the in vivo identification of cell types and the neural dynamics of decision making in prefrontal cortex. Chand Lab @ BU; NINDS F31 Fellow; prev. UW, Allen Inst., and U. Puget Sound. From Hawaii 🌴

theoretical neuroscience phd student at columbia

Mexican Historian & Philosopher of Biology • Postdoctoral Fellow at @theramseylab.bsky.social (@clpskuleuven.bsky.social) • Book Reviews Editor for @jgps.bsky.social • https://www.alejandrofabregastejeda.com • #PhilSci #HistSTM #philsky • I write and edit

Old school neuromorph: implementing cortical network models with elegant analog/digital electronic circuits.

Basic research in pursuit of truth and beauty.

https://www.ini.uzh.ch/en/research/groups/ncs.html

https://fediscience.org/@giacomoi

Neuroscience PhD candidate at Columbia University | UC Berkeley alum | Studying RL mechanisms for vocal learning (+ fan of animals 🐋🦇🦜🦋🦑 🦎🦕)