Interested in doing a Ph.D. to work on building models of the brain/behavior? Consider applying to graduate schools at CU Anschutz:

1. Neuroscience www.cuanschutz.edu/graduate-pro...

2. Bioengineering engineering.ucdenver.edu/bioengineeri...

You could work with several comp neuro PIs, including me.

27.09.2025 20:30

PhD Position: Theory of Learning in Artificial and Biologically Inspired Neural Networks | Radboud University

Do you want to work as a PhD candidate in Theory of Learning in Artificial and Biologically Inspired Neural Networks? Check our vacancy!

Please RT - Open PhD position in my group at the Donders Center for Neuroscience, Radboud University.

We're looking for a PhD candidate interested in developing theories of learning in neural networks.

Applications are open until October 20th.

For more info: www.ru.nl/en/working-a...

22.09.2025 17:17


The school will open the thematic period on Data Science and will be dedicated to the mathematical foundations and methods for high-dimensional data analysis. It will provide an in-depth introduction ...

Just got back from a great summer school at Sapienza University sites.google.com/view/math-hi... where I gave a short course on Dynamics and Learning in RNNs. I compiled a (very biased) list of recommended readings on the subject, for anyone interested: aleingrosso.github.io/_pages/2025_...

15.09.2025 11:57

With all the sad developments in the US, go study in the Netherlands: relatively low tuition (and an adequate job-search visa after graduating) for high-quality programs like this one in Neurophysics or Cognitive Neuroscience at the Donders neuroscience hub.

27.05.2025 19:57

Fantastic. Congrats Will.

15.05.2025 15:54

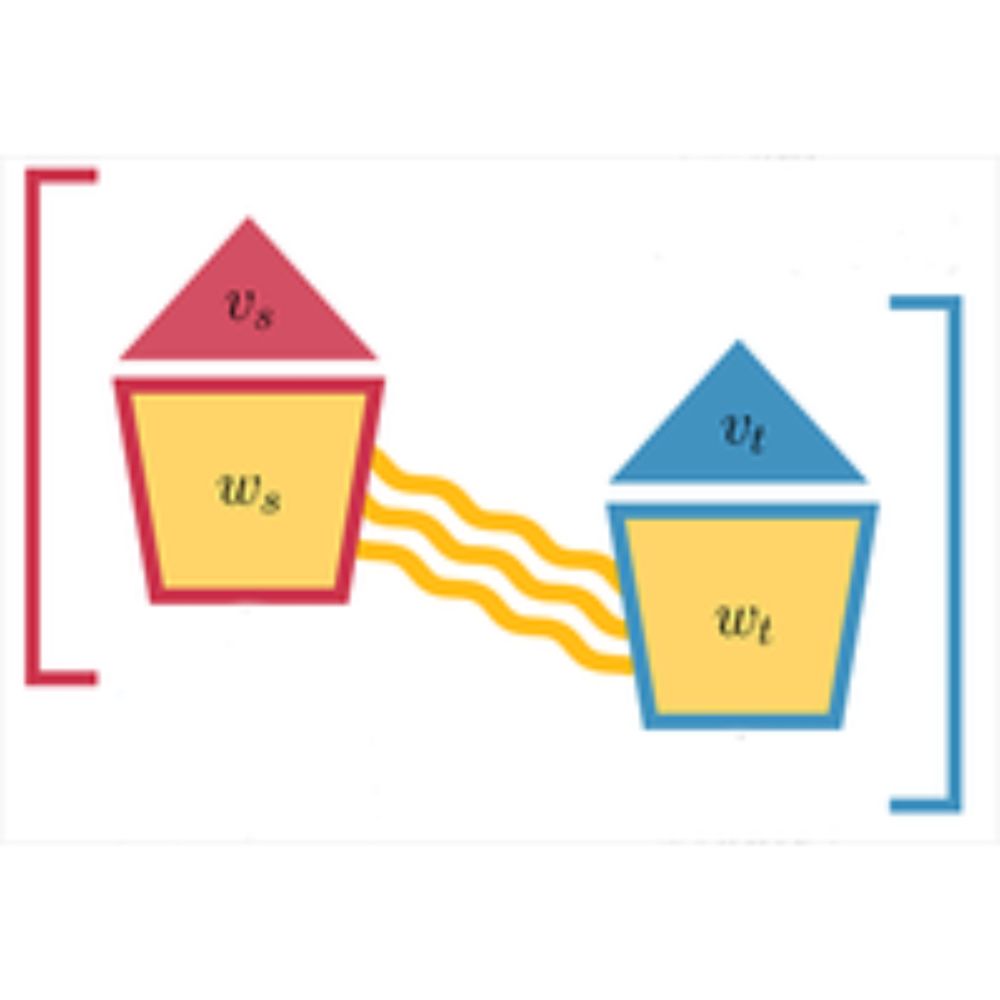

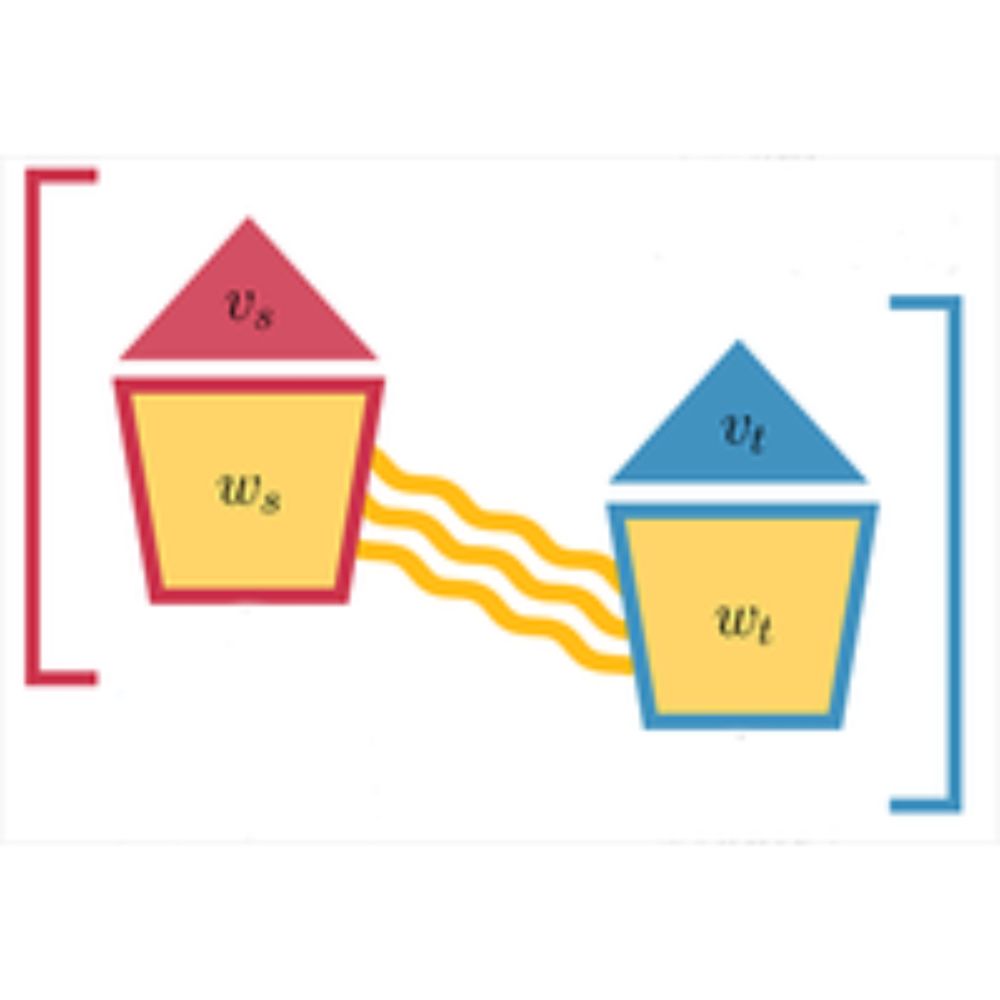

Statistical Mechanics of Transfer Learning in Fully Connected Networks in the Proportional Limit

Tools from spin glass theory such as the replica method help explain the efficacy of transfer learning.

Our paper on the statistical mechanics of transfer learning is now published in PRL. Franz-Parisi meets Kernel Renormalization in this nice collaboration with friends in Bologna (F. Gerace) and Parma (P. Rotondo, R. Pacelli).

journals.aps.org/prl/abstract...

01.05.2025 16:13

External seminar - Alessandro Ingrosso (Radboud University, NL) | QBio


🇳🇱 For the next FRESK seminar, Alessandro Ingrosso (Radboud University, NL) will give a lecture on "Statistical mechanics of transfer learning in the proportional limit"

@aingrosso.bsky.social

More info on QBio's website! ⤵️

qbio.ens.psl.eu/en/events/ex...

28.03.2025 18:51

Announcing our StatPhys29 Satellite Workshop "Molecular biophysics at the transition state: from statistical mechanics to AI" to be held in Trento, Italy, from July 7th to 11th, 2025: indico.ectstar.eu/event/252/.

Co-organized with Raffaello Potestio and his lab in Trento.

11.03.2025 13:38

Density of states in neural networks: an in-depth exploration of...

Learning in neural networks critically hinges on the intricate geometry of the loss landscape associated with a given task. Traditionally, most research has focused on finding specific weight...

Our paper on density of states in NNs is now published in TMLR. We show how the loss landscape in simple learning problems can be characterized by Wang-Landau sampling. A nice collaboration with the Potestio Lab in Trento, at the interface between ML and soft matter.

openreview.net/forum?id=BLD...

18.02.2025 13:20
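For readers new to the method, here is a minimal sketch of Wang-Landau sampling. It is not the code or the setup from the paper: it assumes a toy binary perceptron (weights in {-1, +1}, N odd so the pre-activation is never zero), takes the "energy" E to be the number of misclassified training patterns, and uses single-weight flips as moves. All function names are hypothetical. The sampler accepts a move from E to E' with probability min(1, g(E)/g(E')), raises ln g at the current level by ln f after every step, and halves ln f whenever the visit histogram is roughly flat, so ln g(E) approaches the log density of states up to a constant.

```python
import math
import random


def training_errors(w, X, y):
    """Hypothetical energy: number of patterns misclassified by sign(w . x)."""
    errors = 0
    for x, label in zip(X, y):
        s = sum(wi * xi for wi, xi in zip(w, x))
        if (1 if s > 0 else -1) != label:
            errors += 1
    return errors


def wang_landau(X, y, n_weights, log_f_final=1e-6, flatness=0.8,
                check_every=1000, seed=0):
    """Estimate ln g(E), up to an additive constant, for E = 0..len(X)."""
    rng = random.Random(seed)
    P = len(X)
    log_g = [0.0] * (P + 1)   # running estimate of ln g(E)
    hist = [0] * (P + 1)      # visit histogram for the current stage
    visited = set()           # energy levels seen so far
    w = [rng.choice((-1, 1)) for _ in range(n_weights)]
    E = training_errors(w, X, y)
    log_f = 1.0               # modification factor ln f
    while log_f > log_f_final:
        for _ in range(check_every):
            i = rng.randrange(n_weights)
            w[i] = -w[i]                       # propose a single-weight flip
            E_new = training_errors(w, X, y)
            # Accept with probability min(1, g(E) / g(E_new)).
            if rng.random() < math.exp(min(0.0, log_g[E] - log_g[E_new])):
                E = E_new
            else:
                w[i] = -w[i]                   # reject: undo the flip
            log_g[E] += log_f
            hist[E] += 1
            visited.add(E)
        counts = [hist[e] for e in visited]
        if min(counts) > flatness * sum(counts) / len(counts):
            hist = [0] * (P + 1)               # histogram flat enough: new stage
            log_f /= 2.0                       # ln f -> ln f / 2, i.e. f -> sqrt(f)
    return log_g, sorted(visited)


def normalize(log_g, levels, n_weights):
    """Shift ln g so that sum_E g(E) equals 2**n_weights, the number of weight vectors."""
    m = max(log_g[e] for e in levels)
    lse = m + math.log(sum(math.exp(log_g[e] - m) for e in levels))
    shift = n_weights * math.log(2) - lse
    return {e: log_g[e] + shift for e in levels}


# Toy problem (assumed): N = 7 binary weights, P = 5 random +/-1 patterns and labels.
rng = random.Random(1)
N, P = 7, 5
X = [[rng.choice((-1, 1)) for _ in range(N)] for _ in range(P)]
y = [rng.choice((-1, 1)) for _ in range(P)]
log_g, levels = wang_landau(X, y, N)
est = normalize(log_g, levels, N)
for E in levels:
    print(f"E = {E}: ln g = {est[E]:.2f}")
```

On a system this small the estimate can be checked against exact enumeration of all 2^N weight vectors. Because N is odd, negating w flips every prediction, so the exact g(E) is symmetric under E to P - E; the estimates should roughly share that symmetry, which makes a cheap sanity check.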

New paper with @leonlufkin.bsky.social and @eringrant.bsky.social!

Why do we see localized receptive fields so often, even in models without sparsity regularization?

We present a theory in the minimal setting from @aingrosso.bsky.social and @sebgoldt.bsky.social

13.12.2024 10:49

Excess kurtosis strikes back.

13.12.2024 08:56

MIT BCS | grad

part-time reductionist, full-time human

Computational Neuroscience Ph.D. Student @ Boston University. Labs of Dr. Brian DePasquale, Ben Scott, & Steve Ramirez. #JuliaLang stan.

I’m a postdoc @Imperial, advised by @danakarca.bsky.social and @neural-reckoning.org.

I’m passionate about brain‑inspired neural networks, focusing on delay learning in RNNs (spiking/rate) and lightweight attention mechanisms.

PhD Student at MIT Brain and Cognitive Sciences studying Computational Neuroscience / ML. Prev Yale Neuro/Stats, Meta Neuromotor Interfaces

Aspiring philosopher; tolerable human; "amusing combination of sardonic detachment & literally all the feelings felt entirely unironically all at once" [he/his]

Theoretical Neuroscience | Physics PhD candidate at the University of Ottawa 🇨🇦 | Interested in how neural networks encode information and compute | BJJ hobbyist

The Laboratory of Computational Neuroscience @EPFL studies models of neurons, networks of neurons, synaptic plasticity, and learning in the brain.

Professor, Northwestern University

Computational neuroscience | Neural manifolds

Comp Neuro PhD student @ Princeton. Visiting Scientist @ Allen Institute. MIT’24

https://minzsiure.github.io

Professor of Computational Cognitive Science | @AI_Radboud | @Iris@scholar.social on 🦣 | http://cognitionandintractability.com | she/they 🏳️‍🌈

Professor at NYU; Chief AI Scientist at Meta.

Researcher in AI, Machine Learning, Robotics, etc.

ACM Turing Award Laureate.

http://yann.lecun.com

I work at Sakana AI 🐟🐠🐡 → @sakanaai.bsky.social

https://sakana.ai/careers

Computational Neurobiologist from Sydney, Australia. https://shine-lab.org. Banner image from https://www.gregadunn.com.

Non-profit organisation - Champalimaud Centre for the Unknown

Neuroscience & Cancer Research

Science Communication & Outreach

https://www.fchampalimaud.org/champalimaud-research

Computational cognitive scientist. Perception and action are inseparably intertwined. Prof TUDarmstadt, Director Centre For Cognitive Science https://www.cogsci.tu-darmstadt.de/, Member Hessian.AI https://hessian.ai/ & ELLIS

https://www.pip.tu-darmstadt.de

Postdoctoral Researcher, working at the intersection of AI 💻 and neurosciences 🧠 @UKEHamburg

Previously @etislab and @cbcUPF

https://sites.google.com/view/raphael-bergoin/home