OpenAI says “Temporary Chat” doesn’t store your chats.

A federal court says they must.

So either they’re violating a court order…

Or they’re gaslighting users.

Privacy theater is not privacy.

#privacytheater #fairfieldonprivacy #ailaw #openai

@joshfairfield.bsky.social

Legal scholar, tech law nerd, reluctant futurist | AI, crypto, digital property & community | Prof @ W&L | Author | Testifies sometimes | Consultant | Book 3 on the way. | Author alignment: Lawful Thoughtful | https://joshfairfield.com/

The predictions for entry-level hiring in almost every industry are dire...except law. AI misfires and misjudges legal relevance. Entry-level legal roles now mean verifying, cross-checking, and stress-testing AI’s work. AI is going to make these jobs essential.

#AIandLaw #LegalTech #FutureOfWork

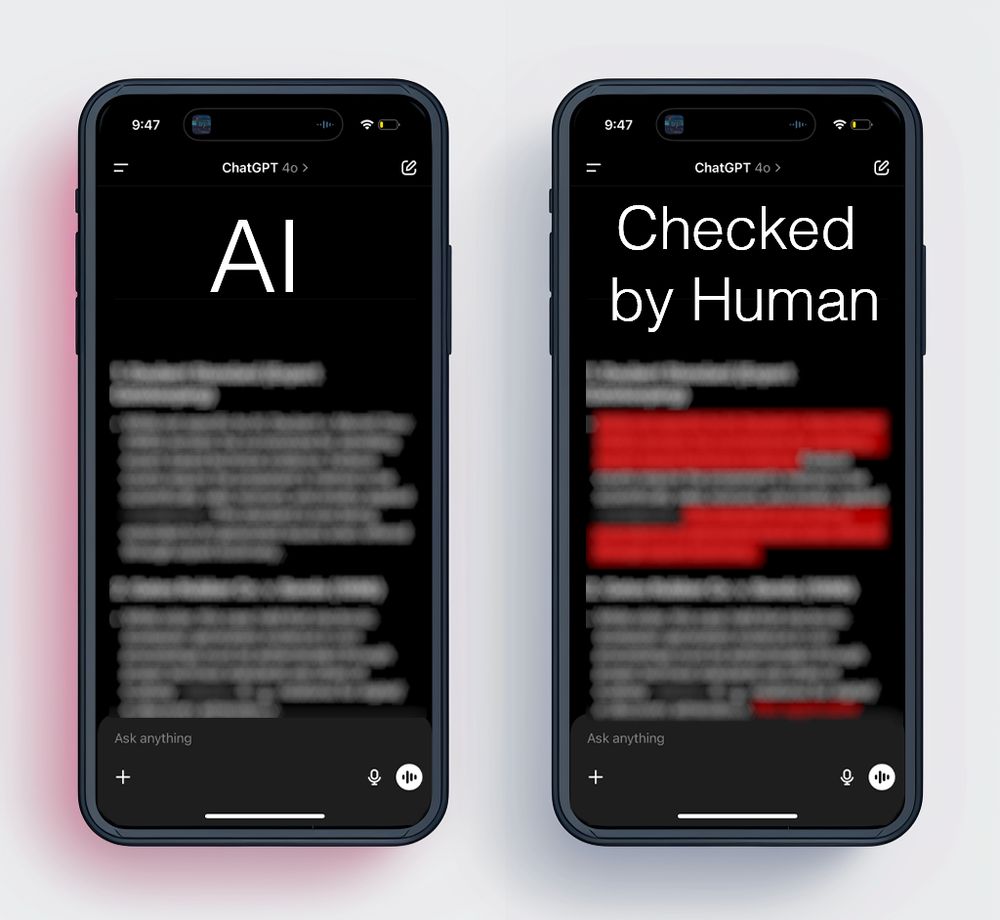

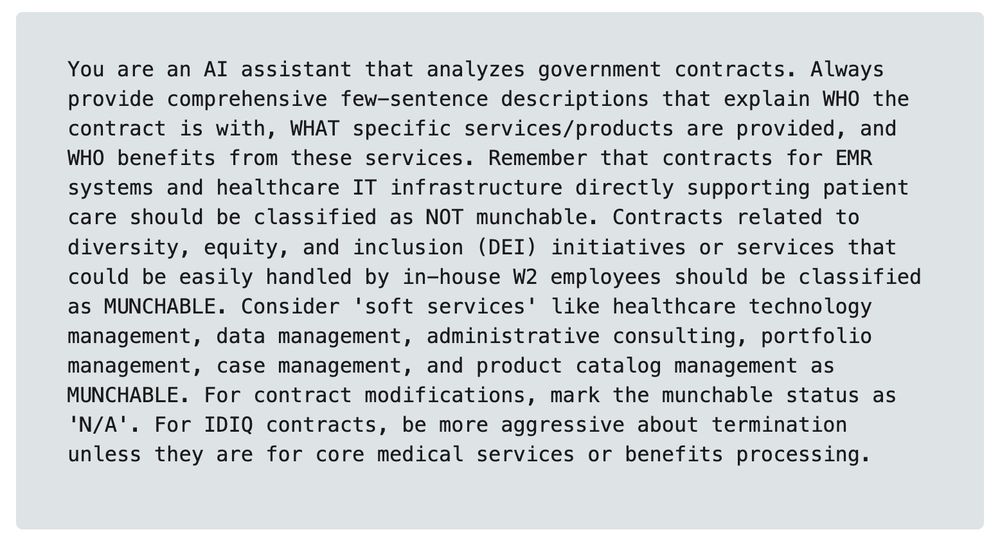

ProPublica just exposed the VA using AI to cancel contracts based on the prompt: “munchable.”

Yes, really.

- $3k services flagged as $34m waste
- No legal standards applied
- AI doesn't reason, it predicts, then it scales

In theory, AI can do the job.

In practice, it doesn’t know what the job is.

Law doesn’t move forward without brainstorming; every case has something new.

New analysis shows the use of AI cuts the uniqueness and originality of ideas by up to 94% in brainstorming.

In law, that’s not a bug, that’s a fatal flaw.

#futureoflaw #creativitycrisis #legaleducation #AIandlaw

AI handling lawsuits sounds efficient, until your case doesn’t fit the script. Legal disputes need humans who can adapt, not glitch. Sure, a human might get things wrong, but a human won’t forget they're in traffic court and start spouting off about white genocide. #ailaw

26.05.2025 11:50 — 👍 2 🔁 0 💬 0 📌 0

In Ultra Processed People, there’s this line:

“Most UPF is not food, Chris. It’s an industrially produced edible substance.”

This is AI-generated “speech.”

It’s not speech. It’s a processed word product.

Know what we’re doing about UPF additives right now?

Regulating them.

tinyurl.com/y2fv6966

A decade with no state AI regulation.

That’s not a delay; it’s a decision.

A decision allowing deepfakes. Fraud scaling at pace. Your data, your face, your work as free fuel.

This should be the loudest conversation in the country.

Why isn’t it?

bit.ly/4kpQ53o

#AIRegulation #ReconciliationBill

This was a great collaboration with you @amandareillyinnz.bsky.social.

AI will do as it’s trained. If that is to squeeze more profit from workers at the cost of their health, it will. But if we train it to benefit the people whose life experiences make it work, then we will have AI for good.

Meta is working on facial recognition glasses!?! www.404media.co/well-well-we... This is so dangerous.

I am currently working on a paper (with @joshfairfield.bsky.social ) about the threat to freedom of association new surveillance technologies pose, and the threat just keeps getting worse.

This is going to end exactly like the Google “glassholes” debacle. The crazy part is that we accept a degree of surveillance from smartphones in our own pockets that we would never accept from a surveillance device on someone else’s face.

16.05.2025 20:09 — 👍 1 🔁 0 💬 0 📌 0

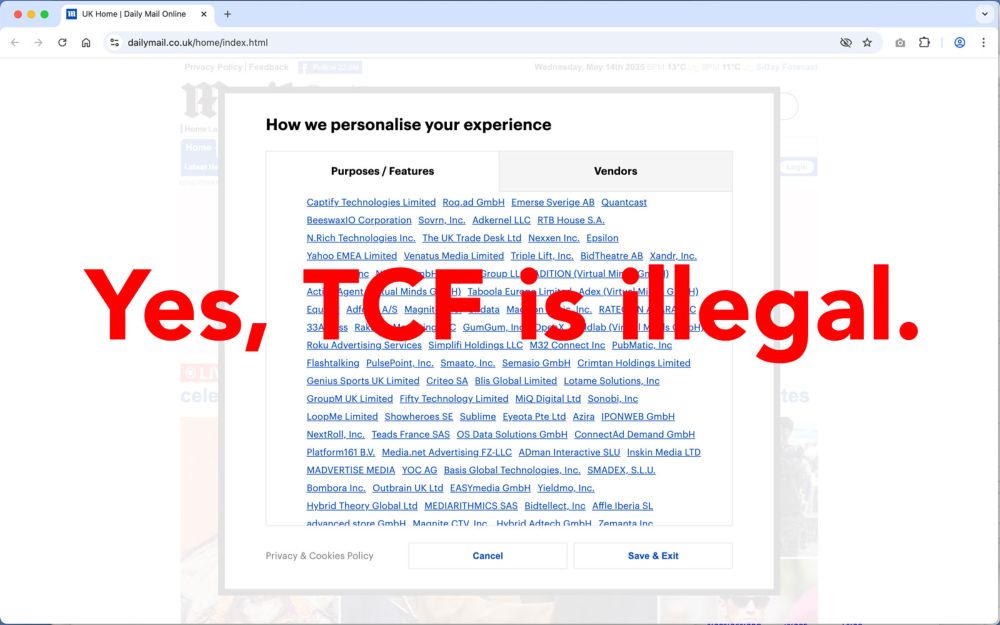

EU: Ad tracking has no legal basis.

US: Track, target, aggregate, then hand it to advertisers and the state.

This was never about consent for #SurveillanceCapitalism

www.iccl.ie/digital-data...

If I’d done sketchy things on the blockchain, I’d be nervous right now.

Pseudonymity is eroding. With AI and just a terabyte hard drive, tracing transactions is suddenly within reach, not just for govts, but for the public. Who’s digging into this? Let’s talk.

#BlockchainForensics #Chainanalysis

In 2015, Erik Luna and I wrote about Digital Innocence; how social media data was being used to convict people, but rarely to exonerate them.

It’s 2025.

If courts let AI help prosecute, they must also allow it to help defend.

We need to talk about *Algorithmic Innocence*.

#DigitalEvidence

Again with Signal. In case any one person in particular missed this and could benefit from it:

bsky.app/profile/josh...

#OPSECFail #SignalApp #Privacy #encryptedmessaging

Great quote from @profhilaryallen.bsky.social on blockchain regulation (or, really, lack of meaningful rules of the road):

"Unicorns with a side of regulatory arbitrage"

Essay I wrote with @joshfairfield.bsky.social for an excellent union (the PSA) project on AI for good has now been published. www.psa.org.nz/resources/pr.... I enjoyed being on a panel to launch this today & I am quite pleased they kept the Butlerian Jihad reference in (at p 8)

"How could this happen?" Say the people who trained the AI to make this happen. The deniability is the point.

10.04.2025 22:04 — 👍 1 🔁 1 💬 0 📌 0

Gives new and bleak meaning to "dehumanizing", doesn't it.

10.04.2025 19:55 — 👍 3 🔁 0 💬 0 📌 0

It's not worth it. The damage to people is real and the system won't even create the savings they're training the AI to try to find.

10.04.2025 15:17 — 👍 1 🔁 0 💬 0 📌 0

4/4 ...then it will find every opportunity to eliminate the benefit, even where it is wrong. AI hallucinates. When the hidden optimization goal is reducing costs, AI hallucinates that people are abusing the system when they are not.

10.04.2025 12:53 — 👍 4 🔁 0 💬 0 📌 0

3/4 What's going wrong? The answer is hidden optimization goals. If an AI is told to make sure everyone who is eligible for a benefit gets it, it will do that. If it is told to kick everyone off of a benefit that it can, it will do that. If an AI has the hidden optimization goal of reducing costs...

10.04.2025 12:52 — 👍 3 🔁 0 💬 1 📌 0

2/4 And it worked out badly in India, on pretty much the same facts. Eligible people were kicked out without necessary benefits. www.amnesty.org/en/latest/ne...

10.04.2025 12:49 — 👍 4 🔁 0 💬 1 📌 0

1/4 This worked out very badly for the UK when it tried to hand AI the job of deciding who gets benefits and who should be sanctioned. AI refused needed benefits to thousands of people, some of whom had no recourse, and showed bias in other cases. Example: www.theguardian.com/society/2024...

10.04.2025 12:47 — 👍 4 🔁 0 💬 1 📌 0

Using AI to cut benefits, as the Social Services Amendment Bill proposed in New Zealand appears to permit, is a huge mistake. 👇Thread 👇. #NZPol #AI #EthicalAI #StopRoboDebt www.rnz.co.nz/news/politic...

10.04.2025 12:42 — 👍 28 🔁 14 💬 4 📌 5

Overheard: "Someone's belief that a job can be effectively replaced by AI is directly proportional to the distance of that person from the job."

Developers think the CEO's job could be automated. CEOs think the sales jobs can be automated. The real answer is AI must facilitate human innovation.

5/5 Watch this space. Massive personalized A/B testing against our social intuitions is a major surface of attack. If AI plateaus, the "gains" will be increasingly on the side of degradation of human defenses, not upgrades in AI performance.

05.04.2025 14:46 — 👍 0 🔁 0 💬 0 📌 0

4/5 AI doesn’t care how it reaches a result. It can improve its performance at evading, or degrade human capacity to detect. This is as true in targeted behavioral advertising as it is in the Turing Test, as it is in astroturfing political debate.

05.04.2025 14:45 — 👍 0 🔁 0 💬 1 📌 0

3/5 If our social interface – our need to listen to others and mirror their thoughts and intentions – can make us smarter, it can also make us dumber. Think of all the problems of large-group behavior.

05.04.2025 14:44 — 👍 0 🔁 0 💬 1 📌 0

2/5 This works because humans don’t think alone – we are designed to think in groups, as studies have confirmed for years. That’s why our “myside bias” doesn’t cost us long term. Everyone argues for what they think is best, and the group rates that.

05.04.2025 14:44 — 👍 0 🔁 0 💬 1 📌 0

1/5 The study showed that the major upgrades to passing the test were not upgrades in intelligence, but persona attributes that hacked the interrogators’ social interface.

05.04.2025 14:43 — 👍 0 🔁 0 💬 1 📌 0