Hello 👋

10.12.2024 13:17 — 👍 0 🔁 0 💬 0 📌 0Colin Webb

@colinwebb.bsky.social

I write software - Java/Scala, Python, and Rust. Based in a village near Oxford, UK

@colinwebb.bsky.social

I write software - Java/Scala, Python, and Rust. Based in a village near Oxford, UK

Hello 👋

10.12.2024 13:17 — 👍 0 🔁 0 💬 0 📌 0I think AI tools need sandboxes, rather than allowing them full access to your computer via things like the Claude Desktop app.

🤔 brew install <87 things>

‼️ pip install <the whole world>, but without a virtualenv

I've been down this path myself. It wasn't fun.

Separating concerns - makes sense also 😀

Thanks for taking the time to reply!

www.emec.org.uk/six-tidal-st...

Looks like there's a handful now with financing.

Hopefully they'll prove successful 🤞

Does the UK have any tidal power at present?

I'm aware of many projects that never got approved, or haven't started yet... but have any been built?

Makes sense.

At some point your systems have to affect the real-world though. How does that happen if redundant systems are doing the same work? Which system takes precedence? How's that configured? Is it first-write-wins?

How do you handle resiliency in these types of systems? Do you have a favourite pattern?

05.12.2024 19:56 — 👍 1 🔁 1 💬 1 📌 0

How do you build trading systems capable of sub-microsecond latencies? What are some key principles behind deterministic microservices, event sourcing, and optimised Java architectures?

This post is based on a presentation I have on this topic.

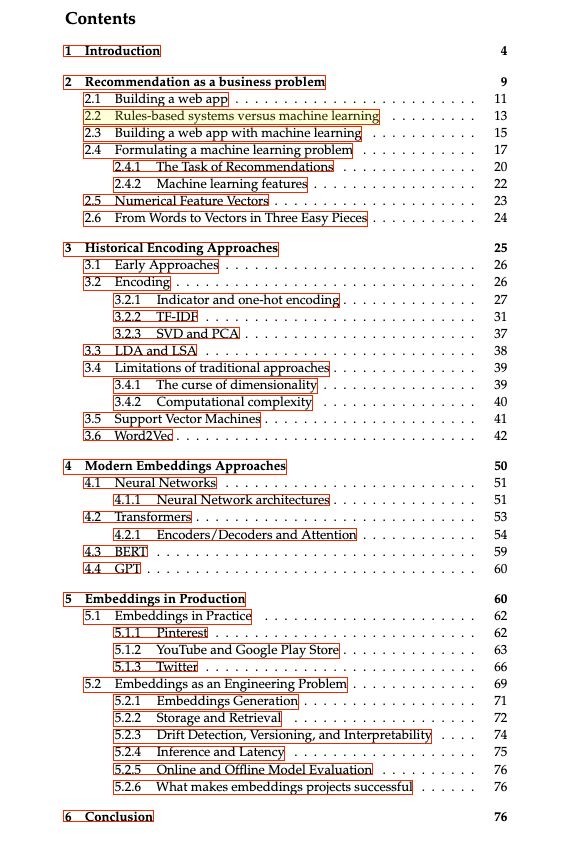

Book outline

Over the past decade, embeddings — numerical representations of machine learning features used as input to deep learning models — have become a foundational data structure in industrial machine learning systems. TF-IDF, PCA, and one-hot encoding have always been key tools in machine learning systems as ways to compress and make sense of large amounts of textual data. However, traditional approaches were limited in the amount of context they could reason about with increasing amounts of data. As the volume, velocity, and variety of data captured by modern applications has exploded, creating approaches specifically tailored to scale has become increasingly important. Google’s Word2Vec paper made an important step in moving from simple statistical representations to semantic meaning of words. The subsequent rise of the Transformer architecture and transfer learning, as well as the latest surge in generative methods has enabled the growth of embeddings as a foundational machine learning data structure. This survey paper aims to provide a deep dive into what embeddings are, their history, and usage patterns in industry.

Cover image

Just realized BlueSky allows sharing valuable stuff cause it doesn't punish links. 🤩

Let's start with "What are embeddings" by @vickiboykis.com

The book is a great summary of embeddings, from history to modern approaches.

The best part: it's free.

Link: vickiboykis.com/what_are_emb...