Dean W. Ball

@deanwb.bsky.social

Senior Policy Advisor for AI and Emerging Technology, White House Office of Science and Technology Policy | Strategic Advisor for AI, National Science Foundation https://hyperdimensional.co

There are tons of technocratic AI policy proposals, but few concrete intuitions about the near-term future to motivate them. The result is that the field sometimes feels disembodied, devoid of a concrete vision.

Here is what I think is coming.

OpenAI releasing a model spec is one of my favorite things about them. All frontier labs should be doing this. Closes the gap between intentions and results, so you can interpret them better.

openai.com/index/sharin...

Enjoy!

t.co/eQczeWf4Vl

My latest, on the AI export controls generally and the diffusion rule in particular.

This regulatory adventure, whatever you think of it on the merits, is a case study in how rules beget more rules—how regulation grows, like a plant or a weed.

Excited to launch a new podcast with @deanwb.bsky.social about AI. It's called AI Summer, and can be found on all major podcast apps. The first episode is an interview with political scientist @jonaskonas.bsky.social about AI policy in the Trump era. www.aisummer.org/p/jon-askona...

14.01.2025 18:53 — 👍 19 🔁 5 💬 0 📌 0

A little late, but--my 2025 'lookahead' post is here, covering some of the major AI products, research, and policy I'll be watching this year. I hope you enjoy!

t.co/CZdR8zjsFH

American AI policy in 2025 will almost certainly be dominated, yet again, by state legislative proposals rather than federal government proposals, writes Dean Ball.

09.01.2025 15:33 — 👍 6 🔁 7 💬 1 📌 0

Hey, it’s worked well for my specific uses (which often involve broad surveys of state policies), but I have heard lots of people saying it is mid for them. Ymmv!

09.01.2025 03:23 — 👍 1 🔁 0 💬 1 📌 0

I think it mostly wouldn’t apply to the DoD specifically, but it absolutely would apply to any DoD contractors with a presence in Texas.

05.01.2025 07:35 — 👍 3 🔁 0 💬 0 📌 0

I would say I’ve heard these points made by people from many of the most prominent think tanks in DC. Would also point you to articles like this recent one from the Wall Street Journal:

www.wsj.com/opinion/ai-c...

Link here: www.hyperdimensional.co/p/one-down-m...

19.12.2024 14:07 — 👍 3 🔁 0 💬 0 📌 0

Hyperdimensional is almost a year old! So I wrote a reflection on the first year, covering the origins of the project, SB 1047, where I erred, the impossibility of controlling technocracy, where I see the project going next, and more. Enjoy!

19.12.2024 14:07 — 👍 5 🔁 0 💬 3 📌 0

ah man, we’re fucked

14.12.2024 22:02 — 👍 1 🔁 0 💬 0 📌 0

I really wish more people criticized my own work, too.

11.12.2024 02:13 — 👍 3 🔁 0 💬 0 📌 0

“Since we necessarily underestimate our creativity, it is desirable that we underestimate to a roughly similar extent the difficulties of the tasks we face, so as to be tricked… into undertaking tasks which we can, but otherwise would not dare, tackle.”

-Albert Hirschman

Everyone I know who follows AI policy closely knows about these bills and is concerned. But there is no public outcry, like there was with SB 1047.

It’s time to change that.

One is already law (going into effect in 2026) in CO. Another will probably be law soon enough, via bureaucrat “rule making,” in CA. It has a good chance of passing next year in TX, VA, and CT. We’ll see it elsewhere, too.

06.12.2024 19:46 — 👍 0 🔁 0 💬 1 📌 0

I’m referring to a slate of civil rights-based bills we will see throughout America. They’re among the most complex AI bills with a serious chance of becoming law.

06.12.2024 19:46 — 👍 0 🔁 0 💬 1 📌 0

America is charging into an AI policy regime that will slow down adoption of AI, shrink model developers’ addressable market, and could deter frontier AI investment.

It’s one of the worst ways to regulate AI, it could become a national standard soon, and it’s under-discussed.

Please share!

05.12.2024 01:58 — 👍 0 🔁 0 💬 1 📌 0

05.12.2024 01:55 — 👍 2 🔁 0 💬 0 📌 0

If chatbots stay in their current role—assistants I use for random tasks throughout the day—then ads have a negligible effect on their utility.

If, however, chatbots become something like what the AI companies all aspire to—genuine “virtual chiefs of staff”—ads may be ruinous.

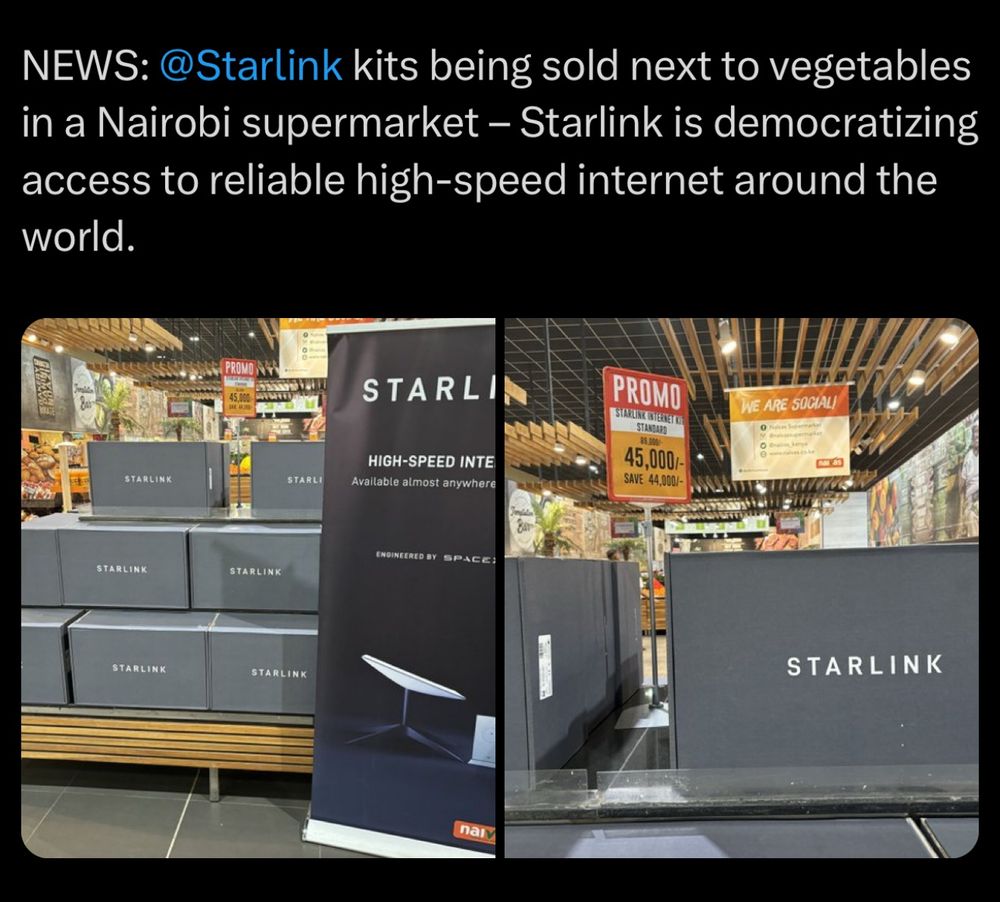

The world we live in today is already incredibly Cyberpunk, by comparison with the world of the 90s and early 2000s that I grew up in

02.12.2024 20:55 — 👍 9 🔁 1 💬 1 📌 1

Evidently, yes. There is one item from the bulleted list that we omit in that passage (discrimination).

29.11.2024 12:42 — 👍 0 🔁 0 💬 1 📌 0

The post is just that: a post summarizing my own views. And my view is that the focus on nebulous risks in the CoP, in the AI Act itself, and in various American policy documents is a foundational error. Also, I’ll just note that you are wrong: we don’t say every listed risk is easily measurable.

28.11.2024 22:14 — 👍 1 🔁 0 💬 1 📌 0

I would suggest reading the articles you’re criticizing before you criticize them, in general. Neither Miles nor I strawmanned anyone. If you think the current document is even close to well scoped, that’s fine! We just disagree.

28.11.2024 21:03 — 👍 0 🔁 0 💬 1 📌 0

I also do not think it’s right to say that the government (the people who can fine these companies billions) should “merely” tell developers to “consider” what amounts to a massive range of nebulous risks. There is nothing “mere” about that!

28.11.2024 12:11 — 👍 0 🔁 0 💬 1 📌 0

The portions we quoted and criticized are quoted directly from the piece. You can disagree with our criticism and believe these risks should be considered, but it is not a strawman to quote directly from a piece.

28.11.2024 12:09 — 👍 0 🔁 0 💬 1 📌 0