Front page of the website for the book: Practical Spiking Neural Networks

The field of #neuromorphics is lacking *accessible*, *intuitive*, and *practical* introductions. Ramashish Gaurav, Petruț Antoniu Bogdan, and I are setting out to fix this with a book on Practical Spiking Neural Networks! ✅

Any and all contributions are welcome! 💕

Early access at: snnbook.net

14.01.2026 10:36 —

One of the underrated papers this year:

"Small Batch Size Training for Language Models: When Vanilla SGD Works, and Why Gradient Accumulation Is Wasteful" (arxiv.org/abs/2507.07101)

(I can confirm this holds for RLVR, too! I have some experiments to share soon.)

29.12.2025 15:52 —

Very neat approach to mapping biological circuits to neuromorphic HW!

Had the pleasure of providing some feedback to Suraj Honnuraiah on an earlier draft of the paper, great to see it finally in print! (I actually missed that it already came out in Oct)

Link to paper:

www.pnas.org/doi/10.1073/...

11.12.2025 22:00 —

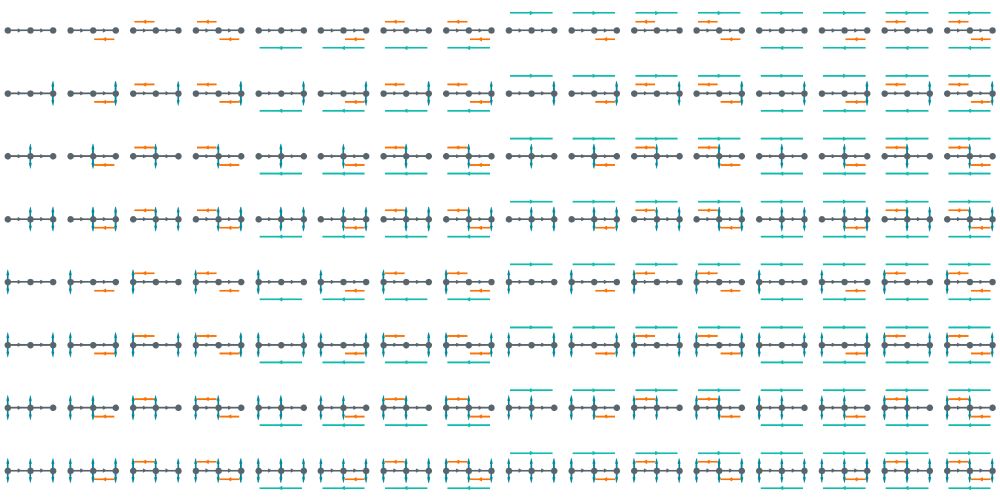

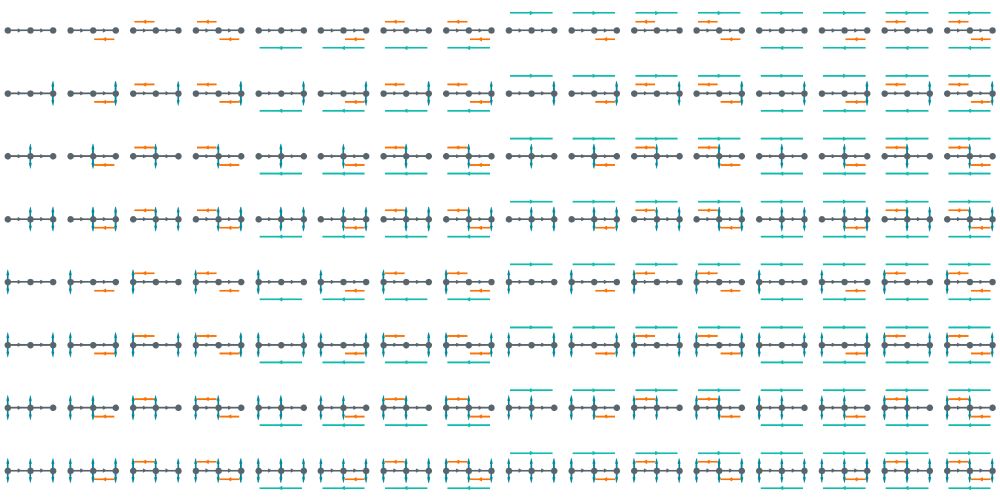

A diagram showing 128 neural network architectures.

How does the structure of a neural circuit shape its function?

@neuralreckoning.bsky.social & I explore this in our new preprint:

doi.org/10.1101/2025...

🤖🧠🧪

🧵1/9

01.08.2025 08:26 —

With Masashi launching his new lab, we’ll be recruiting a new postdoc in the Oldenburg Lab.

Work: high-precision multiphoton holography, neural coding, motor cortex circuits, all-optical physiology.

If you’re interested, just reach out.

01.12.2025 14:38 —

1/6 New preprint 🚀 How does the cortex learn to represent things and how they move without reconstructing sensory stimuli? We developed a circuit-centric recurrent predictive learning (RPL) model based on JEPAs.

🔗 doi.org/10.1101/2025...

Led by @atenagm.bsky.social @mshalvagal.bsky.social

27.11.2025 08:24 —

An old record player set by the side of the road, with a note reading: "does not work but could be fun to fix!"

When you join a lab to do some voltage imaging

26.11.2025 13:40 —

I am still able to log in fine; I can try submitting an error/bug report on your behalf.

26.11.2025 12:30 —

Maybe it's 'just' a question of figuring out how few neurons you can nudge (and which ones) to get max desynch. Anyway, definitely sounds like a cool modelling problem! 😅

26.11.2025 08:12 —

Interesting, how would one actually do this (either the isochron or the Lyapunov approach)? E.g. optogenetics seems more likely to induce synchrony.

26.11.2025 07:58 —

whereas the more global LFP (~0.1-0.5 mV amplitude) would have a much smaller direct effect (but maybe still a meaningful one). It is much harder to demonstrate such causal effects in mammals, since manipulating single neurons generally doesn't affect behavior the way it does in Drosophila.

25.11.2025 19:38 —

Yes, thanks for sharing @stevenflorek.bsky.social! This is a pretty convincing example of ephaptic coupling having a causal role (Drosophila ppl always have the coolest results!). My guess is that the key variable here is distance, e.g. many neurons might have such an effect on their immediate neighbors.

25.11.2025 19:38 —

E.g. you could argue that hippocampal theta sweeps would be completely messed up if you jittered the spikes just a little, but the underlying place field would be essentially the same.

25.11.2025 16:43 —

Yes, it's always a question of temporal resolution. Saying "spike time matters" implies that jittering spikes by ~1-2 ms would meaningfully change the computation. "Rate code" implies that the computation would only be meaningfully affected by shifting the spike times by a much larger amount (e.g. >10 ms).

25.11.2025 16:43 —
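
A toy sketch of the temporal-resolution argument in the posts above: it jitters a simulated spike train and compares a coarse "rate" readout (spike counts in 100 ms bins) with a fine "timing" readout (fraction of original spikes that still have a spike within ±1 ms). The Poisson train, 20 Hz rate, Gaussian jitter, bin width, and coincidence window are all illustrative assumptions, not taken from any study mentioned here.

```python
import bisect
import random


def poisson_spike_train(rate_hz, duration_s, rng):
    """Homogeneous Poisson process: exponential inter-spike intervals (assumed model)."""
    spikes, t = [], rng.expovariate(rate_hz)
    while t < duration_s:
        spikes.append(t)
        t += rng.expovariate(rate_hz)
    return spikes


def jitter(spikes, sigma_s, rng):
    """Shift each spike independently by Gaussian noise with std sigma_s, then re-sort."""
    return sorted(t + rng.gauss(0.0, sigma_s) for t in spikes)


def binned_counts(spikes, bin_s, duration_s):
    """Coarse 'rate' readout: spike counts per bin; spikes outside [0, duration) are dropped."""
    counts = [0] * int(round(duration_s / bin_s))
    for t in spikes:
        i = int(t // bin_s)
        if 0 <= i < len(counts):
            counts[i] += 1
    return counts


def coincidence_fraction(a, b, window_s):
    """Fine 'timing' readout: fraction of spikes in a with a spike of sorted b within +/- window_s."""
    if not a:
        return 0.0
    hits = 0
    for t in a:
        i = bisect.bisect_left(b, t - window_s)  # first spike in b at or after t - window
        if i < len(b) and b[i] <= t + window_s:
            hits += 1
    return hits / len(a)


if __name__ == "__main__":
    rng = random.Random(0)
    duration = 100.0  # seconds of simulated activity
    ref = poisson_spike_train(20.0, duration, rng)  # hypothetical 20 Hz neuron
    ref_counts = binned_counts(ref, 0.1, duration)
    for sigma in (0.002, 0.020):  # ~2 ms vs ~20 ms jitter
        jit = jitter(ref, sigma, rng)
        jit_counts = binned_counts(jit, 0.1, duration)
        rate_change = sum(abs(x - y) for x, y in zip(ref_counts, jit_counts)) / len(ref)
        timing = coincidence_fraction(ref, jit, 0.001)
        print(f"jitter {sigma * 1e3:.0f} ms: rate change {rate_change:.3f}, timing overlap {timing:.2f}")
```

With ~2 ms jitter the binned counts should barely move while the ±1 ms overlap drops sharply; at ~20 ms the coarse counts start to shift as well, roughly the >10 ms regime the post associates with a rate code.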

Since this is clearly one of your favorite topics, would you be able to point to 1-2 papers / key results that you find to be the most compelling piece(s) of evidence?

25.11.2025 13:55 —

Lots of weak, insignificant effects can be measured; showing that something matters causally is extremely difficult. The claim that spike timing matters broadly is only moderately controversial. The claim that ephaptic coupling is causal in some larger circuit computation is much more controversial.

25.11.2025 13:13 —

💯, I'm confused about what people are actually trying to claim here. Oscillations are important in the sense that *spike timing* matters. I think there is a good amount of data backing that up (e.g. HPC theta). This has nothing to do with any direct effect of the (extremely weak) LFP electric field

25.11.2025 12:56 —

supportive mentor I could have wished for, and who gave me the time and space to learn and grow, and the intellectual freedom to explore my ideas (and the occasional rabbit hole). Will miss all the wonderful colleagues I am leaving behind. Now on to new adventures, see you all back in Europe!

19.11.2025 12:26 —

Feel incredibly fortunate to have worked alongside @neurosutras.bsky.social over the last few years and proud of all that we achieved. I'm very grateful to all the people who supported my many fellowship applications over the years.

Especially grateful to Aaron, who has been the most

19.11.2025 12:26 —

Last week was my last at Rutgers University. After nearly 5 years in the US, I am moving to the Netherlands to join Innatera, a neuromorphic computing startup pushing the boundaries of what we can do with ultra-efficient hardware running SNNs for edge computing.

19.11.2025 12:26 —

There was never any point to having reference letters. That's why we've all started using AI to do this nonsense task.

References should only be used for short-listed candidates for important positions/awards, and ideally, be done via a call to get the most honest opinion possible.

14.11.2025 19:10 —

Very cool! Maybe it's just my bad intuition, but I find it surprising that weights can tolerate more extreme quantisation than delays.

14.11.2025 14:00 —

Exploiting heterogeneous delays for efficient computation in low-bit neural networks

Neural networks rely on learning synaptic weights. However, this overlooks other neural parameters that can also be learned and may be utilized by the brain. One such parameter is the delay: the brain...

Psst - neuromorphic folks. Did you know that you can solve the SHD dataset with 90% accuracy using only 22 kb of parameter memory by quantising weights and delays? Check out our preprint with @pengfei-sun.bsky.social and @danakarca.bsky.social, or read the TLDR below. 👇🤖🧠🧪 arxiv.org/abs/2510.27434

13.11.2025 17:40 —
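
A generic sketch of the memory accounting behind a figure like "22 kb of parameter memory": it uniformly quantises weights to signed n-bit integers, rounds delays to whole time steps stored as unsigned n-bit integers, and counts the bits. The quantiser, the 1 ms step, and the 700 × 64 layer are hypothetical choices for illustration only; this is not the preprint's method or architecture and does not attempt to reproduce its number.

```python
def quantise_weights(weights, n_bits):
    """Symmetric uniform quantisation to signed integers in [-q_max, q_max] (generic scheme, assumed)."""
    if n_bits < 2:
        raise ValueError("need at least 2 bits for a signed symmetric grid")
    q_max = 2 ** (n_bits - 1) - 1
    max_abs = max((abs(w) for w in weights), default=0.0) or 1.0
    scale = max_abs / q_max  # one shared float scale per tensor
    # Python's round() rounds to nearest, ties to even; clip guards against float overshoot.
    ints = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return ints, scale


def quantise_delays(delays_ms, dt_ms, n_bits):
    """Round delays to whole simulation steps and clip to an unsigned n_bits range."""
    max_steps = 2 ** n_bits - 1
    return [max(0, min(max_steps, round(d / dt_ms))) for d in delays_ms]


def parameter_memory_bits(n_weights, weight_bits, n_delays, delay_bits):
    """Storage for the integer codes only (shared scales and any biases are ignored)."""
    return n_weights * weight_bits + n_delays * delay_bits


if __name__ == "__main__":
    # Hypothetical layer: 700 input channels (as in SHD) x 64 hidden units,
    # one weight and one delay per synapse. Not the preprint's architecture.
    n_syn = 700 * 64
    for w_bits, d_bits in [(8, 8), (4, 3), (2, 2)]:
        bits = parameter_memory_bits(n_syn, w_bits, n_syn, d_bits)
        print(f"{w_bits}-bit weights, {d_bits}-bit delays: {bits / 8 / 1024:.1f} KiB")
    print(quantise_weights([0.5, -1.0, 0.25], n_bits=4))
    print(quantise_delays([0.4, 2.6, 15.0], dt_ms=1.0, n_bits=3))
```

Memory scales linearly with the bit widths, so every bit removed per synapse saves one bit per weight or delay stored.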

With my great advisors and colleagues, @achterbrain.bsky.social @zhe @danakarca.bsky.social @neural-reckoning.org, we show that if heterogeneous axonal delays (imprecise) can capture the essential temporal structure of a task, spiking networks do not need precise synaptic weights to perform well.

13.11.2025 20:51 —

It's been a pleasure and a privilege, going to really miss working with you and with the rest of the lab!

08.11.2025 17:27 —

Really enjoyed reading this short opinion piece by Tim O'Leary. I think it echoes the classic Feynman quote "What I cannot create, I do not understand." I think engineering approaches such as neuromorphic computing will prove fundamental to the scientific understanding of how biological brains work.

03.11.2025 17:00 —

Reminder this is happening this Wed/Thu. Free spiking neural network conference - registration required (see below).

03.11.2025 15:28 —

Microsoft Research Lab - New York City - Microsoft Research

Apply for a research position at Microsoft Research New York & collaborate with academia to advance economics research, prediction markets & ML.

MSR NYC is hiring senior researchers in AI, both broadly in AI/ML & in specific areas (post-training, test-time scaling, modular transfer learning, science of deep learning).

aka.ms/msrnyc-jobs

We're reviewing on a rolling basis, interviews in Nov/Dec. Please apply here: tinyurl.com/MSRNYCjob

27.10.2025 17:11 —

Ah nice, that's a good trick

21.10.2025 16:32 —
