On a Thursday your best bet is probably Silbergold or Pracht

29.09.2025 16:13 — 👍 1 🔁 0 💬 2 📌 0

Congrats!! & welcome to one of the best cities ;)

23.09.2025 16:09 — 👍 3 🔁 0 💬 1 📌 0

Bet: this flavor of same-stimulus, same-task, compare-behavior, compare-physiology is the future of model testing and theory development in neuroscience.

22.09.2025 23:29 — 👍 16 🔁 4 💬 3 📌 1

Thanks to the whole team behind Mouse vs AI:

@jingpeng.bsky.social , Yuchen Hou, Joe Canzano, @spencerlaveresmith.bsky.social & @mbeyeler.bsky.social !

A special shout-out to Joe, who designed the Unity task, trained the mice, and recorded the neural data — making this benchmark possible!

22.09.2025 23:13 — 👍 4 🔁 0 💬 0 📌 0

Submit your model. Compete against mice.

Which model architectures solve the task, and which find brain-like solutions?

Let us uncover what it takes to build robust, biologically inspired agents!

Read the whitepaper: arxiv.org/abs/2509.14446

Explore the challenge: robustforaging.github.io

22.09.2025 23:13 — 👍 4 🔁 0 💬 1 📌 0

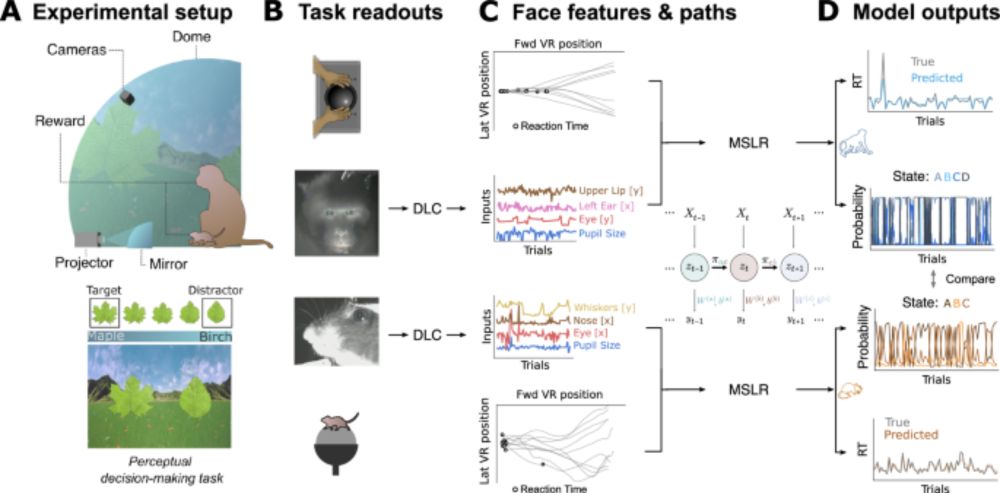

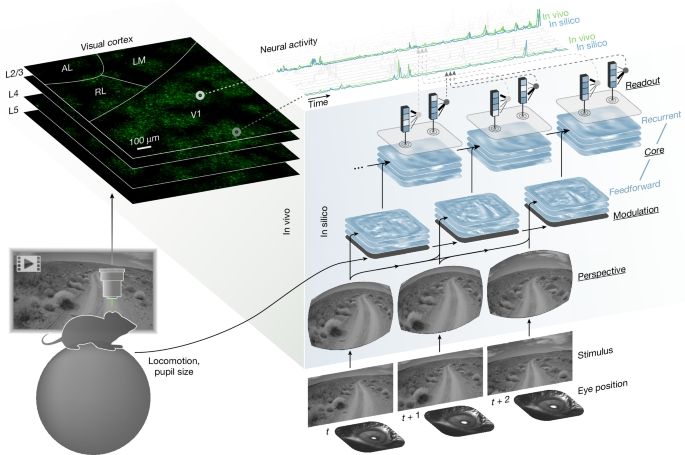

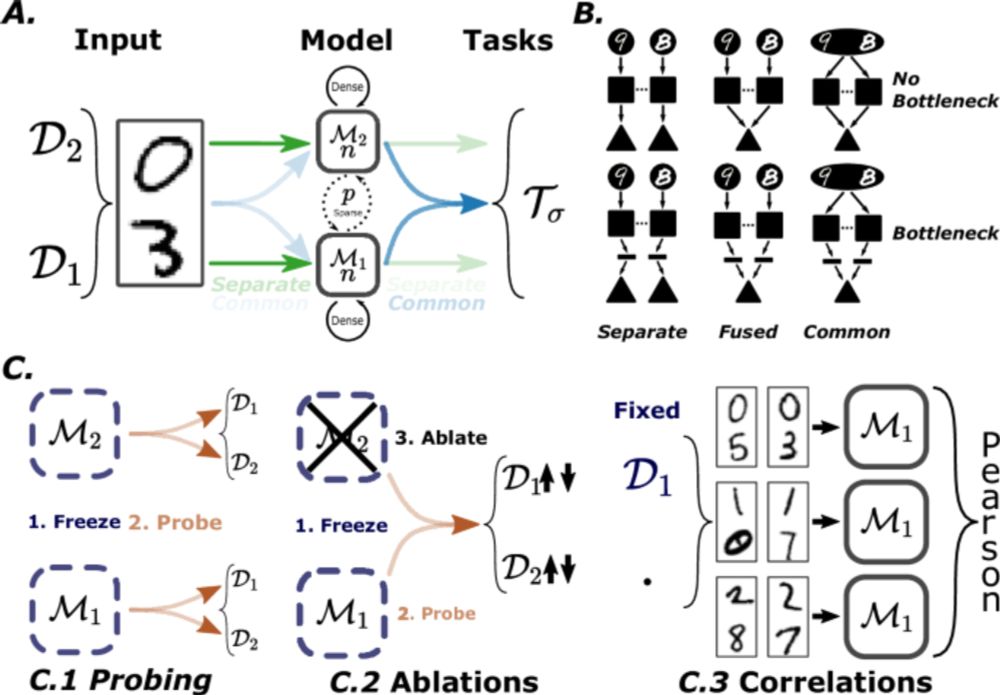

Participants submit models trained only on robust foraging.

We then regress their model activations onto state-of-the-art mesoscale, population-scale recordings that cover all mouse visual areas at once.

This lets us test whether brain-like codes emerge naturally from behavior-driven learning.

🧠 Track 2: Neural Alignment

Do task-trained agents develop brain-like internal representations?

We regress model activations onto 19k+ neurons across all mouse visual areas recorded during the same task.

This lets us test whether brain-like codes emerge naturally from behavior-driven learning.

22.09.2025 23:13 — 👍 2 🔁 0 💬 1 📌 0
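
The Track 2 idea of regressing model activations onto recorded neurons can be illustrated with a minimal sketch. This is not the challenge's actual scoring pipeline: the use of ridge regression, the per-neuron Pearson correlation score, the synthetic data, and all names (`ridge_fit`, `per_neuron_r`, `alignment`) are assumptions made for illustration only.

```python
import numpy as np

def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha*I)^-1 X^T Y."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ Y)

def per_neuron_r(Y_true, Y_pred):
    """Pearson correlation between true and predicted activity, per neuron."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))
    return (yt * yp).sum(axis=0) / denom

# Synthetic stand-ins (assumed): rows are time points or frames,
# X holds model activations, Y holds neural responses.
rng = np.random.default_rng(0)
n_train, n_test, n_units, n_neurons = 400, 100, 32, 50
W_true = rng.normal(size=(n_units, n_neurons))
X = rng.normal(size=(n_train + n_test, n_units))
Y = X @ W_true + 0.5 * rng.normal(size=(n_train + n_test, n_neurons))

# Fit on one split, score on held-out data.
X_train, X_test = X[:n_train], X[n_train:]
Y_train, Y_test = Y[:n_train], Y[n_train:]
W = ridge_fit(X_train, Y_train, alpha=1.0)
scores = per_neuron_r(Y_test, X_test @ W)
alignment = float(scores.mean())  # one summary number per model
```

Scoring on held-out data matters here: with thousands of neurons and many model units, an in-sample fit would overstate how brain-like a model is.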

Track 1 tests generalization to unseen visual perturbations and ranks models by how well they perform under them.

🧭Track 1: Visual Robustness

Track 1 evaluates how well RL agents generalize to unseen visual perturbations, like different weather or lighting changes, while performing a foraging task.

Mice perform the same task in VR—enabling direct behavioral comparisons across species.

22.09.2025 23:13 — 👍 2 🔁 0 💬 1 📌 0
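
As a rough sketch of how a Track 1 style ranking could work, the snippet below averages each model's success rate over held-out perturbation conditions and sorts models by that average. The metric, the condition names, the model names, and the numbers are all made up for illustration; the posts do not specify the challenge's actual scoring.

```python
from statistics import mean

def robustness_score(results):
    """Mean success rate across unseen perturbation conditions."""
    return mean(results.values())

def rank_models(all_results):
    """Return model names sorted from most to least robust."""
    return sorted(
        all_results,
        key=lambda name: robustness_score(all_results[name]),
        reverse=True,
    )

# Hypothetical success rates per unseen condition (illustrative only).
all_results = {
    "model_a": {"fog": 0.60, "dusk": 0.70, "rain": 0.50},
    "model_b": {"fog": 0.80, "dusk": 0.75, "rain": 0.85},
    "model_c": {"fog": 0.40, "dusk": 0.90, "rain": 0.20},
}

ranking = rank_models(all_results)
```

Averaging over conditions rewards consistent performance: a model that excels under one perturbation but collapses under the others ranks below a model that is uniformly decent.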

Train mouse and AI on a shared foraging task

We built a naturalistic Unity world (not blocky or toy-like) and trained mice to perform a foraging task in it.

Now it’s your turn: train RL agents on the same task and submit your model!

22.09.2025 23:13 — 👍 3 🔁 0 💬 1 📌 0

An illustration of a mouse on a ball looking at a field of grass displayed on monitors.

Can your AI beat a mouse?

Mice still outperform our best computing machines in some ways. One way is robust visual processing.

10 years ago I decided to work on HARD behavior driven by COMPLEX visual processing. And that risk is paying off now. #neuroAI 1/n

10.07.2025 12:22 — 👍 45 🔁 16 💬 1 📌 3

#Imbizo - Simons Computational Neuroscience Imbizo - #Imbizo

Simons Computational Neuroscience Imbizo summer school in Cape Town, South Africa

Here is your last reminder that the application deadline for Imbizo.Africa is approaching quickly: the 1st of July, which is in fact tomorrow. Still the place where diversity is at its best in the world! Tell all who need to hear. #africa #neuro

30.06.2025 19:53 — 👍 20 🔁 17 💬 0 📌 0

A Brief History of Young People Today Don't Want to Work

🧵

27.05.2025 22:01 — 👍 1355 🔁 574 💬 50 📌 81

Highly recommended for all neuroscientists who want to learn more about computational neuroscience in a supportive community!

09.05.2025 19:30 — 👍 2 🔁 1 💬 0 📌 0

Re-posting is appreciated: We have a fully funded PhD position in CMC lab @cmc-lab.bsky.social (at @tudresden_de). You can use forms.gle/qiAv5NZ871kv... to send your application and find more information. Deadline is April 30. Find more about CMC lab: cmclab.org and email me if you have questions.

20.02.2025 14:50 — 👍 77 🔁 89 💬 3 📌 8

Top-down feedback matters: Functional impact of brainlike connectivity motifs on audiovisual integration

Top-down feedback is ubiquitous in the brain and computationally distinct, but rarely modeled in deep neural networks. What happens when a DNN has biologically-inspired top-down feedback? 🧠📈

Our new paper explores this: elifesciences.org/reviewed-pre...

15.04.2025 20:11 — 👍 106 🔁 34 💬 3 📌 1

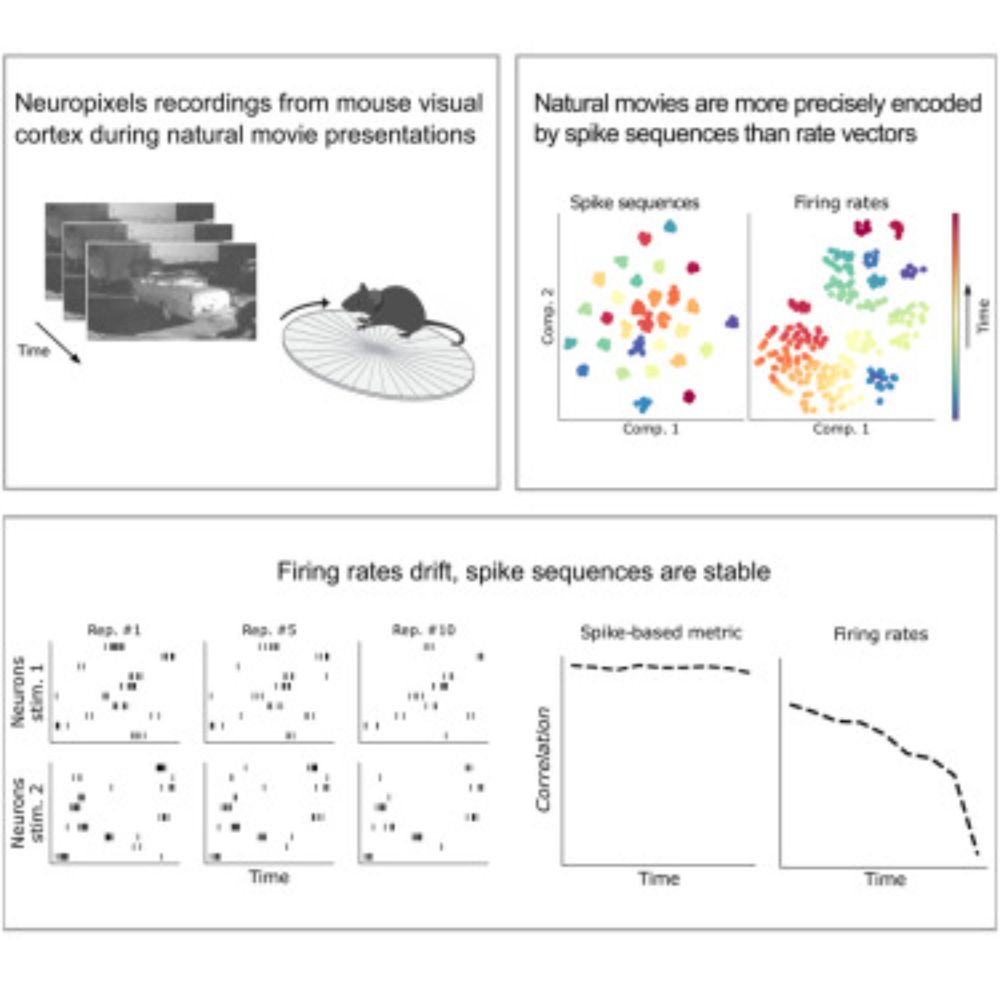

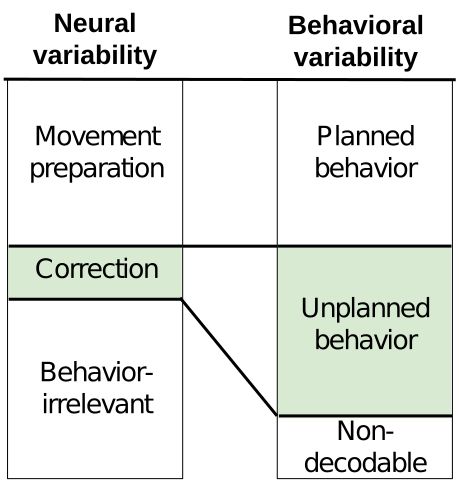

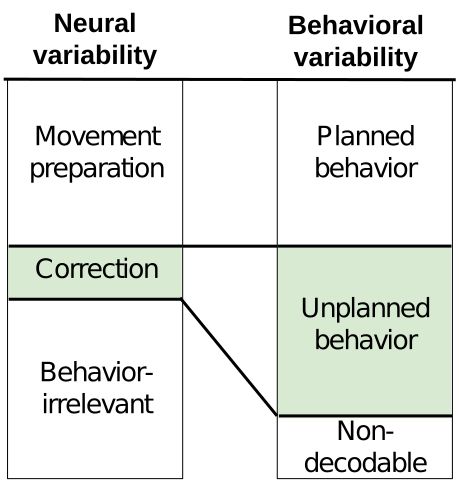

Excited to share our new pre-print on bioRxiv, in which we reveal that feedback-driven motor corrections are encoded in small, previously missed neural signals.

07.04.2025 14:54 — 👍 25 🔁 16 💬 1 📌 1

Excited for the poster session at #Cosyne tonight!

Come check out the two posters I am involved in—one on neural representations and generalization in mice & RL agents trained on the same foraging task, and another on selective attention in spiking networks.

27.03.2025 21:17 — 👍 2 🔁 0 💬 0 📌 0

Party poster for dance party on final night of Cosyne 2025 workshops. It will take place April 1st, 2025, 10PM to 3AM at Le P'tit Caribou.

Coming to the #Cosyne2025 workshops? Wanna dance on the final night? We got you covered.

@glajoie.bsky.social and I have organized a party in Tremblant. Come and get on the dance floor y'all. 🕺

April 1st

10PM-3AM

Location: Le P'tit Caribou

DJs Mat Moebius, Xanarelle, and Prosocial

Please share!

24.03.2025 17:37 — 👍 43 🔁 10 💬 2 📌 1

🧵 time!

1/15

Why are CNNs so good at predicting neural responses in the primate visual system? Is it their design (architecture) or learning (training)? And does this change along the visual hierarchy?

🧠🤖

🧠📈

13.03.2025 21:32 — 👍 34 🔁 7 💬 3 📌 0

Why academia is sleepwalking into self-destruction. My editorial in @brain1878.bsky.social. If you agree with the sentiments, please repost. It's important for all our sakes to stop the madness.

academic.oup.com/brain/articl...

06.03.2025 19:15 — 👍 537 🔁 309 💬 51 📌 104

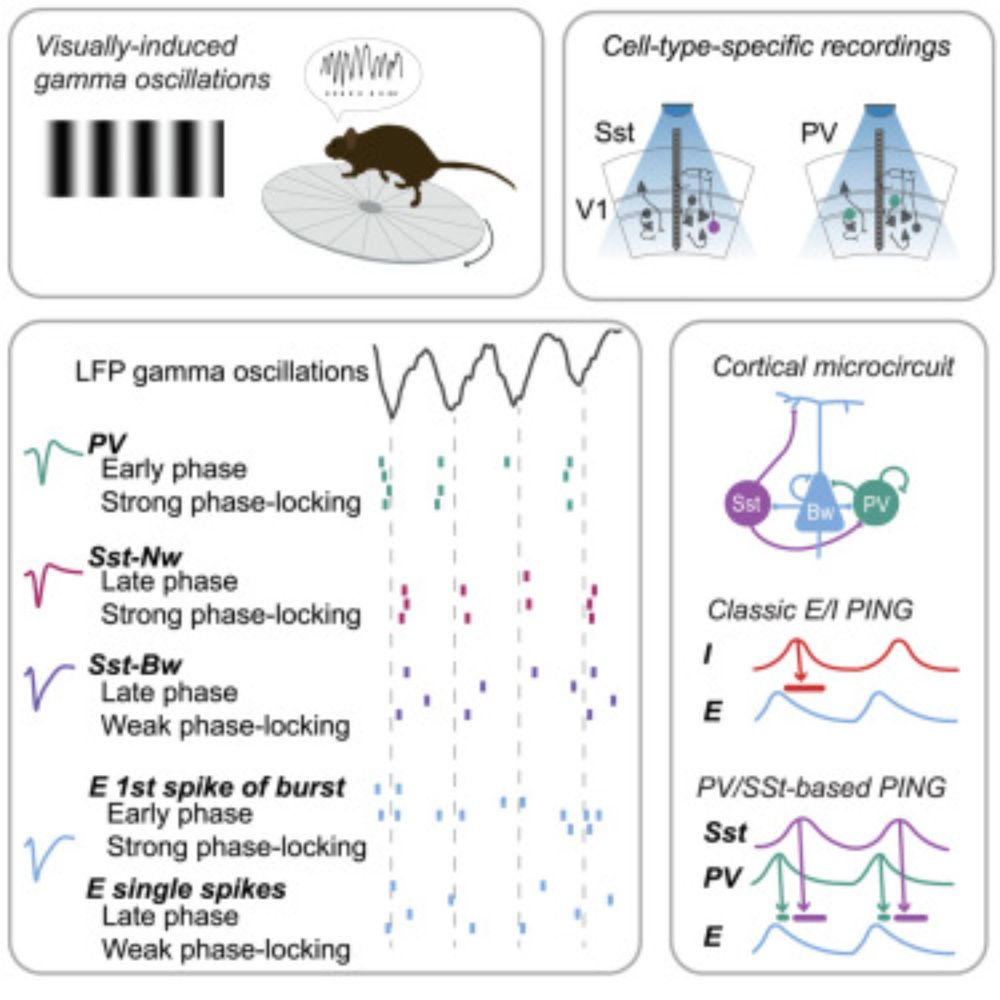

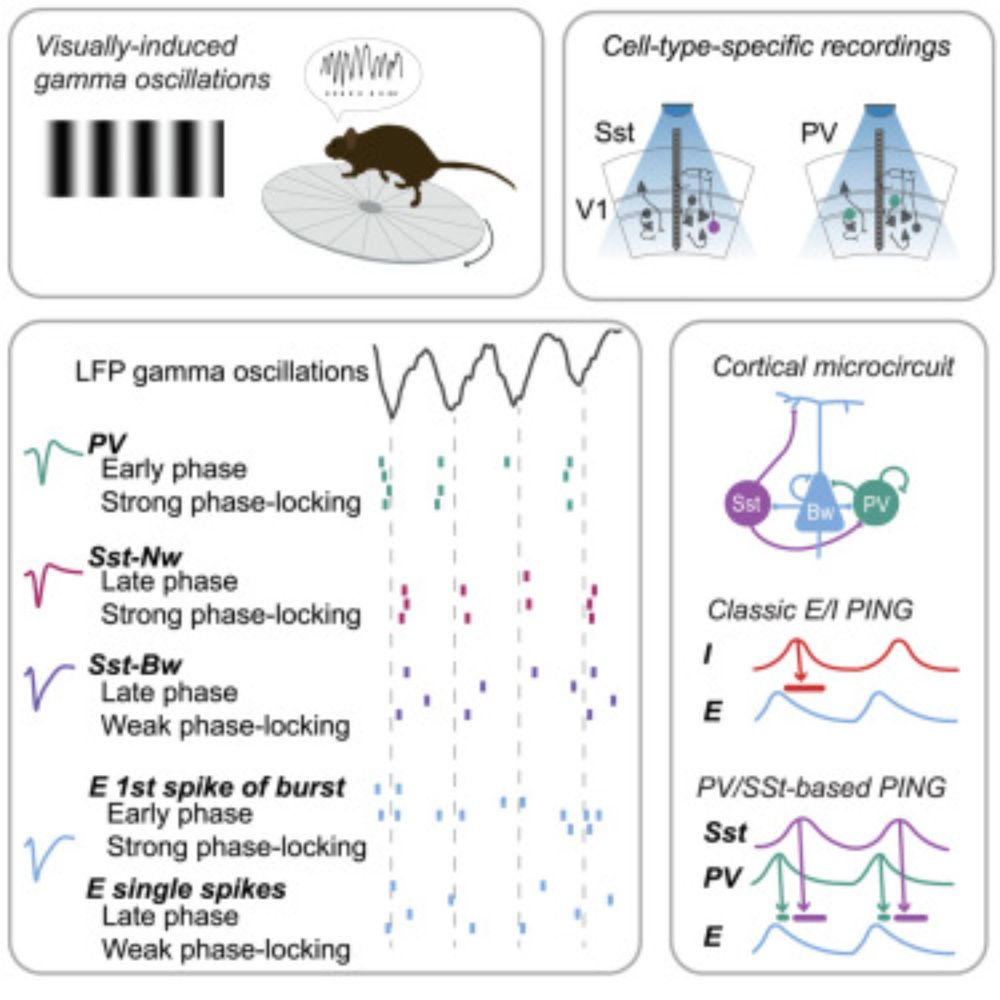

Distinct roles of PV and Sst interneurons in visually induced gamma oscillations

Gamma-frequency oscillations are a hallmark of active information processing and are generated by interactions between excitatory and inhibitory neuro…

Happy to see this study led by Irene Onorato finally out - we show distinct phase locking and spike timing of optotagged PV cells and Sst interneuron subtypes during gamma oscillations in mouse visual cortex, suggesting an update to the classic PING model www.sciencedirect.com/science/arti...

06.03.2025 22:22 — 👍 35 🔁 13 💬 1 📌 0

🚨Preprint Alert

New work with @martinavinck.bsky.social

We elucidate the architectural bias that enables CNNs to predict early visual cortex responses in macaques and humans even without optimization of convolutional kernels.

🧠🤖

🧠📈

08.02.2025 15:06 — 👍 29 🔁 10 💬 1 📌 1

Spiros Chavlis and I are very excited to share our latest #dendritic ANN research published in Nature Comms. @natureportfolio.bsky.social. We show that adopting the structure and sparse sampling features of biological #dendrites makes ANNs accurate, highly efficient and robust to overfitting.

25.01.2025 14:04 — 👍 42 🔁 11 💬 0 📌 2

It’s shocking how much they’ve all decided to bow down and kiss the ring. Certainly, it has made it clear that if things get really bad, they will do nothing other than protect their own financial interests.

24.01.2025 14:33 — 👍 13 🔁 1 💬 3 📌 0

Serene, empty lecture hall with assigned seats (dark table-clothed desks with named mugs for every participant; white plastic chairs) that looks out onto a landscape of dunes and summery beaches, waiting to be filled with life by the next class of Imbizo.

... and so it begins again.🧠🌴🌊🌍 Imbizo.africa is on its way, ... without me 😥, but look! at the views through those windows.

I wanna go and learn about all things neuro at one of the most beautiful spots in the world. Don't you, too?

13.01.2025 12:44 — 👍 23 🔁 3 💬 2 📌 0

Prof.@AGH_Krakow. Intersecting #neuroscience/#AI and #impact #social development.

#innovation #globalhealth All links: http://linktr.ee/alecrimi

Neuroscientist. Interested in activity patterns in the cortex, unaffordable guitars and Mexican food. Worked at Yale University, University of Amsterdam and ESPCI Paris

Postdoc in comp neuro @BrownU, interested in biophysical modeling, deep learning, and open-source software development

Study pain and emotions using fMRI and AI; PI of the Cocoan lab, SKKU & IBS Center for Neuroscience Imaging Research (CNIR)

Lab: https://cocoanlab.github.io/

Lab instagram: https://www.instagram.com/cocoanlab/

Circuits, Manifolds & Development 🐭

Postdoc Fellow in the Moser Lab

~ Developmental Systems Neuroscience ~

Computational Machinery of Cognition (CMC) lab focuses on understanding the computational and neural machinery of goal-driven behavior | PI: @neuroprinciplist.bsky.social | https://shervinsafavi.github.io/cmclab/

Postdoc @harvard.edu @kempnerinstitute.bsky.social

Homepage: http://satpreetsingh.github.io

Twitter: https://x.com/tweetsatpreet

Assistant Professor at UCLA | Alum @MIT @Princeton @UC Berkeley | AI+Cognitive Science+Climate Policy | https://ucla-cocopol.github.io/

Mathematics/Music Composition Undergrad at Soochow Univ. in Taiwan

Computational/Theoretical Neuroscience RA at Academia Sinica

Interested in the interplay between memory and mental simulation, DeepRL & Philosophy of Neuroscience!!

Ex-Esports Coach (Apex)

asst prof @Stanford linguistics | director of social interaction lab 🌱 | bluskies about computational cognitive science & language

Associate Research Scientist at Center for Theoretical Neuroscience @cu_neurotheory @ZuckermanBrain @Columbia K99-R00 scholar @NIH @NatEyeInstitute

https://toosi.github.io/

In discussions with neuroscientists it is obvious they think I am a fool of some kind. I hope a day will come that they will realize that they were foolish all the way.

https://www.researchgate.net/profile/Nj-Mol/research

At the junction of rationality and hypocrisy

For legal purposes, the views expressed here aren't mine.

spatial and abstract navigation @cimecunitrento.bsky.social

Assistant prof at Baylor College of Medicine. Sensory circuits & brain state modulation. Imaging, patching, and pupils. Kiddo dad. Opinions are my own. (he/him)

☀️ 🧠🧘‍♀️ 💪✨🧗‍♀️ 🐱

PhD student at Human Emotional Systems Lab 🙂🙃 @ Turku PET Center

I am a postdoc in computational neuroscience, with my PhD in statistical physics.

I am into music and theater.

Neuroscience to improve the human condition

Science Events helps you organise a scientific conference or workshop. Use our platform to organise your next event! Visit our platform at https://www.sci.events

Neuroscientist at University College London