Helps you more quickly understand, ask questions, and get to the parts of the original content that you are looking to understand. Would love to hear what you think!

Read more: medium.com/people-ai-re...

GitHub: github.com/PAIR-code/lumi

@iislucas.bsky.social

Machine learning, interpretability, visualization, Language Models, People+AI research

New open-source AI-assisted reading experience we built for arXiv papers: lumi.withgoogle.com

01.10.2025 15:55 — 👍 1 🔁 0 💬 1 📌 0

An image with the Vancouver skyline and the words "sign up to review". At the top are the logos of both the Actionable Interpretability workshop (a magnifying glass) and the ICML conference (a brain).

🚨 We're looking for more reviewers for the workshop!

📆 Review period: May 24-June 7

If you're passionate about making interpretability useful and want to help shape the conversation, we'd love your input.

💡🔍 Self-nominate here:

docs.google.com/forms/d/e/1F...

Take a look at some initial research projects, and see if there's one you'd like to work on:

github.com/ARBORproject...

Or propose your own idea! There are many ways to contribute, and we welcome all of them.

Great thread describing the new ARBOR open interpretability project, which has some fascinating projects already. Take a look!

20.02.2025 22:50 — 👍 8 🔁 2 💬 0 📌 0

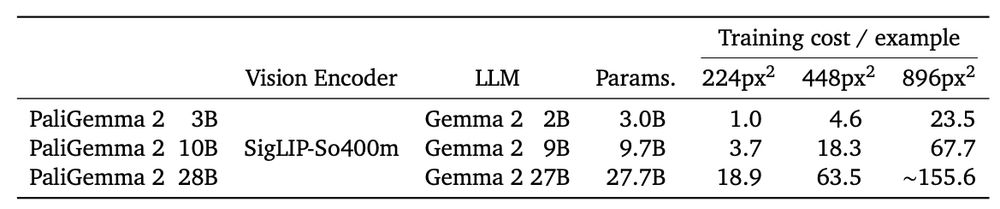

Looking for a small or medium sized VLM? PaliGemma 2 spans more than 150x of compute!

Not sure yet if you want to invest the time 🪄finetuning🪄 on your data? Give it a try with our ready-to-use "mix" checkpoints:

🤗 huggingface.co/blog/paligem...

🎤 developers.googleblog.com/en/introduci...

In December, I posted about our new paper on mastering board games using internal + external planning. 👇

Here's a talk about it, now on YouTube, given by my awesome colleague John Schultz!

www.youtube.com/watch?v=JyxE...

Yeah, I'm skeptical of how good LLMs alone can be, but when they get to use existing search-based theorem provers and lookup tools (SAT, induction provers, etc.), I would expect them to do a good deal better w.r.t. gap sizes and the ability to find counterexamples.

09.01.2025 08:07 — 👍 9 🔁 0 💬 0 📌 0

I have a hope that modern AI/LLMs might help here: by helping translate informal papers to formal mathematical statements, and informal proof to formal proof, and thereby help highlight gaps and help find counterexamples. A few others are interested in this... @wattenberg.bsky.social maybe?

02.01.2025 11:33 — 👍 12 🔁 0 💬 2 📌 0

Love the idea! Are there any stats / evals for it? And how does one get to play with it? :)

21.12.2024 09:49 — 👍 3 🔁 0 💬 0 📌 0

Google Research Scholar programme applications are open until 27th Jan. The programme supports early-career professors (who received their PhD within seven years of submission).

research.google/programs-and...

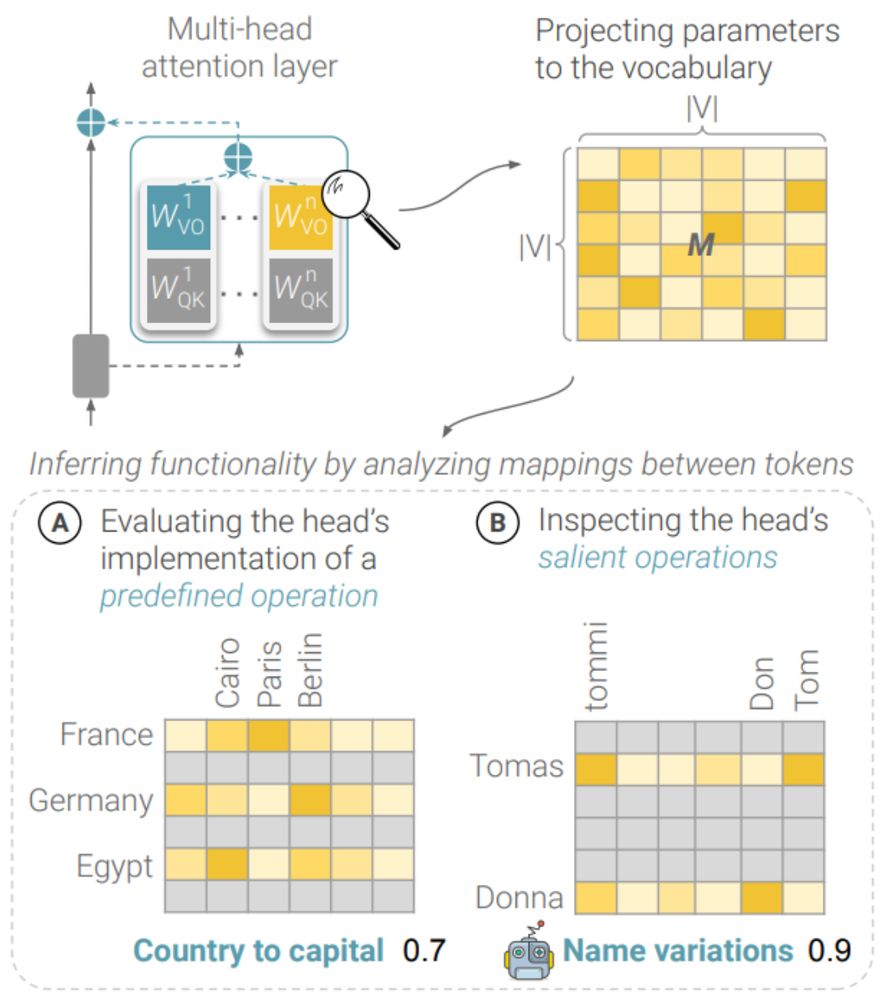

What's in an attention head? 🤯

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)

We scaled training data attribution (TDA) methods ~1000x to find influential pretraining examples for thousands of queries in an 8B-parameter LLM over the entire 160B-token C4 corpus!

medium.com/people-ai-re...

I think this (LLMs make writing small scripts super easy) gets more profound again when we start to make lots of tiny voice-powered apps/agents... would love to see more prototyping tools for this and play with them. Send me pointers!

11.12.2024 17:49 — 👍 3 🔁 0 💬 0 📌 0

Option 1.

11.12.2024 00:35 — 👍 0 🔁 0 💬 0 📌 0

I just wish search was semantic instead of substring! Still fun to see and explore! Btw - which embedding model did you use and what input text per paper?

11.12.2024 00:33 — 👍 1 🔁 0 💬 1 📌 0

That's neat! Did you also try a few other styles/mood boards?

30.11.2024 08:45 — 👍 0 🔁 0 💬 0 📌 0

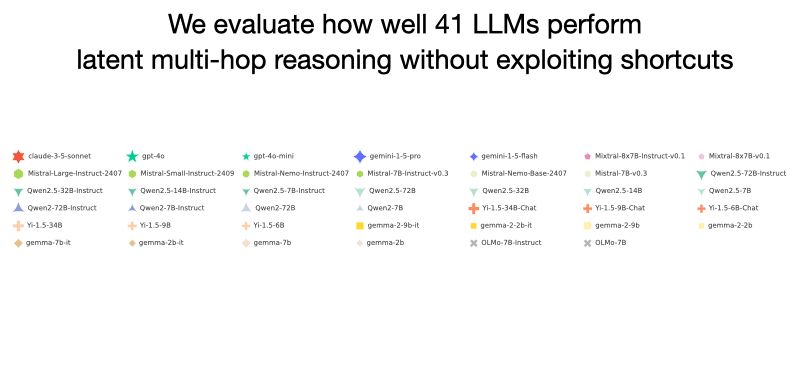

🚨 New Paper 🚨

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts that were not seen together in training, without guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N

arxiv.org/abs/2405.14838: using a supervised learning curriculum that incrementally eliminates the start of a CoT, they are able to train gpt2-small to do 9-digit multiplication without CoT; a fascinating and impressive result!

29.11.2024 09:27 — 👍 2 🔁 0 💬 0 📌 0

I'm learning a lot from this visually rich blog post. Also, I'm charmed by the rotating list of equal-contribution authors. Good knights-of-the-round-table energy!

dl.heeere.com/conditional-...

I want to describe my experience of coding with AI, because it seems to differ from other people's expectations. Earlier this morning, I saw a beautiful image here, based on roots of polynomials: bsky.app/profile/scon...

I wanted to try this idea myself, but with animation in a JavaScript context!

It's so beautiful to see this kind of fluid interaction with huge data!

21.11.2024 18:07 — 👍 16 🔁 2 💬 1 📌 0

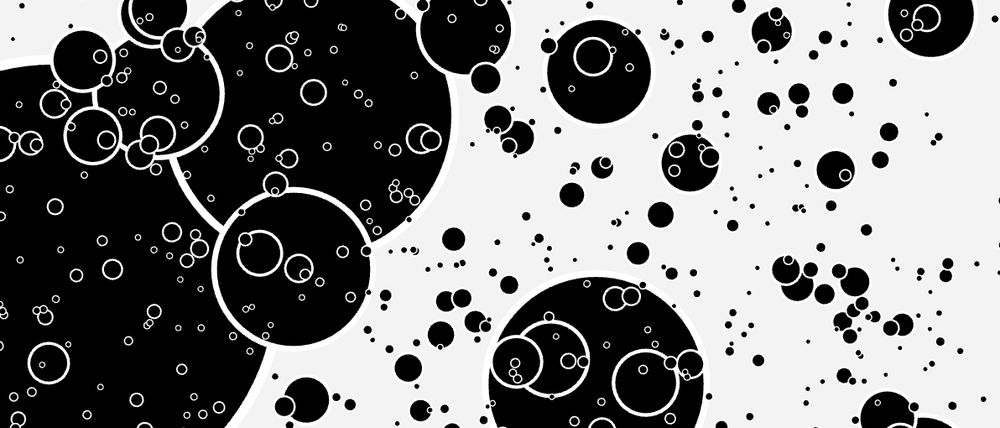

Many circles of different sizes, representing a visualization of inequality

The Gini coefficient is the standard way to measure inequality, but what does it mean, concretely? I made a little visualization to build intuition:

www.bewitched.com/demo/gini
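
For a concrete feel of the number itself, here is a minimal Python sketch. It assumes the standard definition (half the mean absolute difference between all pairs, divided by the mean), computed with the equivalent sorted-rank formula. The function name `gini` and the sample values are illustrative only and are not taken from the visualization.

```python
def gini(values):
    """Gini coefficient of non-negative values.

    0 means everyone has the same amount; values near 1 mean
    almost everything is held by a single member.
    """
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0  # convention chosen for this sketch: no data or no wealth -> 0
    # Sorted-rank form: G = sum_i (2i - n - 1) * x_i / (n * sum(x)), i = 1..n
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (n * total)


print(gini([1, 1, 1, 1]))   # 0.0  (perfect equality)
print(gini([1, 2, 3, 4]))   # 0.25
print(gini([0, 0, 0, 10]))  # 0.75 (one of four holds everything)
```

Note that for a finite group of n members the maximum is (n - 1) / n, which is why the last example gives 0.75 rather than 1.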

A meditative toy, visualizing Jupiter's Galilean moons:

www.bewitched.com/demo/jupiter/

And here is the context page for the DeepMind student researcher programme: deepmind.google/about/studen...

21.11.2024 09:50 — 👍 0 🔁 0 💬 0 📌 0

People can now apply for Student Researcher roles (basically a kind of internship) at Google/DeepMind (until Dec 13)

www.google.com/about/career...

Neat! Are there any human-eval results comparing the output w.r.t. things like hallucinations and human enjoyment? I'd love to see evals here!

30.10.2024 09:51 — 👍 0 🔁 0 💬 0 📌 0