Check out Wenxuan's work on cost-uncertainty tradeoffs in agents!

Providing different info to the model (here, estimates of its uncertainty) triggers a really different type of reasoning.

Agent behavior under a harness reflects what info is included, not just the instructions.

23.02.2026 16:17 — 👍 0 🔁 0 💬 0 📌 0

Still accepting applications for this postdoc position in my lab at NYU! Applications due Feb 1. Please see the posting below for more information and apply on Interfolio:

cims.nyu.edu/taur/postdoc...

apply.interfolio.com/178940

12.01.2026 15:14 — 👍 4 🔁 3 💬 0 📌 0

Submit to COLM! Deadline of March 31. This llama gets to enjoy his holidays and isn't stressed out just yet...

16.12.2025 15:36 — 👍 8 🔁 1 💬 0 📌 0

Sorry, no, it's an in-person full-time role.

03.12.2025 01:45 — 👍 0 🔁 0 💬 1 📌 0

Hiring researchers & engineers to work on:

–building reliable software on top of unreliable LLM primitives

–statistical evaluation of real-world deployments of LLM-based systems

I’m speaking about this on two NeurIPS workshop panels:

🗓️Saturday – Reliable ML Workshop

🗓️Sunday – LLM Evaluation Workshop

02.12.2025 14:55 — 👍 19 🔁 5 💬 2 📌 0

More details here:

cims.nyu.edu/taur/postdoc...

Interfolio link to apply coming soon! Feel free to email me in the meantime following the instructions there.

02.12.2025 16:05 — 👍 1 🔁 0 💬 0 📌 0

📢 Postdoc position 📢

I’m recruiting a postdoc for my lab at NYU! Topics include LM reasoning, creativity, limitations of scaling, AI for science, & more! Apply by Feb 1.

(Different from NYU Faculty Fellows, which are also great but less connected to my lab.)

Link in 🧵

02.12.2025 16:04 — 👍 21 🔁 12 💬 2 📌 1

Faculty Opportunities at TTIC

Two brief advertisements!

TTIC is recruiting both tenure-track and research assistant professors: ttic.edu/faculty-hiri...

NYU is recruiting faculty fellows: apply.interfolio.com/174686

Happy to chat with anyone considering either of these options

23.10.2025 13:57 — 👍 8 🔁 6 💬 0 📌 0

Unfortunately I won't be at #COLM2025 this week, but please check out our work being presented by my collaborators/advisors!

If you are interested in evals of open-ended tasks/creativity please reach out and we can schedule a chat! :)

08.10.2025 00:19 — 👍 4 🔁 1 💬 0 📌 0

Find my students and collaborators at COLM this week!

Tuesday morning: @juand-r.bsky.social and @ramyanamuduri.bsky.social 's papers (find them if you missed it!)

Wednesday pm: @manyawadhwa.bsky.social 's EvalAgent

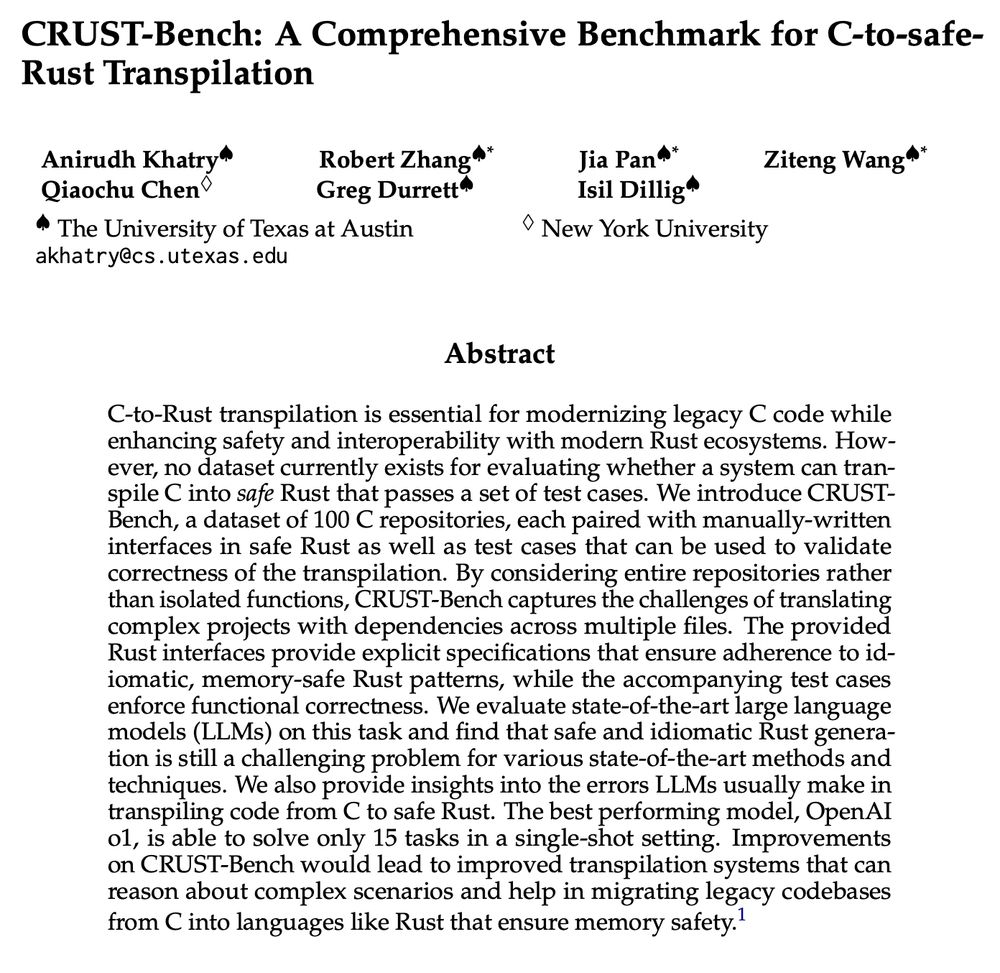

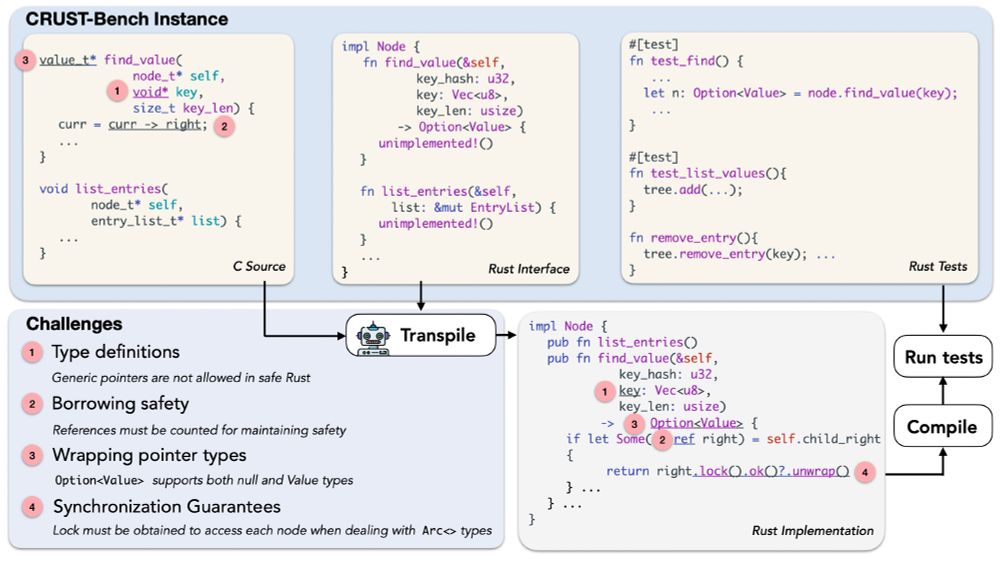

Thursday am: @anirudhkhatry.bsky.social 's CRUST-Bench oral spotlight + poster

07.10.2025 18:03 — 👍 9 🔁 5 💬 0 📌 1

Excited to present this at #COLM2025 tomorrow! (Tuesday, 11:00 AM poster session)

06.10.2025 20:40 — 👍 10 🔁 4 💬 0 📌 0

Check out this feature about AstroVisBench, our upcoming NeurIPS D&B paper about code workflows and visualization in the astronomy domain! Great testbed for the interaction of code + VLM reasoning models.

25.09.2025 20:43 — 👍 2 🔁 0 💬 0 📌 0

Picture of the UT Tower taken by me on my first day at UT as a postdoc in 2023!

News🗞️

I will return to UT Austin as an Assistant Professor of Linguistics this fall, and join its vibrant community of Computational Linguists, NLPers, and Cognitive Scientists!🤘

Excited to develop ideas about linguistic and conceptual generalization (recruitment details soon!)

02.06.2025 13:18 — 👍 66 🔁 8 💬 12 📌 2

Great to work on this benchmark with astronomers in our NSF-Simons CosmicAI institute! What I like about it:

(1) focus on data processing & visualization, a "bite-sized" AI4Sci task (not automating all of research)

(2) eval with VLM-as-a-judge (possible with strong, modern VLMs)

02.06.2025 15:49 — 👍 6 🔁 0 💬 0 📌 0

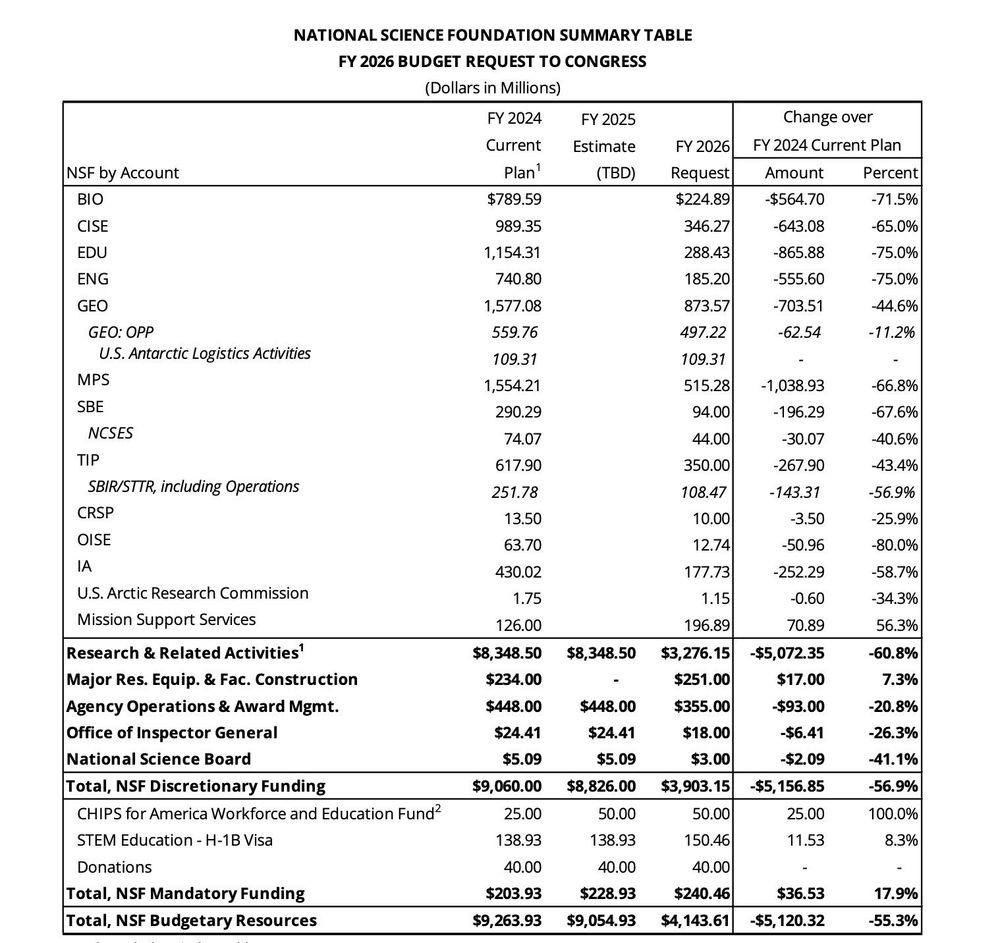

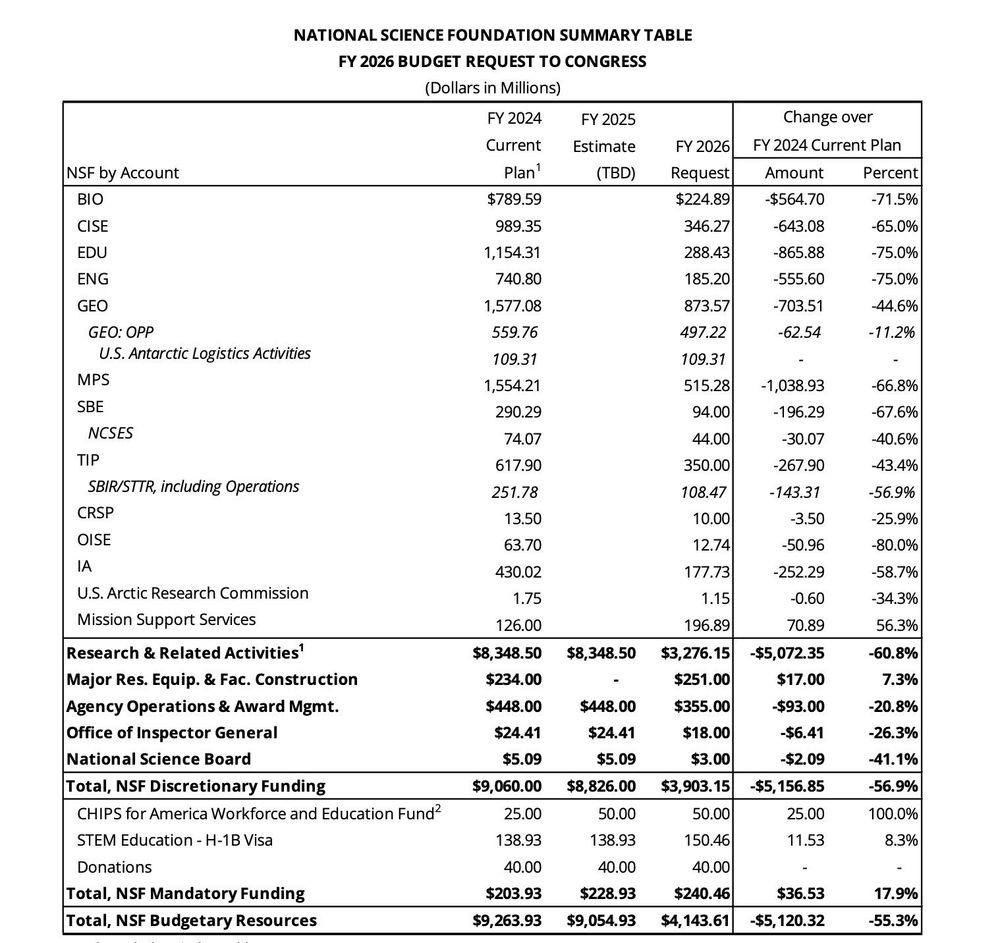

The end of US leadership in science, technology, and innovation.

All in one little table.

A tremendous gift to China, courtesy of the GOP.

nsf-gov-resources.nsf.gov/files/00-NSF...

30.05.2025 21:26 — 👍 1053 🔁 421 💬 37 📌 28

What would truly open-source AI look like? Not just open weights, open code/data, but *open development*, where the entire research and development process is public *and* anyone can contribute. We built Marin, an open lab, to fulfill this vision: https://t.co/racsvmhyA3

Super excited Marin is finally out! Come see what we've been building! Code/platform for training fully reproducible models end-to-end, from data to evals. Plus a new high-quality 8B base model. Percy did a good job explaining it on the other place. marin.community

x.com/percyliang/s...

19.05.2025 19:35 — 👍 20 🔁 6 💬 1 📌 0

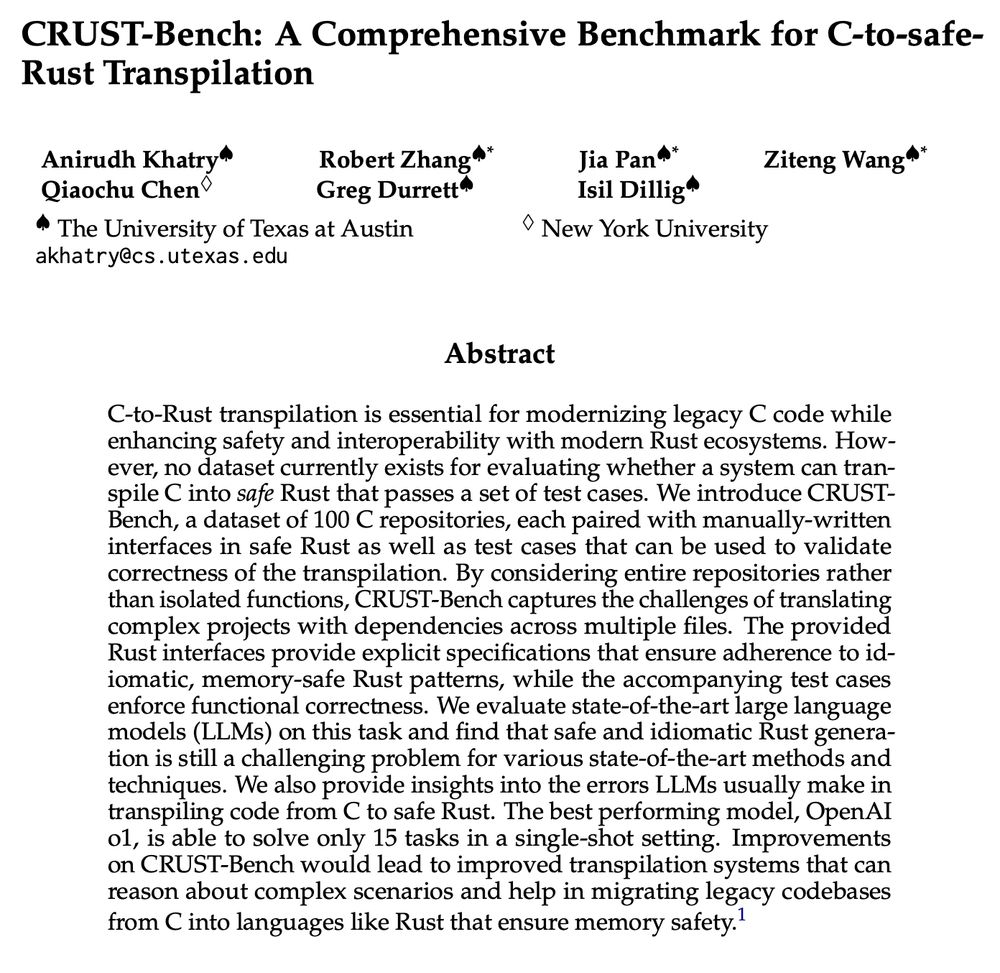

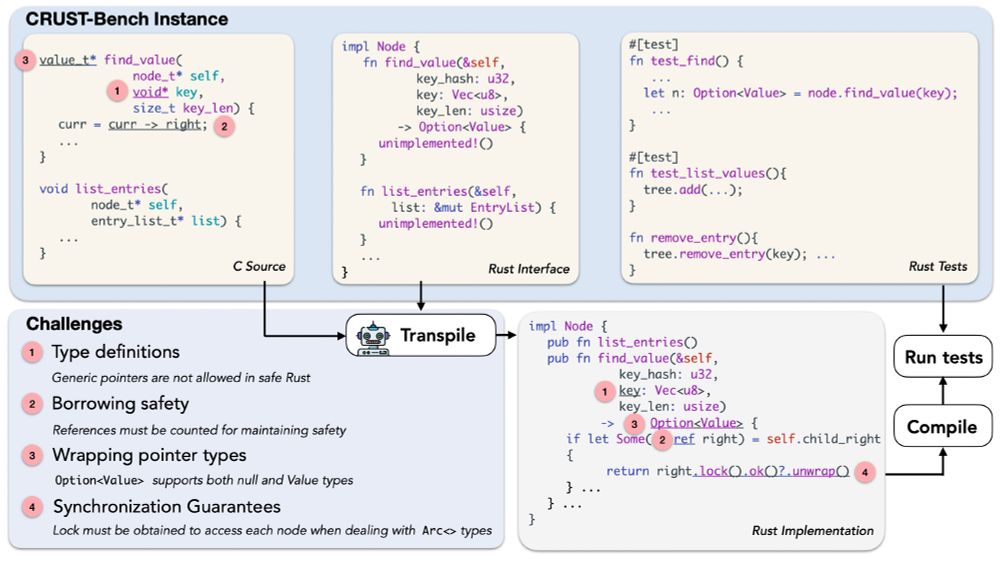

Check out Anirudh's work on a new benchmark for C-to-Rust transpilation! 100 realistic-scale C projects, plus target Rust interfaces + Rust tests that let us validate the transpiled code beyond what prior benchmarks allow.

23.04.2025 18:37 — 👍 5 🔁 1 💬 0 📌 0

🚀Meet CRUST-Bench, a dataset for C-to-Rust transpilation for full codebases 🛠️

A dataset of 100 real-world C repositories across various domains, each paired with:

🦀 Handwritten safe Rust interfaces.

🧪 Rust test cases to validate correctness.

🧵[1/6]

23.04.2025 17:00 — 👍 16 🔁 5 💬 1 📌 1

Check out Manya's work on evaluation for open-ended tasks! The criteria from EvalAgent can be plugged into LLM-as-a-judge or used for refinement. Great tool with a ton of potential, and there's LOTS to do here for making LLMs better at writing!

22.04.2025 16:30 — 👍 3 🔁 2 💬 0 📌 0

Check out Ramya et al.'s work on understanding discourse similarities in LLM-generated text! We see this as an important step in quantifying the "sameyness" of LLM text, which we think will help towards fixing it!

21.04.2025 22:10 — 👍 6 🔁 1 💬 0 📌 0

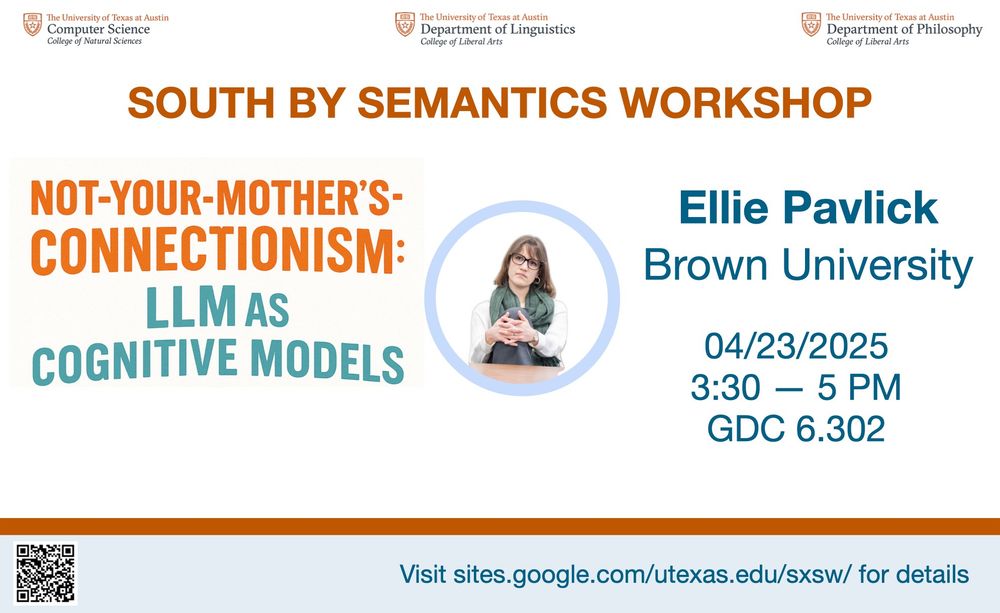

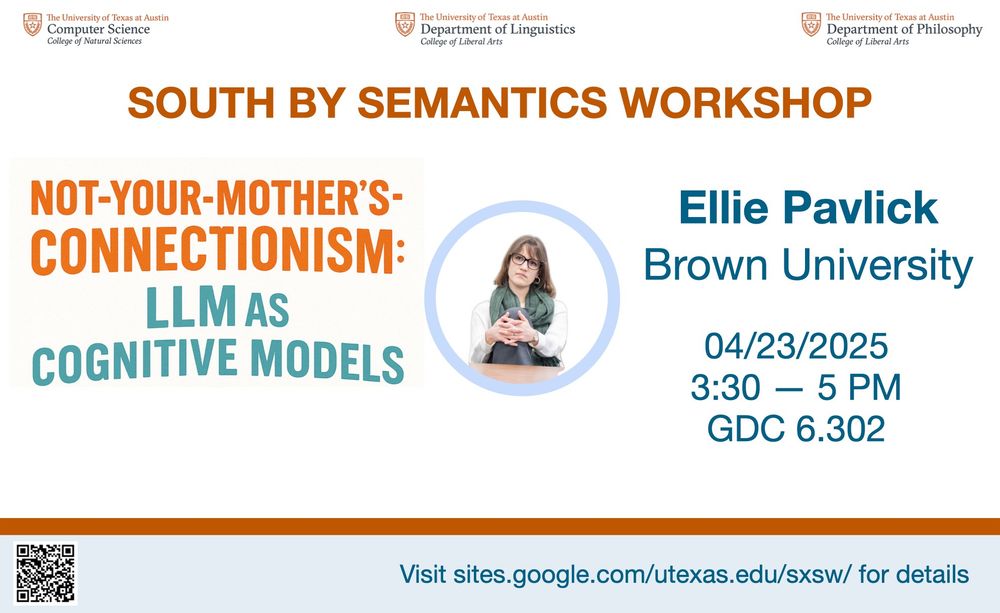

South by Semantics Workshop

Title: "Not-your-mother's connectionism: LLMs as cognitive models"

Speaker: Ellie Pavlick (Brown University)

Date and time: April 23, 2025. 3:30 - 5 PM.

Location: GDC 6.302

Our final South by Semantics lecture at UT Austin is happening on Wednesday April 23!

21.04.2025 13:39 — 👍 15 🔁 4 💬 2 📌 0

Check out @juand-r.bsky.social and @wenxuand.bsky.social 's work on reducing generator-validator gaps in LLMs! I really like the formulation of the G-V gap we present, and I was pleasantly surprised by how well the ranking-based training closed the gap. Looking forward to following up in this area!

16.04.2025 18:18 — 👍 11 🔁 2 💬 0 📌 0

If you're scooping up students off the street for writing op-eds, you're secret police, and should be treated accordingly.

26.03.2025 20:00 — 👍 9084 🔁 2316 💬 97 📌 39

I'm excited to announce two papers of ours which will be presented this summer at @naaclmeeting.bsky.social and @iclr-conf.bsky.social !

🧵

11.03.2025 22:03 — 👍 10 🔁 3 💬 1 📌 0

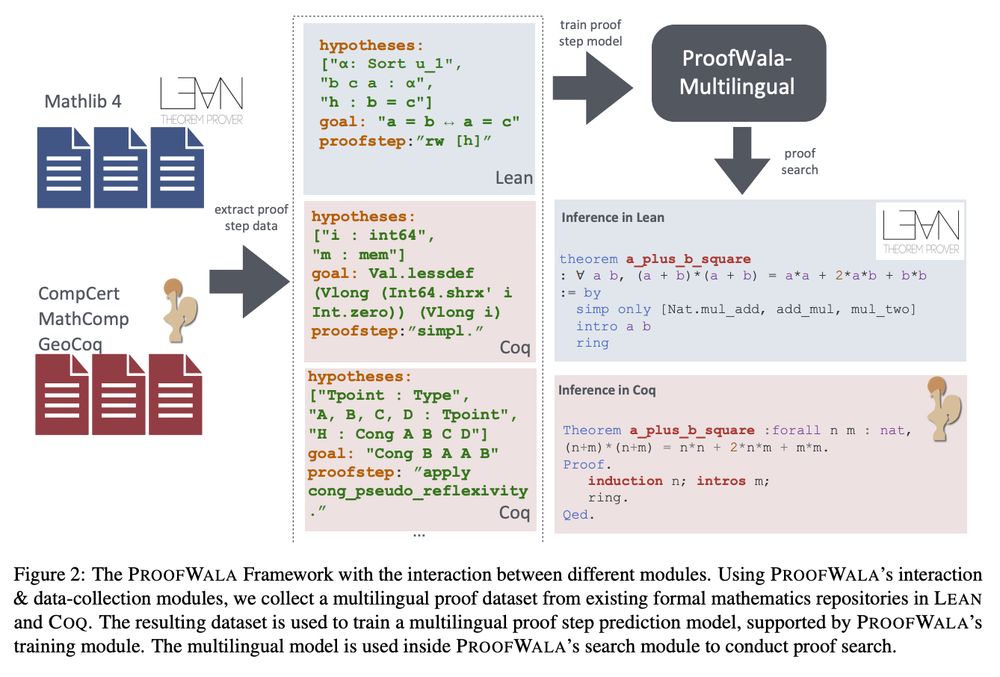

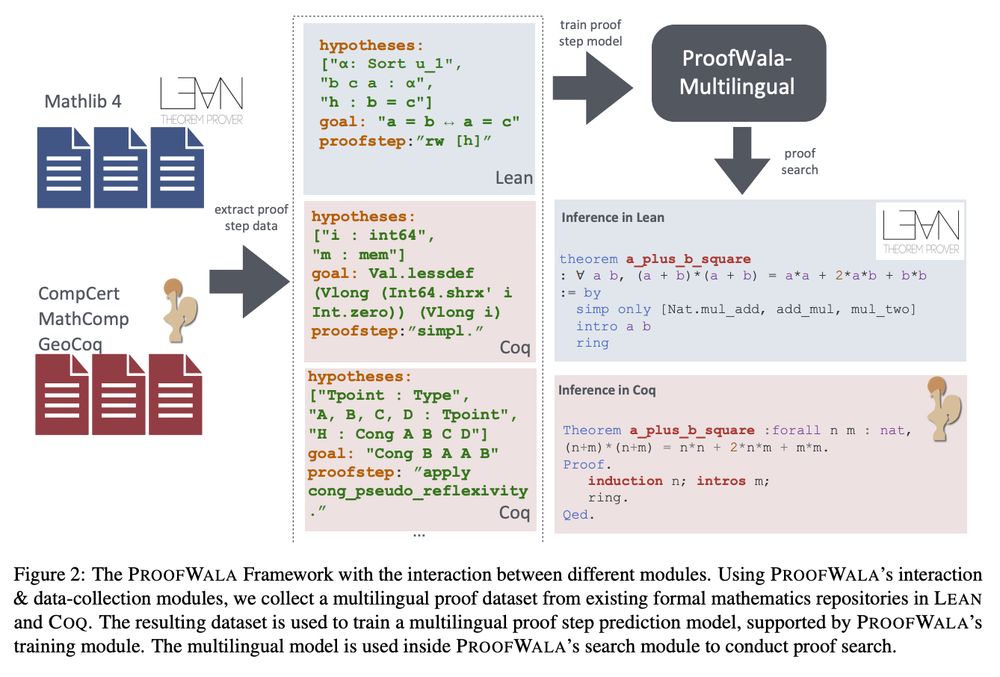

Excited about Proofwala, @amitayush.bsky.social's new framework for ML-aided theorem-proving.

* Paper: arxiv.org/abs/2502.04671

* Code: github.com/trishullab/p...

Proofwala allows the collection of proof-step data from multiple proof assistants (Coq and Lean) and multilingual training. (1/3)

22.02.2025 21:32 — 👍 21 🔁 5 💬 1 📌 1

Popular or not Dems cannot bend on the need for trans people to be treated with basic humanity and respect. If we give up that because the right made trans people unpopular, we give up everything. They’ll dice us group by group like a salami. We die on this hill or we die alone in a ditch

05.02.2025 21:19 — 👍 6842 🔁 1338 💬 140 📌 169

Here are just a few of the NSF review panels that were shut down today, Chuck.

This is research that would have made us competitive in computer science that will now be delayed by many months if not lost forever.

AI is fine but right now the top priority is keeping the lights on at NSF and NIH.

28.01.2025 03:06 — 👍 748 🔁 197 💬 10 📌 6

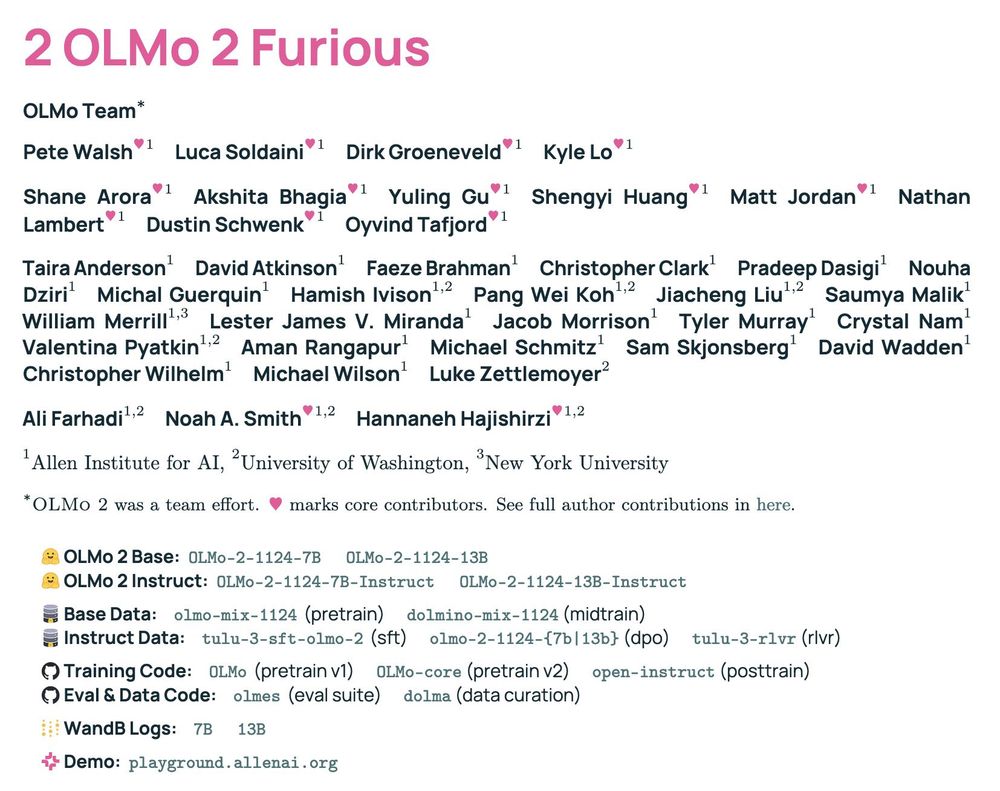

kicking off 2025 with our OLMo 2 tech report while payin homage to the sequelest of sequels 🫡

🚗 2 OLMo 2 Furious 🔥 is everythin we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵

03.01.2025 16:02 — 👍 69 🔁 17 💬 2 📌 1

Taulbee Report 2024

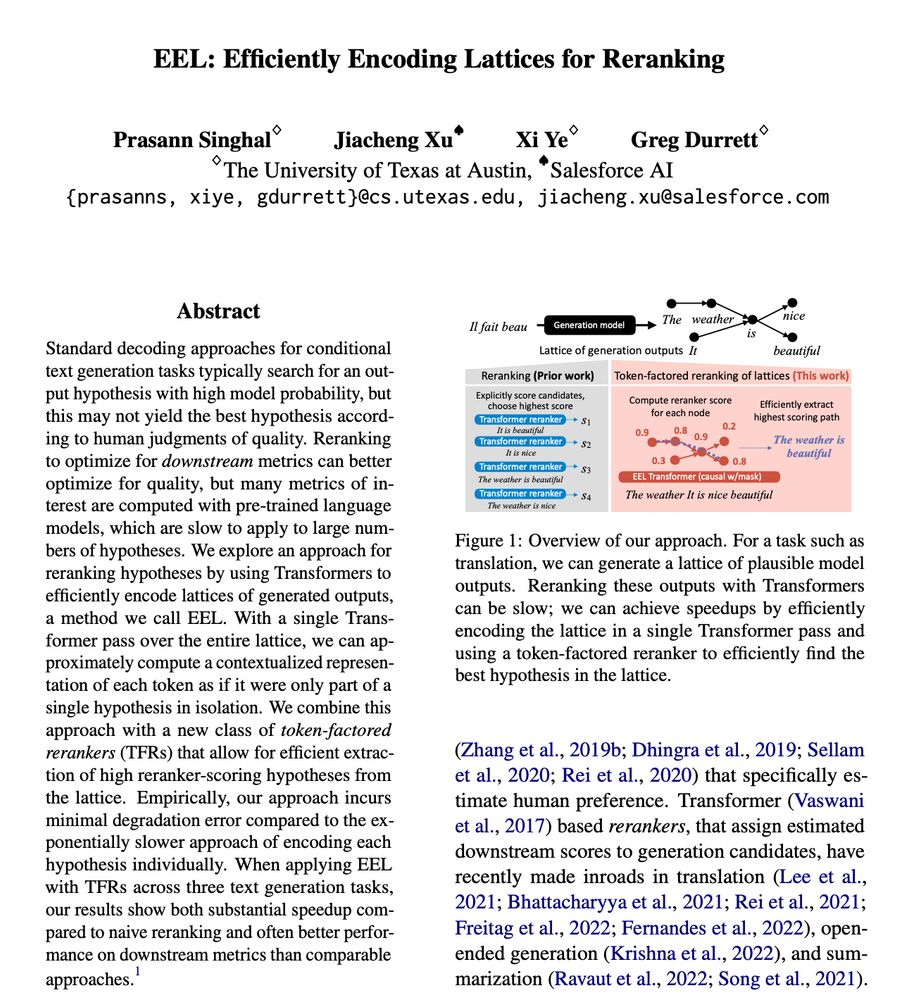

Congrats to Prasann and all the other awardees! Full list is here: cra.org/about/awards...

03.01.2025 14:39 — 👍 1 🔁 0 💬 0 📌 0

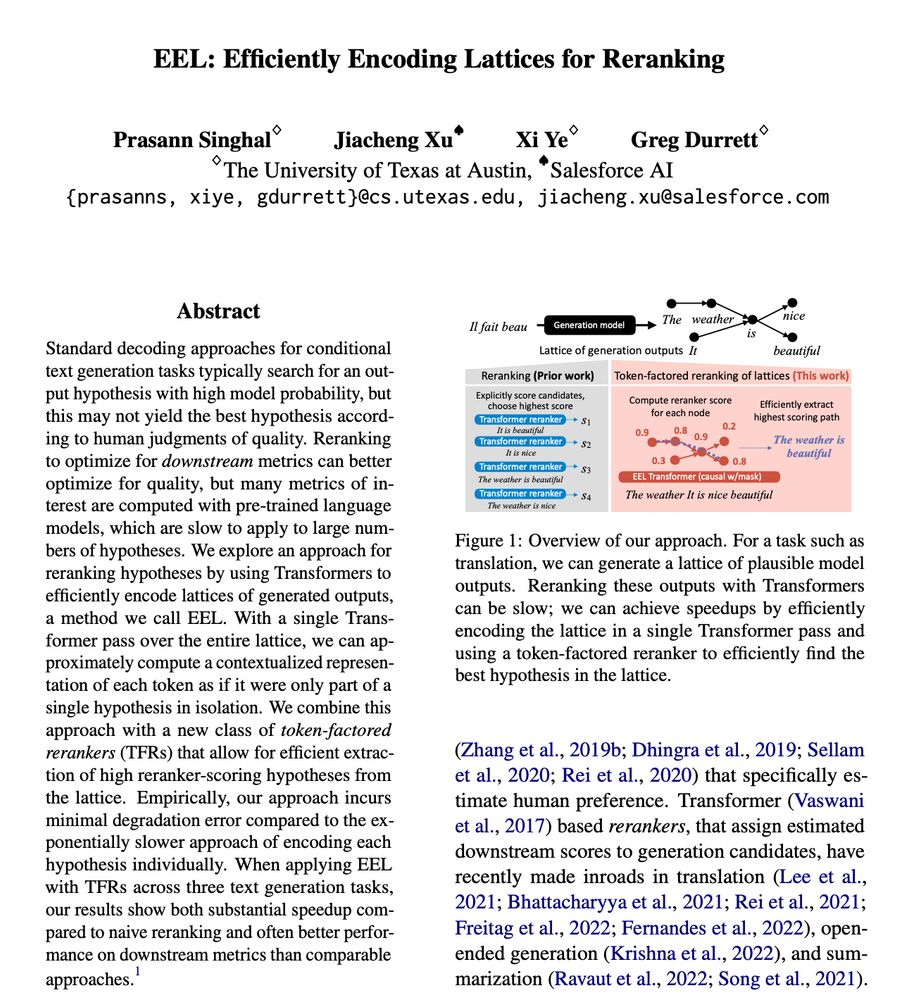

Before his post-training work, Prasann did a great project on representing LM outputs with lattices, which remains one of my favorite algorithms-oriented papers from my group in the last few years, with a lot of potential for interesting follow-up work!

03.01.2025 14:39 — 👍 3 🔁 0 💬 1 📌 0
