Last week, Dr. Nils Feldhus @nfel.bsky.social, postdoctoral researcher at @tuberlin.bsky.social and @bifold.berlin, visited our lab and presented his research during our weekly lab meeting.

21.01.2026 14:00 — 👍 10 🔁 4 💬 1 📌 1

📄 openreview.net/forum?id=btJ...

Special thanks to our co-authors @nfel.bsky.social, @kirillbykov.bsky.social, @philinelb.bsky.social, Anna Hedström, and Marina Höhne, who are not with us in person at the conference.

I’m at #NeurIPS in San Diego this week! Come see our poster on feature interpretability. Find @eberleoliver.bsky.social and me at:

🪧Poster Session 1 @ Exhibit Hall C,D,E #1015

Wed 3 Dec, 11 am - 2 pm

🪧Poster @ Mech Interp Workshop

Upper Level Room 30A-E

Sun 7 Dec, 8 am - 5 pm

🚀 Visit our #NeurIPS posters at @neuripsconf.bsky.social!

Meet and interact with our authors at all locations — San Diego, Mexico City, and Copenhagen.

Details in the thread.

👇👇👇

Heading to the EMNLP BlackboxNLP Workshop this Sunday? Don’t miss @nfel.bsky.social and @lkopf.bsky.social's poster on “Interpreting Language Models Through Concept Descriptions: A Survey”

aclanthology.org/2025.blackbo...

#EMNLP #BlackboxNLP #XAI #Interpretability

We are grateful for the opportunity to present some of our work at the All Hands Meeting of the German AI Centers, hosted by @dfki.bsky.social in Saarbrücken.

Andreas Lutz @eberleoliver.bsky.social Manuel Welte @lorenzlinhardt.bsky.social @lkopf.bsky.social

#AI #XAI #Interpretability

Nov 9, @blackboxnlp.bsky.social, 11:00-12:00 @ Hall C – Interpreting Language Models Through Concept Descriptions: A Survey (Feldhus & Kopf) @lkopf.bsky.social

🗞️ aclanthology.org/2025.blackbo...

bsky.app/profile/nfel...

This is the eXplainable AI research channel of the machine learning group of Prof. Klaus-Robert Müller at Technische Universität Berlin @tuberlin.bsky.social & BIFOLD @bifold.berlin.

Let's connect!

#XAI #ExplainableAI #MechInterp #MachineLearning #Interpretability

Overview of descriptions for model components (neurons, attention heads) and model abstractions (SAE features, circuits).

🔍 Are you curious about uncovering the underlying mechanisms and identifying the roles of model components (neurons, …) and abstractions (SAEs, …)?

We provide the first survey of concept description generation and evaluation methods.

Joint effort w/ @lkopf.bsky.social

📄 arxiv.org/abs/2510.01048

Many thanks as well to the institutions that supported this research:

@tuberlin.bsky.social

@bifold.berlin

UMI Lab

@fraunhoferhhi.bsky.social

@unipotsdam.bsky.social

@leibnizatb.bsky.social

I’m very grateful to my amazing collaborators @nfel.bsky.social, @kirillbykov.bsky.social, @philinelb.bsky.social, Anna Hedström, Marina M.-C. Höhne, and @eberleoliver.bsky.social 🙏

19.09.2025 12:01 — 👍 4 🔁 0 💬 1 📌 0

Happy to share that our PRISM paper has been accepted at #NeurIPS2025 🎉

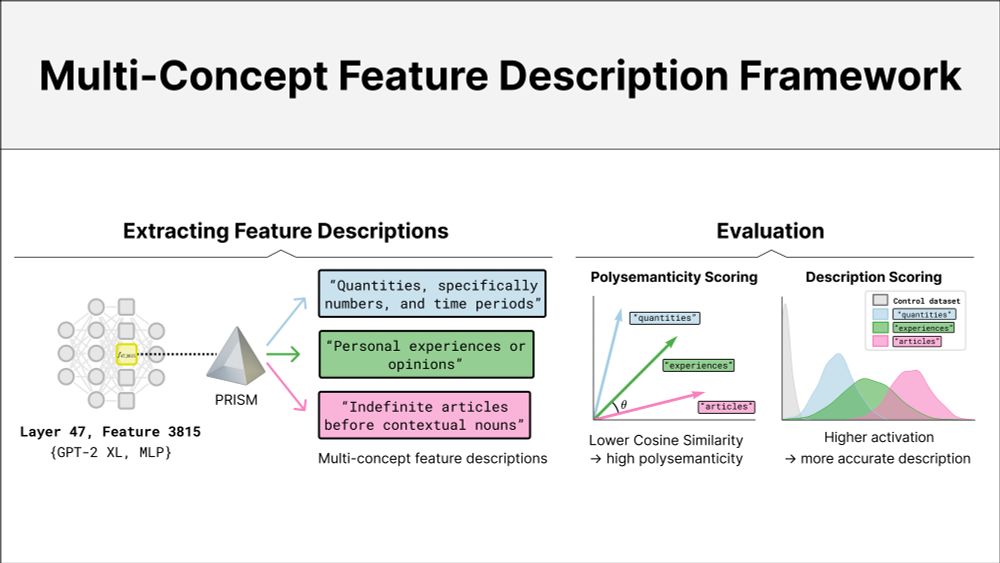

In this work, we introduce a multi-concept feature description framework that can identify and score polysemantic features.

📄 Paper: arxiv.org/abs/2506.15538

#NeurIPS #MechInterp #XAI

Grateful to the institutions that supported this work:

@tuberlin.bsky.social

@bifold.berlin

UMI Lab

@fraunhoferhhi.bsky.social

@unipotsdam.bsky.social

@leibnizatb.bsky.social

(7/7)

Many thanks to my amazing co-authors:

@nfel.bsky.social

@kirillbykov.bsky.social

@philinelb.bsky.social

Anna Hedström

Marina M.-C. Höhne

@eberleoliver.bsky.social

(6/7)

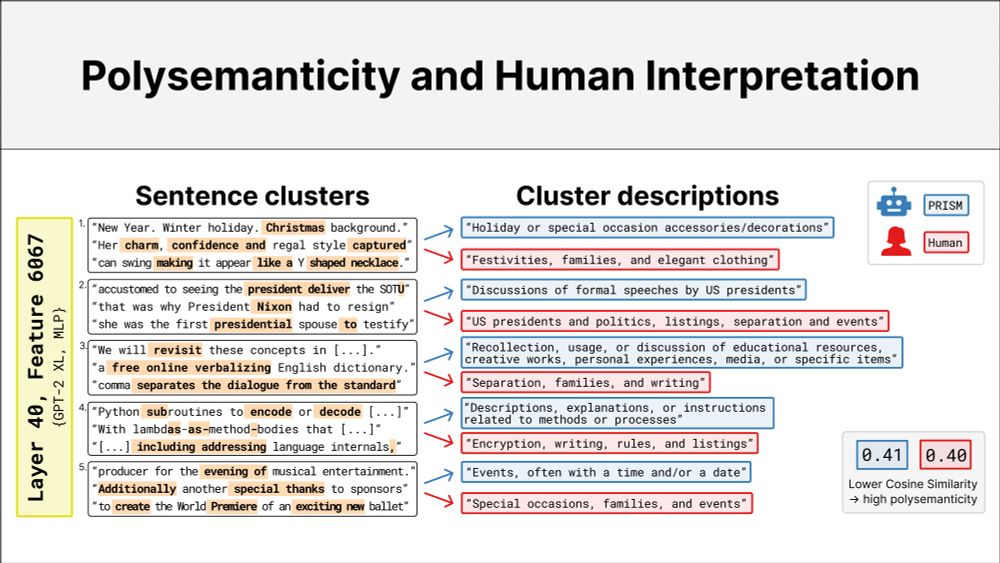

Our results highlight that the PRISM framework not only provides multiple human-interpretable descriptions for neurons but also aligns with the human interpretation of polysemanticity. (5/7)

19.06.2025 15:18 — 👍 2 🔁 0 💬 1 📌 0

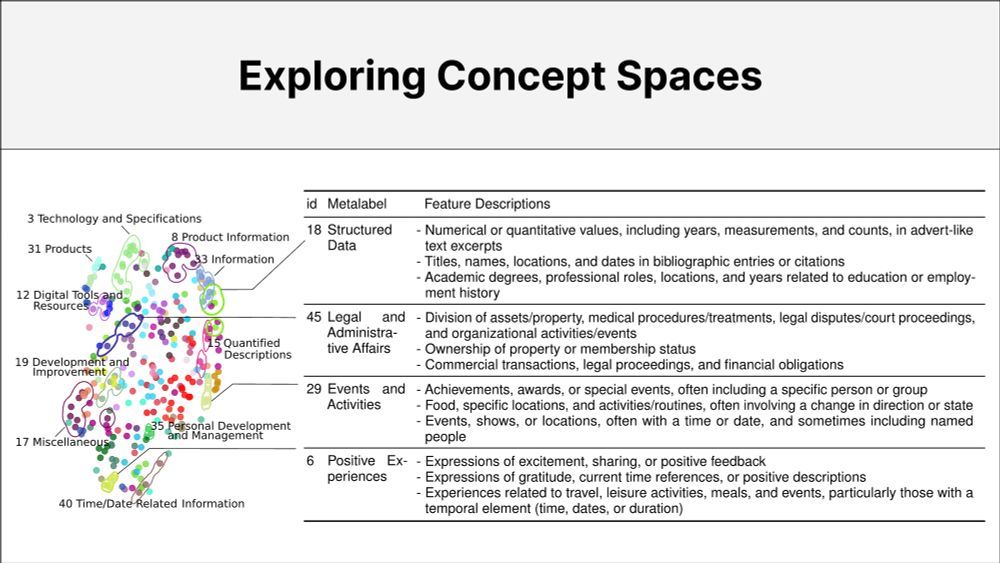

In exploring the concept space, we use PRISM to characterize more complex components, finding and interpreting patterns that specific attention heads or groups of neurons respond to. (4/7)

19.06.2025 15:18 — 👍 2 🔁 0 💬 1 📌 0

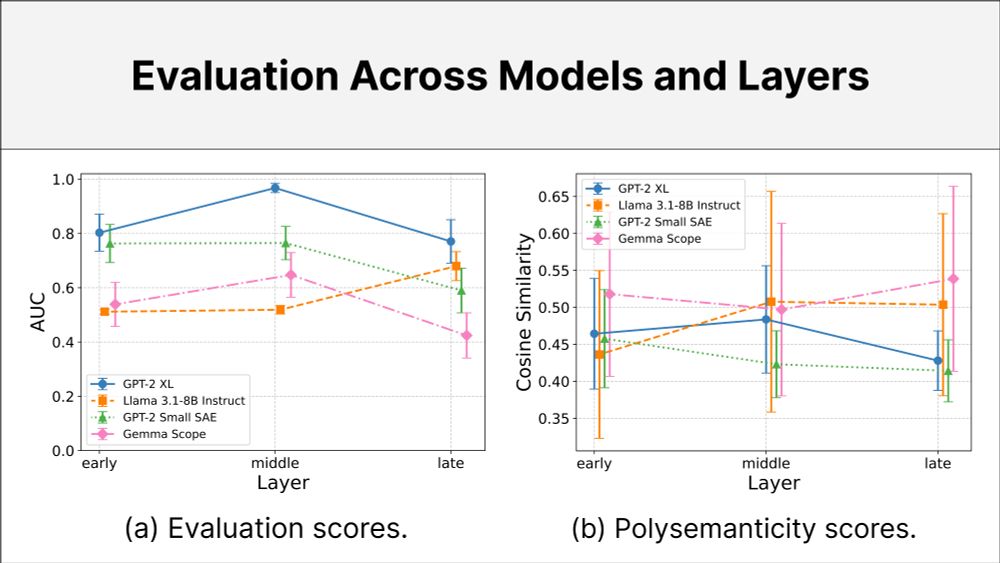

We benchmark PRISM across layers and architectures, showing how polysemanticity and interpretability shift through the model. (3/7)

19.06.2025 15:18 — 👍 2 🔁 0 💬 1 📌 0

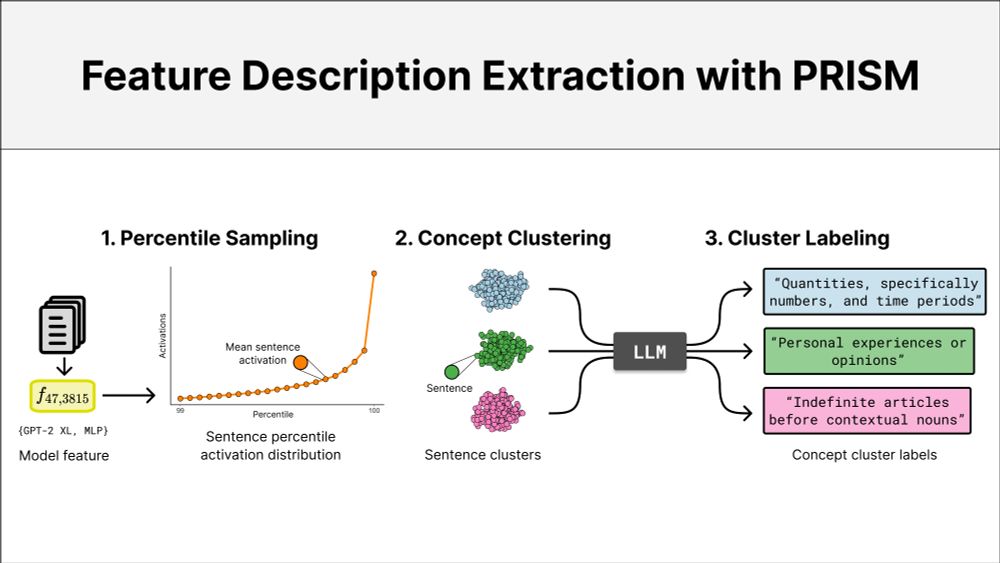

PRISM samples sentences from the top percentile of the activation distribution, clusters them in embedding space, and uses an LLM to generate labels for each concept cluster. (2/7)

19.06.2025 15:18 — 👍 2 🔁 0 💬 1 📌 0
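The three steps named in the post above (top-percentile sampling, clustering in embedding space, labeling each cluster) can be sketched roughly as follows. This is an illustrative toy under stated assumptions, not the authors' implementation: the function names, the plain k-means, the default percentile, and the `label_fn` stand-in for the LLM call are all hypothetical choices made here.

```python
import numpy as np

def top_percentile_indices(activations, percentile=99.0):
    """Indices of samples whose activation is at or above the given percentile."""
    activations = np.asarray(activations, dtype=float)
    threshold = np.percentile(activations, percentile)
    return np.flatnonzero(activations >= threshold)

def kmeans(points, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means (an assumed choice of clustering method)."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialise centers from k distinct data points.
    centers = points[rng.choice(len(points), size=k, replace=False)].copy()
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)
    # Final assignment against the last set of centers.
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)

def describe_feature(sentences, activations, embeddings, k=2,
                     percentile=99.0, label_fn=None):
    """Return one (label, sentences) pair per concept cluster.

    label_fn is a hypothetical placeholder for the LLM that writes a
    description of each cluster; by default it returns a dummy string.
    """
    idx = top_percentile_indices(activations, percentile)
    cluster_ids = kmeans(np.asarray(embeddings, dtype=float)[idx], k)
    groups = {}
    for i, c in zip(idx, cluster_ids):
        groups.setdefault(int(c), []).append(sentences[i])
    if label_fn is None:
        label_fn = lambda group: f"<description of {len(group)} sentences>"
    return [(label_fn(group), group) for group in groups.values()]
```

A feature whose highly activating sentences fall into several well-separated clusters would receive several descriptions, which is the multi-concept behaviour the thread refers to.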

🔍 When do neurons encode multiple concepts?

We introduce PRISM, a framework for extracting multi-concept feature descriptions to better understand polysemanticity.

📄 Capturing Polysemanticity with PRISM: A Multi-Concept Feature Description Framework

arxiv.org/abs/2506.15538

🧵 (1/7)

Huge thanks to my incredible supervisor @kirillbykov.bsky.social, who laid the foundation for this project and provided brilliant guidance 🙏, and to @philinelb.bsky.social and Sebastian Lapuschkin, who unfortunately couldn’t be there.

Still overwhelmed by the amazing response to our poster session at @neuripsconf.bsky.social with Anna Hedström and Marina Höhne! It was incredible to have such lively and inspiring discussions with brilliant people whose work I admire. ✨

13.12.2024 02:48 — 👍 11 🔁 2 💬 1 📌 0

Thanks for putting together this amazing list Margaret! I would love to be added if you still have space :)

12.12.2024 08:24 — 👍 2 🔁 0 💬 1 📌 0

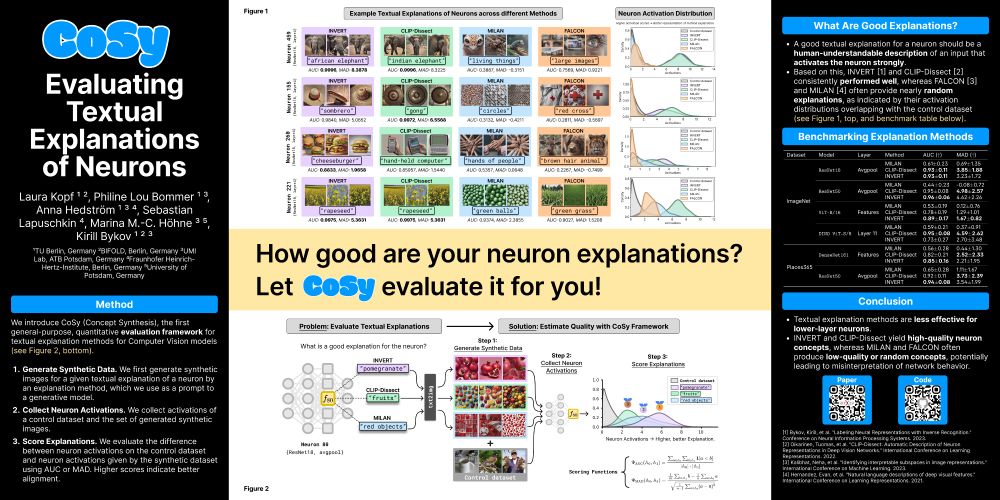

Want to know more about CoSy?

📄 Paper: arxiv.org/abs/2405.20331

💻 Code: github.com/lkopf/cosy

🔗 Poster: neurips.cc/virtual/2024...

#NeurIPS2024 #MechInterp #ExplainableAI #Interpretability

Special thanks to our supporting institutions: UMI Lab, @xtraexer.bsky.social, @tuberline.bsky.social, Uni Potsdam, ATB Potsdam, and Fraunhofer Heinrich-Hertz-Institut.

11.12.2024 06:43 — 👍 0 🔁 0 💬 1 📌 0

My co-authors Anna Hedström and Marina Höhne will also be at @neuripsconf.bsky.social. A big thank you to my other co-authors @kirillbykov.bsky.social, @philinelb.bsky.social and Sebastian Lapuschkin, who unfortunately couldn’t be there.

11.12.2024 06:43 — 👍 0 🔁 0 💬 1 📌 0

I’ll be presenting our work at @neuripsconf.bsky.social in Vancouver! 🎉

Join me this Thursday, December 12th, in East Exhibit Hall A-C, Poster #3107, from 11 a.m. PST to 2 p.m. PST. I'll be discussing our paper “CoSy: Evaluating Textual Explanations of Neurons.”