GSoC really is a win-win: students gain OSS experience with dedicated mentorship, while open source projects get substantial improvements. Thanks to Massimiliano and Abel, to Manuel for mentoring and @janboelts.bsky.social for mentoring and coordination, and of course GSoC and @numfocus.bsky.social 🙏

17.10.2025 13:30 — 👍 5 🔁 0 💬 0 📌 0

Abel @abelabate.bsky.social improved our codebase with software engineering best practices: strong typing via dataclasses, clearly defined interfaces using protocols, and systematic refactoring. Multiple PRs transformed our internal architecture for better maintainability and developer experience.

17.10.2025 13:30 — 👍 2 🔁 0 💬 1 📌 0

This was a massive undertaking: masked transformers, score-based diffusion variants, comprehensive tests & tutorials - all integrated into sbi's existing API. Kudos to Massimiliano for navigating this complexity!

17.10.2025 13:30 — 👍 2 🔁 0 💬 1 📌 0

@nmaax.bsky.social implemented the Simformer. It combines transformers and diffusion models to learn arbitrary conditioning between parameters and data, enabling "all-in-one" SBI: posterior/likelihood estimation, predictive sampling, and even missing data imputation 🚀

17.10.2025 13:30 — 👍 3 🔁 0 💬 1 📌 0

🎉 sbi participated in GSoC 2025 through @numfocus.bsky.social and it was a great success. Our two students contributed major new features and substantial internal improvements 🧵 👇

17.10.2025 13:30 — 👍 11 🔁 4 💬 1 📌 0

Materials from my EuroSciPy talk "Pyro meets SBI" are now available: github.com/janfb/pyro-meets-sbi

I show how to use neural likelihoods trained with @sbi-devs.bsky.social in Pyro 🔥

Check it out if you need hierarchical Bayesian inference but your simulator / model has no tractable likelihood.

18.09.2025 14:38 — 👍 8 🔁 1 💬 3 📌 0

Two more highlights: Your sbi-trained NLE can now be wrapped into a Pyro model object for flexible hierarchical inference. And based on your feedback, we added to(device) for priors and posteriors—switching between CPU and GPU is now even easier!

6/7

09.09.2025 15:00 — 👍 1 🔁 0 💬 1 📌 0

Here's where it gets wild: we unified flow matching (ODEs) and score-based models (SDEs). Train with one, sample with the other. E.g., train with the flexibility and stability of flow-matching, then handle iid data with score-based posterior sampling. 🤯

5/7

09.09.2025 15:00 — 👍 3 🔁 0 💬 1 📌 0

We completely rebuilt our documentation! Switched to Sphinx for a cleaner, more modular structure. No more wading through lengthy tutorials—now you get short, targeted how-to guides for exactly what you need, plus streamlined tutorials for getting started.

📚 sbi.readthedocs.io/en/latest/

4/7

09.09.2025 15:00 — 👍 1 🔁 0 💬 1 📌 0

New inference methods: MNPE now handles mixed discrete and continuous parameters for posterior estimation (like MNLE but for posteriors).

And for our nostalgic users: we finally added SNPE-B, that classic sequential variant you've been asking about since 2020.

3/7

09.09.2025 15:00 — 👍 2 🔁 0 💬 1 📌 0

After the creative burst of the hackathon in March, we spent months cleaning up, testing, and polishing. Rebasing ten exciting feature branches onto main takes time, but the result is worth it.

2/7

09.09.2025 15:00 — 👍 1 🔁 0 💬 1 📌 0

From hackathon to release: sbi v0.25 is here! 🎉

What happens when dozens of SBI researchers and practitioners collaborate for a week? New inference methods, new documentation, lots of new embedding networks, a bridge to Pyro, and a bridge between flow matching and score-based methods 🤯

1/7 🧵

09.09.2025 15:00 — 👍 29 🔁 16 💬 1 📌 1

More great news from the SBI community! 🎉

Two projects have been accepted for Google Summer of Code under the NumFOCUS umbrella, bringing new methods and general improvements to sbi. Big thanks to @numfocus.bsky.social, GSoC and our future contributors!

20.05.2025 10:50 — 👍 10 🔁 2 💬 0 📌 0

A wide shot of approximately 30 individuals standing in a line, posing for a group photograph outdoors. The background shows a clear blue sky, trees, and a distant cityscape or hills.

Great news! Our March SBI hackathon in Tübingen was a huge success, with 40+ participants (30 onsite!). Expect significant updates soon: awesome new features and revamped documentation you'll love! Huge thanks to our amazing SBI community! Release details coming soon. 🥁 🎉

12.05.2025 14:29 — 👍 26 🔁 7 💬 0 📌 1

🎉 Exciting news! We are launching an sbi office hour!

Join the sbi developers on Thursdays, 09:45-10:15 CET, via Zoom (link: sbi Discord's "office hours" channel).

Get guidance on contributing, explore sbi for your research, or troubleshoot issues. Come chat with us! 🤗

github.com/sbi-dev/sbi/...

04.04.2025 07:36 — 👍 13 🔁 4 💬 0 📌 1

sbi 0.24.0 is out! 🎉 This comes with important new features:

- 🎯 Score-based i.i.d. sampling

- 🔀 Simultaneous estimation of multiple discrete and continuous parameters or data.

- 📊 mini-sbibm for quick benchmarking.

Just in time for our 1-week SBI hackathon starting tomorrow. Stay tuned for more!

16.03.2025 12:49 — 👍 24 🔁 3 💬 1 📌 1

🙏 Please help us improve the SBI toolbox! 🙏

In preparation for the upcoming SBI Hackathon, we’re running a user study to learn what you like, what we can improve, and how we can grow.

👉 Please share your thoughts here: forms.gle/foHK7myV2oaK...

Your input will make a big difference—thank you! 🙌

28.01.2025 15:18 — 👍 18 🔁 8 💬 0 📌 1

What to expect:

- Coding sessions to enhance the sbi toolbox

- Research talks & lightning talks

- Networking & idea exchange

🌍 In-person attendance is encouraged but a remote option is available.

It's free to attend, but seats are limited. Beginners are welcome! 🤗

Let’s push SBI forward—together! 🚀

14.01.2025 15:58 — 👍 2 🔁 0 💬 0 📌 0

🚀 Join the 4th SBI Hackathon! 🚀

The last SBI hackathon was a fantastic milestone in forming a collaborative open-source community around SBI. Be part of it this year as we build on that momentum!

📅 March 17–21, 2025

📍 Tübingen, Germany or remote

👉 Details: github.com/sbi-dev/sbi/...

More info: 🧵👇

14.01.2025 15:58 — 👍 20 🔁 5 💬 1 📌 2

🙌 Huge thanks to our contributors for this release, including 5 first-time contributors! 🌟

Special shoutout to:

emmanuel-ferdman, CompiledAtBirth, tvwenger, matthewfeickert, and manuel-morales-a 🎉

Let us know what you think of the new version!

30.12.2024 14:57 — 👍 2 🔁 0 💬 0 📌 0

✨ Highlights in v0.23.3:

- sbi is now available via conda-forge 🛠️

- we now support MCMC sampling with multiple i.i.d. conditions 🎯 (this is for you, decision-making researchers)

💡 Plus, improved docs here and there, clarified SNPE-A behavior, and a couple of bug fixes.

30.12.2024 14:57 — 👍 1 🔁 0 💬 1 📌 0

🎉 Just in time for the end of the year, we’ve released a new version of sbi!

📦 v0.23.3 comes packed with exciting features, bug fixes, and docs updates to make sbi smoother and more robust. Check it out! 👇

🔗 Full changelog: github.com/sbi-dev/sbi/...

30.12.2024 14:57 — 👍 27 🔁 6 💬 1 📌 2

SBI Discord Server 🤖 · sbi-dev sbi · Discussion #1318

We are launching an SBI Discord Server! 🎉

We want to use this server to further build a community around SBI, i.e., for sharing insights, questions and events around simulation-based inference in general and the sbi package in particular.

You are all invited to join! 🤗 github.com/sbi-dev/sbi/...

03.12.2024 08:54 — 👍 15 🔁 6 💬 0 📌 2

As of today, 61 scientists and engineers have contributed to the sbi toolbox. We are extremely happy about this, and want to give a huge **thank you** to all contributors! Stay tuned for more info on events and updates! github.com/sbi-dev/sbi

27.11.2024 11:17 — 👍 1 🔁 0 💬 0 📌 0

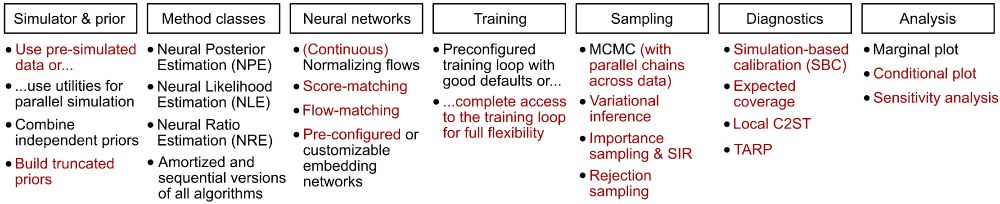

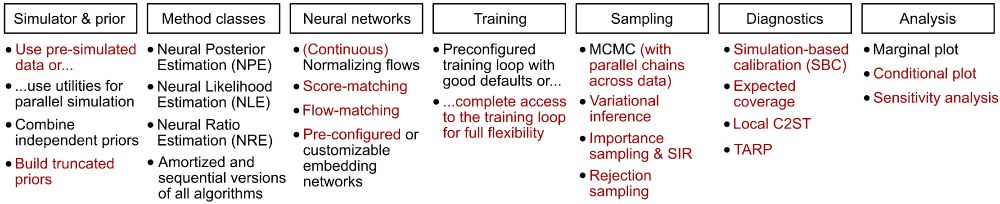

For all steps of the inference process, the sbi toolbox supports strong defaults if needed, but also provides full flexibility if desired. For example, you can use a pre-configured training loop, or you can write it yourself.

27.11.2024 11:17 — 👍 1 🔁 0 💬 1 📌 0

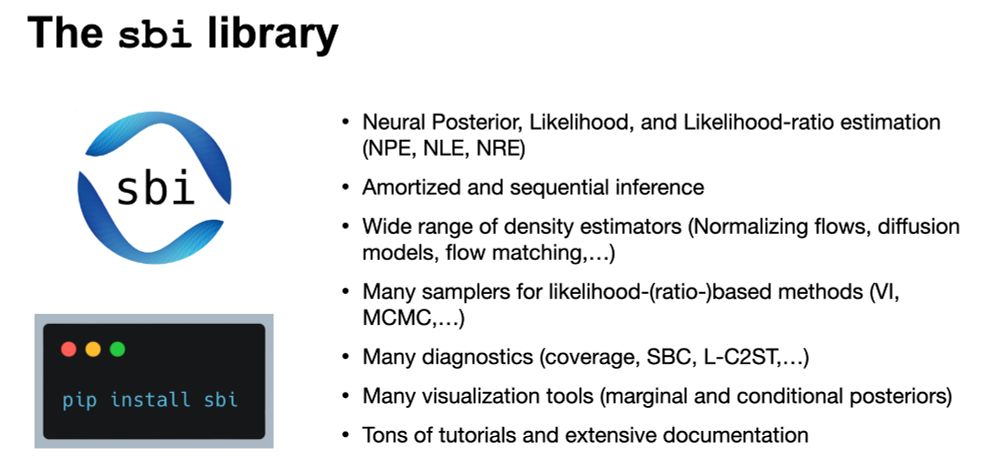

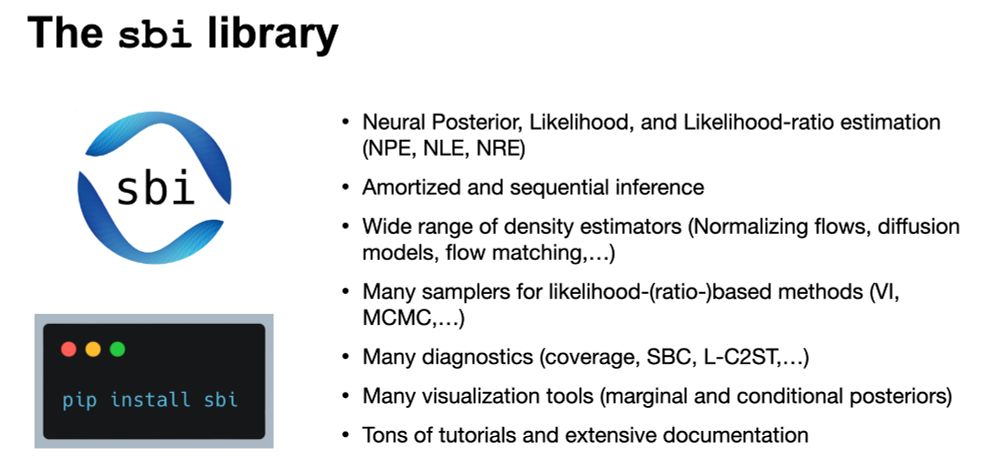

The sbi toolbox implements a wide range of simulation-based inference methods. It implements NPE, NLE, and NRE (all amortized or sequential), modern neural networks (flows, flow-matching, diffusion models), samplers, and diagnostic tools.

27.11.2024 11:17 — 👍 3 🔁 0 💬 2 📌 0

sbi reloaded: a toolkit for simulation-based inference workflows

Scientists and engineers use simulators to model empirically observed phenomena. However, tuning the parameters of a simulator to ensure its outputs match observed data presents a significant challeng...

The sbi package is growing into a community project 🌍 To reflect this and the many algorithms, neural nets, and diagnostics that have been added since its initial release, we have written a new software paper 📝 Check it out, and reach out if you want to get involved: arxiv.org/abs/2411.17337

27.11.2024 11:17 — 👍 60 🔁 22 💬 1 📌 4

Hello, world! We are a community-developed toolkit that performs Bayesian inference for simulators. We support a broad range of methods (NPE, NLE, NRE, amortized and sequential), neural network architectures (flows, diffusion models), samplers, and diagnostics. Join us!

18.11.2024 07:26 — 👍 26 🔁 8 💬 1 📌 0
