Cool! I love your blenderproc code!

10.12.2024 18:50 — 👍 1 🔁 0 💬 0 📌 0

🚀 How well does it work?

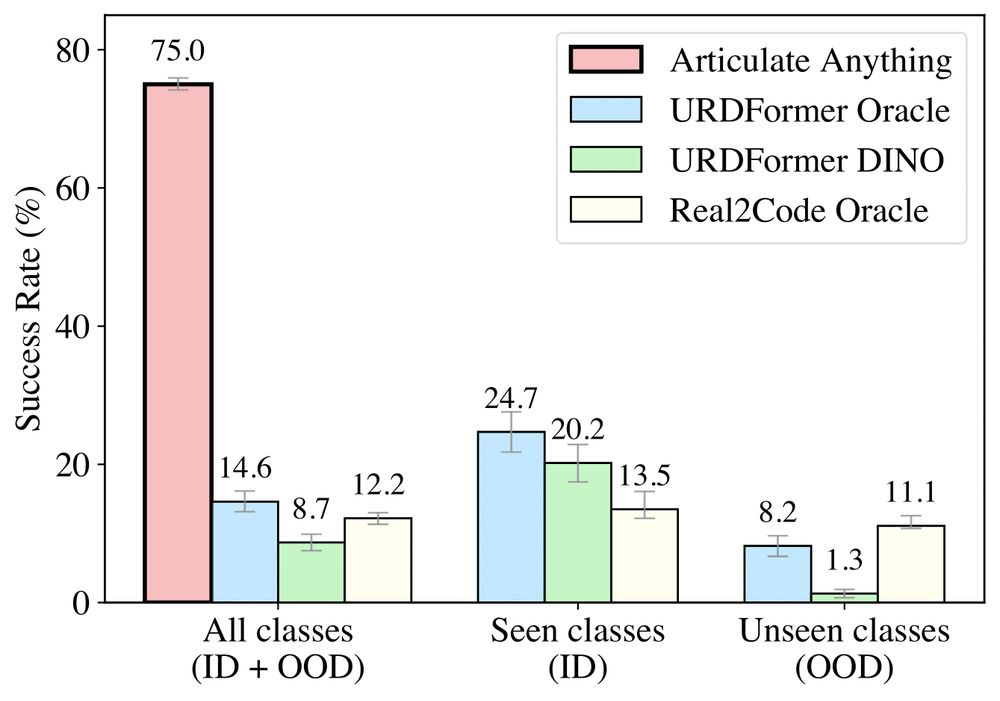

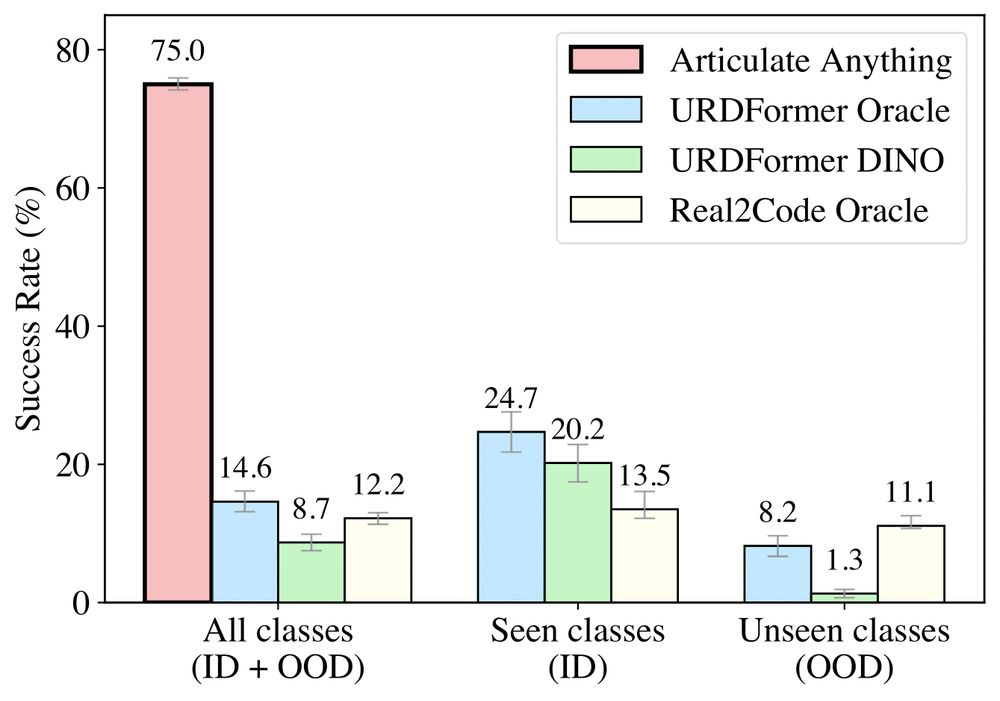

Articulate-Anything outperforms the baselines both quantitatively and qualitatively. This is possible because it (1) leverages richer input modalities, (2) models articulation as high-level program synthesis, and (3) uses a closed-loop actor-critic system.

10.12.2024 16:44 — 👍 0 🔁 0 💬 0 📌 0

🧩 How does it work?

Articulate-Anything breaks the problem into three steps: (1) Mesh retrieval, (2) Link placement, which spatially arranges the parts together, and (3) Joint prediction, which determines the kinematic movement between parts. Take a look at a video explaining this pipeline!
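
To make the three steps concrete, here is a minimal sketch of how their outputs could be assembled into a URDF file. It is not the Articulate-Anything implementation: every function, class, file name, and number below (`retrieve_meshes`, `place_links`, `predict_joints`, `to_urdf`, the `.obj` paths, the joint limits) is a hypothetical placeholder, and each step is a hard-coded stub where the real system would do the actual prediction. It also assumes the first part is a base at the origin and that every other part hinges on it.

```python
from dataclasses import dataclass
import xml.etree.ElementTree as ET


@dataclass
class Link:
    name: str
    mesh_path: str                  # hypothetical mesh file name
    xyz: tuple = (0.0, 0.0, 0.0)    # position relative to the base, set in step 2


@dataclass
class Joint:
    name: str
    parent: str
    child: str
    joint_type: str                 # a URDF joint type such as "revolute"
    origin: tuple                   # joint frame position in the parent frame
    axis: tuple
    lower: float
    upper: float


def retrieve_meshes(part_names):
    """Step 1 (stub): map each part name to a mesh file."""
    return [Link(name=p, mesh_path=f"{p}.obj") for p in part_names]


def place_links(links, offsets):
    """Step 2 (stub): assign each part a position relative to the base."""
    for link in links:
        link.xyz = offsets.get(link.name, (0.0, 0.0, 0.0))
    return links


def predict_joints(links):
    """Step 3 (stub): hinge every non-base part to the first (base) link."""
    base = links[0]
    return [
        Joint(f"{base.name}_to_{child.name}", base.name, child.name,
              "revolute", child.xyz, (0, 0, 1), 0.0, 1.57)
        for child in links[1:]
    ]


def fmt(values):
    """Format a tuple as a space-separated URDF attribute value."""
    return " ".join(str(v) for v in values)


def to_urdf(robot_name, links, joints):
    """Serialize links and joints into a URDF XML string."""
    robot = ET.Element("robot", name=robot_name)
    for link in links:
        link_el = ET.SubElement(robot, "link", name=link.name)
        visual = ET.SubElement(link_el, "visual")
        geometry = ET.SubElement(visual, "geometry")
        ET.SubElement(geometry, "mesh", filename=link.mesh_path)
    for joint in joints:
        joint_el = ET.SubElement(robot, "joint", name=joint.name,
                                 type=joint.joint_type)
        ET.SubElement(joint_el, "origin", xyz=fmt(joint.origin), rpy="0 0 0")
        ET.SubElement(joint_el, "parent", link=joint.parent)
        ET.SubElement(joint_el, "child", link=joint.child)
        ET.SubElement(joint_el, "axis", xyz=fmt(joint.axis))
        # URDF requires effort and velocity limits on revolute joints;
        # the values here are arbitrary placeholders.
        ET.SubElement(joint_el, "limit", lower=str(joint.lower),
                      upper=str(joint.upper), effort="1.0", velocity="1.0")
    return ET.tostring(robot, encoding="unicode")


# Usage: a two-part cabinet whose door swings about the vertical axis.
links = place_links(retrieve_meshes(["cabinet_body", "door"]),
                    {"door": (0.3, 0.0, 0.0)})
joints = predict_joints(links)
urdf = to_urdf("cabinet", links, joints)
print(urdf)
```

The sketch stores the placement from step 2 in each joint's `origin`, because in URDF the position of a child link is expressed through the joint that connects it to its parent.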

10.12.2024 16:44 — 👍 0 🔁 0 💬 1 📌 0

🙀 Why does this matter?

Creating interactable 3D models of the world is hard. An artist has to model the physical appearance of the object to create a mesh. Then a roboticist needs to manually annotate the kinematic joints in URDF to give the object movement.

But what if we could automate all these steps?

10.12.2024 16:44 — 👍 1 🔁 0 💬 1 📌 0

📦 Can frontier AI transform ANY physical object from ANY input modality into a high-quality digital twin that also MOVES?

Excited to share our work, Articulate-Anything 🐵, exploring how VLMs can bridge the gap between the physical and digital worlds.

Website: articulate-anything.github.io

10.12.2024 16:44 — 👍 4 🔁 1 💬 1 📌 0

Hello, can I be added please 🙏? I work on robot/reinforcement learning and we have a cool sim2real RL paper in submission!

14.11.2024 15:55 — 👍 0 🔁 0 💬 0 📌 0

Hello! Can I be added please 🙏? I work on 3D vision for robot learning.

14.11.2024 15:12 — 👍 1 🔁 0 💬 1 📌 0

Principal research scientist at Google DeepMind. Synthesized views are my own.

📍SF Bay Area 🔗 http://jonbarron.info

This feed is a mostly-incomplete mirror of https://x.com/jon_barron, I recommend you just follow me there.

PhD student at TU Munich

Researcher with the German Aerospace Center (DLR)

Visualization Aficionado

Blog: hummat.com

research @ Google DeepMind

Ph.D. in Neuroimaging | AI/Computer Vision Researcher | Making training and inference more efficient | 🇲🇫 CTO & Startup Founder | Linux Aficionado

PhD @ MICAS, KU Leuven 🇧🇪 | Neuromorphic hardware for edge AI | Elec. Eng. grad (ULiège), neuromorphic focus.

#RobotLearning Professor (#MachineLearning #Robotics) at @ias-tudarmstadt.bsky.social of @tuda.bsky.social @dfki.bsky.social @hessianai.bsky.social

Ex-SWE @ Google Life Science // Radiology Resident @ UPenn // researching self supervision for radiology AI

Principal Research Scientist in Computer Vision at Naver Labs Europe

https://philippeweinzaepfel.github.io/

PhD candidate @ The Ohio State University

autonomous systems | edge computing | robotics | animal ecology

I build autonomous aerial systems for ecology missions. 🚁🦓

https://jennamk14.github.io/

Robotics PhD Student ◆ Animal Lover ◆ Curious Person

Research on predictive dynamics of hexapod robots in granular media is underway

Exploring frontiers of AI and its applications to science/engineering

Formerly: PhD/postdoc in Berkeley AI Research Lab + UC Berkeley Computational Imaging Lab

https://henrypinkard.github.io/

Computer Vision Laboratory @ Kyoto University (Ko Nishino, Ken Sakurada, Ryo Kawahara)

https://vision.ist.i.kyoto-u.ac.jp/

ML for drug discovery, protein dynamics, medical image analysis, PhD, Max-Delbrück Center Berlin

M.Sc. @ ETH Zurich, 👀 for Ph.D. opportunities

3D vision, neural rendering, and 3D reconstruction

📍Zurich, Switzerland

Research Fellow @ York. Using Computer Vision to improve film and tv production. Interested in 3D body models, video for 3D, and inverse rendering.

Defence, 3D computer vision, EW, GNSS, open source and C++. #NAFO

R&D Lead Engineer at Milrem Robotics.

github.com/valgur

Currently studying for a Master's in Human-Centered AI at the Technical University of Denmark, interested in 3D vision and Vision Language Models

Previously a bachelor's student at Lund University

PhD student @stanfordnlp.bsky.social. Robotics Intern at the Toyota Research Institute. I like language, robots, and people.

On the academic job market!

PhD candidate @ ETH Zurich & Max Planck Institute for Informatics

From SLAM to Spatial AI; Professor of Robot Vision, Imperial College London; Director of the Dyson Robotics Lab; Co-Founder of Slamcore. FREng, FRS.