01.07.2025 02:26 — 👍 4 🔁 1 💬 0 📌 0

01.07.2025 02:26 — 👍 4 🔁 1 💬 0 📌 0

YouTube video by Diego Molina

Juno - This Is The Way It Goes And Goes And Goes (Full Album) (1999)

www.youtube.com/watch?v=jiwk...

16.06.2025 02:21 — 👍 0 🔁 0 💬 0 📌 0

Awesome!

06.04.2025 14:59 — 👍 0 🔁 0 💬 0 📌 0

Text from van der Vaart, "Asymptotic Statistics" Ch 27, http://www.stat.yale.edu/~pollard/Books/LeCamFest/VanderVaart.pdf

The theorem may have looked to somewhat too complicated to gain popularity. Nevertheless Hájek's result, for general locally asymptotically normal models and general loss functions, is now considered the final result in this direction, Hájek wrote:

"The proof that local asymptotic minimax implies local asymptotic admissibility was first given by LeCam (1953, Theorem 14). ... Apparently not many people have studied Le Cam's paper so far as to read this very last theorem, and the present author is indebted to Professor LeCam for giving him the reference"

Not reading to the end of Le Cam's papers became not uncommon in later years. His ideas have been regularly rediscovered

Went to look up textbook results after getting the nagging feeling that an ML paper was reinventing classical ideas, and found this gem:

"Not reading to the end of Le Cam's papers became not uncommon in later years. His ideas have been regularly rediscovered."

At least they're in good company.

28.03.2025 23:37 — 👍 18 🔁 3 💬 1 📌 1

Ok I think I'll stop now :) I'm always amazed at how ahead of its time this work was.

It's too bad it's not as widely known among us causal+ML people

17.02.2025 02:47 — 👍 2 🔁 0 💬 0 📌 0

x.com

Pfanzagl uses pathwise differentiability above, but w/regularity conditions this is just a distributional Taylor expansion, which is easier to think about

I note this in my tutorial here:

www.ehkennedy.com/uploads/5/8/...

Also v related to so-called "Neyman orthogonality" - worth separate thread

17.02.2025 02:47 — 👍 1 🔁 0 💬 1 📌 0

Here’s Pfanzagl on the gradient of a functional/parameter, aka derivative term in a von Mises expansion, aka influence function, aka Neyman-orthogonal score

Richard von Mises first characterized smoothness this way for stats in the 30s/40s! eg:

projecteuclid.org/journals/ann...

17.02.2025 02:47 — 👍 1 🔁 0 💬 1 📌 0

From twitter:

A short thread:

It amazes me how many crucial ideas underlying now-popular semiparametrics (aka doubly robust parameter/functional estimation / TMLE / double/debiased/orthogonal ML etc etc) were first proposed many decades ago.

I think this is widely under-appreciated!

30.09.2024 03:11 — 👍 45 🔁 12 💬 3 📌 2

The m-estimator logic certainly relies on “exactly correct”

Once you start moving to “close enough” to me that means you’re no longer getting precise root-n rates with the nuisances. Then you’ll have to deal with the bias/variance consequences just as if you were using flexible ML

11.02.2025 15:12 — 👍 3 🔁 0 💬 0 📌 0

And here for more specific discussion:

arxiv.org/pdf/2405.08525

I think DR estimation vs inference are two quite different things and we need different assumptions to make them work

11.02.2025 14:47 — 👍 2 🔁 0 💬 0 📌 0

If we really rely on 2 parametric models, we should of course use a variance estimator recognizing this. But this is more about how we model nuisances vs DR estimator itself

Also our paper here suggests strictly more assumptions are needed for DR inference vs estimation:

arxiv.org/pdf/2305.04116

11.02.2025 14:43 — 👍 3 🔁 0 💬 1 📌 0

I find it much more believable that I could estimate both nuisances consistently, but at slower rates, vs that I could pick 2 parametric models (without looking at data) & happen to get one exactly correct

11.02.2025 14:43 — 👍 5 🔁 0 💬 2 📌 0

Hm not sure I agree with this logic…

To me the beautiful thing about the DR estimator is you can get away with estimating both nuisances at slower rates (as long as the product is < 1/sqrt(n))

This opens the door to using much more flexible methods - random forests, lasso, ensembles, etc etc

11.02.2025 14:43 — 👍 3 🔁 0 💬 2 📌 0

"Randomized trials should be used to answer any causal question that can be so studied...

But the reality is that observational methods are used everyday to answer pressing causal questions that cannot be studied in randomized trials."

- Jamie Robins, 2002

tinyurl.com/4yuxfxes

tinyurl.com/zncp39mr

13.01.2025 02:49 — 👍 24 🔁 3 💬 2 📌 0

What's the best paper you read this year?

27.12.2024 17:02 — 👍 35 🔁 4 💬 13 📌 2

Here's the recent paper!

bsky.app/profile/edwa...

19.12.2024 02:24 — 👍 8 🔁 0 💬 0 📌 0

Thank you Alec for leading this project, I learned a lot! This paper has a very useful study of what contrasts are feasible in situations with many treatments and positivity violations, including necessary assumptions and efficient one-step estimators. Check it out!

13.12.2024 23:53 — 👍 12 🔁 3 💬 0 📌 0

Found slides by Ankur Moitra (presented at a TCS For All event) on "How to do theoretical research." Full of great advice!

My favourite: "Find the easiest problem you can't solve. The more embarrassing, the better!"

Slides: drive.google.com/file/d/15VaT...

TCS For all: sigact.org/tcsforall/

13.12.2024 20:31 — 👍 132 🔁 29 💬 3 📌 4

@bonv.bsky.social presented this at NYU this week -- terrific work with an excellent presentation (no surprise there)! I found the connections to higher-order estimators and the orthogonalizing property of the U-stat kernel fascinating&illuminating.

13.12.2024 19:05 — 👍 2 🔁 1 💬 1 📌 0

has lots of connections to doubly robust inference

academic.oup.com/biomet/artic...

arxiv.org/abs/1905.00744

arxiv.org/abs/2107.06124

13.12.2024 04:06 — 👍 1 🔁 0 💬 1 📌 0

I see renewed discussion on #statsky about the interpretation of confidence intervals. I will leave here this quote from Larry Wasserman's All of Statistics, which I love. Controlling one's lifetime proportion of studies with an interval that does not contain the parameter is surely desirable!

06.12.2024 14:44 — 👍 35 🔁 4 💬 1 📌 0

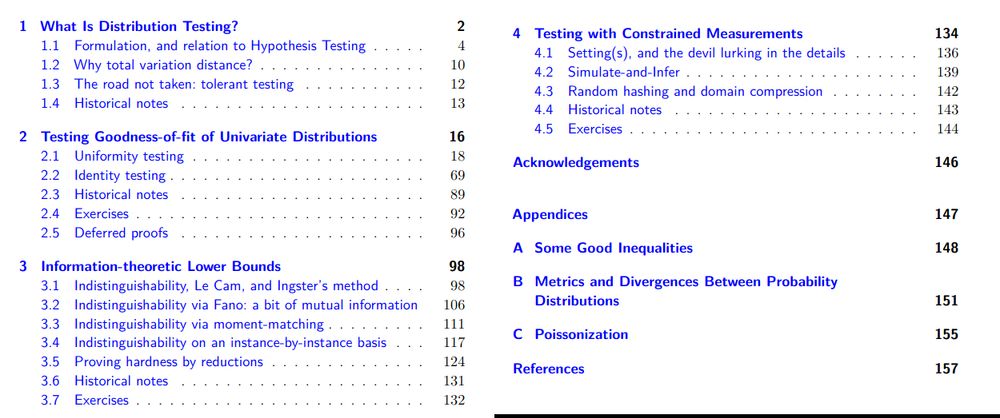

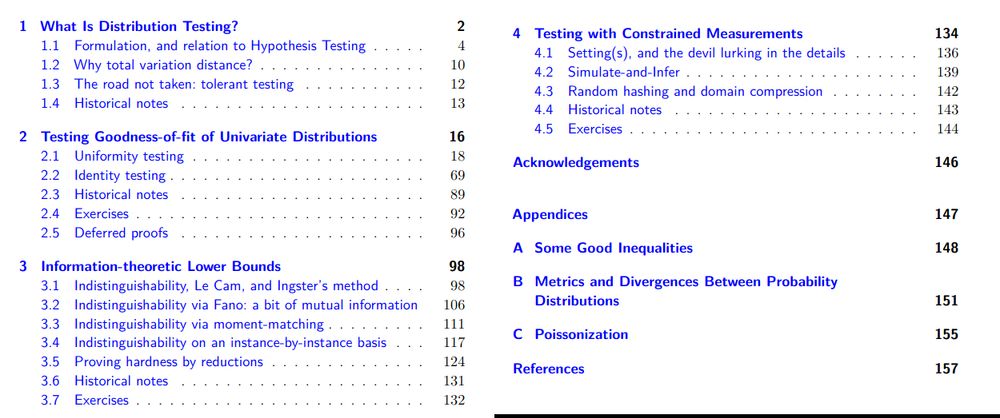

Table of contents of the monograph

Reminder/plug: my graduate-level monograph on "Topics and Techniques in Distribution Testing" (FnT Comm. and Inf Theory, 2022).

📖 ccanonne.github.io/survey-topic... [Latest draft+exercise solns, free]

📗 nowpublishers.com/article/Deta... [Official pub]

📝 github.com/ccanonne/sur... [LaTeX source]

15.11.2024 20:02 — 👍 72 🔁 9 💬 3 📌 0

I strongly believe that the average treatment effect is given way too much

prominence in economics and econometrics. ATE can be informative, but it

can also badly mislead policy makers and decision makers. If we know the joint

distribution of potential outcomes, then we may be able to better calibrate

the policy. I hope that Kolmogorov bounds will become a part of the modern

econometrician’s toolkit. A good place to learn more about this approach is

Fan and Park (2010). Mullahy (2018) explores this approach in the context of

health outcomes.

Chuck Manski revolutionized econometrics with the introduction of set

identification. He probably does not think so, but Chuck has changed the

way many economists and most econometricians think about problems. We

think much harder about the assumptions we are making. Are the assumptions

credible? We are much more willing to present bounds on estimates, rather

than make non-credible assumptions to get point estimates.

Manski’s natural bounds allow the researcher to estimate the potential

effect of the policy with minimal assumptions. These bounds may not be

informative, but that in and of itself is informative. Stronger assumptions may

lead to more informative results but at the risk that the assumptions, not the

data, determine the results.

🔥🔥🔥 from Chris Adams's "Learning Microeconometrics with R:"

25.11.2024 02:20 — 👍 23 🔁 5 💬 1 📌 2

If by distribution of the treatment effect you mean the distribution of the counterfactual difference Y1-Y0, then (a) this is not a soft intervention (asks what happen if all treated vs control), and (b) that distribution (other than the mean) is not identified even when all confounders are measured

25.11.2024 14:47 — 👍 0 🔁 0 💬 0 📌 0

The main motivation is that soft / stochastic intervention effects are typically much more realistic/plausible than more standard effects - instead of asking what would happen if every single person got treatment vs control, they ask what would happen if the treatment distribution shifted slightly

24.11.2024 15:45 — 👍 6 🔁 1 💬 4 📌 0

"There’s no way you can just sit down & do a `big thing', or at least I can’t. So I just went back to doing lots of little things, & hoping that some of them will turn out okay. Statistics is a wonderfully forgiving field... all you have to do is get an idea & keep at it."

- Brad Efron #statsquotes

24.11.2024 03:09 — 👍 52 🔁 5 💬 3 📌 2

Professor of Epidemiology

Emory University

Sociologist at @gesis.org working with EU-SILC in @fdzgml.bsky.social. Research interests: econometrics, survey methodology, sociology of wealth, family sociology, integration/migration, ethnic segregation. Typos galore🌻🏴🚩

Rhetoric professor at Carnegie Mellon. Lawyer, bold wordsmith. Exploring how legal language shapes justice, identity, and power. Latest book Judicial Rhapsodies: https://www.fulcrum.org/concern/monographs/b2773z23s

Data Scientist and Postdoc at @pophel.bsky.social. PhD in Demography and Sociology at UPenn, Statistics MA at Wharton. My research focuses on mortality determinants and trends. Bayesian statistics, forecasting, statistical modeling.

Columnist and chief data reporter the Financial Times | Stories, stats & scatterplots | john.burn-murdoch@ft.com

📝 ft.com/jbm

FT columnist, BBC journalist, author, host of Cautionary Tales, professional nerd.

Statistician at UW developing methods for demography, climate change, cluster analysis, model selection & averaging.

Math Assoc. Prof. (On leave, Aix-Marseille, France)

Teaching Project (non-profit): https://highcolle.com/

social epidemiologist with @harvardstriped.bsky.social | queer mental health, eating disorders, quantitative intersectionality | she/her 🌈

Assistant Teaching Professor, Department of Statistics & Data Science, Carnegie Mellon University, Director of Carnegie Mellon #SportsAnalytics Center (CMSAC) https://stat.cmu.edu/cmsac

Lecturer at School of Population Health, RCSI University of Medicine and Health Sciences. Interested in epidemiological modelling, ageing, chronic disease, stroke, dementia and cognitive impairment, quality of life.

hi this is @annierau.bsky.social! my DMs are open

Genetics, bioinformatics, comp bio, statistics, data science, open source, open science!

Assistant Professor of Epidemiology at Brown University. Dementia Pharmacoepidemiology. Dynamical Modeling. Statistics. Metascience & Knowledge Representation. Cat Mom. She/her.

PhD student in Health Policy, Methods for Policy Research | Harvard University

Passionate about research in mental health, the social safety net, and research methods

Views are my own and don't represent those of employer

Rollins Assistant Professor of Biostatistics @EmoryRollins, PhD @JHU.edu

Interested in causal inference, missing data, machine learning, algorithmic fairness, non/semiparametrics, graphical models, etc

raziehnabi.com

Asst Prof at Carnegie Mellon Stats Dept interested in infectious disease, genomics, time series, and discrete stable distributions.

01.07.2025 02:26 — 👍 4 🔁 1 💬 0 📌 0

01.07.2025 02:26 — 👍 4 🔁 1 💬 0 📌 0