Joint work w/ wonderful colleagues at Google: Ananth Balashankar (co-lead), @jonathanberant.bsky.social, @jacobeisenstein.bsky.social, Michael Collins, Adrian Hutter, Jong Lee, Chirag Nagpal, Flavien Prost, Ananda Theertha Suresh, and @abeirami.bsky.social.

11.02.2025 16:26 — 👍 3 🔁 0 💬 0 📌 0

Check out the paper for more details: arxiv.org/pdf/2412.19792.

11.02.2025 16:26 — 👍 1 🔁 0 💬 1 📌 0

We also show that our proposed reward calibration method is a strong baseline for optimizing standard win rate on all considered datasets, with comparable or better performance than other SOTA methods, demonstrating the benefits of the reward calibration step.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

For Worst-of-N, we use the Anthropic harmlessness dataset and observe similar improvements. The best improvement is achieved by an exponential transformation with a negative exponent.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

We empirically compare InfAlign-CTRL with other SOTA alignment methods. For Best-of-N, we use the Anthropic helpfulness and Reddit summarization quality datasets. We show that it offers a 3-8% improvement in inference-time win rate, achieved by an exponential transformation with a positive exponent.

11.02.2025 16:26 — 👍 1 🔁 0 💬 1 📌 0

We provide an analytical tool to compare InfAlign-CTRL with different transformation functions for a given inference-time procedure. We find that exponential transformations, which optimize different quantiles of the reward for different values of the exponent t, achieve close-to-optimal performance for BoN and WoN.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

We then particularize the study to two popular inference-time strategies, Best-of-N sampling (BoN) and Best-of-N jailbreaking (Worst-of-N, WoN). Despite its simplicity, BoN is known to be an effective procedure for inference-time alignment and scaling. Variants of WoN are effective for evaluating safety against jailbreaks.

11.02.2025 16:26 — 👍 1 🔁 0 💬 1 📌 0
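The two procedures can be sketched in a few lines. This is a minimal illustration, not the paper's code: `best_of_n`, `worst_of_n`, and the toy length-based reward are hypothetical names chosen here, and the candidates stand in for N samples drawn from the policy.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def best_of_n(candidates: Sequence[T], reward: Callable[[T], float]) -> T:
    """Return the candidate with the highest reward (Best-of-N selection)."""
    return max(candidates, key=reward)


def worst_of_n(candidates: Sequence[T], reward: Callable[[T], float]) -> T:
    """Return the candidate with the lowest reward.

    This models an adversary who draws N responses and keeps the least
    safe one, which is how Worst-of-N is read in this sketch.
    """
    return min(candidates, key=reward)


# Toy stand-ins (assumptions): three "responses" scored by their length.
samples = ["a", "bbb", "cc"]
toy_reward = len

best = best_of_n(samples, toy_reward)
worst = worst_of_n(samples, toy_reward)
```

In practice the reward would come from a learned reward model or a safety classifier rather than a toy function.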

The reward calibration step makes the reward model more robust to its learning process. We empirically show that it can help mitigate reward hacking. The transformation function allows us to further tailor the alignment objective to different inference-time procedures.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

To enable practical solutions, we provide the calibrate-and-transform RL (InfAlign-CTRL) algorithm to solve this problem, which involves a reward calibration step and a KL-regularized reward maximization step with a transformation Ф of the calibrated reward.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0
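A rough sketch of the calibrate-and-transform idea, under stated assumptions: calibration is taken here to be the empirical rank of a response's reward among base-policy samples for the same prompt, and the transformation is taken to be a sign-corrected exponential with parameter `t`. Both function names and both exact forms are illustrative guesses; the paper has the precise definitions.

```python
import math
from typing import Sequence


def calibrated_reward(score: float, base_scores: Sequence[float]) -> float:
    """Empirical rank of `score` among base-policy rewards, in [0, 1].

    Assumption: calibration maps a raw reward to the fraction of base
    samples it beats, counting ties as half a win.
    """
    wins = sum(1.0 for s in base_scores if s < score)
    ties = sum(0.5 for s in base_scores if s == score)
    return (wins + ties) / len(base_scores)


def exp_transform(c: float, t: float) -> float:
    """Exponential transformation of a calibrated reward (assumed form).

    The sign factor keeps the transform increasing in `c` for negative t.
    t is expected to be nonzero.
    """
    return math.copysign(1.0, t) * math.exp(t * c)


# Hypothetical raw rewards of four base-policy samples for one prompt.
base_scores = [0.1, 0.4, 0.4, 0.9]
c = calibrated_reward(0.4, base_scores)   # beats 1 sample, ties with 2
shaped_pos = exp_transform(c, t=5.0)      # positive exponent (BoN setting in the thread)
shaped_neg = exp_transform(c, t=-5.0)     # negative exponent (WoN setting in the thread)
```

The shaped value would then replace the raw reward in the KL-regularized RL step. A positive `t` puts more weight on the upper range of calibrated reward and a negative `t` on the lower range.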

We show that the optimal reward transformation satisfies a coupled-transformed reward/policy optimization objective, which lends itself to iterative optimization. However, the approach is unfortunately computationally inefficient and infeasible for real-world models.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

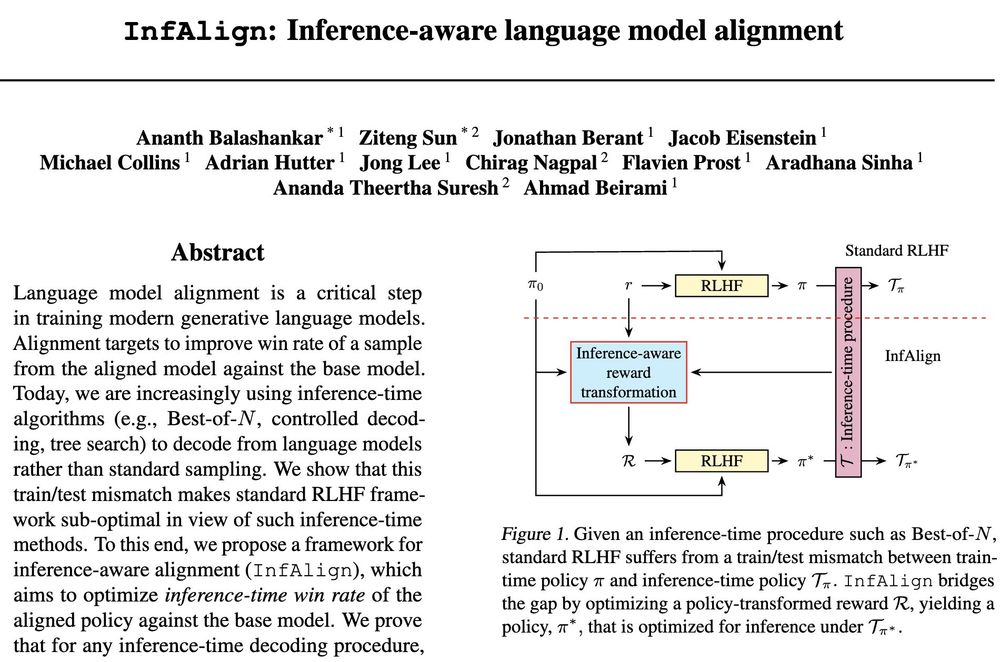

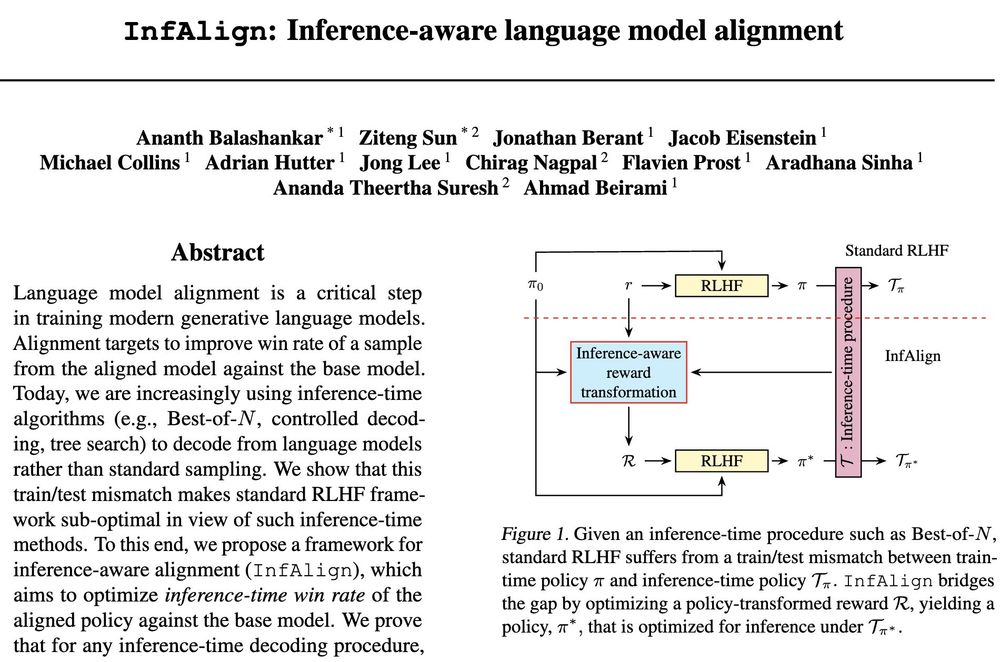

Somewhat surprisingly, we prove that for any inference-time decoding procedure, the optimal aligned policy is the solution to the standard RLHF problem with a transformation of the reward. Therefore, the challenge can be captured by designing a suitable reward transformation.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

To characterize this, we propose a framework for inference-aware alignment (InfAlign), which aims to optimize inference-time win rate of the aligned policy. We show that the standard RLHF framework is sub-optimal in view of the above metric.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0
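To make the metric concrete, here is a toy Monte Carlo sketch. It assumes that inference-time win rate means applying the same procedure (Best-of-N here) to both the aligned and the base policy before comparing outputs; the Gaussian "policies", the identity reward, and all function names are hypothetical stand-ins.

```python
import random
from typing import Callable

Sampler = Callable[[random.Random], float]


def win_rate(sample_a: Sampler, sample_b: Sampler,
             reward: Callable[[float], float],
             trials: int = 20000, seed: int = 0) -> float:
    """Estimate P(reward(a) > reward(b)), counting ties as half a win."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        ra, rb = reward(sample_a(rng)), reward(sample_b(rng))
        total += 1.0 if ra > rb else 0.5 if ra == rb else 0.0
    return total / trials


def with_best_of_n(sample: Sampler, reward: Callable[[float], float],
                   n: int) -> Sampler:
    """Wrap a sampler so each draw is the best of n underlying draws."""
    def procedure(rng: random.Random) -> float:
        return max((sample(rng) for _ in range(n)), key=reward)
    return procedure


# Toy policies (assumptions): outputs are scalars, reward is the identity.
def base(rng: random.Random) -> float:
    return rng.gauss(0.0, 1.0)


def aligned(rng: random.Random) -> float:
    return rng.gauss(0.5, 1.0)


def reward(y: float) -> float:
    return y


standard = win_rate(aligned, base, reward)
inference_time = win_rate(with_best_of_n(aligned, reward, 4),
                          with_best_of_n(base, reward, 4), reward)
```

The two numbers generally differ, which is the point of the thread: a policy tuned for `standard` is not necessarily the one that maximizes `inference_time`.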

Recent works have characterized (near) optimal alignment objectives (IPO, BoN-distillation) for standard win rate. See e.g. arxiv.org/pdf/2406.00832. However, when the inference-time compute is considered, the outcomes are obtained from a distribution that depends on the inference-time procedure.

11.02.2025 16:26 — 👍 0 🔁 0 💬 1 📌 0

RLHF generally entails training a reward model and then solving a KL-regularized reward maximization problem. Success is typically measured through the win rate of samples from the aligned model against the base model through standard sampling.

11.02.2025 16:26 — 👍 3 🔁 0 💬 1 📌 0
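For reference, the objective and metric described above are commonly written as follows. The notation is an assumption made here, not taken from the thread: $\pi_{\mathrm{ref}}$ is the base policy, $r$ the reward model, $\beta > 0$ the regularization strength, and the win rate is judged by $r$ itself with ties counted as half.

```latex
\max_{\pi}\;
  \mathbb{E}_{y \sim \pi(\cdot \mid x)}\bigl[ r(x, y) \bigr]
  \;-\; \beta\, \mathrm{KL}\bigl( \pi(\cdot \mid x) \,\|\, \pi_{\mathrm{ref}}(\cdot \mid x) \bigr)

W(\pi \succ \pi_{\mathrm{ref}} \mid x)
  \;=\; \mathbb{E}_{y \sim \pi(\cdot \mid x),\; z \sim \pi_{\mathrm{ref}}(\cdot \mid x)}
  \Bigl[ \mathbf{1}\{ r(x, y) > r(x, z) \} + \tfrac{1}{2}\, \mathbf{1}\{ r(x, y) = r(x, z) \} \Bigr]
```

The first expression has the well-known closed-form maximizer $\pi^{*}(y \mid x) \propto \pi_{\mathrm{ref}}(y \mid x)\, \exp\bigl( r(x, y) / \beta \bigr)$.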

Inference-time procedures (e.g. Best-of-N, CoT) have been instrumental to the recent development of LLMs. Standard RLHF focuses only on improving the trained model. This creates a train/inference mismatch.

𝘊𝘢𝘯 𝘸𝘦 𝘢𝘭𝘪𝘨𝘯 𝘰𝘶𝘳 𝘮𝘰𝘥𝘦𝘭 𝘵𝘰 𝘣𝘦𝘵𝘵𝘦𝘳 𝘴𝘶𝘪𝘵 𝘢 𝘨𝘪𝘷𝘦𝘯 𝘪𝘯𝘧𝘦𝘳𝘦𝘯𝘤𝘦-𝘵𝘪𝘮𝘦 𝘱𝘳𝘰𝘤𝘦𝘥𝘶𝘳𝘦?

Check out below.

11.02.2025 16:26 — 👍 25 🔁 6 💬 1 📌 4
