@samy234.bsky.social

How potentially practical is the theoretical performance?

27.06.2025 16:23 — 👍 0 🔁 0 💬 0 📌 0

Run when you hear the sirens coming ...

www.youtube.com/watch?v=sanH...

Why is it not negative? Do they think Trump will come to his senses and change his policy to prevent a recession?

29.04.2025 13:45 — 👍 2 🔁 0 💬 3 📌 0

A Plea for Ideological Laziness in an Age of Crisis

chatgpt.com/s/dr_680cd86...

I agree it's a hard problem and you need smarter people in power to tackle it.

05.04.2025 16:43 — 👍 0 🔁 0 💬 0 📌 0

How to finance the increased costs of manufacturing in the US? Where is the labour coming from, if you still have high employment? What about supply chains in a trade war with reciprocal tariffs?

05.04.2025 15:45 — 👍 0 🔁 0 💬 1 📌 0

Google Gemini 2.5 is the first public AI model to definitively beat the performance of human PhDs with access to Google on hard multiple choice problems inside their field of expertise (around 81%).

All AI tests are flawed, but GPQA Diamond has been a pretty good one.

& conducted independently.

Anyone else experiencing a sort of cognitive-emotional lag when working with AI? Like your emotional system isn't adapted to the productivity and switching so fast between such complex tasks? Leaving you with a feeling of being overwhelmed even though the tasks only take a few minutes?

03.04.2025 13:00 — 👍 1 🔁 0 💬 0 📌 0

What do companies get out of this process? I believe there are only 2 things you have to check:

1. Is the chemistry right with the team?

2. Is the person competent enough to solve a task and communicate the solution to you?

You don't need geniuses to work a corporate job.

And they're beating OpenAI in price and features.

26.03.2025 15:14 — 👍 1 🔁 0 💬 0 📌 0

DeepSeek documented the changes to some extent.

Source: api-docs.deepseek.com/updates

🥁Introducing Gemini 2.5, our most intelligent model with impressive capabilities in advanced reasoning and coding.

Now integrating thinking capabilities, 2.5 Pro Experimental is our most performant Gemini model yet. It’s #1 on the LM Arena leaderboard. 🥇

Gemini 2.5 pro is out, and you can test it for free right now (5 RPM).

blog.google/technology/g...

DeepSeek's new V3 model (0324) is a major update in performance and license. The MIT license will make it hugely impactful for research and open building. Many are ending up confused, though, about whether it is a "reasoning" model. The model is contrasted with their R1 model, which is a reasoning-only model.

25.03.2025 15:15 — 👍 19 🔁 2 💬 2 📌 0

Don't get high on your own supply, Tay Musk

23.03.2025 09:20 — 👍 0 🔁 0 💬 0 📌 0

Tencent Hunyuan-T1, their AI reasoning model. Powered by Hunyuan TurboS, it's built for speed, accuracy, and efficiency.

✅ Hybrid-Mamba-Transformer MoE Architecture – The first of its kind for ultra-large-scale reasoning

✅ Strong Logic & Concise Writing – Precise following of complex instructions

I think people have to distinguish between the unfounded promises of superhuman AGI and the actual usability revolution that is happening through LLMs. Now everyone can access complex computing through natural language, and we haven't adjusted to this paradigm change yet.

21.03.2025 07:22 — 👍 3 🔁 0 💬 0 📌 0

It is! After testing the current Gemini 2.0 models on my RAG tasks, along with the current top, pro, or preview models from several vendors, I'm not sure how they will compete with Google in the long run given these prices.

21.03.2025 02:24 — 👍 0 🔁 0 💬 0 📌 0

Google's Gemini 2.0 models are underrated for RAG applications; first benchmarks look really promising.

20.03.2025 15:23 — 👍 0 🔁 0 💬 0 📌 0

Don't go to the free speech for white and orange nazis land if you have private messages critical of the regime on your phone.

www.theguardian.com/us-news/2025...

This feels a bit like the stock market during the 2008 financial crisis, but instead of waiting for political signals on which bank is being saved, you wait for the orange man to wake up at 15:30 CET and spew his crazy declarations on his "social" media platform.

18.03.2025 10:01 — 👍 1 🔁 0 💬 0 📌 0

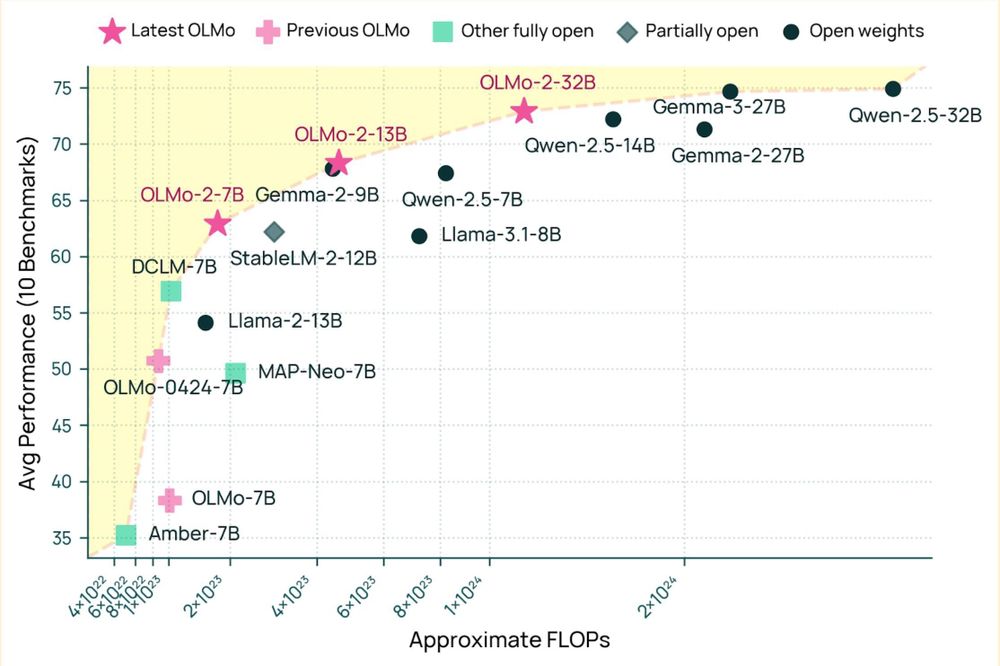

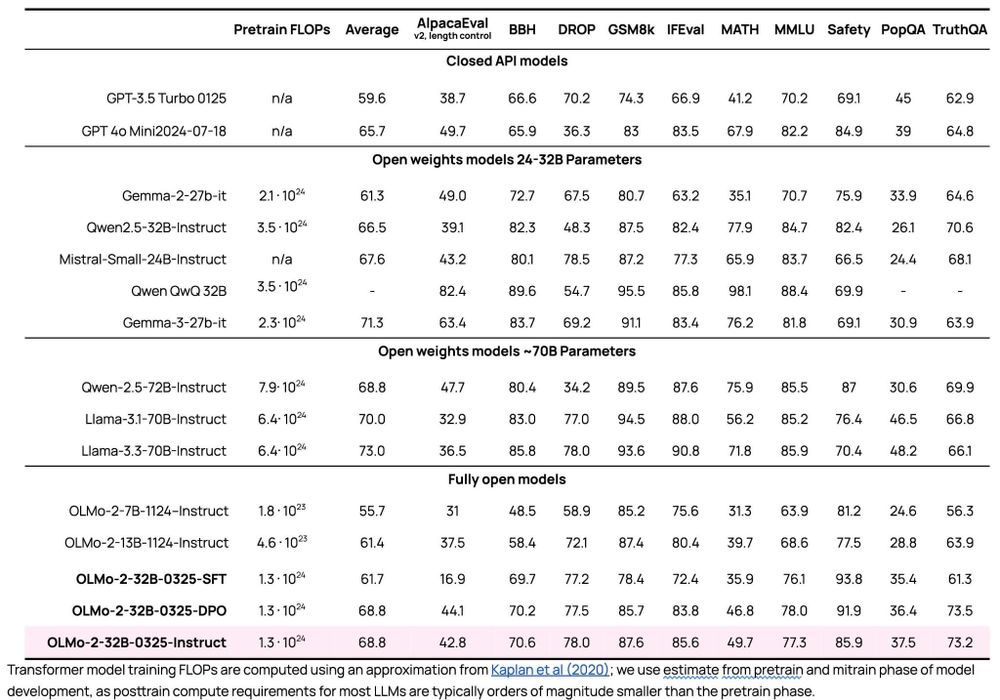

A very exciting day for open-source AI! We're releasing our biggest open source model yet -- OLMo 2 32B -- and it beats the latest GPT 3.5, GPT 4o mini, and leading open weight models like Qwen and Mistral. As usual, all data, weights, code, etc. are available.

13.03.2025 18:16 — 👍 141 🔁 37 💬 5 📌 3

"too incompetent to realize that they’re incompetent"

11.03.2025 13:20 — 👍 0 🔁 0 💬 0 📌 0

Total shitshow of incompetence.

You all know immediately who I mean.

In a shocking turn of events, many of the people who said they wanted "free speech" actually just wanted to be the ones in charge of which speech is free.

10.03.2025 02:44 — 👍 13030 🔁 2345 💬 244 📌 64

Free speech?

www.theguardian.com/us-news/2025...

Makes intuitive sense: how would the model know which tokens to pay attention to while embedding the needle in advance? "Noise" is therefore equally important during indexing. 4k-token embeddings would only make sense if the needle is so complex that it takes up 4k tokens.

08.03.2025 08:23 — 👍 1 🔁 0 💬 0 📌 0

QwQ-32B is on par with R1:671B on math and coding tasks

They used 2 stages of RL, first math and coding and then general tasks.

Maybe this is the next coding model??

qwenlm.github.io/blog/qwq-32b/

Newsletter: Microsoft pulled back on over a gigawatt of planned data center capacity, suggesting that they do not think there is a growth future in generative AI. Meanwhile, SoftBank, the only company that can afford to fund OpenAI, has to take out loans to do so.

www.wheresyoured.at/power-cut/