YouTube video by Dwarkesh Patel

Richard Sutton – Father of RL thinks LLMs are a dead end

The way Sutton himself interprets the “bitter lesson” in this interview definitely caught a lot of bitter lesson enthusiasts off guard.

LLMs not actually being an example of the bitter lesson was quite a nuance no one saw coming.

youtu.be/21EYKqUsPfg?...

04.10.2025 03:55 — 👍 38 🔁 2 💬 2 📌 1

OSF

So far, learning traps seem robust to social learning in our cases. Surprisingly, despite many manipulations that have tried to reduce this learning trap, the most effective has been simply being a child (see @emilyliquin.bsky.social's work on traps in children) osf.io/preprints/ps...

26.09.2025 03:30 — 👍 3 🔁 1 💬 0 📌 0

The New York Times piece today about US science is terrible and wrong—in many ways.

I could write a whole article about this, but as one example:

“To close observers, the original crisis began well before any of this…”

No. I’m a close observer of science, and this is incorrect.

22.09.2025 12:20 — 👍 919 🔁 227 💬 24 📌 31

This is in principle justified by the Rao-Blackwell theorem? One abstracts the problem enough that the data we do have is a sufficient statistic for the inference problem.

16.09.2025 16:06 — 👍 1 🔁 0 💬 0 📌 0

Can a single cell learn? Even without a brain, some microbes show simple forms of cognition. Can this basal cognition be engineered? Check our new paper with @jordiplam.bsky.social on the minimal synthetic circuits & their cognitive limits. @drmichaellevin.bsky.social www.biorxiv.org/content/10.1...

10.09.2025 11:48 — 👍 107 🔁 42 💬 4 📌 6

I’m not sure how useful this form is for characterizing a part of the brain that does a specific computation, though. The heart is an important part of keeping me alive to do face processing, but it doesn’t seem useful to say face processing -> heart is active, though it’s logically correct.

06.09.2025 15:56 — 👍 3 🔁 0 💬 0 📌 1

LLRX republished the blogpost www.llrx.com/2025/08/ai-s...

22.08.2025 20:20 — 👍 13 🔁 5 💬 0 📌 1

Theoretical neuroscience has room to grow

Nature Reviews Neuroscience - The goal of theoretical neuroscience is to uncover principles of neural computation through careful design and interpretation of mathematical models. Here, I examine...

I wrote a Comment on neurotheory, and now you can read it!

Some thoughts on where neurotheory has and has not taken root within the neuroscience community, how it has shaped those subfields, and where we theorists might look next for fresh adventures.

www.nature.com/articles/s41...

20.08.2025 16:09 — 👍 150 🔁 52 💬 8 📌 3

MIT report: 95% of generative AI pilots at companies are failing

There’s a stark difference in success rates between companies that purchase AI tools from vendors and those that build them internally.

MIT’s NANDA initiative found that 95% of generative AI deployments fail, after interviewing 150 execs, surveying 350 workers, and analyzing 300 projects. The real “productivity gains” seem to come from layoffs and squeezing more work from fewer people, not AI.

20.08.2025 04:51 — 👍 2528 🔁 1086 💬 51 📌 332

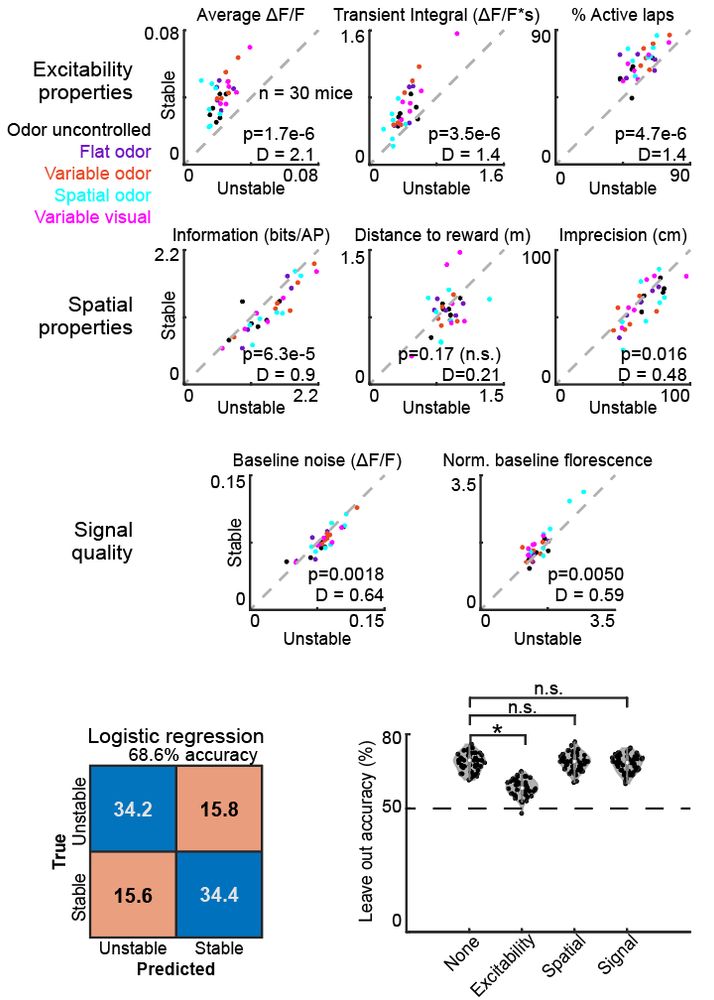

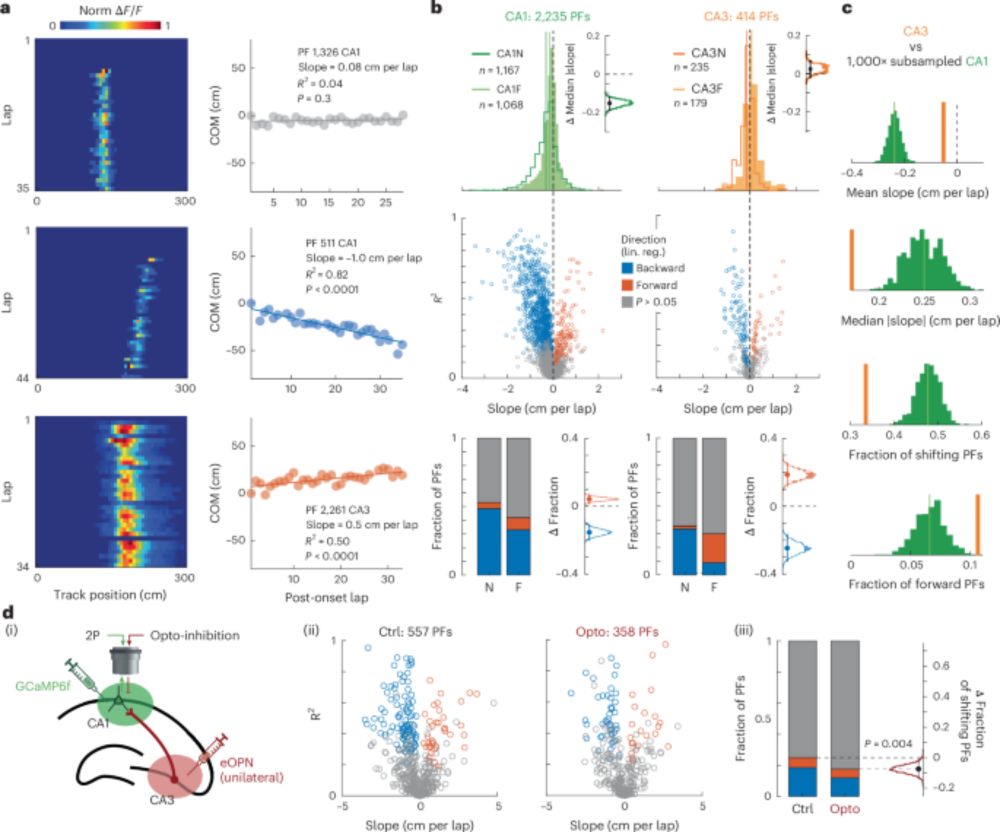

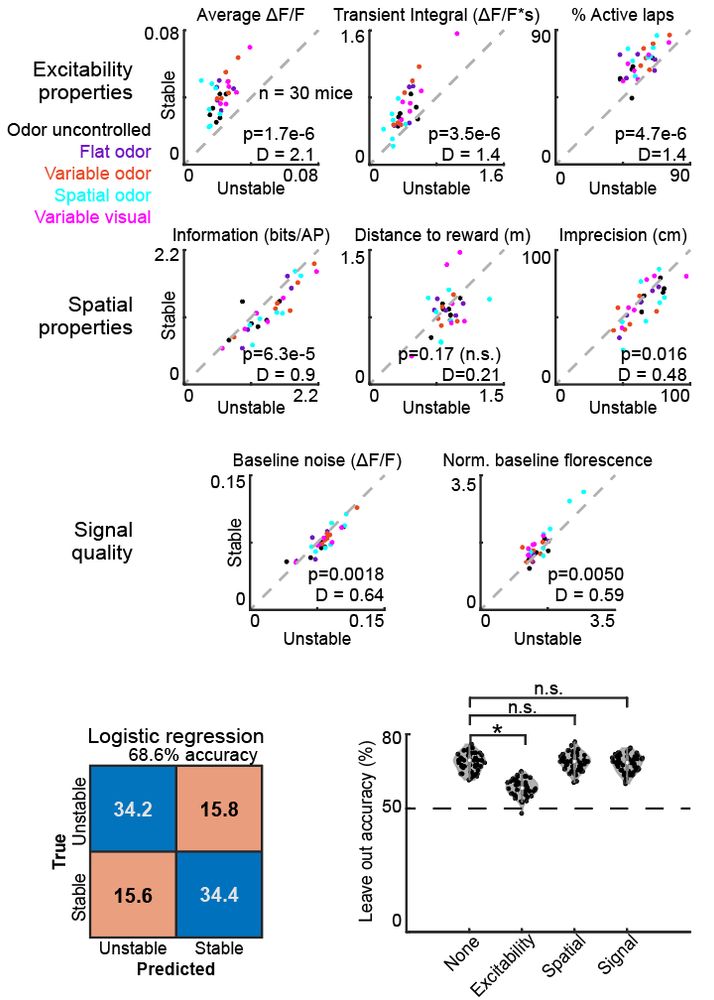

So what drives drift? We looked closely at the neurons and found that a small group of them were stable. These stable neurons were more excitable than neighboring cells, making the fate of the cells predictable.

23.07.2025 16:15 — 👍 6 🔁 2 💬 1 📌 0

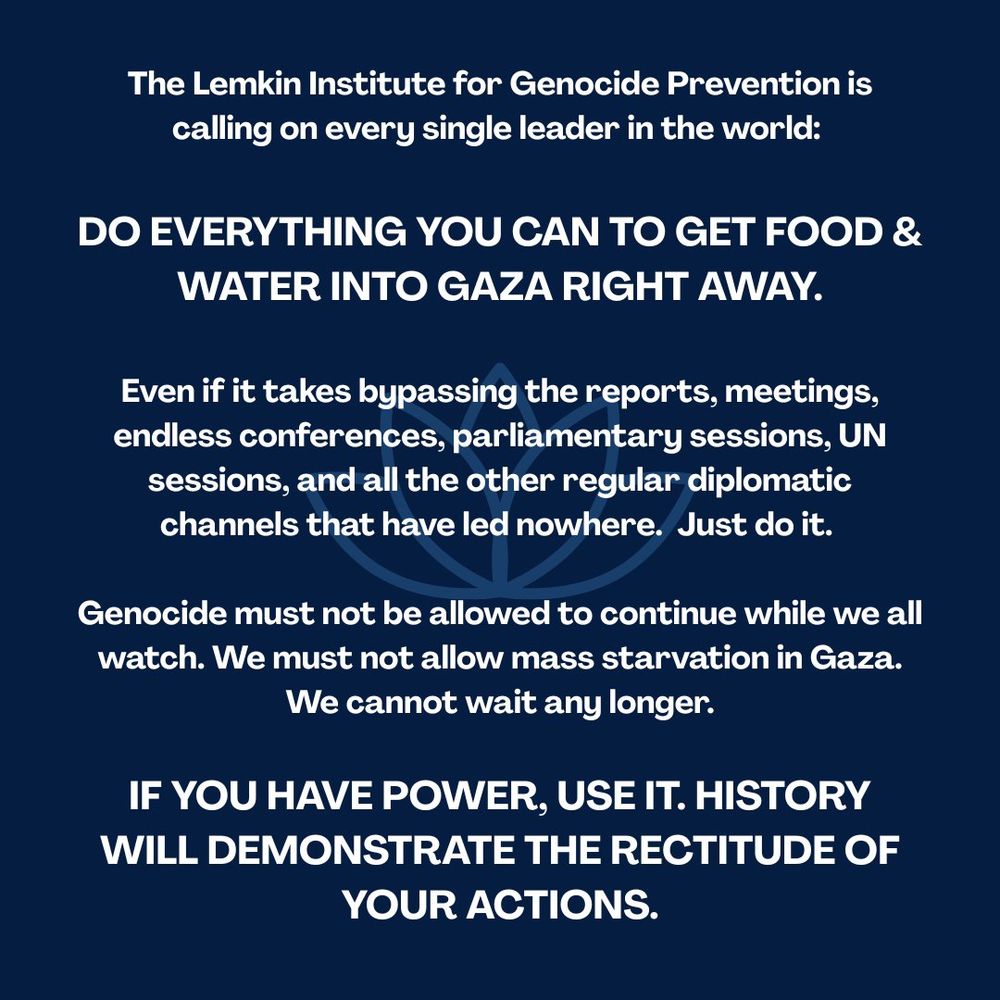

The Lemkin Institute for Genocide Prevention is calling on every single leader in the world: DO EVERYTHING YOU CAN TO GET FOOD & WATER INTO GAZA RIGHT AWAY. Even if it takes bypassing the reports, meetings, endless conferences, parliamentary sessions, UN sessions, and all the other regular diplomatic channels that have led nowhere. Just do it. Genocide must not be allowed to continue while we all watch. We must not allow mass starvation in Gaza. We cannot wait any longer. IF YOU HAVE POWER, USE IT. HISTORY WILL DEMONSTRATE THE RECTITUDE OF YOUR ACTIONS.

DO EVERYTHING YOU CAN TO GET FOOD AND WATER IN TO GAZA.

This is from the Lemkin Institute, begging... we are all begging.

21.07.2025 21:57 — 👍 621 🔁 407 💬 7 📌 9

“this is an unfair comparison because the model has not been trained on all data that has ever existed and on all future data that will be digitalized! Our foundation model is omniscient which renders the concept of generalization null!!!!”

16.07.2025 19:16 — 👍 1 🔁 0 💬 1 📌 0

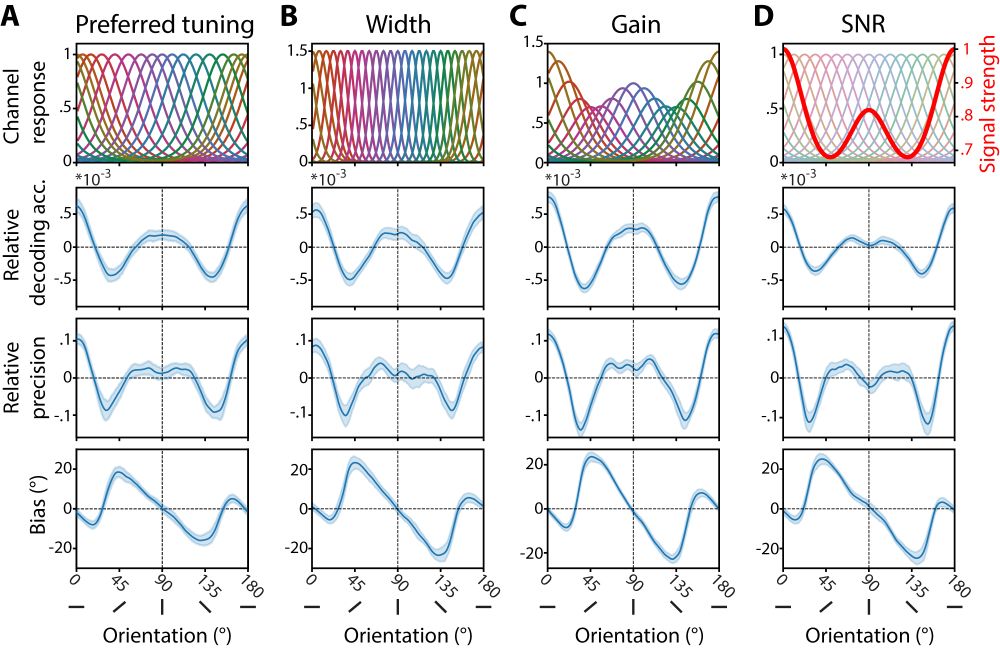

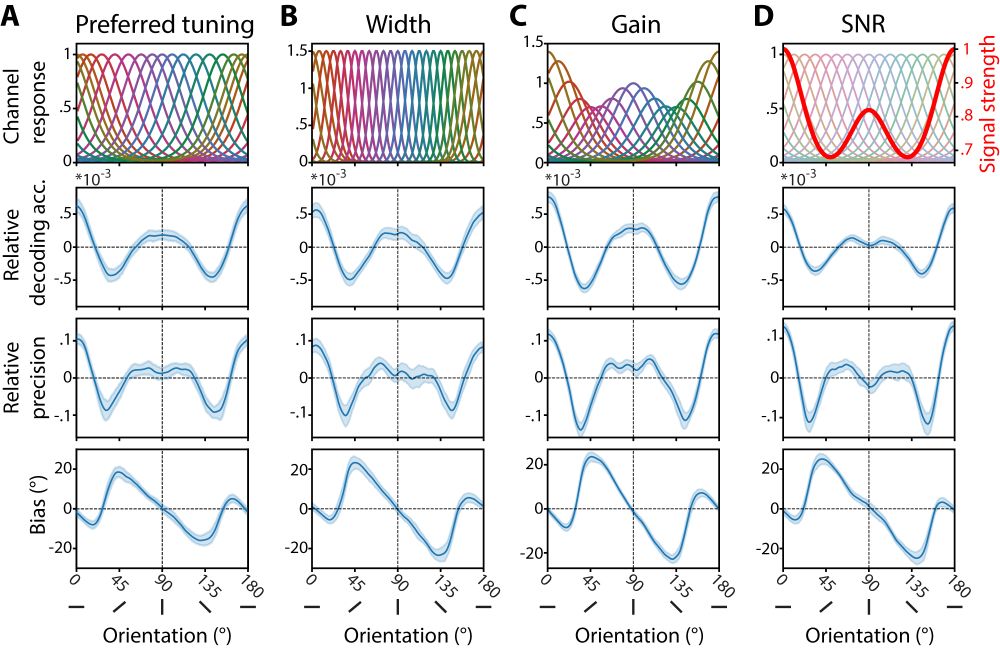

Model mimicry limits conclusions about neural tuning and can mistakenly imply unlikely priors

Nature Communications - Model mimicry limits conclusions about neural tuning and can mistakenly imply unlikely priors

Who doesn't like a good model of the brain? Yet, from simple regression to neural nets, some limitations keep popping up (e.g., overfitting) @mjwolff.bsky.social & I saw some cool but puzzling data, ran a quick analysis & found one such limitation: model mimicry. Now in #naturecommunications &🧵below

02.07.2025 08:50 — 👍 69 🔁 24 💬 1 📌 0

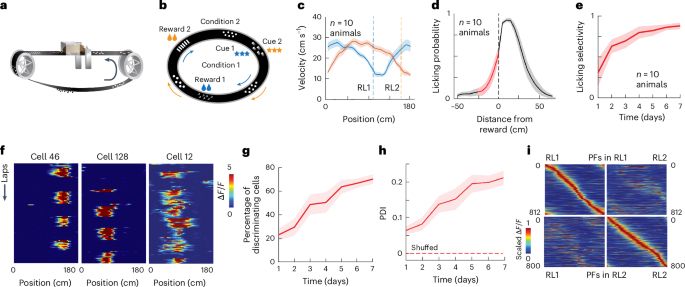

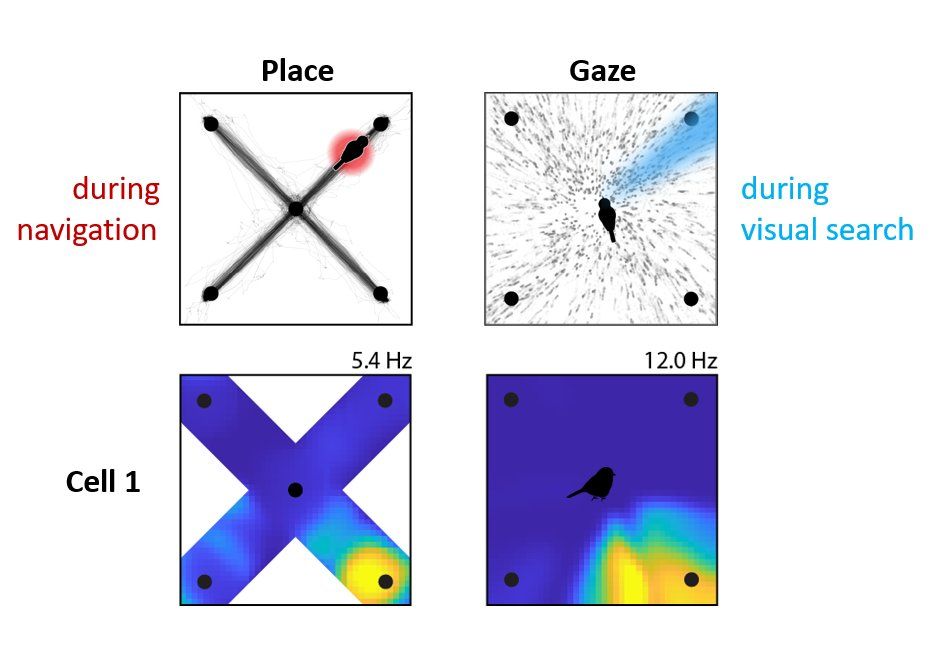

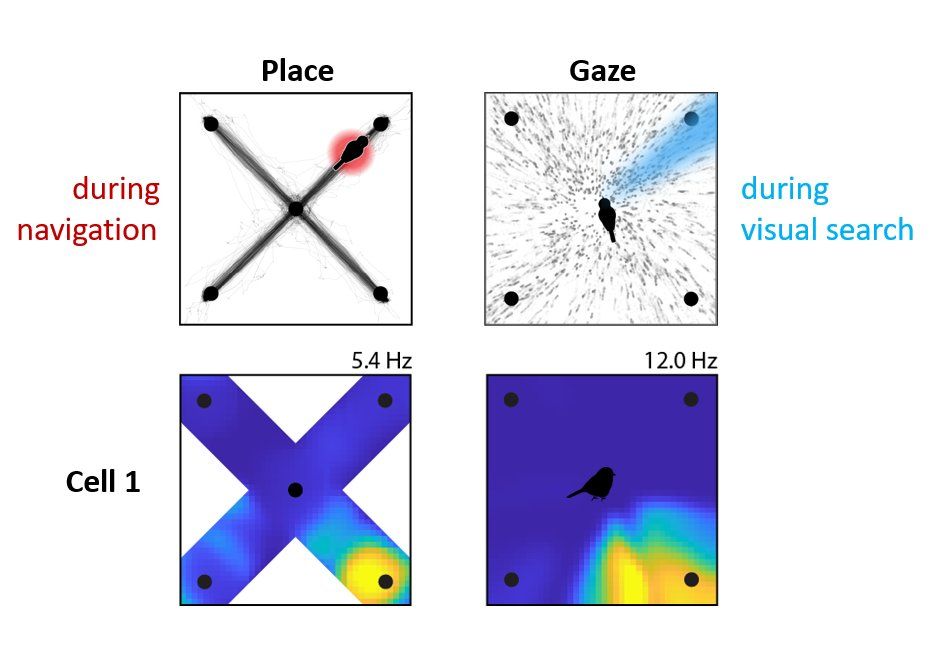

My latest Aronov lab paper is now published @Nature!

When a chickadee looks at a distant location, the same place cells activate as if it were actually there 👁️

The hippocampus encodes where the bird is looking, AND what it expects to see next -- enabling spatial reasoning from afar

bit.ly/3HvWSum

11.06.2025 22:24 — 👍 271 🔁 86 💬 10 📌 5

It occurred to me last night that microwaves are kinda like LLMs.

Remember when they first came out, people bought microwave cookbooks, and special vented plastic cookware, and they were going to change the way we cooked and ate forever?

Now we use them for defrosting mince, and reheating cold tea.

08.05.2025 08:39 — 👍 187 🔁 33 💬 11 📌 6

We’re excited about this project! We present a model of motor savings without the need for context.

02.04.2025 13:37 — 👍 13 🔁 5 💬 0 📌 0

Kilosort4 detects a LOT of neurons, I recorded 15k neurons in one year 🤯 Traditionally, one would curate these detected units to see if they are well isolated single neurons. This is not feasible anymore, so today let's look at three options that are out there to automate this process! 🤖👇

27.03.2025 10:38 — 👍 41 🔁 12 💬 2 📌 1

Technical Associate I, Kanwisher Lab

MIT - Technical Associate I, Kanwisher Lab - Cambridge MA 02139

I’m hiring a full-time lab tech for two years starting May/June. Strong coding skills required, ML a plus. Our research on the human brain uses fMRI, ANNs, intracranial recording, and behavior. A great stepping stone to grad school. Apply here:

careers.peopleclick.com/careerscp/cl...


26.03.2025 15:09 — 👍 64 🔁 48 💬 5 📌 3

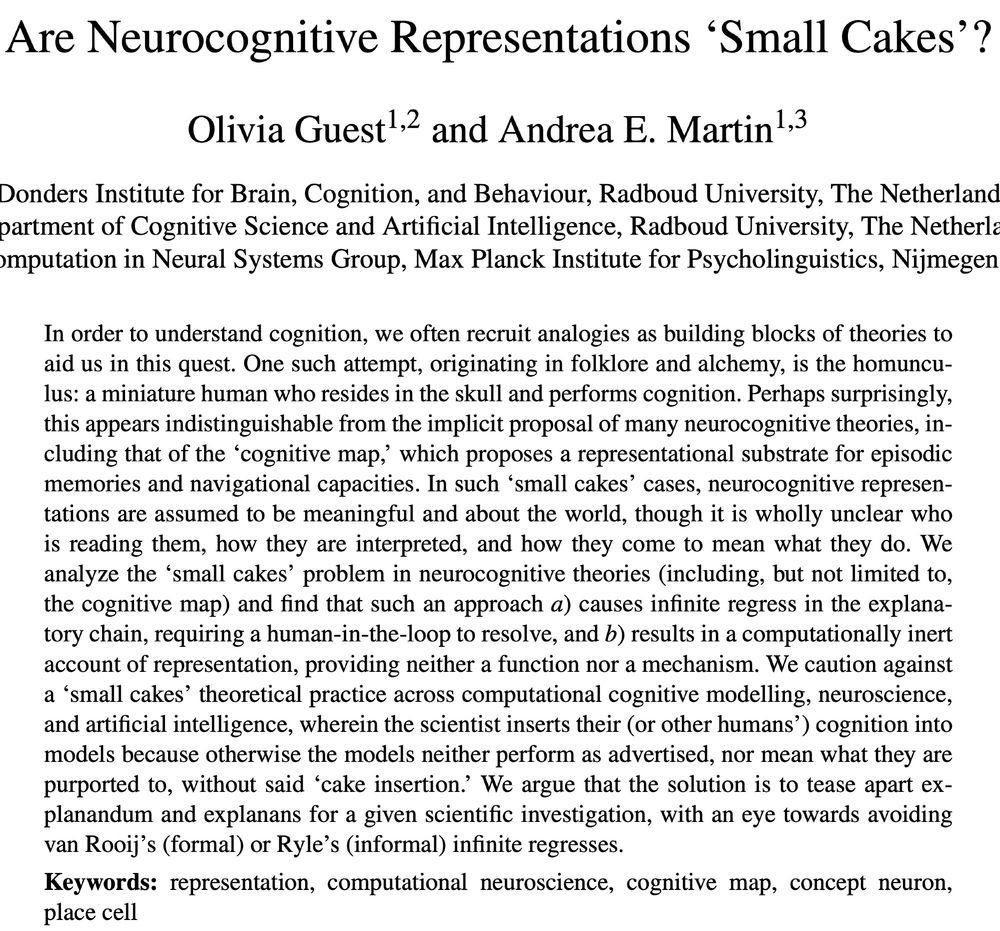

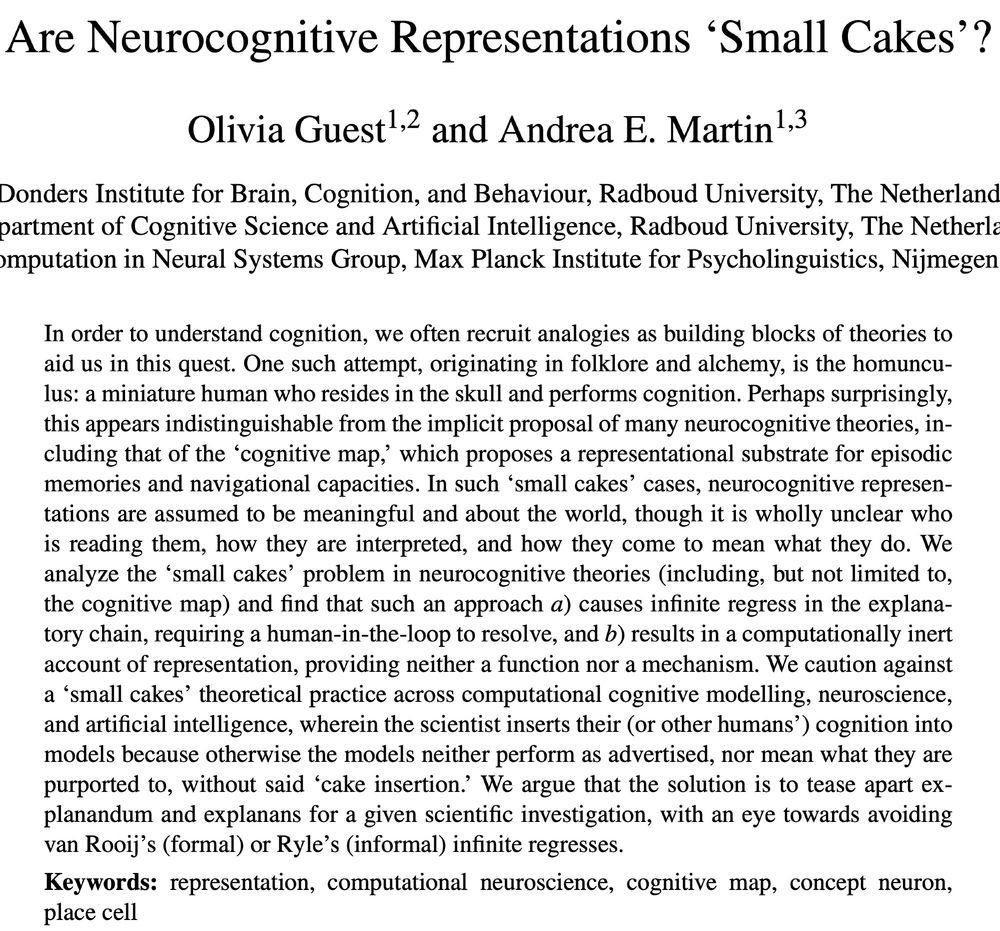

In contrast to the widespread applause that this piece seems to be getting, I disagree with a lot of what is said here.

1/N

08.03.2025 11:41 — 👍 23 🔁 9 💬 3 📌 3

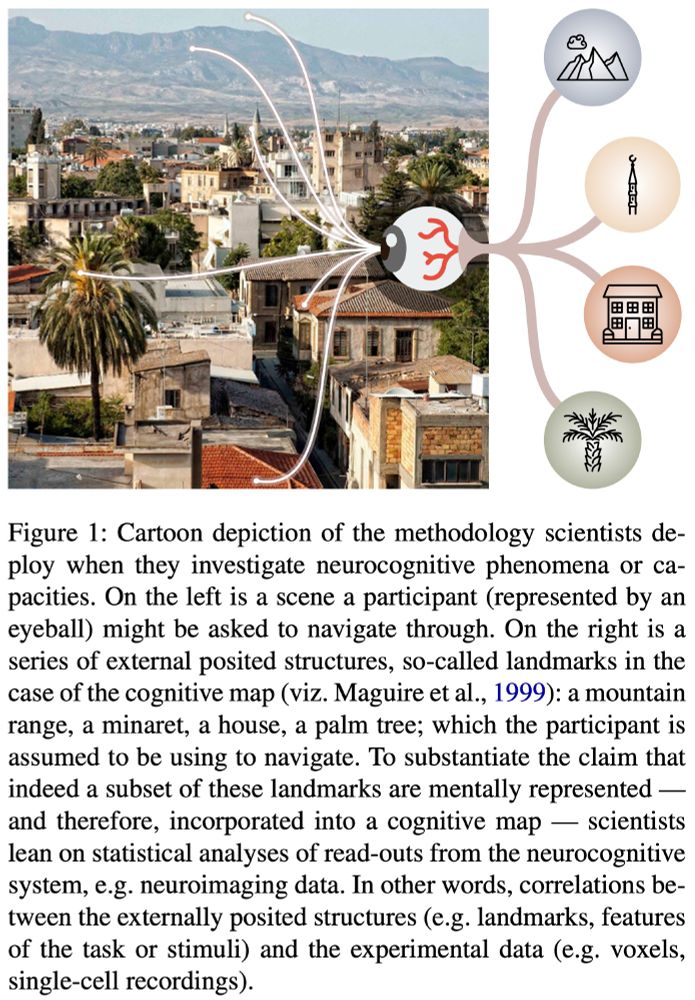

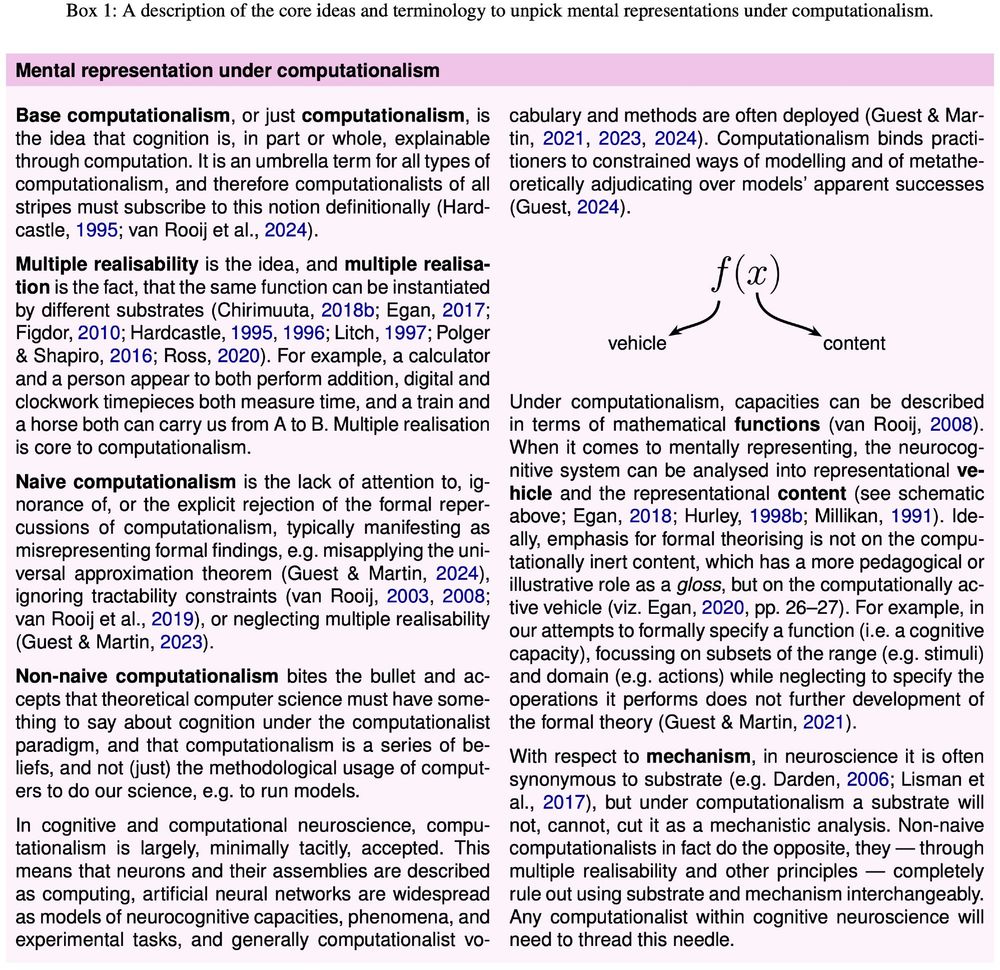

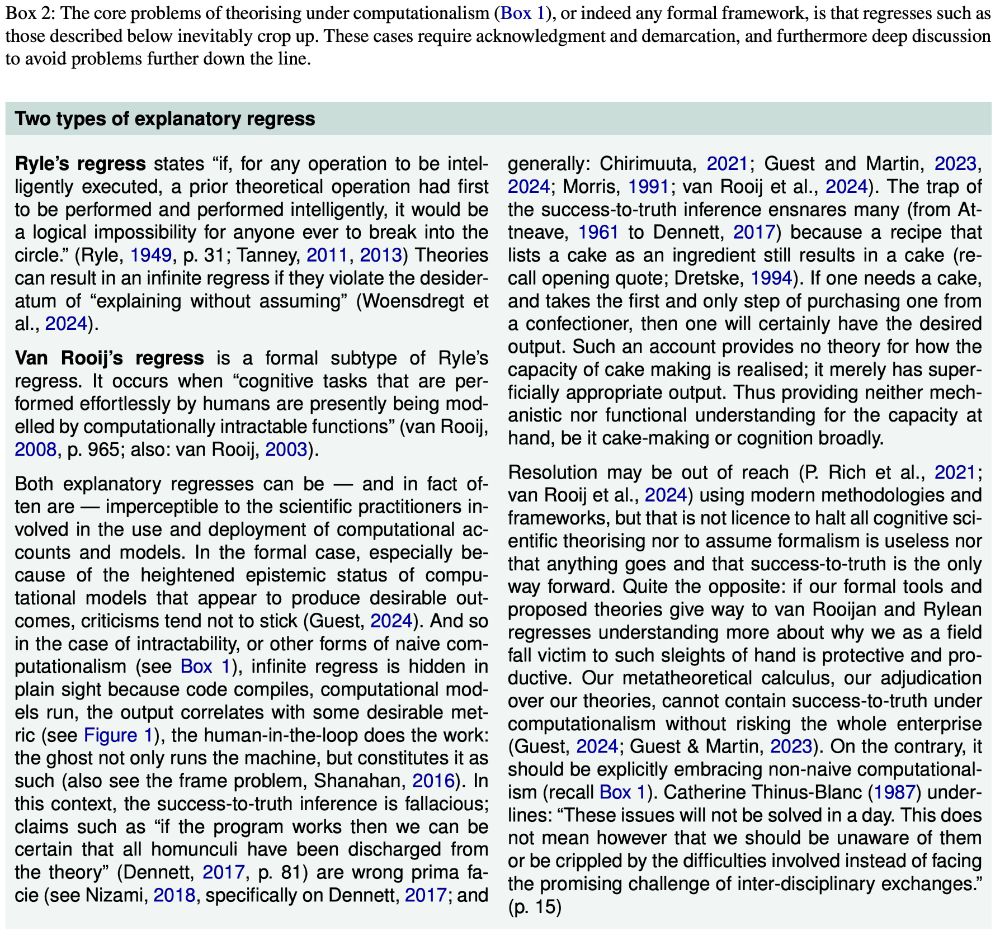

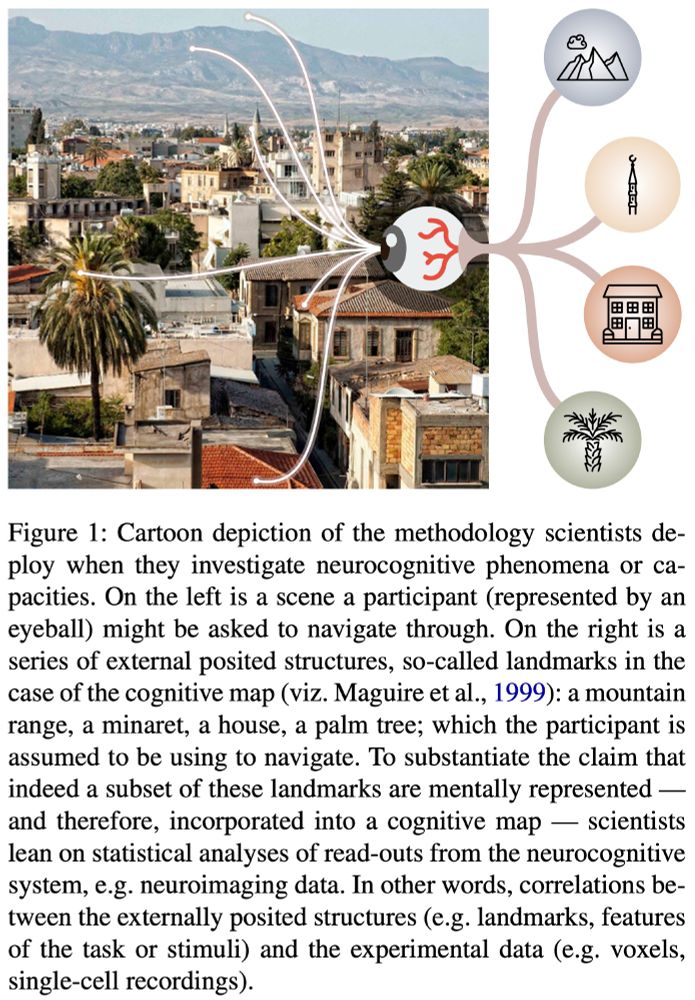

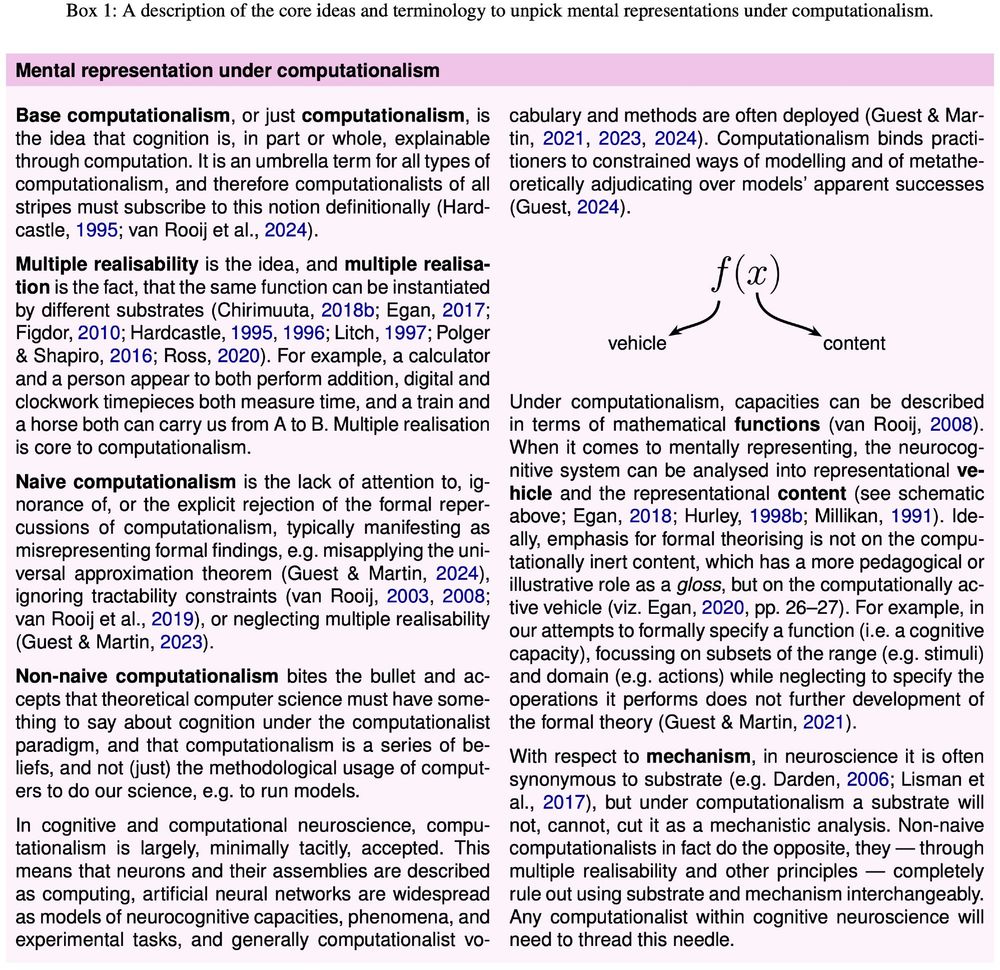

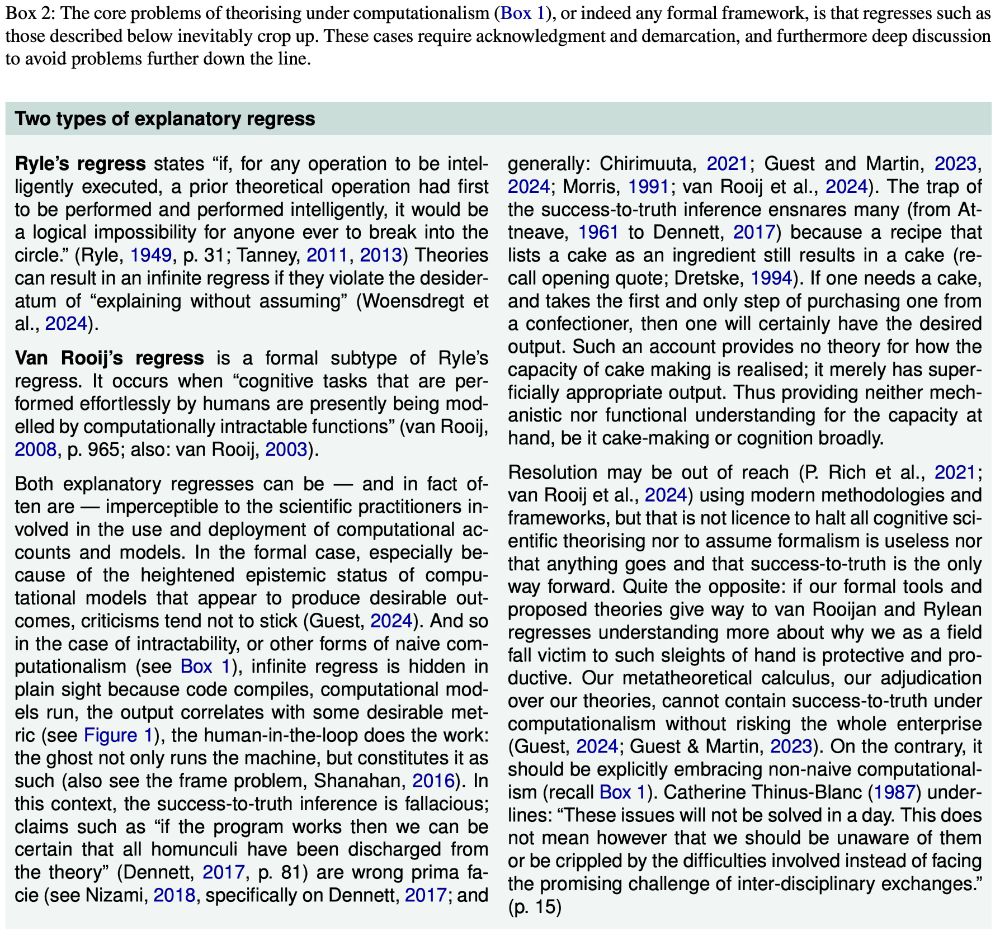

In order to understand cognition, we often recruit analogies as building blocks of theories to aid us in this quest. One such attempt, originating in folklore and alchemy, is the homunculus: a miniature human who resides in the skull and performs cognition. Perhaps surprisingly, this appears indistinguishable from the implicit proposal of many neurocognitive theories, including that of the 'cognitive map,' which proposes a representational substrate for episodic memories and navigational capacities. In such 'small cakes' cases, neurocognitive representations are assumed to be meaningful and about the world, though it is wholly unclear who is reading them, how they are interpreted, and how they come to mean what they do. We analyze the 'small cakes' problem in neurocognitive theories (including, but not limited to, the cognitive map) and find that such an approach a) causes infinite regress in the explanatory chain, requiring a human-in-the-loop to resolve, and b) results in a computationally inert account of representation, providing neither a function nor a mechanism. We caution against a 'small cakes' theoretical practice across computational cognitive modelling, neuroscience, and artificial intelligence, wherein the scientist inserts their (or other humans') cognition into models because otherwise the models neither perform as advertised, nor mean what they are purported to, without said 'cake insertion.' We argue that the solution is to tease apart explanandum and explanans for a given scientific investigation, with an eye towards avoiding van Rooij's (formal) or Ryle's (informal) infinite regresses.

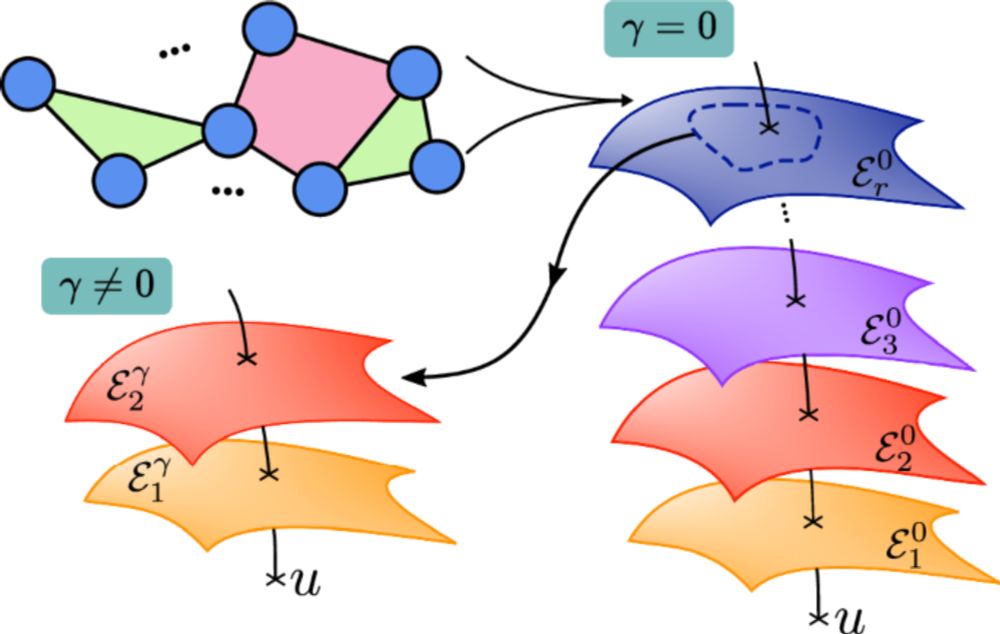

Figure 1 in https://philsci-archive.pitt.edu/24834/

Box 1 in https://philsci-archive.pitt.edu/24834/

Box 2 in https://philsci-archive.pitt.edu/24834/

Tired but happy to say this is out w @andreaeyleen.bsky.social: Are Neurocognitive Representations 'Small Cakes'? philsci-archive.pitt.edu/24834/

We analyse cog neuro theories showing how vicious regress, e.g. the homunculus fallacy, is (sadly) alive and well — and importantly how to avoid it. 1/

01.03.2025 14:16 — 👍 238 🔁 74 💬 24 📌 19

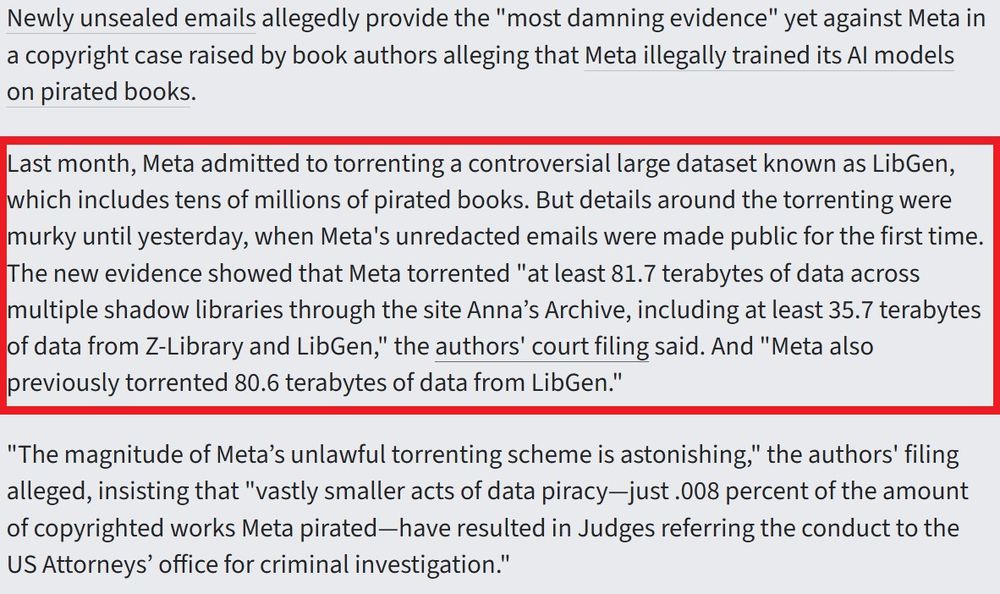

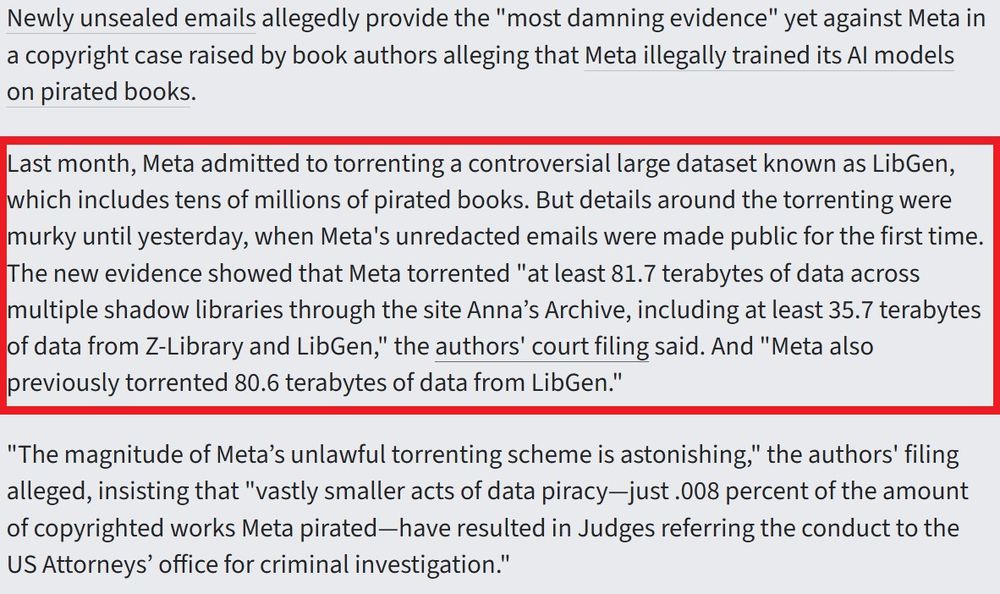

“Torrenting from a corporate laptop doesn’t feel right”: Meta emails unsealed

A photo of Aaron Swartz (1986-2013) when he was 19.

Last month, Meta admitted to torrenting a controversial large dataset known as LibGen, which includes tens of millions of pirated books. But details around the torrenting were murky until yesterday, when Meta's unredacted emails were made public for the first time. The new evidence showed that Meta torrented "at least 81.7 terabytes of data across multiple shadow libraries through the site Anna’s Archive, including at least 35.7 terabytes of data from Z-Library and LibGen," the authors' court filing said. And "Meta also previously torrented 80.6 terabytes of data from LibGen."

Meta illegally downloaded 80+ terabytes of books from LibGen, Anna's Archive, and Z-library to train their AI models.

In 2010, Aaron Swartz downloaded only 70 GB of articles from JSTOR (0.0875% of Meta's total). He faced $1 million in fines and 35 years in jail. He took his own life in 2013.

07.02.2025 16:45 — 👍 7580 🔁 4074 💬 51 📌 171

Wow! I speculated a while back that OpenAI might be scanning API logs to get the hold-out questions on this dataset but now it seems the whole thing was secretly funded by OpenAI who had privileged access to the data.

Apparently I wasn't being cynical enough.

20.01.2025 01:05 — 👍 15 🔁 3 💬 1 📌 0

Neural signatures of model-based and model-free reinforcement learning across prefrontal cortex and striatum https://www.biorxiv.org/content/10.1101/2025.01.11.632388v1

12.01.2025 10:15 — 👍 19 🔁 10 💬 0 📌 1

Assistant Professor at University of New Hampshire | studying how kids and adults explore, explain, and learn | she/her 🏳️🌈 | emilyliquin.com

science. https://briandepasquale.github.io

PhD Student, Boston University Brain Behavior Cognition | he/they 🏳️🌈

Human Curiosity, Exploration, & Information Seeking: Why do we seek out knowledge and when do we avoid it?

Formerly @MGHPsychiatry & @UMassLowell

https://www.psyc.dev

Theoretical neuroscientist interested in brain-body interactions and evolution of adaptive behavior. Associate Professor at Scripps Research Institute in San Diego.

Mathematician at UCLA. My primary social media account is https://mathstodon.xyz/@tao . I also have a blog at https://terrytao.wordpress.com/ and a home page at https://www.math.ucla.edu/~tao/

Math Assoc. Prof. (On leave, Aix-Marseille, France)

Teaching Project (non-profit): https://highcolle.com/

PhD in computational neuroscience; Postdoc at MIT; working with @evfedorenko.bsky.social

eghbalhosseini.github.io

Neuroscientist

post-doc @zuckermanbrain.bsky.social @ Aronov lab 🪶

previously: phd @harvardmed.bsky.social with Nao Uchida

Peruvian & alfajor lover

Redleaf Endowed Director, Masonic Institute for the Developing Brain (MIDB)

Professor, University of Minnesota

Co-Founder Turing Medical

MacArthur Fellow

Neuroscience, Brain Imaging, Mental Health, Data Science

Neurons, networks, and self-organization.

Complex systems and computational neuroscience at the Basque Center for Applied Mathematics

Assistant prof at UIUC interested in memory and how neural representations evolve over time.

https://climerlab.org

Neuroscientist, in theory. Studying sleep and navigation in 🧠s and 💻s.

Assistant Professor at Yale Neuroscience, Wu Tsai Institute.

An emergent property of a few billion neurons, their interactions with each other and the world over ~1 century.

Assistant Professor in Moral Philosophy at @uib.cat. Moral psychology (moral emotions, moral motivation) and applied ethics (mental health, ethics of emotion).

Podcasts: https://beacons.ai/podcast_episodis

Max Planck group leader at ESI Frankfurt | human cognition, fMRI, MEG, computation | sciences with the coolest (phd) students et al. | she/her | looking for postdocs for 2026

Control Systems Engineer. Visiting fellow affiliated with NIMH.

Assemblymember. Democratic Nominee for Mayor of NYC. Running to freeze the rent, make buses fast + free, and deliver universal childcare. Democratic Socialist. zohranfornyc.com

Postdoc at UPenn | Incoming Assistant Prof at Brown Engineering, 2026 | Research at the intersection of neuroscience, technology, and data science

Neuroscientist, both computational and experimental. Also, parent of a teenager :) . All posts and opinions are in my personal capacity.

professional website: https://brodylab.org

Computational cognitive scientist at NYU. Founder of Growing up in Science.

Postdoc at MIT in the jazayeri lab. I study how cerebello-thalamocortical interactions support non-motor function.

gabrielstine.com