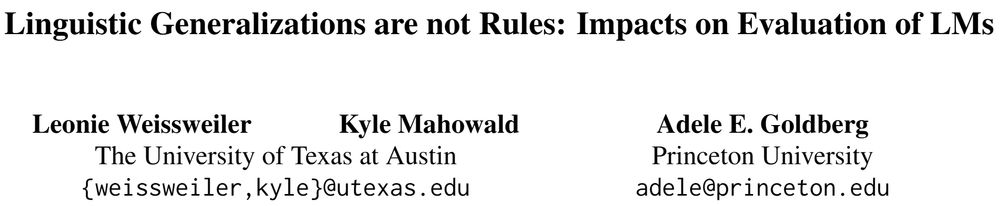

✨New paper✨

Linguistic evaluations of LLMs often implicitly assume that language is generated by symbolic rules.

In a new position paper, @adelegoldberg.bsky.social, @kmahowald.bsky.social and I argue that languages are not Lego sets, and evaluations should reflect this!

arxiv.org/pdf/2502.13195

20.02.2025 15:06 — 👍 69 🔁 20 💬 1 📌 3

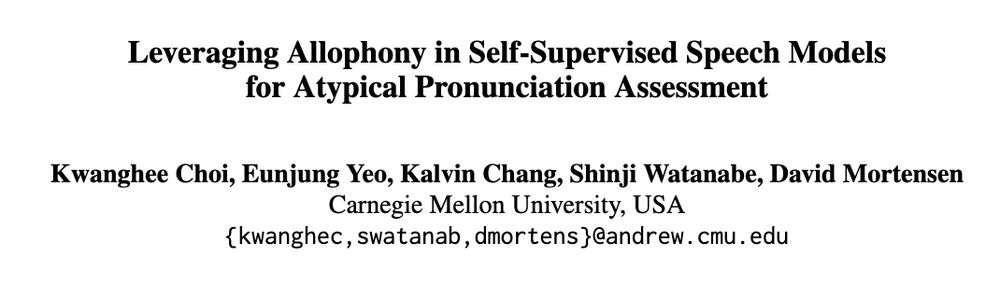

Can self-supervised models 🤖 understand allophony 🗣? Excited to share my new #NAACL2025 paper: Leveraging Allophony in Self-Supervised Speech Models for Atypical Pronunciation Assessment arxiv.org/abs/2502.07029 (1/n)

29.04.2025 17:00 — 👍 15 🔁 10 💬 2 📌 0

interspeech2025.org satellite Young Female* Researchers in Speech Workshop (YFRSW)

sites.google.com/view/yfrsw-2025/

📢 Calling future speech science researchers! 🎙️✨

YFRSW 2025 is for Bachelor’s & Master’s students from marginalized genders exploring research careers in speech science & tech

🚀 Mentorship, networking & career insights!

📅 Details: sites.google.com/view/yfrsw-2...

#Interspeech2025

04.03.2025 10:24 — 👍 3 🔁 1 💬 0 📌 0

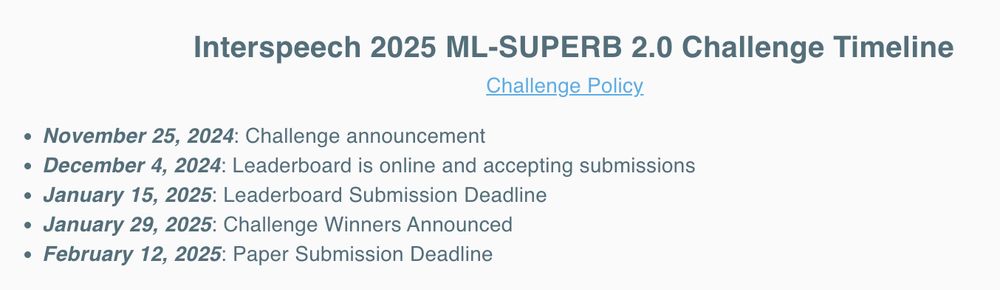

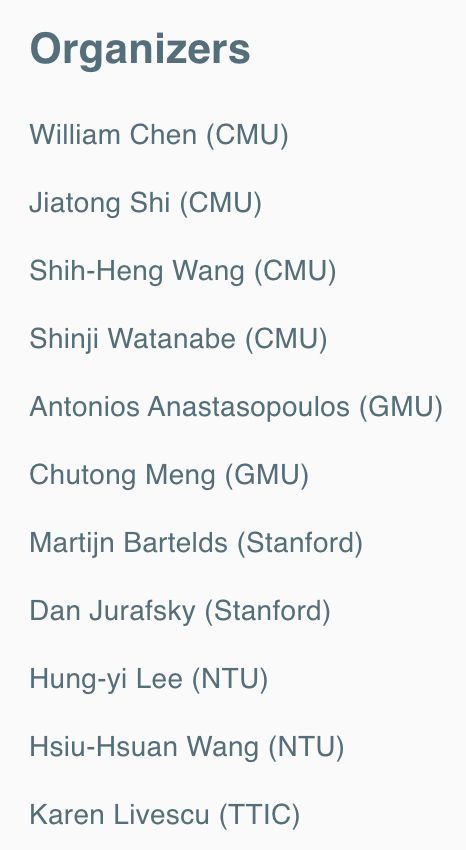

📣 #SpeechTech & #SpeechScience people

We are organizing a special session at #Interspeech2025 on: Interpretability in Audio & Speech Technology

Check out the special session website: sites.google.com/view/intersp...

Paper submission deadline 📆 12 February 2025

06.12.2024 21:29 — 👍 16 🔁 9 💬 1 📌 1

Could I be added too, please? Thank you!! @eyeo1.bsky.social

04.12.2024 19:49 — 👍 1 🔁 0 💬 0 📌 0

I've started putting together a starter pack with people working on Speech Technology and Speech Science: go.bsky.app/BQ7mbkA

(Self-)nominations welcome!

19.11.2024 11:13 — 👍 82 🔁 34 💬 44 📌 3

The Parkinson's Foundation knows that a research breakthrough can happen at any time & believes in educating our PD community with the latest research updates.

https://www.parkinson.org/advancing-research

Phonologist | asst. prof @ University of Southern California Linguistics | previously: postdoc @ MIT Brain & Cognitive Sciences, PhD @ UCLA Linguistics | theory 🔁 experiments 🔁 (Bayesian) models | 🌈 he | cbreiss.com

Postdoc at Uppsala University Computational Linguistics with Joakim Nivre

PhD from LMU Munich, prev. UT Austin, Princeton, @ltiatcmu.bsky.social, Cambridge

computational linguistics, construction grammar, morphosyntax

leonieweissweiler.github.io

NLP Researcher

Language documentation | Morphosyntax | ASR | MT

Discover more from ASHA's high-impact research journals: AJA, AJSLP, JSLHR, and LSHSS, all continuously published on the ASHAWire platform: pubs.asha.org

#bskySPEECHIES #SLPeeps #AUDPeeps

Lecturer at the University of Edinburgh. Member of Centre of Speech Technology Research (CSTR).

Welcome to the College of Health Solutions — where our mission is to improve health for everyone. We translate health research and discovery into practical solutions and prepare our students to address society's greatest health challenges. 🔗 chs.asu.edu

Postdoc w/ Carol Espy-Wilson @UMD Electrical and Computer Engineering; PhD CCC-SLP conducting clinical trials at Syracuse University. Developer of efficacious, theory-driven clinical AI speech tech.

Speech processing and clinical speech AI - Interested in children's speech disorder, neurodegenerative disease, respiratory disease, physical wellness, etc.

Postdoc @ Arizona State University

Ph.D., B.Eng. @ The Chinese University of Hong Kong

The School of Computer Science at Carnegie Mellon University is one of the world's premier institutions for CS and robotics research and education. We build useful stuff that works!

Music and artificial intelligence.

Researcher at Stability AI.

Musician at BRNRT Collective.

Previously at Dolby and Universitat Pompeu Fabra.

artintech.substack.com

www.jordipons.me

audio & ml research at antarestech.com. previously audioshake.ai, gracenote.com. also interested in photography, bicycles, and beer.

AI + Speech @ Nvidia. PhD @ AGH-UST, ex-JHU. My interests: speech processing technologies; ML/AI software engineering. Building OSS for Speech AI.

Principal Scientist (Director) at Google DeepMind in Japan. 波瀬小⇒一志中⇒鈴鹿高専⇒名工大 (IBM T.J. Watson Research intern)⇒東芝欧州研究所⇒Google (Speech🇬🇧⇒Brain🇯🇵) ⇒Google DeepMind. 3rd generation Korean in Japan.

Researcher@Meta Reality Labs, working on generative models, speech enhancement, speech recognition, TTS, etc.

https://nateanl.github.io/

PhD candidate at Tilburg University, doing research on interpretability for text and speech.

https://hmohebbi.github.io/

Working on multimodal LLMs in André Martins' group @ Instituto Superior Técnico

https://anilkeshwani.github.io/

Functional programming enjoyer.