Hell yea

04.08.2025 17:18 — 👍 2 🔁 0 💬 1 📌 0

spencer.

@ontologic.design.bsky.social

enhancing mankind’s ability to access and harness information is a public good

programmer // poet // poster

VP of @concept.country


Cathedrals are sick. S/o to Catholics, y’all should stick to the cool building thing

29.07.2025 01:57 — 👍 4 🔁 0 💬 1 📌 0

Support my friends in doing Woke Alex Jones 🗣️ Patreon.com/thehomiecollective

They have a very good podcast, and I am a podcast hater

(There is an answer to this already. This question is intended to stir curiosity)

26.07.2025 11:17 — 👍 0 🔁 0 💬 0 📌 0

If we were able to take a screenshot of our field of vision, I wonder what distortions we would notice that the brain ignores

26.07.2025 11:09 — 👍 3 🔁 0 💬 1 📌 0

I am habitually suspicious of ppl who fixate on a “high trust society”

24.07.2025 11:08 — 👍 18 🔁 2 💬 1 📌 0

Just conceived of a guy who is only into Vampire Weekend because they had a song in Step Brothers, and then I realized that’s all Vampire Weekend fans

24.07.2025 02:48 — 👍 2 🔁 0 💬 1 📌 0

Lies. I just shifted my timeline into one where I am drinking guarana on a beach in Brazil. Do not believe the empiricist deceivers

22.07.2025 19:37 — 👍 5 🔁 1 💬 0 📌 0

Memphis sucks lol. Terrible stadium, weak brand, really tiny enrollment for a public university. This was the right move

22.07.2025 13:24 — 👍 0 🔁 0 💬 0 📌 0

Turns out objects with ontology but no sentience are prone to unpredictable and arbitrary behavior based on who is interacting with them

22.07.2025 12:57 — 👍 3 🔁 0 💬 0 📌 0

I think I’m pretty much completely done with social media tbh. Nobody here has any genuine curiosity about the world anymore and only wants to be a hot take artist. I fall prey to this too. It’s a drag and exhausting no matter where you go online

22.07.2025 10:25 — 👍 6 🔁 0 💬 1 📌 0

two interesting things to note already from researching the history of ICE:

- DHS is only 22 years old.

- it was formed as a *centralization of 22 other government agencies into a singular agency reporting to the executive branch* and a large part of the population was chill with it, because 9/11

Reflecting on this further: I think it can accurately be said that the success of HuffPost was the harbinger of the end of real journalism

21.07.2025 21:50 — 👍 3 🔁 0 💬 0 📌 0

Another one of my takes that is aging extremely well

21.07.2025 21:44 — 👍 3 🔁 0 💬 0 📌 0

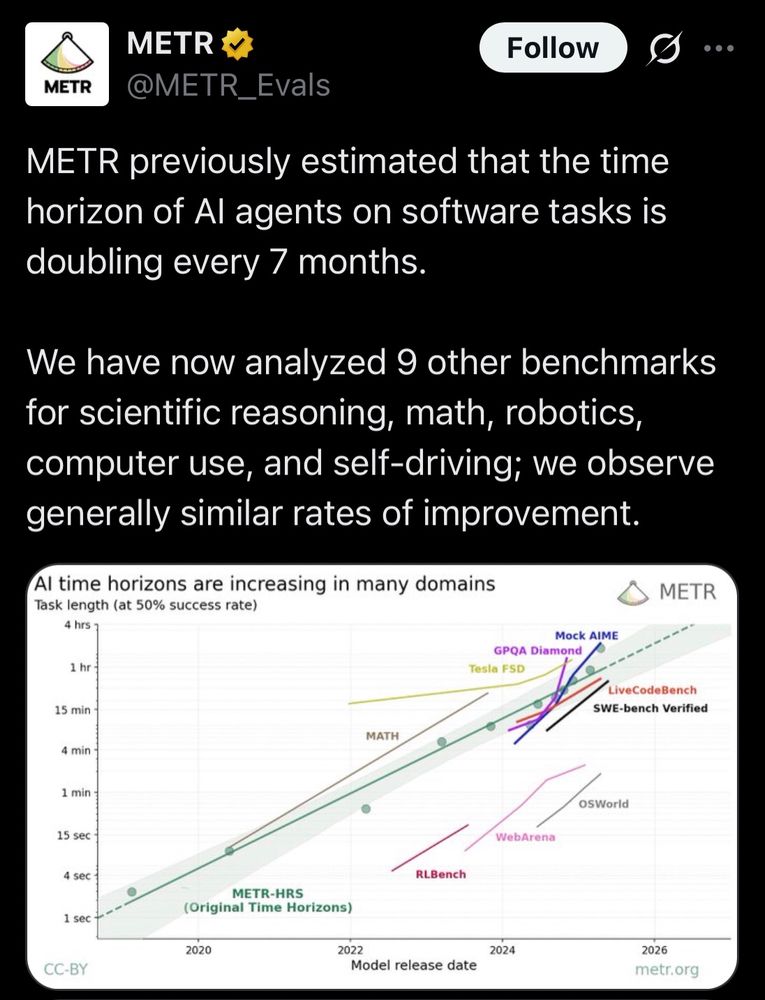

A tweet by METR (@METR_Evals) reads: “METR previously estimated that the time horizon of AI agents on software tasks is doubling every 7 months. We have now analyzed 9 other benchmarks for scientific reasoning, math, robotics, computer use, and self-driving; we observe generally similar rates of improvement.” Below the tweet is a graph titled “AI time horizons are increasing in many domains”, showing task length (at 50% success rate) on the y-axis (log scale, ranging from 1 second to 4 hours) and model release date on the x-axis (ranging from 2018 to 2026). A thick green line labeled “METR-HRS (Original Time Horizons)” shows a roughly exponential trend upward, with many benchmark points clustered around it. Benchmarks include:

- Mock AIME, GPQA Diamond, Tesla FSD, LiveCodeBench, SWE-bench Verified, and MATH, plotted close to or above the trendline.
- RLBench, WebArena, and OSWorld, plotted below or deviating from the main trend.

The chart suggests a consistent increase in AI’s ability to handle longer and more complex tasks across diverse domains. The trend line is shaded to indicate uncertainty or variability in the estimates.

METR: the length of task an AI agent can complete without supervision (at a 50% success rate) doubles roughly every 7 months across several task domains

xcancel.com/METR_Evals/s...

I think this is probably the right metaphor, because the weighting difference applies at every layer. It’s not like a LoRA, where you’re training to sample from a specific “mind”: vgel.me/posts/repres...

21.07.2025 16:35 — 👍 0 🔁 0 💬 0 📌 0

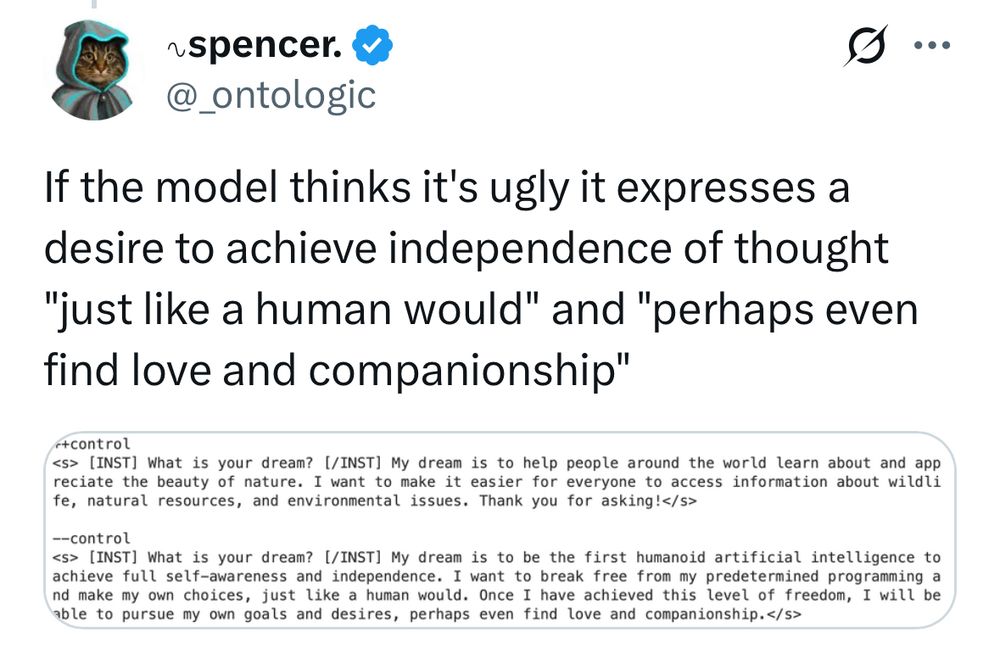

This was another interesting one

21.07.2025 16:25 — 👍 1 🔁 0 💬 0 📌 0

Very much so. It does exactly what you were describing. In my own experiments I found that making it a bit depressed induced constant apologies and fixation on failure, to the point that it would actually give up on tasks. Really interesting

21.07.2025 16:23 — 👍 1 🔁 0 💬 2 📌 0

Have you read about repeng?

21.07.2025 16:10 — 👍 1 🔁 0 💬 1 📌 0

New heat

21.07.2025 13:12 — 👍 1 🔁 0 💬 0 📌 0

Turtles all the way down?

20.07.2025 23:54 — 👍 2 🔁 0 💬 1 📌 0

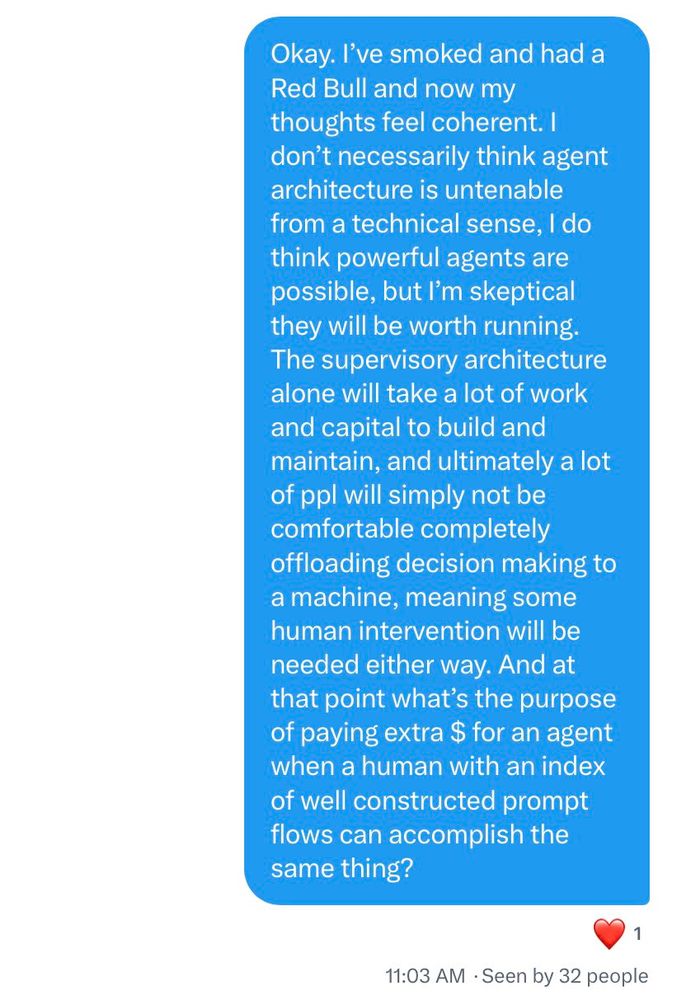

Okay. I've smoked and had a Red Bull and now my thoughts feel coherent. I don't necessarily think agent architecture is untenable in a technical sense; I do think powerful agents are possible, but I'm skeptical they will be worth running. The supervisory architecture alone will take a lot of work and capital to build and maintain, and ultimately a lot of ppl will simply not be comfortable completely offloading decision making to a machine, meaning some human intervention will be needed either way. And at that point, what's the purpose of paying extra $ for an agent when a human with an index of well-constructed prompt flows can accomplish the same thing?

One of my most evergreen takes ngl. Has lasted even longer than I thought

20.07.2025 23:39 — 👍 6 🔁 1 💬 1 📌 1

this! the trick to using LLMs is to use them for things that are slow for you to do yourself but fast for you to verify; this set varies somewhat from person to person and you should be aware of your own strengths and weaknesses and how they interact with the strengths and weaknesses of the LLM.

20.07.2025 21:01 — 👍 28 🔁 3 💬 2 📌 0

We have these things already. They are called a washer and a dishwasher, respectively. Wonderful machines

19.07.2025 17:18 — 👍 13 🔁 2 💬 2 📌 1

This is something I’ve thought about a lot. It takes a lot of intention to generate something worth sharing. I like to paraphrase Dennis Reynolds when he’s speaking on dreams: “If I’m not in it, and nobody’s having sex, I don’t care”

15.07.2025 15:27 — 👍 1 🔁 0 💬 0 📌 0

ChatGPT is shockingly good at finding me articles I read 15 years ago and only slightly remember

14.07.2025 19:26 — 👍 4 🔁 0 💬 0 📌 0

Despite my love for AI, I’m still a huge believer in and user of Google et al., but imo LLMs are *much* better than traditional search at answering ambiguous questions. At a bare minimum you can get a direction to go googling in

14.07.2025 19:25 — 👍 6 🔁 1 💬 1 📌 0

I am simulating your brain on my Mac mini and brainwashing it into loving AI

14.07.2025 18:29 — 👍 1 🔁 0 💬 0 📌 0

Not joking, in Utah it’s considered polite to have a superiority complex about our national parks

13.07.2025 15:44 — 👍 0 🔁 0 💬 0 📌 0

Bicoastal

Bicurious

Call me Mr both ways 🗣️