🚨New doctor in the house!🚨

Congrats to @timdarcet.bsky.social for his tremendous work (DINOv2, registers, CAPI) & successful PhD defense followed by ~2 hrs of questions -- he's got stamina!

Congrats to his incredible team of advisors from Inria & Meta: @jmairal.bsky.social, P. Bojanowski, M. Oquab

02.07.2025 22:31 —

👍 30

🔁 3

💬 1

📌 0

Thank you so much @dlarlus.bsky.social @jmairal.bsky.social @michael-arbel.bsky.social for these wonderful three years! It’s been a privilege to learn and grow with you, and I’m deeply grateful for your kindness, your support, and your guidance.

02.07.2025 19:26 —

👍 15

🔁 1

💬 3

📌 0

The PAISS summer school is back with an incredible lineup of speakers (and more to come). Spread the word!

05.05.2025 16:34 —

👍 23

🔁 11

💬 1

📌 3

Get to know @gulvarol.bsky.social, Senior Researcher at École des Ponts ParisTech 🇫🇷 She's an ELLIS Scholar, a member of ELLIS Unit Paris, and a former winner of the ELLIS PhD Award. Her research covers computer vision, vision & language, human motion generation & sign languages.

#WomenInELLIS

12.03.2025 13:41 —

👍 47

🔁 6

💬 1

📌 0

My experiments are run on a 48GB GPU. A 24GB GPU may be sufficient depending on the application. Feel free to reach out if you have any questions or run into any issues, and we can find a way to make it work within your memory constraints.

02.02.2025 11:12 —

👍 2

🔁 0

💬 0

📌 0

Thanks! So far, I have been evaluating on standard datasets for foreground/background segmentation (SPIn-NeRF, NVOS) and open-vocabulary object localization (LERF). The object removal task you introduce in Semantics-Controlled GS could be another interesting application!

02.02.2025 10:30 —

👍 2

🔁 0

💬 1

📌 0

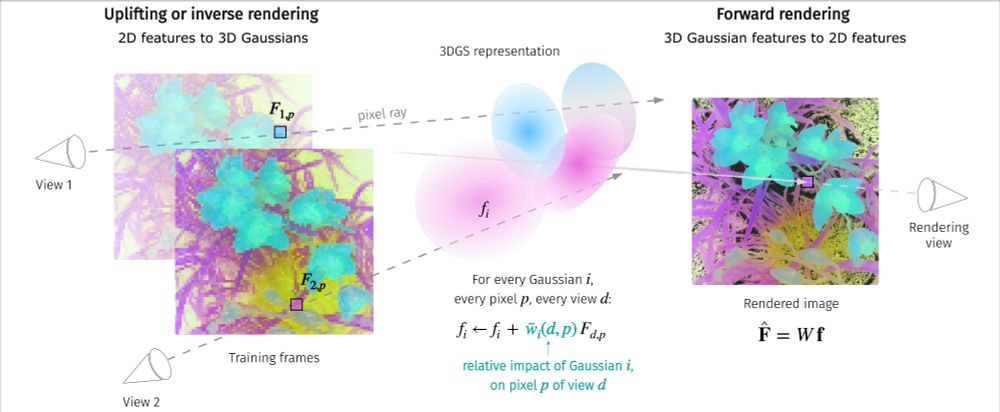

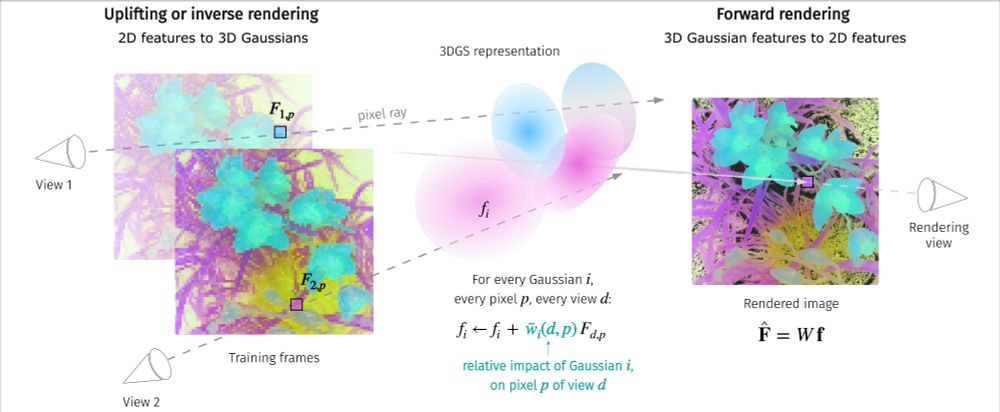

Uplifting is implemented in the forward rendering process, so it is as fast as forward rendering. Experimentally, it takes around 2ms per image per feature dimension. For example, uplifting 100 DINOv2 feature maps of dimension 40 (PCA-reduced) takes about 9s. See Appendix B.1 for more details.
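
As a rough sanity check of the numbers above, here is a back-of-the-envelope estimate in Python. It assumes the cost scales linearly with the number of images and feature dimensions; the function name `estimate_uplifting_seconds` and the default rate are illustrative, taken from the "around 2ms" figure rather than from the paper's code.

```python
def estimate_uplifting_seconds(num_images, feature_dim, ms_per_image_per_dim=2.0):
    """Estimate uplifting time, assuming cost is linear in images and dimensions."""
    total_ms = num_images * feature_dim * ms_per_image_per_dim
    return total_ms / 1000.0


# 100 feature maps of dimension 40 at roughly 2 ms per image per dimension.
estimate = estimate_uplifting_seconds(num_images=100, feature_dim=40)
print(f"Estimated uplifting time: {estimate:.1f} s")
```

At exactly 2 ms this gives 8 s, which is in line with the reported ~9 s since the per-dimension rate is only approximate.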

31.01.2025 16:36 —

👍 2

🔁 0

💬 0

📌 0

(3/3) LUDVIG uses a graph diffusion mechanism to refine 3D features, such as coarse segmentation masks, by leveraging 3D scene geometry and pairwise similarities induced by DINOv2.
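
To give a feel for the idea, below is a generic graph-diffusion sketch in NumPy. It is not the LUDVIG implementation: the k-nearest-neighbor graph on 3D positions, the clipped cosine-similarity edge weights, and the max-based update rule are all assumptions chosen for simplicity, and `graph_diffusion` is a hypothetical name.

```python
import numpy as np


def graph_diffusion(positions, features, initial_mask, k=3, steps=10):
    """Spread a coarse mask over a k-NN graph, gated by feature similarity."""
    positions = np.asarray(positions, dtype=float)
    features = np.asarray(features, dtype=float)

    # Geometry: connect each point to its k nearest neighbors in 3D.
    sq_dist = ((positions[:, None, :] - positions[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(sq_dist, np.inf)  # exclude self-loops
    neighbors = np.argsort(sq_dist, axis=1)[:, :k]  # shape (n, k)

    # Appearance: weight each edge by non-negative cosine similarity.
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-8)
    similarity = np.einsum("nd,nkd->nk", unit, unit[neighbors])
    weights = np.clip(similarity, 0.0, None)
    weights = weights / np.maximum(weights.sum(axis=1, keepdims=True), 1e-8)

    # Diffusion: each point takes the weighted average of its neighbors,
    # while never dropping below its current value (seeds stay active).
    mask = np.asarray(initial_mask, dtype=float)
    for _ in range(steps):
        propagated = (weights * mask[neighbors]).sum(axis=1)
        mask = np.maximum(mask, propagated)
    return mask
```

In this toy version, a seed spreads to nearby points with similar features and stops at points whose features are dissimilar, even when they are spatial neighbors.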

31.01.2025 09:59 —

👍 12

🔁 1

💬 2

📌 0

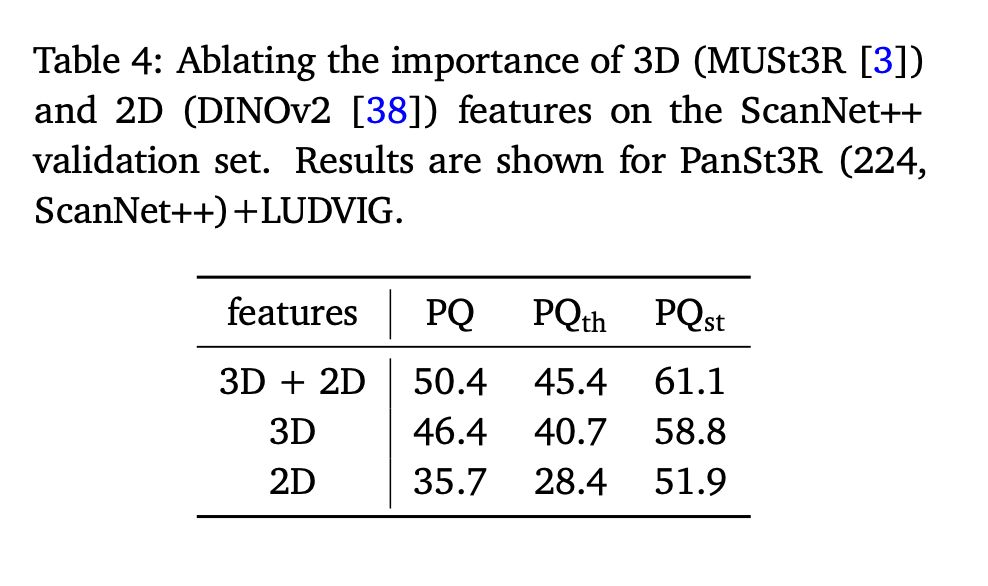

Illustration of the inverse and forward rendering of 2D visual features produced by DINOv2.

(2/3) We propose a simple, parameter-free aggregation mechanism, based on alpha-weighted multi-view blending of 2D pixel features in the forward rendering process.
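
A minimal NumPy sketch of what such an alpha-weighted aggregation could look like, assuming the blending weight of every Gaussian at every pixel is already available as a dense array. The name `uplift_features`, the array layout, and the normalization by total weight are illustrative assumptions, not the LUDVIG code; a real renderer would accumulate these sums inside the rasterizer rather than materialize the weights.

```python
import numpy as np


def uplift_features(weights, features_2d, eps=1e-8):
    """Average 2D pixel features into per-Gaussian 3D features.

    weights:     (views, pixels, gaussians) blending weight of each Gaussian
                 at each pixel, as used when rendering that view.
    features_2d: (views, pixels, dim) 2D feature maps, flattened per view.
    Returns an array of shape (gaussians, dim).
    """
    # Sum pixel features over all views, weighted by each Gaussian's contribution.
    weighted_sum = np.einsum("vpg,vpd->gd", weights, features_2d)
    # Total contribution of each Gaussian across all views and pixels.
    total_weight = weights.sum(axis=(0, 1))
    # Normalize; Gaussians that are never visible end up with a zero feature.
    return weighted_sum / np.maximum(total_weight, eps)[:, None]
```

There are no trainable parameters here: each 3D feature is just a weighted mean of the pixels the Gaussian contributes to.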

31.01.2025 09:59 —

👍 10

🔁 1

💬 1

📌 0

(1/3) Happy to share LUDVIG (Learning-free Uplifting of 2D Visual features to Gaussian Splatting scenes), which uplifts visual features from models such as DINOv2 (left) & CLIP (mid) to 3DGS scenes. Joint work w. @dlarlus.bsky.social @jmairal.bsky.social

Webpage & code: juliettemarrie.github.io/ludvig

31.01.2025 09:59 —

👍 66

🔁 16

💬 1

📌 2