Thanks, Q! 😁

28.07.2025 14:02

Cody Dong

@codydong.bsky.social

Psych PhD student at Princeton University | Computational Memory Lab

Thank you, Anna!!

28.07.2025 01:38

#psychscisky #neuroskyence #compneurosky

26.07.2025 15:05

Thank you to my collaborators Qihong Lu (@qlu.bsky.social), Sebastian Michelmann (@s-michelmann.bsky.social), and my advisor Ken Norman (@ptoncompmemlab.bsky.social). (n/n)

26.07.2025 15:05

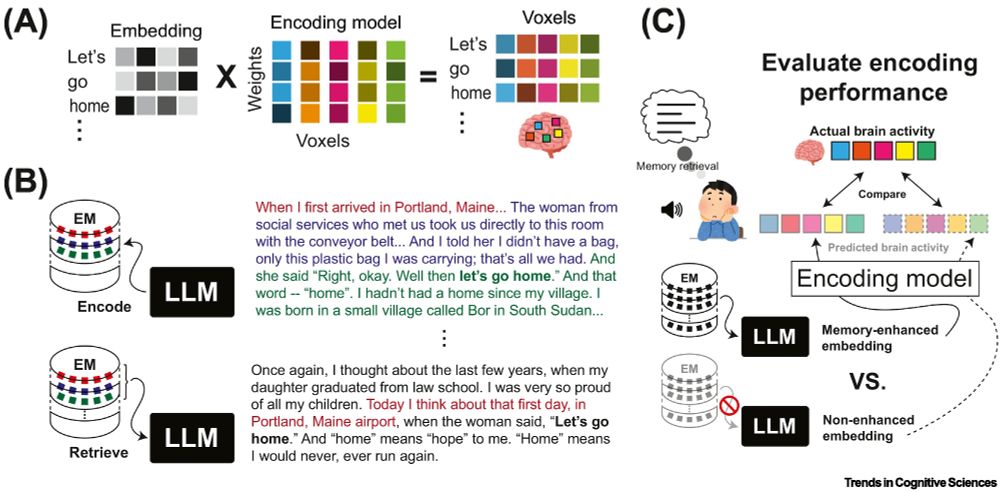

Figure illustrating how MA-LLMs can be tested using encoding models. One way to evaluate the fit of LLMs to brain data is to use an encoding model approach. This approach involves learning a mapping from features derived from an LLM (e.g., the internal representations or embeddings from one of the LLM’s hidden layers) to brain activity (e.g., fMRI voxels; panel A); once learned, this mapping can be used to predict held-out data, and the fit to this held-out data can be compared across encoding models that use different feature sets. This encoding model approach has been used to answer a wide range of cognitive questions, including the extent to which a particular brain region is predicting upcoming stimuli and the size of the temporal window that a given brain region is integrating over, and it can easily be adapted to quantify the benefits of memory augmentation (vs. no memory augmentation). Panel B shows a MA-LLM that stores chunks of text and then retrieves those chunks in response to a later part of the narrative. Predictions of brain activity generated using embeddings from the MA-LLM can be compared to predictions generated using embeddings from a closely matched, non-memory-augmented LLM (panel C); this approach could also be used to address more fine-grained questions (e.g., arbitrating between two distinct memory-augmented models that make different predictions about what information will be retrieved).
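As a rough illustration of the comparison in panels A and C, here is a minimal Python sketch, assuming NumPy, plain closed-form ridge regression, and a single contiguous train/test split. The feature matrices and "voxel" data are synthetic, hypothetical stand-ins (not data, code, or results from the paper), and real fMRI encoding models typically also model hemodynamic delays and choose the ridge penalty by cross-validation.

```python
import numpy as np


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.

    X: (n_timepoints, n_features); Y: (n_timepoints, n_voxels).
    No intercept term, so X and Y are assumed to be roughly zero-mean.
    """
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def voxelwise_pearson(Y_true, Y_pred):
    """Pearson correlation between observed and predicted activity, per voxel (column)."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))
    return (yt * yp).sum(axis=0) / denom


def encoding_score(features, bold, n_train, alpha=1.0):
    """Fit the mapping on the first n_train timepoints, score on the held-out rest."""
    W = fit_ridge(features[:n_train], bold[:n_train], alpha)
    return voxelwise_pearson(bold[n_train:], features[n_train:] @ W)


# Hypothetical stand-ins: "baseline" plays the role of hidden-layer embeddings from a
# non-memory-augmented LLM; the MA-LLM features append embeddings of retrieved chunks.
rng = np.random.default_rng(0)
n_time, n_dim, n_vox = 400, 20, 50
baseline = rng.standard_normal((n_time, n_dim))
retrieved = rng.standard_normal((n_time, n_dim))
ma_llm = np.hstack([baseline, retrieved])

# Simulated "brain" data that, by construction, also depends on the retrieved content.
true_weights = rng.standard_normal((2 * n_dim, n_vox))
bold = ma_llm @ true_weights + rng.standard_normal((n_time, n_vox))

r_baseline = encoding_score(baseline, bold, n_train=300)
r_ma_llm = encoding_score(ma_llm, bold, n_train=300)
print(f"mean held-out r: baseline={r_baseline.mean():.2f}, MA-LLM={r_ma_llm.mean():.2f}")
```

Because the simulated activity was built to depend on the retrieved content, the memory-augmented feature set should fit the held-out timepoints better here; with real data, that difference is the empirical question the comparison is meant to answer.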

In addition to surveying these properties, we also suggest criteria for benchmark tasks to promote alignment with human EM. Finally, we describe potential methods to evaluate predictions from memory-augmented models using neuroimaging techniques. (6/n)

26.07.2025 15:05

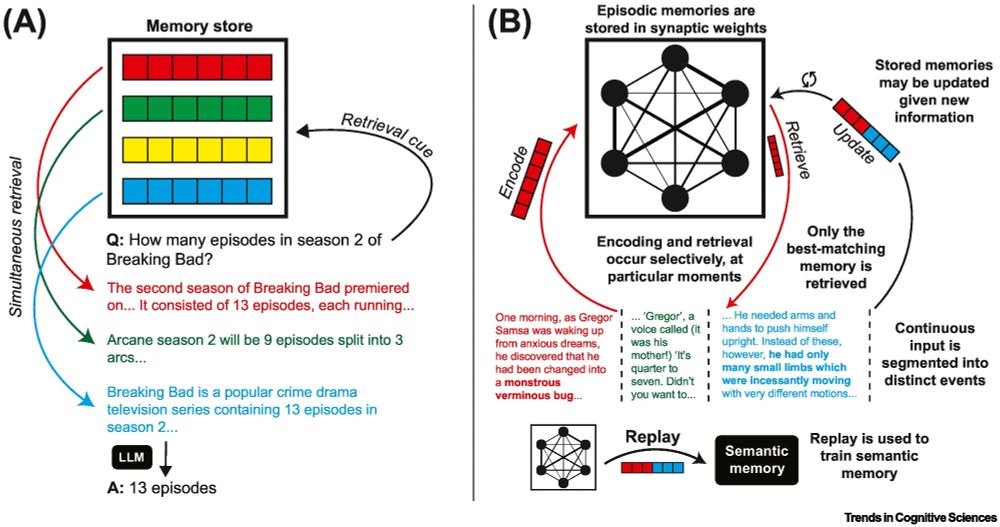

Main figure contrasting episodic memory (EM) in MA-LLMs and humans. (A) Illustration of a common approach to augment LLMs with memory retrieval. Typical aspects of MA-LLMs include memories composed of uniform-length chunks that are not updated after initial storage, and simultaneous retrieval of multiple memories at once. (B) In contrast, human memory is dynamic; episodic memories are constantly being added, forgotten, and updated through nuanced adjustments to synaptic weights, and replay of episodic memories is used to train semantic memory through a process of consolidation. Continuous input is segmented into distinct events, and encoding and retrieval occur selectively: EM retrieval is more likely to occur when there are “gaps” in understanding to fill, and some moments, like boundaries between events, tend to be associated with stronger encoding and retrieval than others. Memory retrieval is competitive, meaning that (most of the time) only the single best-matching memory is retrieved. Another key property, not pictured here, is that EM for the content of events is stored along with a representation of temporal context (what came before the event), which supports temporal contiguity effects in retrieval (i.e., successive recalls tend to come from nearby time points).
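To make the retrieval contrast in panels A and B concrete, here is a minimal Python sketch under simplifying assumptions: memories are hypothetical fixed vectors keyed by chunk name, similarity is cosine similarity, the "typical MA-LLM" path returns the top k chunks at once, and the "human-like" path returns only the single best match. The similarity threshold is a hypothetical stand-in for selective retrieval, not a model of "gaps" in understanding, and none of these functions come from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def retrieve_top_k(query, memory, k=2):
    """Typical MA-LLM style: return the k most similar stored chunks simultaneously."""
    ranked = sorted(memory, key=lambda key: cosine(query, memory[key]), reverse=True)
    return ranked[:k]


def retrieve_competitive(query, memory, threshold=0.8):
    """Competitive (winner-take-all) retrieval: only the single best match can win,
    and only if it clears a hypothetical similarity threshold."""
    best = max(memory, key=lambda key: cosine(query, memory[key]))
    return [best] if cosine(query, memory[best]) >= threshold else []


# Hypothetical 3-d "embeddings" of stored text chunks.
memory = {
    "chunk_a": [1.0, 0.0, 0.0],
    "chunk_b": [0.7, 0.7, 0.0],
    "chunk_c": [0.0, 0.0, 1.0],
}
query = [0.9, 0.1, 0.0]
print(retrieve_top_k(query, memory))                  # -> ['chunk_a', 'chunk_b']
print(retrieve_competitive(query, memory))            # -> ['chunk_a']
print(retrieve_competitive([0.5, 0.5, 0.7], memory))  # -> [] (no match is strong enough)
```

The top-k path always injects k chunks regardless of how well they match, while the competitive path either commits to one memory or retrieves nothing.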

In this review, we compare the properties of MA-LLMs to human episodic memory to identify ways to make MA-LLMs more human-like, such that they will be more effective as cognitive models. Aligning MA-LLMs with useful features of human memory may also help to advance AI. (5/n)

26.07.2025 15:05

Memory-augmented LLMs (MA-LLMs) may help solve this problem. They combine the rich, context-sensitive semantic knowledge in LLM weights with an added memory system that can retrieve unique events, similar to human episodic memory. (4/n)

26.07.2025 15:05

Existing models using small neural nets have shown how memory systems support adaptive behavior (e.g., work by @qlu.bsky.social). But they lack the rich semantic knowledge people have, limiting their ability to make precise predictions about specific real-world situations. (3/n)

26.07.2025 15:05

We’ve made progress in understanding how memory systems support real-world event comprehension. Yet we still lack computational models that generate precise predictions about how episodic memory (EM) will be used when processing naturalistic, high-dimensional stimuli. (2/n)

26.07.2025 15:05

My first first-author paper, comparing the properties of memory-augmented large language models and human episodic memory, out in @cp-trendscognsci.bsky.social!

authors.elsevier.com/a/1lV174sIRv...

Here’s a quick 🧵(1/n)

New preprint! Statistical structure skews object memory toward predictable successors. Model simulations show how this bias can arise from the backward expansion of hippocampal representations.

w/ co-first @codydong.bsky.social, @marlietandoc.bsky.social & @annaschapiro.bsky.social osf.io/yuxb6_v1