![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns

Common analyses of neural representations: Encoding models (relating activity to task features) drawing of an arrow from a trace saying [on_____on____] to a neuron and spike train. Comparing models via neural predictivity: comparing two neural networks by their R^2 to mouse brain activity. RSA: assessing brain-brain or model-brain correspondence using representational dissimilarity matrices](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)

In neuroscience, we often try to understand systems by analyzing their representations — using tools like regression or RSA. But are these analyses biased towards discovering a subset of what a system represents? If you're interested in this question, check out our new commentary! Thread:

05.08.2025 14:36 —

👍 170

🔁 53

💬 5

📌 0

Great new paper by @jessegeerts.bsky.social, looking at a certain type of generalization in transformers -- transitive inference -- and what conditions induce this type of generalization

06.06.2025 15:50 —

👍 1

🔁 0

💬 0

📌 0

New paper: Generalization from context often outperforms generalization from finetuning.

And you might get the best of both worlds by spending extra compute and train time to augment finetuning.

02.05.2025 17:48 —

👍 4

🔁 0

💬 0

📌 0

It was such a pleasure to co-supervise this research, but @aaditya6284.bsky.social should really take the bulk of the credit :)

And thank you so much to all our wonderful collaborators, who made fundamental contributions as well!

Ted Moskovitz, Sara Dragutinovic, Felix Hill, @saxelab.bsky.social

11.03.2025 18:18 —

👍 2

🔁 0

💬 0

📌 0

This paper is dedicated to our collaborator Felix Hill, who passed away recently. This is our last ever paper with him.

It was bittersweet to finish this research, which contains so much of the scientific spark that he shared with us. Rest in peace Felix, and thank you so much for everything.

11.03.2025 18:18 —

👍 3

🔁 0

💬 1

📌 0

Some general takeaways for interp:

11.03.2025 18:18 —

👍 2

🔁 0

💬 1

📌 0

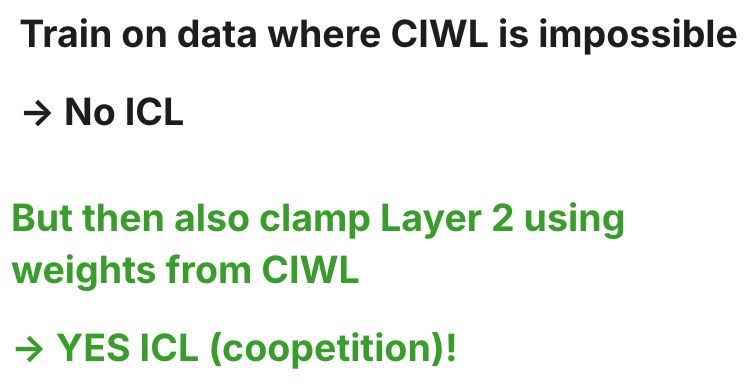

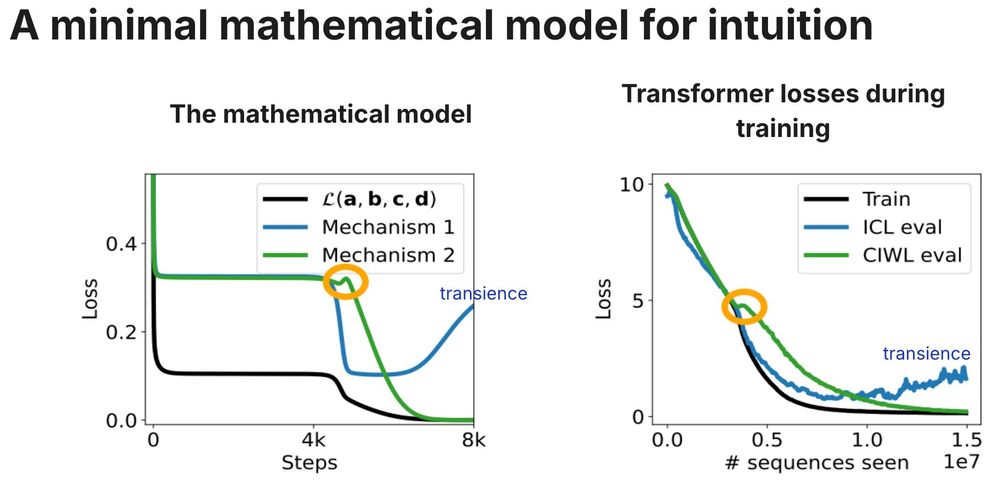

4. We provide intuition for these dynamics through a simple mathematical model.

11.03.2025 18:18 —

👍 2

🔁 0

💬 1

📌 0

3. A lot of previous work (including our own) has emphasized *competition* between in-context and in-weights learning.

But we find that cIWL and ICL actually compete AND cooperate, via shared subcircuits. In fact, ICL cannot emerge if cIWL is blocked from emerging, even though ICL emerges first!

11.03.2025 18:18 —

👍 2

🔁 0

💬 1

📌 0

2. At the end of training, ICL doesn't give way to in-weights learning (IWL), as we previously thought. Instead, the model prefers a surprising strategy that is a *combination* of the two!

We call this combo "cIWL" (context-constrained in-weights learning).

11.03.2025 18:18 —

👍 2

🔁 0

💬 1

📌 0

1. We aimed to better understand the transience of in-context learning (ICL) -- where ICL can emerge but then disappear after long training times.

11.03.2025 18:18 —

👍 2

🔁 0

💬 1

📌 0

Dropping a few high-level takeaways in this thread.

For more details please see Aaditya's thread, or the paper itself.

bsky.app/profile/aadi...

arxiv.org/abs/2503.05631

11.03.2025 18:18 —

👍 3

🔁 0

💬 1

📌 0

New work led by @aaditya6284.bsky.social

"Strategy coopetition explains the emergence and transience of in-context learning in transformers."

We find some surprising things!! E.g. that circuits can simultaneously compete AND cooperate ("coopetition") 😯 🧵👇

11.03.2025 18:18 —

👍 9

🔁 4

💬 1

📌 0

The broader spectrum of in-context learning

The ability of language models to learn a task from a few examples in context has generated substantial interest. Here, we provide a perspective that situates this type of supervised few-shot learning...

What counts as in-context learning (ICL)? Typically, you might think of it as learning a task from a few examples. However, we’ve just written a perspective (arxiv.org/abs/2412.03782) suggesting that a much broader spectrum of behaviors can be interpreted as ICL! Quick summary thread: 1/7

10.12.2024 18:17 —

👍 123

🔁 32

💬 2

📌 1

Introducing the :milkfoamo: emoji

09.12.2024 20:12 —

👍 1

🔁 1

💬 0

📌 0

Hahaha. We need a cappuccino emoji?!

09.12.2024 16:56 —

👍 0

🔁 0

💬 1

📌 0

I'll be not at NeurIPS this week. Let's grab coffee if you want to FOMO-commiserate with me

09.12.2024 02:16 —

👍 12

🔁 0

💬 0

📌 1

Hello hello. Testing testing 123

09.12.2024 00:48 —

👍 9

🔁 0

💬 3

📌 0
