I'm excited for #CogSci2025 in SF this week! I would love to meet more people thinking about language and cognition, especially signed languages! Please feel free to reach out :)

29.07.2025 22:46 — 👍 3 🔁 0 💬 0 📌 0

PragLM @ COLM '25


Happy to announce the first workshop on Pragmatic Reasoning in Language Models — PragLM @ COLM 2025! 🎉

How do LLMs engage in pragmatic reasoning, and what core pragmatic capacities remain beyond their reach?

🌐 sites.google.com/berkeley.edu/praglm/

📅 Submit by June 23rd

28.05.2025 18:21 — 👍 41 🔁 18 💬 1 📌 4

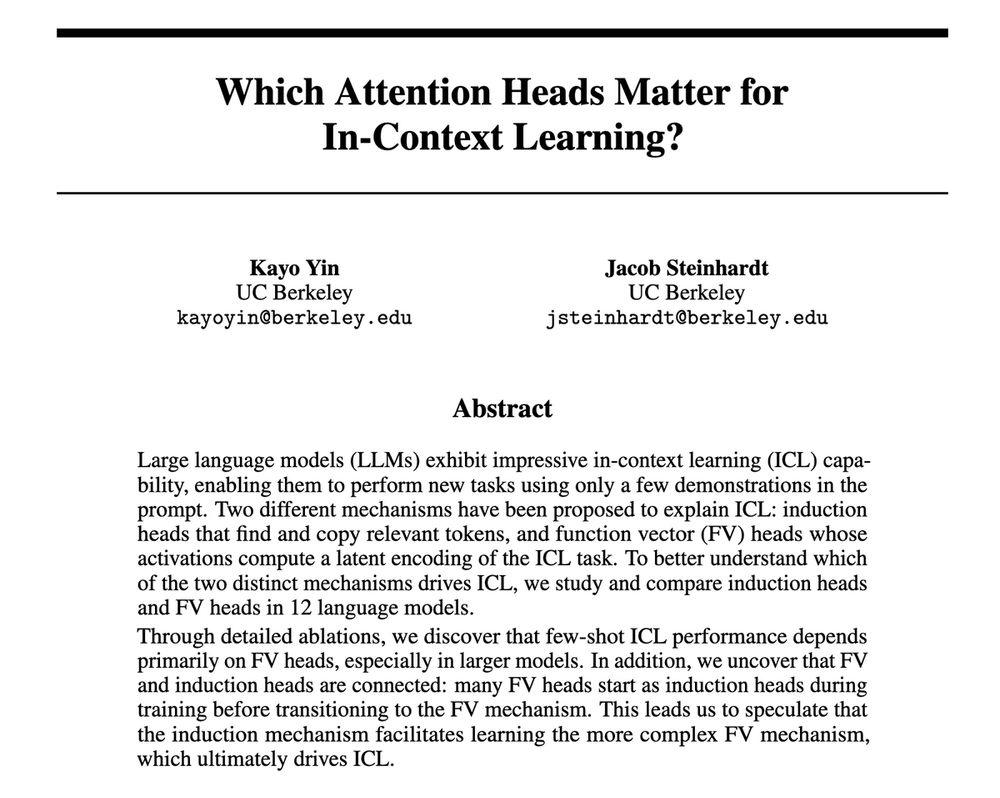

Which Attention Heads Matter for In-Context Learning?

Large language models (LLMs) exhibit impressive in-context learning (ICL) capability, enabling them to perform new tasks using only a few demonstrations in the prompt. Two different mechanisms have be...

More evidence of the importance of analyzing training dynamics for interpretability! Induction heads might serve as *preliminary* function vector (FV) heads (which directly compute in-context learning tasks). Ultimately, LMs rely on FV heads more than on induction heads for ICL. From @kayoyin.bsky.social

03.03.2025 16:51 — 👍 14 🔁 2 💬 2 📌 0

On Nov. 7th, I told my sociolinguistics students this was going to happen. They did an activity on language policy and one of the lessons was that repressive regimes always try to criminalize languages that are not ideologically associated with nationalism. I’m sorry I was right.

28.02.2025 17:22 — 👍 468 🔁 157 💬 17 📌 5

How do we reconcile this with previous studies on ICL?

The key differences are that previous works:

- measure ICL using differences between token losses, which we find behaves differently from few-shot ICL accuracy

- don't control for overlap between induction heads and FV heads

- focus on small models
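A minimal sketch of the two measurements contrasted in the first bullet. It assumes a token-loss ICL score defined as the mean loss at a late context position minus the mean loss at an early one, and few-shot accuracy defined as exact match on the query answer. The function names, positions, and toy numbers are hypothetical and are not taken from the paper.

```python
from statistics import mean

def token_loss_icl_score(losses, early, late):
    """Token-loss ICL score (assumed definition).

    losses: list of sequences, each a list of per-token losses.
    Returns mean loss at position `late` minus mean loss at position `early`;
    more negative values mean later tokens are predicted better.
    """
    return mean(seq[late] for seq in losses) - mean(seq[early] for seq in losses)

def few_shot_accuracy(predictions, answers):
    """Few-shot ICL accuracy: fraction of prompts whose predicted answer
    exactly matches the gold answer (after trimming whitespace)."""
    correct = sum(p.strip() == a.strip() for p, a in zip(predictions, answers))
    return correct / len(answers)

# Toy per-token losses for two sequences (illustrative numbers only).
losses = [
    [4.0, 3.5, 3.0, 2.8, 2.5, 2.0],
    [4.2, 3.6, 3.1, 2.9, 2.4, 2.2],
]
print(token_loss_icl_score(losses, early=1, late=5))
print(few_shot_accuracy(["Paris", "Rome", "Berlin"], ["Paris", "Madrid", "Berlin"]))
```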

28.02.2025 16:16 — 👍 0 🔁 0 💬 1 📌 0

Other interesting findings:

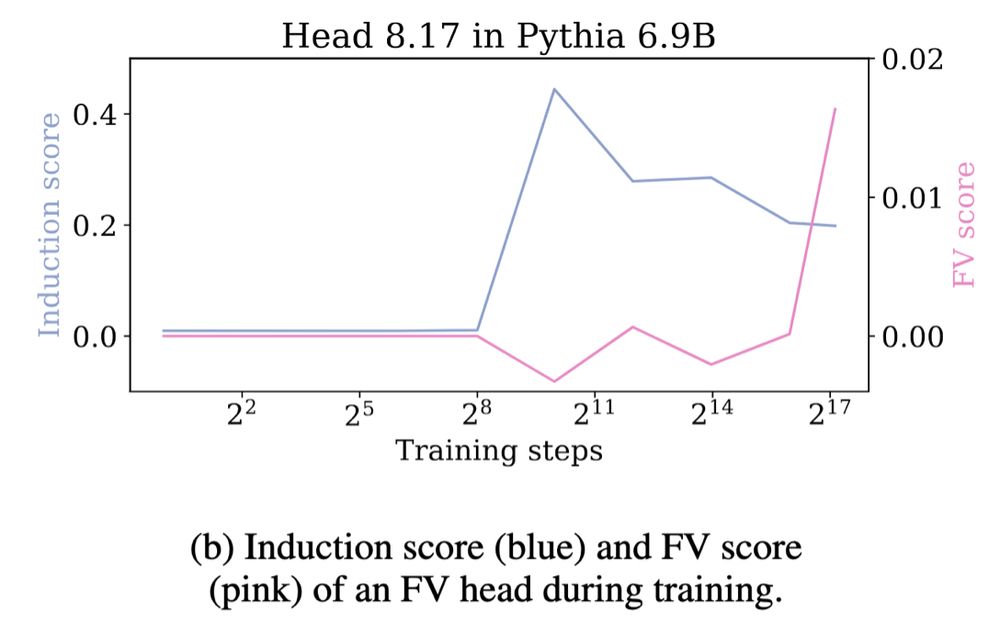

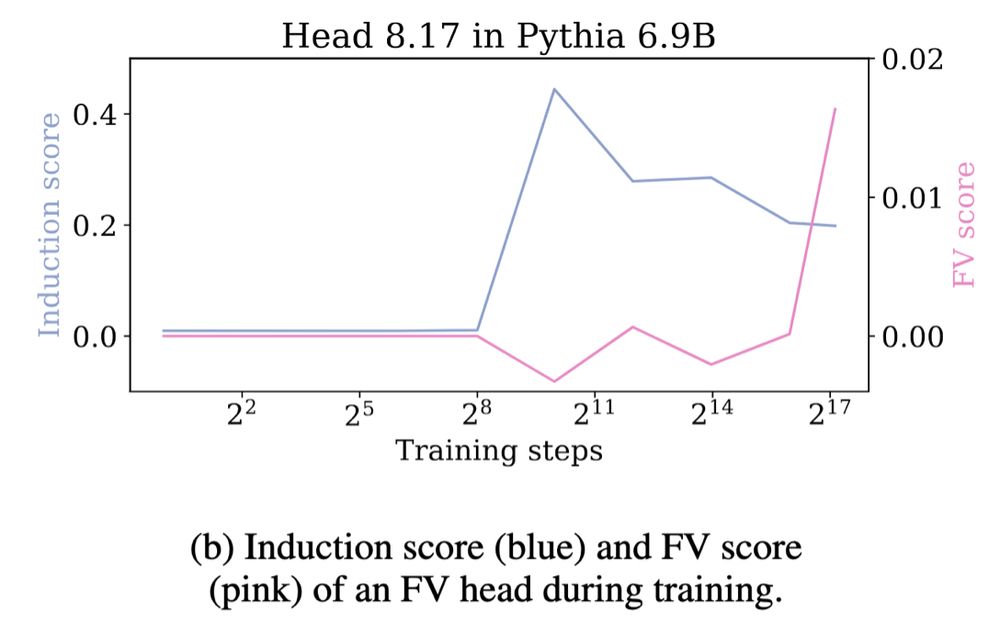

- Compared to other heads, FV heads have relatively high induction scores, and induction heads have relatively high FV scores

- FV heads emerge later in training than induction heads

- ICL accuracy rises around the same time induction heads emerge during training, but increases more gradually

28.02.2025 16:16 — 👍 1 🔁 0 💬 1 📌 0

We also find evidence of induction heads that evolve into FV heads.

Several FV heads have a high induction score earlier in training (around when induction heads first emerge). However, the reverse (induction heads with high FV scores earlier in training) does not occur.

28.02.2025 16:16 — 👍 0 🔁 0 💬 1 📌 0

Two mechanisms have been proposed to explain ICL: induction heads, which find and copy relevant tokens, and FV heads, which compute a latent encoding of the task from the examples.

Our ablations show that FV heads are crucial for few-shot ICL, whereas induction heads are not necessary.
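The "find and copy" behavior of induction heads is often quantified with an induction score. The sketch below assumes one common definition: feed the model a random token block repeated twice, then average how much attention each query in the second copy puts on the token right after its earlier occurrence. It operates on a single head's attention matrix given as nested lists; the helper names and toy patterns are hypothetical, and this is not necessarily the exact scoring used in the paper.

```python
def induction_score(attn, half_len):
    """Induction score for one attention head (assumed definition).

    attn[q][k] is the attention weight from query position q to key position k
    on a sequence of length 2 * half_len made by repeating a random token block
    twice. For a query q in the second copy, the token right after its earlier
    occurrence sits at q - half_len + 1; the score averages the attention there.
    """
    weights = [attn[q][q - half_len + 1] for q in range(half_len, 2 * half_len)]
    return sum(weights) / len(weights)

def one_hot_pattern(n, target_of):
    """Build an n x n attention matrix where query q attends fully to target_of(q)."""
    attn = [[0.0] * n for _ in range(n)]
    for q in range(n):
        attn[q][target_of(q)] = 1.0
    return attn

T = 4  # length of the repeated random block (hypothetical)
# Idealized induction head: attends to the token after the earlier occurrence.
induction_head = one_hot_pattern(2 * T, lambda q: q - T + 1 if q >= T else 0)
# Idealized previous-token head: attends to the immediately preceding token.
prev_token_head = one_hot_pattern(2 * T, lambda q: max(q - 1, 0))
print(induction_score(induction_head, T))   # 1.0
print(induction_score(prev_token_head, T))  # 0.0
```

A previous-token head scores zero here even though it is also a simple copying-related pattern, which is why the score targets the position after the earlier occurrence rather than any nearby token.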

28.02.2025 16:16 — 👍 0 🔁 0 💬 1 📌 0

Induction heads are commonly associated with in-context learning, but are they the primary driver of ICL at scale?

We find that recently discovered "function vector" (FV) heads, which encode the ICL task, are the primary mechanism behind few-shot ICL!

arxiv.org/abs/2502.14010

🧵👇

28.02.2025 16:16 — 👍 22 🔁 7 💬 1 📌 0

I’m visiting Pittsburgh from this Sunday until next Tuesday, and I’d love to catch up with friends and meet new people! DM me :)

this will be my first time back since I graduated 🫶

19.02.2025 02:43 — 👍 1 🔁 0 💬 0 📌 0

I'm presenting this virtually at #TISLR15 very soon, at 2:35pm today Ethiopia time (3:35am Pacific 😀)!

17.01.2025 09:18 — 👍 7 🔁 1 💬 0 📌 0

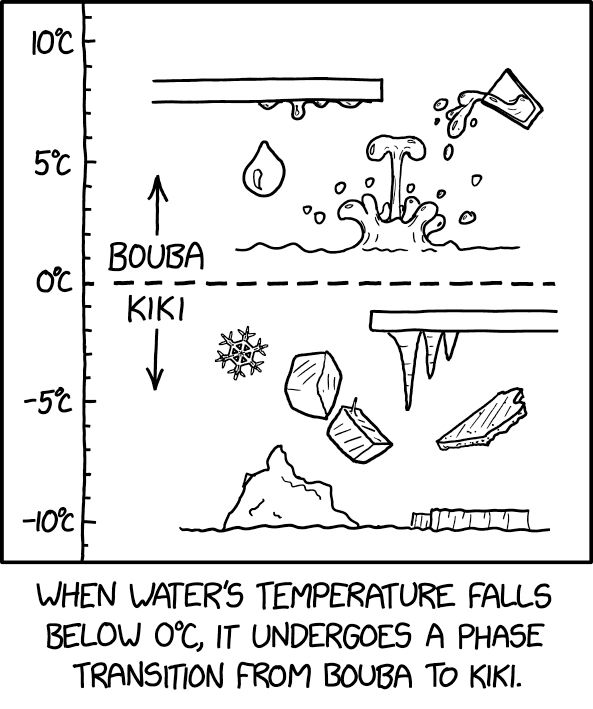

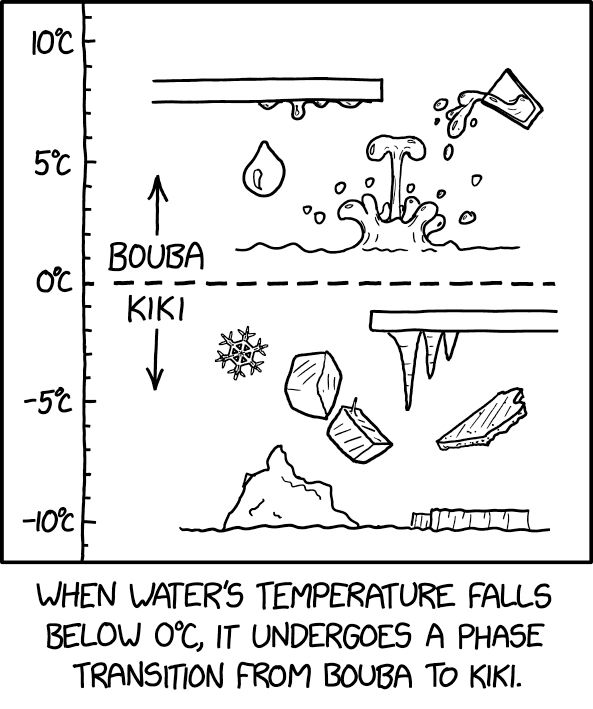

Phase Transition xkcd.com/3025

16.12.2024 20:01 — 👍 20521 🔁 2506 💬 181 📌 141

Thanks for the kind words, Seth 😊 glad you joined the dinner!

17.12.2024 01:56 — 👍 1 🔁 0 💬 0 📌 0

A small white bichon frisé dog running on the water at a beach; the sky behind is in late-sunset colors

took my dog to the beach today. His name is Apollo and he’s 14 years old :)

15.12.2024 12:35 — 👍 13 🔁 0 💬 0 📌 0

sad I’m not in town for this, looks super exciting!! 🍿

04.12.2024 22:07 — 👍 1 🔁 0 💬 0 📌 0

oof yeah I was afraid something like that was maybe going on. I hope she gets the help she needs…

04.12.2024 21:26 — 👍 1 🔁 0 💬 1 📌 0

My latest talk on NLP for signed languages, presented at the Japanese NLP colloquium, doesn’t have an English version yet. Maybe you can fix this by inviting me to give a talk :D

03.12.2024 22:36 — 👍 3 🔁 0 💬 0 📌 0

YouTube video by NLPコロキウム (NLP Colloquium)

Session 67: Natural Language Processing for Sign Languages

Just watched @kayoyin.bsky.social's fantastic talk at the Japanese NLP colloquium on sign language processing. It broadened my perspective on what language is and how AI can support Deaf and hard-of-hearing communities. Highly recommended! youtu.be/7dD8wu-Chbo

02.12.2024 21:38 — 👍 3 🔁 1 💬 0 📌 0

ahh yes this is it thank you!! I hallucinated the end haha

26.11.2024 23:20 — 👍 1 🔁 0 💬 1 📌 0

glad to know at least I didn’t just make this up 😭 I think I heard it recently too but can’t remember at all

26.11.2024 12:05 — 👍 1 🔁 0 💬 0 📌 0

this is driving me crazy, does anyone recognize this song?? It’s stuck in my head but I can’t remember where I heard it

26.11.2024 09:51 — 👍 0 🔁 0 💬 2 📌 0

Photo taken from the window outside my office in Berkeley, where the sky is white with clouds and it’s raining

multiple people told me I seem to be in a visibly better mood the past 2 days, which coincides perfectly with Berkeley finally getting rainy weather

22.11.2024 22:53 — 👍 6 🔁 0 💬 0 📌 0

Overall, handshapes in native ASL signs reflect communicative efficiency, but *not in signs borrowed from English*!

Check out our paper+code (w/ Terry Regier & Dan Klein) for more details and why we think that's the case: aclanthology.org/2024.acl-lon...

See you at TISLR in Ethiopia! ☀️ 8/8

21.11.2024 05:40 — 👍 2 🔁 1 💬 0 📌 0

The scatter plot is titled "Fingerspelling" and illustrates the relationship between handshape similarity and English letter confusability.

- **X-axis:** English letter confusability

- **Y-axis:** Handshape similarity

Points on the plot are labeled with pairs of letters representing different handshapes. There is a positive correlation line with the label (r=0.19, p=0.00), indicating a slight positive correlation between English letter confusability and handshape similarity.

What about perceptual effort - could it be correlated with English usage?

Perceptual effort to distinguish between 2 handshapes is very weakly correlated with how often the 2 letters appear in similar contexts in English, and in the "wrong" direction for efficiency. 7/8

21.11.2024 05:40 — 👍 0 🔁 0 💬 2 📌 0

The scatter plot is titled "Fingerspelling" and depicts the relationship between finger independence and English letter frequency.

- **X-axis:** English letter frequency

- **Y-axis:** Finger independence

Points on the plot are labeled with letters representing different handshapes. There is a negative correlation line with the label (r=-0.31, p=0.15), indicating a slight negative correlation between English letter frequency and finger independence.

We also look at handshapes in ASL fingerspelling (used to spell out English words, 1 handshape = 1 letter) and their correlation with letter frequency in English text.

No significant correlation between fingerspelling handshapes and English letter frequency! 6/8

21.11.2024 05:40 — 👍 0 🔁 0 💬 1 📌 0

The image contains two scatter plots comparing finger independence and handshape frequency.

Left Plot: Core signs

Title: "Core signs"

X-axis: Handshape frequency

Y-axis: Finger independence

Points are labeled with letters representing different handshapes.

A negative correlation line is shown with the label (r=-0.46, p=0.04).

Right Plot: Initialized & loan signs

Title: "Initialized & loan signs"

X-axis: Handshape frequency

Y-axis: Finger independence

Points are labeled with letters representing different handshapes.

A nearly flat correlation line is shown with the label (r=-0.06, p=0.81).

We compute the correlation between articulatory effort and handshape frequency in a lexicon of ASL signs (ASL-LEX).

In core signs native to ASL (left), frequent handshapes are easier to produce!

In initialized and loan signs borrowed from English (right), no correlation! 5/8
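The correlation in this post can be sketched as a plain Pearson correlation over handshapes, pairing each handshape's frequency with its articulatory-effort score. This assumes handshape frequency is a simple per-handshape count; the numbers below are hypothetical, not ASL-LEX data, and the p-values shown in the plots would need a separate significance test that this sketch omits.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical per-handshape values: how often each handshape occurs in a
# lexicon, and its finger-independence (articulatory effort) score.
frequency = [120, 80, 40, 20, 10]
effort = [5.0, 8.0, 20.0, 30.0, 41.0]
r = pearson_r(frequency, effort)
print(round(r, 2))  # negative: frequent handshapes are easier in this toy data
```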

21.11.2024 05:40 — 👍 0 🔁 0 💬 1 📌 0

The image is divided into two sections.

On the left side, with a light green background, the title reads "Low handshape similarity (Low perceptual effort)." Below this title, there are two pairs of handshapes (B and X, H and S), each pair connected by a double-headed arrow to indicate low similarity.

On the right side, with a light red background, the title reads "High handshape similarity (High perceptual effort)." Below this title, there are two pairs of handshapes (R and U, N and M), each pair connected by a double-headed arrow to indicate high similarity.

For perceptual effort, we measure handshape similarity.

When two handshapes have similar finger joint angles, they appear more alike, making it harder to distinguish between them perceptually. 4/8
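One way to turn "similar finger joint angles" into a number is sketched below. It assumes each handshape is a vector of joint angles and that similarity falls off with the Euclidean distance between two such vectors; the exact measure and the angle values are hypothetical, not the paper's.

```python
import math

def handshape_similarity(angles_a, angles_b):
    """Perceptual-effort proxy (assumed form): similarity decreases with the
    Euclidean distance between two handshapes' joint-angle vectors. Returns a
    value in (0, 1]; 1 means identical joint angles."""
    return 1.0 / (1.0 + math.dist(angles_a, angles_b))

# Hypothetical joint angles (degrees), one per finger.
shape_1 = [10, 0, 0, 90, 90]
shape_2 = [10, 0, 5, 90, 90]   # differs slightly from shape_1
shape_3 = [90, 90, 90, 0, 0]   # differs a lot from shape_1
print(handshape_similarity(shape_1, shape_2))  # high: hard to tell apart
print(handshape_similarity(shape_1, shape_3))  # low: easy to tell apart
```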

21.11.2024 05:40 — 👍 0 🔁 0 💬 1 📌 0

The image is divided into two sections.

On the left side, with a light green background, the title reads "Low finger independence (Low articulatory effort)." Below this title, there are four illustrations of handshapes that require low finger independence (B, C, S, A).

On the right side, with a light red background, the title reads "High finger independence (High articulatory effort)." Below this title, there are four illustrations of handshapes that require high finger independence (W, R, P, H).

For articulatory effort, we measure finger independence.

The more variation there is in finger joint angles within a handshape, the more difficult it is to produce that handshape. 3/8
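A minimal sketch of finger independence as "variation in finger joint angles within a handshape", assuming variation is measured as the population standard deviation of one flexion angle per finger. The angle values are hypothetical toy handshapes, not measurements from the paper.

```python
from statistics import pstdev

def finger_independence(joint_angles):
    """Articulatory-effort proxy (assumed form): population standard deviation
    of a handshape's finger joint angles, in degrees. Uniform angles (all
    fingers in the same configuration) score low; a mix of extended and
    flexed fingers scores high."""
    return pstdev(joint_angles)

# Hypothetical flexion angles (degrees) for thumb, index, middle, ring, pinky.
all_extended = [0, 0, 0, 0, 0]   # every finger in the same configuration
mixed = [90, 0, 0, 0, 90]        # some fingers extended, others flexed
print(finger_independence(all_extended))  # low effort
print(finger_independence(mixed))         # higher effort
```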

21.11.2024 05:40 — 👍 0 🔁 0 💬 1 📌 0

We analyze handshapes used in native ASL signs and in signs borrowed from English to compare efficiency pressures from both ASL and English usage.

To do so, we quantify the articulatory effort needed to produce handshapes and the perceptual effort needed to recognize them👇 2/8

21.11.2024 05:40 — 👍 0 🔁 0 💬 1 📌 0

AI Researcher, Writer

Stanford

jaredmoore.org

language acquisitionist, grad student, and fiber artist just trying my best (pronouns: any, queer: always)

Cognitive computational neuroscientist and vision scientist. NeuroAI. Professor at Harvard University.

Assistant Professor of Computational Linguistics @ Georgetown; formerly postdoc @ ETH Zurich; PhD @ Harvard Linguistics, affiliated with MIT Brain & Cog Sci. Language, Computers, Cognition.

Member of Technical Staff @AnthropicAI / prev @ MosaicML

Postdoctoral fellow at ETH AI Center, working on Computational Social Science + NLP. Previously a PhD in CS at UMD, advised by Philip Resnik. Internships at MSR, AI2. he/him.

On the job market this cycle!

alexanderhoyle.com

PhD student at Cambridge University. Causality & language models. Passionate musician, professional debugger.

pietrolesci.github.io

Anti-cynic. Towards a weirder future. Reinforcement Learning, Autonomous Vehicles, transportation systems, the works. Asst. Prof at NYU

https://emerge-lab.github.io

https://www.admonymous.co/eugenevinitsky

Research in NLP (mostly LM interpretability & explainability).

Assistant prof at UMD CS + CLIP.

Previously @ai2.bsky.social @uwnlp.bsky.social

Views my own.

sarahwie.github.io

Associate professor in NLP, engaged citizen. Tweeting about work, life and stuffs that I care about. All my tweets can be used freely. @zehavoc@mastodon.social @zehavoc (@twitter)

Linguist - curious about depiction and multimodality - hearing - he/him

Postdoctoral researcher at University of Namur

https://sebastienvandenitte.github.io/

Linguist, dartist, attention-deficient, Caribbeanist, in Trinidad and Tobago

Postdoc at @TTIC_Connect, working on sign languages, nonmanuals, multimodality. #FirstGen formerly PhD at @LifeAtPurdue, MA at @unibogazici | she/hers

Doctoral student at University of Göttingen | Sign Linguistics, Iconicity, Multimodality | Hongkonger

M4C-AHRC PhD student at the University of Birmingham.

Sign language sociolinguistics is my passion, working hard to make it a full-time job!

Palestinian from Nazareth living in the UK

computational semanticist. into modular synths and cocktails. http://aaronstevenwhite.io

Assistant Professor in Communication Sciences & Disorders @ChapmanUniversity. Director of the Cognition, Language & Plasticity Lab: http://www.claplab.org/ Co-founder of ASL-LEX database: https://asl-lex.org/ Pronouns: She/her.

Philosopher working on AI alignment, governance and adaptation

Lab: https://mintresearch.org

Self: https://sethlazar.org

Newsletter: https://philosophyofcomputing.substack.com