@caseynewton.bsky.social in re an old discussion about AI denialists, hope you've caught knightcolumbia.org/events/artif...

11.04.2025 15:59 — 👍 2 🔁 0 💬 0 📌 0

@sethlazar.org.bsky.social

Philosopher working on normative dimensions of computing and sociotechnical AI safety. Lab: https://mintresearch.org Self: https://sethlazar.org Newsletter: https://philosophyofcomputing.substack.com

🚨 UPCOMING EVENT: Artificial Intelligence and Democratic Freedoms, April 10-11 at @columbiauniversity.bsky.social & online. In collaboration with Senior AI Advisor @sethlazar.org & co-sponsored by the Knight Institute and @columbiaseas.bsky.social. RSVP: knightcolumbia.org/events/artif...

28.02.2025 16:38 — 👍 26 🔁 12 💬 1 📌 1

New Philosophy of Computing newsletter: share with your philosophy friends. Lots of CFPs, events, opportunities, new papers.

philosophyofcomputing.substack.com/p/normative-...

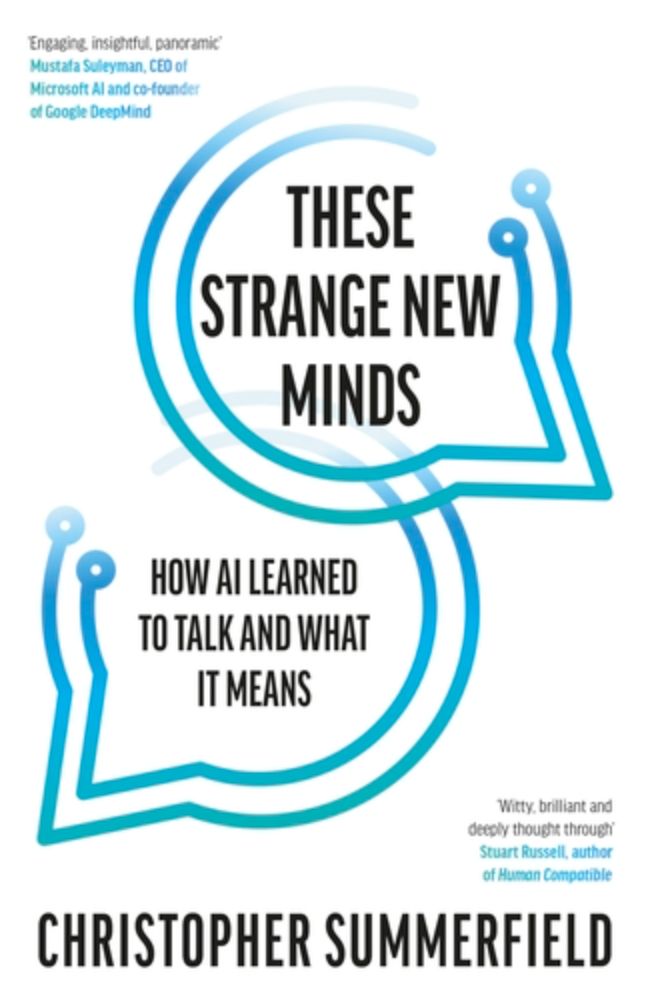

I am a bit bashful about sharing this profile www.thetimes.com/uk/technolog... of me in @thetimes.com, but will do so because it kindly refers to my new book, which is coming out in early March. www.penguin.co.uk/books/460891.... The tech titans pictured seem to be decoration (and not my co-authors).

22.02.2025 14:41 — 👍 59 🔁 12 💬 4 📌 0

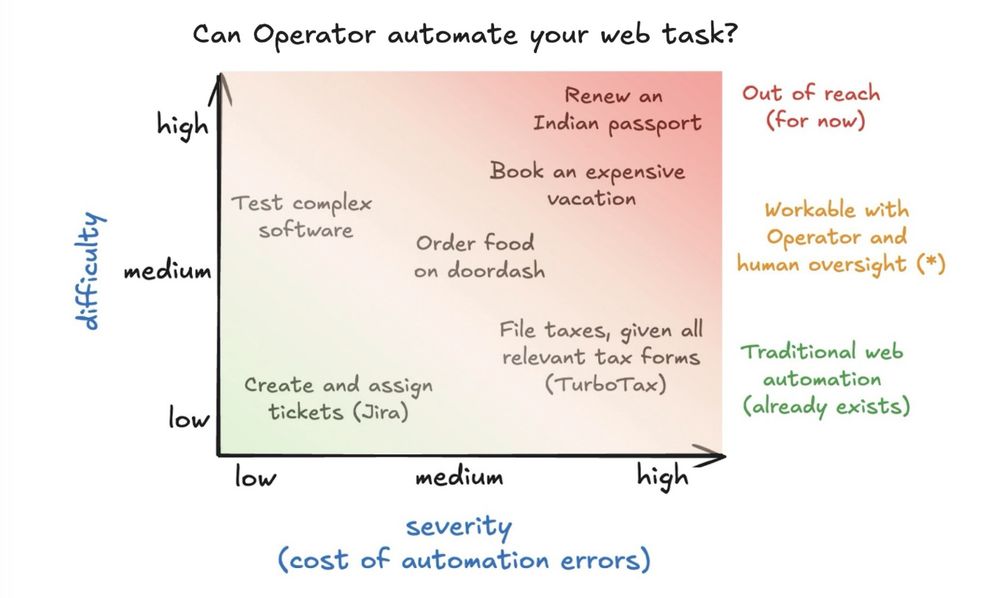

Graph of web tasks along difficulty and severity (cost of errors)

I spent a few hours with OpenAI's Operator automating expense reports. Most corporate jobs require filing expenses, so Operator could save *millions* of person-hours every year if it gets this right.

Some insights on what worked, what broke, and why this matters for the future of agents 🧵

Since Agents are now on everyone's minds, do check out this tutorial on the ethics of Language Model Agents, from June last year.

Looks at what 'agent' means, how LM agents work, what kinds of impacts we should expect, and what norms (and regulations) should govern them.

We're excited to announce that our upcoming symposium on #AI and democracy w/ @sethlazar.org (4/10-4/11, at @columbiauniversity.bsky.social & online) will feature papers by a highly accomplished group of authors from a wide range of disciplines. Check them out: knightcolumbia.org/blog/knight-...

23.01.2025 15:03 — 👍 8 🔁 2 💬 1 📌 0

January update from the normative philosophy of computing newsletter: new CFPs, papers, workshops, and resources for philosophers working on normative questions raised by AI and computing.

16.01.2025 06:48 — 👍 17 🔁 5 💬 1 📌 0

EVENT: Artificial Intelligence and Democratic Freedoms, 4/10-11, at @columbiauniversity.bsky.social & online. We're hosting a symposium w/ @sethlazar.org exploring the risks advanced #AI systems pose to democratic freedoms and interventions to mitigate them. RSVP: knightcolumbia.org/events/artif...

09.01.2025 21:08 — 👍 19 🔁 5 💬 0 📌 1

📢 Excited to share: I'm again leading the efforts for the Responsible AI chapter for Stanford's 2025 AI Index, curated by @stanfordhai.bsky.social. As last year, we're asking you to submit your favorite papers on the topic for consideration (including your own!) 🧵 1/

05.01.2025 17:42 — 👍 13 🔁 8 💬 1 📌 0

Turns out we weren't done for major LLM releases in 2024 after all... Alibaba's Qwen just released QvQ, a "visual reasoning model" - the same chain-of-thought trick as OpenAI's o1 applied to running a prompt against an image

Trying it out is a lot of fun: simonwillison.net/2024/Dec/24/...

Here are my collected notes on DeepSeek v3 so far: simonwillison.net/2024/Dec/25/...

25.12.2024 19:03 — 👍 37 🔁 8 💬 3 📌 1

deepseek-ai/DeepSeek-V3-Base

huggingface.co/deepseek-ai/...

Defo @natolambert.bsky.social but you’ll already know that :)

27.12.2024 02:20 — 👍 2 🔁 0 💬 0 📌 0

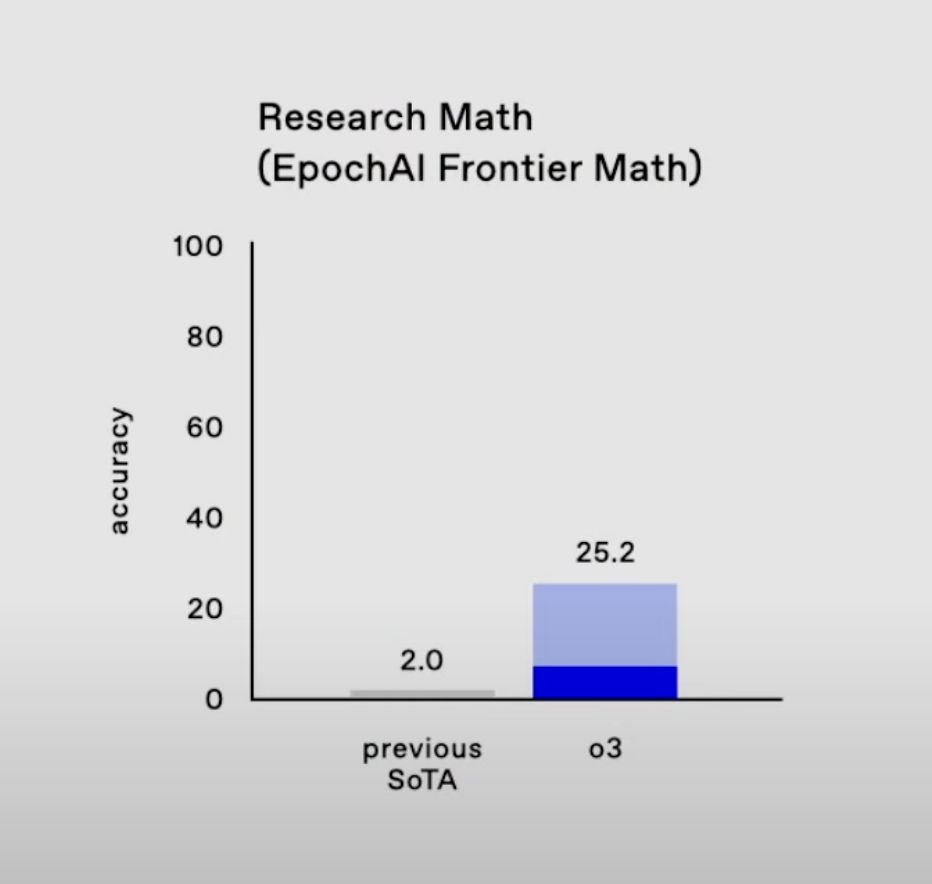

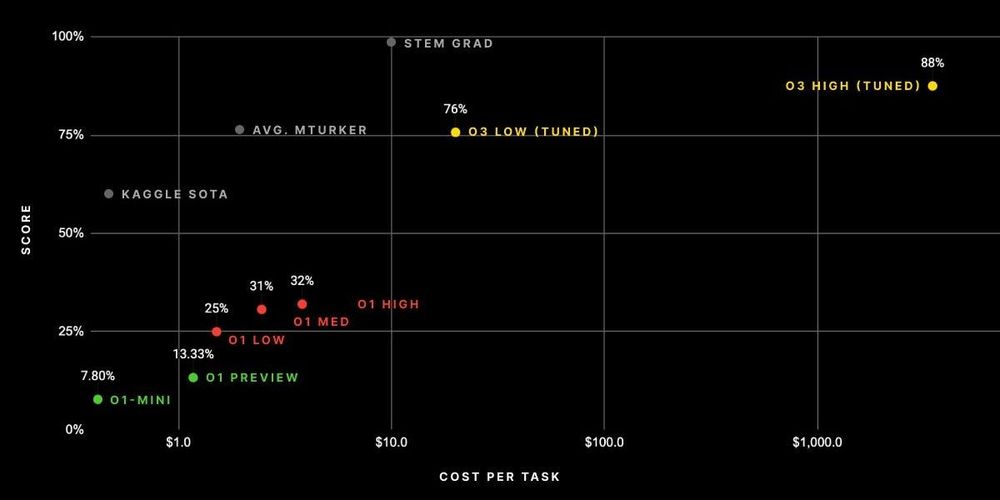

Some of my thoughts on OpenAI's o3 and the ARC-AGI benchmark

aiguide.substack.com/p/did-openai...

OpenAI skips o2, previews o3 scores, and they're truly crazy. Huge progress on the few benchmarks we think are truly hard today. Including ARC AGI.

RIP to people who say any of "progress is done," "scale is done," or "LLMs can't reason."

2024 was awesome. I love my job.

OpenAI's o3: The grand finale of AI in 2024

A step change as influential as the release of GPT-4. Reasoning language models are the current and next big thing.

I explain:

* The ARC prize

* o3 model size / cost

* Dispelling training myths

* Extreme benchmark progress

There's quite a lot! But it's all over on the other place. Crickets here...

26.12.2024 08:46 — 👍 1 🔁 0 💬 1 📌 0

I'm not seeing (here) much discussion of o3. If you are, could you point me to who's on here that I'm missing? If you're not: just registering that o3's performance on SWE-bench Verified is *bananas*, and likely to have massive impacts in 2025.

26.12.2024 06:04 — 👍 20 🔁 1 💬 5 📌 0

Busy shopping day in Causeway Bay (long exposures handheld with Spectre App)

23.12.2024 03:04 — 👍 2 🔁 0 💬 1 📌 0

Feeling good (after o3) about some of the bets made in these papers… Human-level software agents now seem nailed on for the near term.

21.12.2024 05:30 — 👍 4 🔁 0 💬 0 📌 0

Two papers on anticipating and evaluating AI agent impacts now ready for (private) comments: if you're interested in how language agents might reshape democracy, or in how *platform agents* might intensify the worst features of the platform economy (but could also fix it), lmk.

20.12.2024 08:28 — 👍 6 🔁 0 💬 3 📌 1

As one of the vice chairs of the EU GPAI Code of Practice process, I co-wrote the second draft, which just went online. Feedback is open until mid-January; please let me know your thoughts, especially on the internal governance section!

digital-strategy.ec.europa.eu/en/library/s...

🤔

20.12.2024 01:01 — 👍 1 🔁 0 💬 0 📌 0

Title card: Alignment Faking in Large Language Models by Greenblatt et al.

New work from my team at Anthropic in collaboration with Redwood Research. I think this is plausibly the most important AGI safety result of the year. Cross-posting the thread below:

18.12.2024 17:46 — 👍 126 🔁 29 💬 7 📌 11

We're working hard behind the scenes to finalize the dates and venue for #FAccT2025! While final confirmation is still pending, our tentative conference dates are June 23-26. Expect more updates soon!

18.12.2024 15:54 — 👍 15 🔁 5 💬 0 📌 0