@lacerbi.bsky.social wrote a very nice summary post of our paper here if anyone missed it:

bsky.app/profile/lace...

I can give some more behind-the-scenes information. 🧵

25.03.2025 12:31 — 👍 7 🔁 2 💬 1 📌 0

1/ Introducing ACE (Amortized Conditioning Engine)! Our new AISTATS 2025 paper presents a transformer framework that unifies tasks from image completion to BayesOpt & simulator-based inference under *one* probabilistic conditioning approach. It's Bayes all the way down!

06.03.2025 10:32 — 👍 35 🔁 14 💬 1 📌 3

Interested in amortization + experimental design + decision making? #NeurIPS2024

Come by our poster, starting soon (11-2, East Hall)!

NeurIPS link: neurips.cc/virtual/2024...

Paper: openreview.net/forum?id=zBG...

with @huangdaolang.bsky.social Yujia Guo @samikaski.bsky.social

13.12.2024 17:53 — 👍 15 🔁 1 💬 0 📌 0


I am at #NeurIPS2024 and looking for postdocs. Feel free to reach out if you want to discuss!

We also have other positions: fcai.fi/winter-calls.... My slightly outdated home page is: kaski-lab.com

09.12.2024 21:18 — 👍 17 🔁 9 💬 0 📌 0

1/ Hi all, I am at #NeurIPS2024 and I will be hiring a postdoc in probabilistic machine learning, starting ASAP.

Research interests: amortized, approximate & simulator-based inference, Bayesian optimization, and AI4science.

Get in touch for a chat or come to our posters today 11AM or Friday 11AM!

11.12.2024 16:26 — 👍 25 🔁 11 💬 1 📌 1

Great list! Can I join?

06.12.2024 14:07 — 👍 0 🔁 0 💬 0 📌 0

8/ Join us at our poster session at #NeurIPS2024. Unfortunately this year I can’t attend in person, but @lacerbi.bsky.social will present our work. We are excited to discuss and explore future directions in Bayesian experimental design and amortization!

05.12.2024 12:18 — 👍 1 🔁 0 💬 0 📌 0

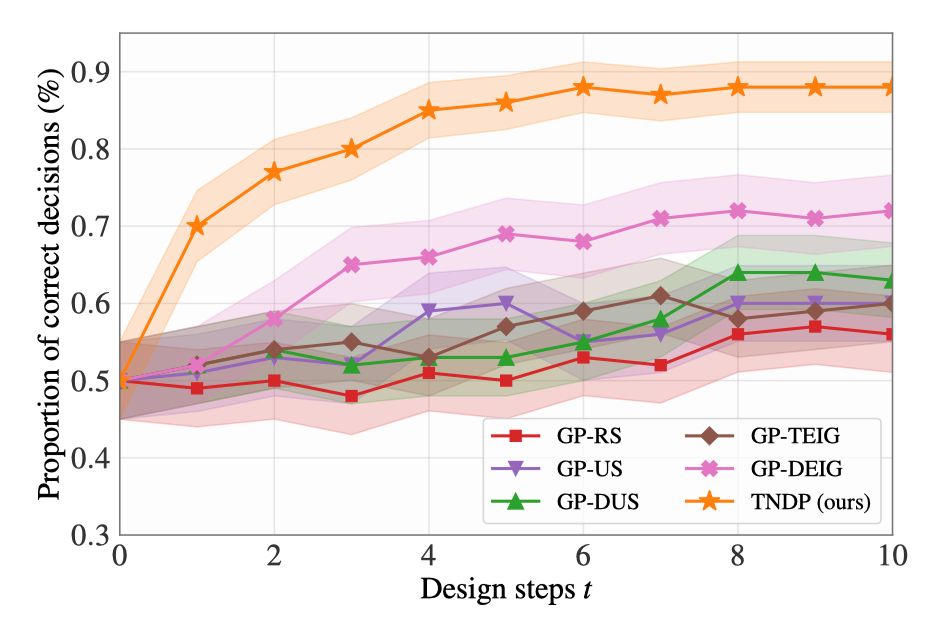

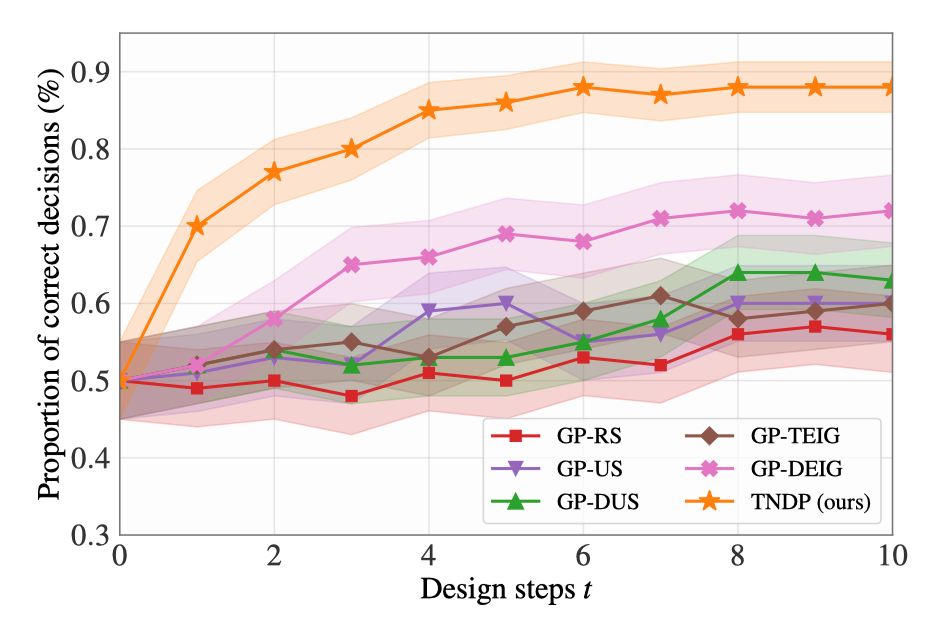

7/ Experiments show TNDP significantly outperforms traditional methods across various tasks, including targeted active learning, hyperparameter optimization, and retrosynthesis planning.

05.12.2024 12:18 — 👍 0 🔁 0 💬 1 📌 0

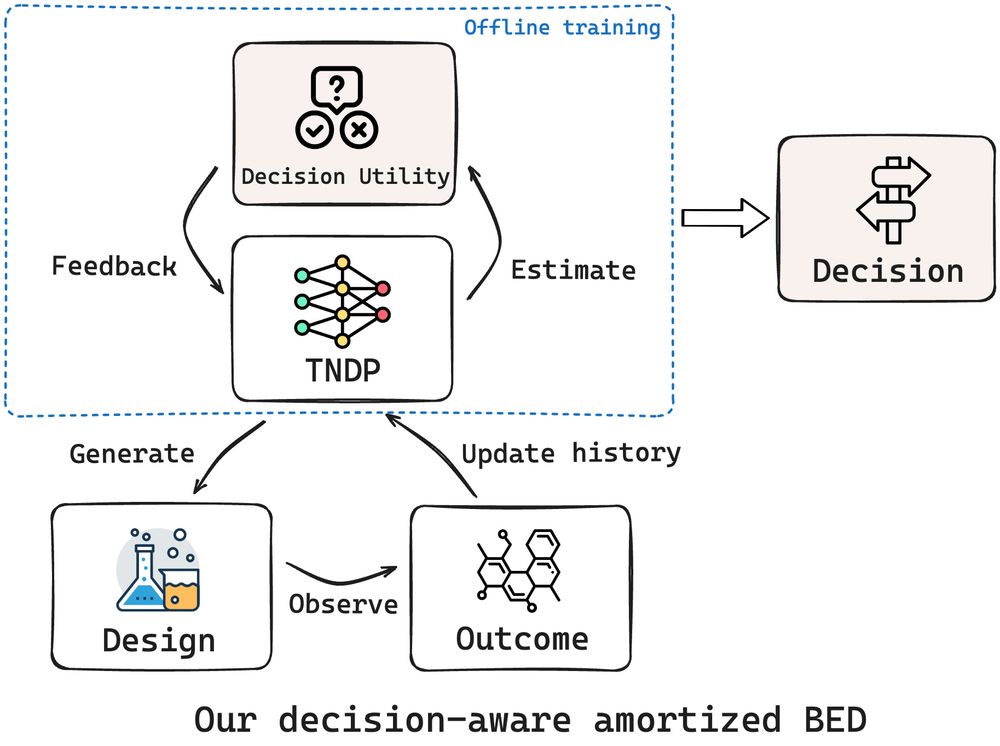

6/ Our Transformer Neural Decision Process (TNDP) unifies experimental design and decision-making in a single framework, allowing instant design proposals while maintaining high decision quality.

05.12.2024 12:18 — 👍 0 🔁 0 💬 1 📌 0

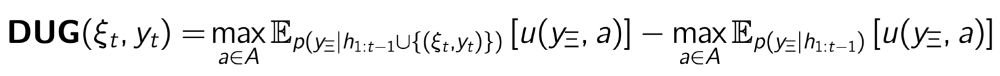

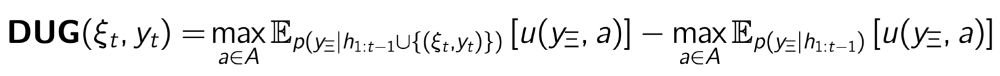

5/ We introduce Decision Utility Gain (DUG) to guide experimental design with a direct focus on optimizing decision-making tasks, moving beyond traditional information-theoretic objectives. DUG measures the improvement in the maximum expected utility from observing the outcome of a new experimental design.

05.12.2024 12:18 — 👍 0 🔁 0 💬 1 📌 0
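
To make the idea in 5/ concrete, here is a minimal toy sketch of a decision utility gain, not the paper's implementation. It assumes a discrete set of latent states, a hypothetical utility table `utility[state][action]`, and a known likelihood for the observed outcome; all names and numbers are made up for illustration. The gain is the best achievable expected utility after the Bayesian update minus the best achievable expected utility before it.

```python
def max_expected_utility(belief, utility):
    """Best expected utility over actions: max_a sum_s belief[s] * utility[s][a]."""
    n_actions = len(utility[0])
    return max(
        sum(belief[s] * utility[s][a] for s in range(len(belief)))
        for a in range(n_actions)
    )


def bayes_update(prior, likelihood):
    """Posterior over states after one observation with the given per-state likelihood."""
    unnormalized = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def decision_utility_gain(prior, likelihood, utility):
    """Change in maximum expected utility caused by observing one outcome."""
    posterior = bayes_update(prior, likelihood)
    return max_expected_utility(posterior, utility) - max_expected_utility(prior, utility)


# Hypothetical toy problem: two states, two actions, utility 1 for matching the state.
prior = [0.5, 0.5]
utility = [[1.0, 0.0],
           [0.0, 1.0]]
# Assumed likelihood of the observed outcome under each state, for one candidate design.
likelihood = [0.9, 0.2]

gain = decision_utility_gain(prior, likelihood, utility)
print(f"decision utility gain: {gain:.4f}")
```

A design whose outcomes tend to sharpen the belief in a decision-relevant way yields a larger gain. In this toy version the gain for a single outcome can also be negative, so in practice one would average over possible outcomes.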

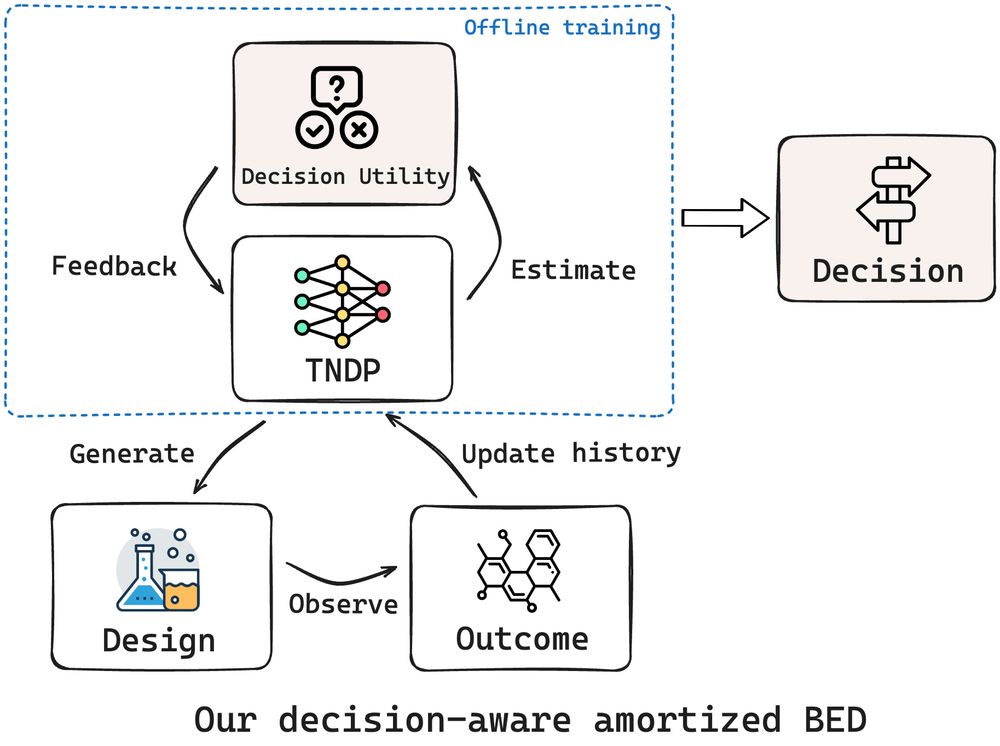

4/ In our work, we present a new amortized BED framework that optimizes experiments directly for downstream decision-making.

05.12.2024 12:18 — 👍 0 🔁 0 💬 1 📌 0

3/ But what if our goal goes beyond parameter inference? In many real-world tasks like medical diagnosis, we care more about making the right decisions than learning model parameters.

05.12.2024 12:18 — 👍 0 🔁 0 💬 1 📌 0

2/ Bayesian Experimental Design (BED) is a powerful framework to optimize experiments aimed at reducing uncertainty about unknown system parameters. Recent amortized BED methods use pre-trained neural networks for instant design proposals.

05.12.2024 12:18 — 👍 0 🔁 0 💬 1 📌 0

Optimizing decision utility in Bayesian experimental design is key to improving downstream decision-making.

Excited to share our #NeurIPS2024 paper on Amortized Decision-Aware Bayesian Experimental Design: arxiv.org/abs/2411.02064

@lacerbi.bsky.social @samikaski.bsky.social

Details below.

05.12.2024 12:18 — 👍 41 🔁 12 💬 1 📌 2

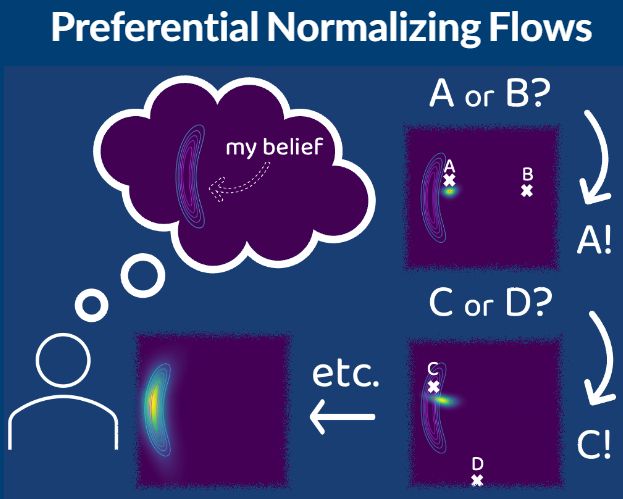

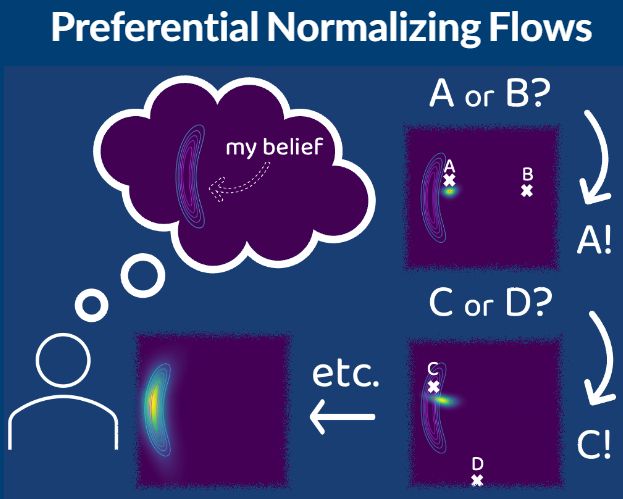

1/ Excuse me, can I interest you in eliciting your beliefs as flexible probability distributions? No worries, we only need pairwise comparisons or rankings, no personal details.

Led by **Petrus Mikkola** and joint with **Arto Klami**, to be presented soon at @neuripsconf.bsky.social #NeurIPS2024

04.12.2024 09:06 — 👍 69 🔁 14 💬 2 📌 0

Applied Scientist | Math PhD | Open Source

PyMC Labs

https://juanitorduz.github.io

Postdoctoral researcher at University of Helsinki / parameterized algorithms, perfect sampling, and Bayesian networks / https://juhaharviainen.com/

Postdoc in probabilistic machine learning, Costa Rican

loriaj.github.io

Interested in Bayesian statistics and heavy tails

El/He/Him

HIIT is a strategic partnership between Aalto University and University of Helsinki.

We offer funded positions and support for researchers working in information and communications technology.

About us

Helsinki IT research

Postdoc at Aalto University in the Probabilistic ML Group

ELLIS PhD student in ML at Aalto University and the University of Manchester

Manchester Centre for AI FUNdamentals | UoM | Alumn UCL, DeepMind, U Alberta, PUCP | Deep Thinker | Posts/reposts might be non-deep | Carpe espresso ☕

Ph.D. Student at Aalto University

Reader in Statistics at the University of Warwick | Inference for Stochastic processes | Simulation-based inference | Stochastic Numerics | Parallel-in-time numerical methods | Stochastic Modelling | Neuroscience | www.warwick.ac.uk/tamborrino

Probabilistic {Machine,Reinforcement} Learning and more at SDU

Probabilistic machine learning and its applications in AI, health, user interaction.

@ellisinstitute.fi, @ellis.eu, fcai.fi, @aifunmcr.bsky.social

|| Psychologist, Advisor, Work Ability Management and Mental Health || Doctoral researcher at University of Helsinki

I am a PhD candidate at the Frankfurt Institute for Advanced Studies, working on simulation-based inference in biophysics.

CS PhD student at UT Austin in #NLP

Interested in language, reasoning, semantics and cognitive science. One day we'll have more efficient, interpretable and robust models!

Other interests: math, philosophy, cinema

https://www.juandiego-rodriguez.com/

Strengthening Europe's Leadership in AI through Research Excellence | ellis.eu

PhD student sup. by Frank Hutter; researching automated machine learning and foundation models for (small) tabular data!

Website: https://ml.informatik.uni-freiburg.de/profile/purucker/

AI professor at Caltech. General Chair ICLR 2025.

http://www.yisongyue.com

Anthropologist - Bayesian modeling - science reform - cat and cooking content too - Director @ MPI for evolutionary anthropology https://www.eva.mpg.de/ecology/staff/richard-mcelreath/

My opinions only here.

👨‍🔬 RS DeepMind

Past:

👨‍🔬 R Midjourney 1y 🧑‍🎓 DPhil AIMS Uni of Oxford 4.5y

🧙‍♂️ RE DeepMind 1y 📺 SWE Google 3y 🎓 TUM

👤 @nwspk