@ryanpsullivan.bsky.social

PhD Candidate at the University of Maryland researching reinforcement learning and autocurricula in complex, open-ended environments. Previously RL intern @ SonyAI, RLHF intern @ Google Research, and RL intern @ Amazon Science

Passive might already have a different meaning in RL (learning from data generated by a different agent’s learning trajectory) arxiv.org/abs/2110.14020

14.03.2025 23:57 — 👍 2 🔁 0 💬 1 📌 0

My interpretation of those stats is that AI writes 90% of low-entropy code. A lot of code is boilerplate, and LLMs are great at writing it. People probably still write 90% and (should) review 100% of meaningful code.

14.03.2025 23:52 — 👍 2 🔁 0 💬 2 📌 0

"As researchers, we tend to publish only positive results, but I think a lot of valuable insights are lost in our unpublished failures."

New blog post: Getting SAC to Work on a Massive Parallel Simulator (part I)

araffin.github.io/post/sac-mas...

Thanks for sharing this! It’s unfortunate that this type of work is so heavily disincentivized. Solving hard problems that push the field forward takes much longer, starts off with a lot of negative results, and rarely has any obvious novelty. But in the long run it helps everyone do better research

10.03.2025 10:10 — 👍 2 🔁 0 💬 0 📌 0

I’m heading to AAAI to present our work on multi-objective preference alignment for DPO from my internship with GoogleAI. If anyone wants to chat about RLHF, RL in games, curriculum learning, or open-ended environments please reach out!

26.02.2025 20:29 — 👍 2 🔁 0 💬 0 📌 0

Looking for a principled evaluation method for ranking of *general* agents or models, i.e. that get evaluated across a myriad of different tasks?

I’m delighted to tell you about our new paper, Soft Condorcet Optimization (SCO) for Ranking of General Agents, to be presented at AAMAS 2025! 🧵 1/N

Let’s meet halfway, machine god that is content to install CUDA and debug async code for me.

12.02.2025 11:11 — 👍 1 🔁 0 💬 0 📌 0

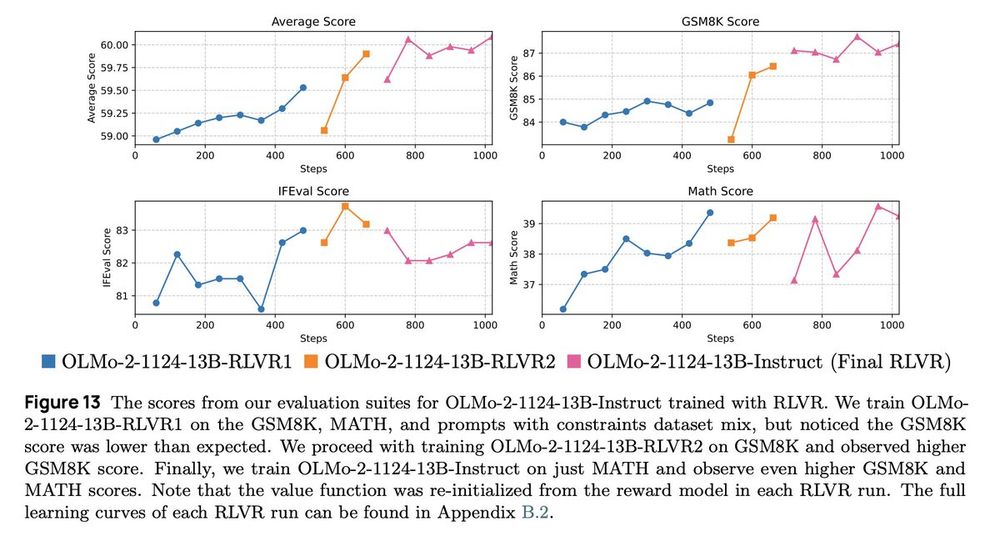

We released the OLMo 2 report! Ready for some more RL curves? 😏

This time, we applied RLVR iteratively! Our initial RLVR checkpoint on the RLVR dataset mix shows a low GSM8K score, so we did another RLVR on GSM8K only and another on MATH only 😆.

And it works! A thread 🧵 1/N

I think it’s interesting because it shouldn’t be possible, even with a really unreasonable compute budget. It would imply that PPO can solve pretty much any problem with enough funding, which I don’t think is true. Beating NetHack efficiently is of course more useful and interesting.

06.01.2025 18:21 — 👍 3 🔁 0 💬 1 📌 0

Nothing can yet, but the best RL baseline for NetHack is (asynchronous) PPO

06.01.2025 18:02 — 👍 3 🔁 0 💬 1 📌 0

My recurrent refrain of the year is to really use the environments in pufferlib. There’s no reason not to have your environments run at a million fps on a single CPU core github.com/PufferAI/Puf...

09.12.2024 14:47 — 👍 25 🔁 3 💬 1 📌 0

Thank you! If you end up trying it out let me know, I'm happy to answer any questions.

05.12.2024 16:21 — 👍 1 🔁 0 💬 0 📌 0

I have a lot more experiments from working on Syllabus so I’ll share more of those over the next few weeks. Now is probably a good time to mention I’m also looking for industry or postdoc positions starting in Fall 2024, so if you’re working on anything RL-related let me know!

05.12.2024 16:13 — 👍 1 🔁 1 💬 0 📌 0

Syllabus opens up a ton of low-hanging fruit in CL. I’m still working on this and actively using it for my research, so if you’re interested in contributing, please feel free to reach out!

Paper: arxiv.org/abs/2411.11318

GitHub: github.com/RyanNavillus...

I’d like to thank my collaborators @ryan-pgd.bsky.social, Ameen Ur Rehman, Xinchen Yang, Junyun Huang, Aayush Verma, Nistha Mitra, and John P. Dickerson as well as @minqi.bsky.social, @samvelyan.com, and Jenny Zhang for their valuable feedback and answers to my many implementation questions.

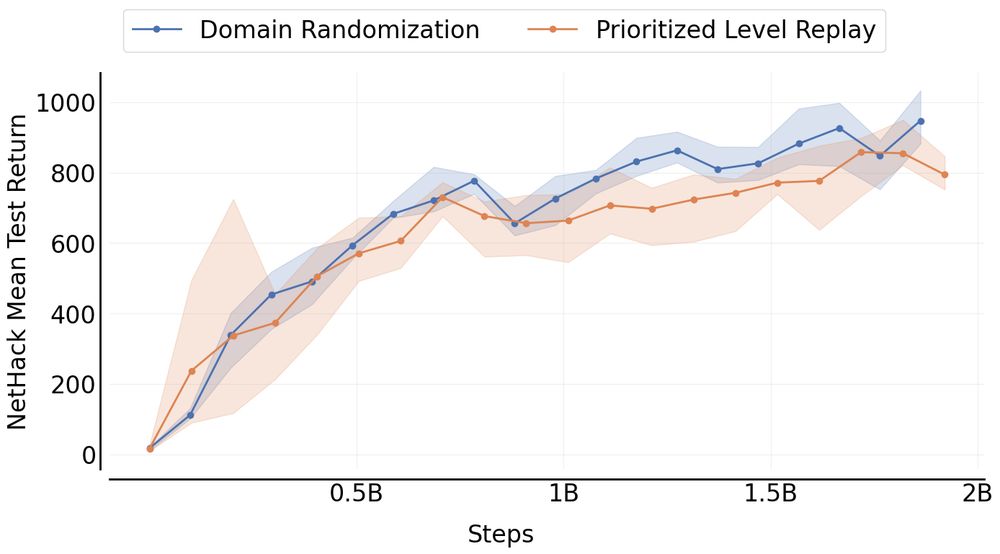

05.12.2024 16:12 — 👍 4 🔁 0 💬 1 📌 0

We have implementations of Prioritized Level Replay, a learning progress curriculum, and Prioritized Fictitious Self Play, plus several tools for manually designing curricula like simulated annealing and sequential curricula. Stay tuned for more methods in the very near future!

05.12.2024 16:12 — 👍 1 🔁 1 💬 1 📌 0

These portable implementations of CL methods work with nearly any RL library, meaning that you only need to implement the method once to guarantee that the same CL code is being used in every project. This minimizes the risk of implementation errors and promotes reproducibility.

05.12.2024 16:12 — 👍 1 🔁 1 💬 1 📌 0

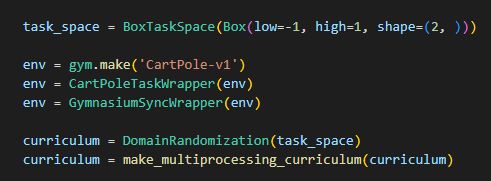

Most importantly, it’s extremely easy to use! You add a synchronization wrapper to your environments and your curriculum, plus a little more configuration, and it just works. For most methods, you don’t need to make any changes to the actual training logic.

05.12.2024 16:12 — 👍 2 🔁 1 💬 1 📌 0

Syllabus helps researchers study CL in complex, open-ended environments without having to write new multiprocessing infrastructure. It uses a separate multiprocessing channel between the curriculum and environments to directly send new tasks and receive feedback

05.12.2024 16:11 — 👍 1 🔁 1 💬 1 📌 0
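
The channel idea described above can be illustrated with a toy sketch. This is not Syllabus's actual API: `CurriculumSync`, `EnvSync`, the scoring rule, and the fake episode return are all hypothetical names and stand-ins chosen for illustration. It uses `queue.Queue` and a thread so it runs anywhere; with real environment processes, `multiprocessing.Queue` offers the same `put`/`get` calls.

```python
import queue
import random
import threading


class CurriculumSync:
    """Hypothetical curriculum-side wrapper: sends tasks, receives feedback."""

    def __init__(self, tasks, task_q, feedback_q):
        self.scores = {task: 0.0 for task in tasks}
        self.task_q = task_q
        self.feedback_q = feedback_q

    def sample_task(self):
        # Toy rule (an assumption): pick the task with the lowest running score.
        return min(self.scores, key=self.scores.get)

    def serve(self, n_episodes):
        for _ in range(n_episodes):
            self.task_q.put(self.sample_task())
            task, episode_return = self.feedback_q.get()
            # Exponential moving average of returns per task.
            self.scores[task] = 0.9 * self.scores[task] + 0.1 * episode_return


class EnvSync:
    """Hypothetical environment-side wrapper: pulls a task, reports a result."""

    def __init__(self, task_q, feedback_q, seed=0):
        self.task_q = task_q
        self.feedback_q = feedback_q
        self.rng = random.Random(seed)

    def run_episode(self):
        task = self.task_q.get()
        # Stand-in for a real rollout: harder (larger) tasks earn less.
        episode_return = self.rng.random() / task
        self.feedback_q.put((task, episode_return))


def demo(n_episodes=20):
    task_q, feedback_q = queue.Queue(), queue.Queue()
    curriculum = CurriculumSync([1, 2, 3], task_q, feedback_q)
    env = EnvSync(task_q, feedback_q)

    def env_loop():
        for _ in range(n_episodes):
            env.run_episode()

    worker = threading.Thread(target=env_loop)
    worker.start()
    curriculum.serve(n_episodes)  # the training loop never touches the queues
    worker.join()
    return curriculum.scores
```

Calling `demo()` returns the per-task running scores. The point of the design is that tasks and feedback travel over their own channel, so the code that collects rollouts and updates the policy does not need to know a curriculum exists.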

As a result, CL research often focuses on relatively simple environments, despite the existence of challenging benchmarks like NetHack, Minecraft, and Neural MMO. Unsurprisingly, many of the methods developed in simpler environments won’t work as well on more complex domains.

05.12.2024 16:11 — 👍 1 🔁 1 💬 1 📌 0

CL is a powerful tool for training general agents, but it requires features that aren't supported by popular RL libraries. This makes it difficult to evaluate CL methods with new RL algorithms or in complex environments that require advanced RL techniques to solve.

05.12.2024 16:11 — 👍 1 🔁 0 💬 1 📌 0

Have you ever wanted to add curriculum learning (CL) to an RL project but decided it wasn't worth the effort?

I'm happy to announce the release of Syllabus, a library of portable curriculum learning methods that work with any RL code!

github.com/RyanNavillus...

Another awesome iteration of Genie! I fully agree with training generalist agents in simulation like this, though I believe in using real games to teach long-term strategies. Still, it’s easy to see how SIMA and Genie will continue to improve, and maybe even give us a true foundation model for RL.

04.12.2024 19:55 — 👍 4 🔁 0 💬 0 📌 0

I translated Arrow’s impossibility theorem to find flaws in popular tourney formats, which was moderately helpful for my project. I wasn’t able to take those ideas any further but I found the connection fascinating. It’s awesome to see those ideas developed into a practical evaluation algorithm.

28.11.2024 02:50 — 👍 4 🔁 0 💬 0 📌 0

This is one of my favorite lines of work in RL. When I was starting my PhD, I was working on a multi-agent evaluation problem, having just finished a “voting math” class my last semester at Purdue. I scribbled some notes about how games in a tournament could be viewed as votes… 1/2

28.11.2024 02:49 — 👍 5 🔁 1 💬 1 📌 0

I just got here, thanks @rockt.ai for putting together an open-endedness starter pack! If there's anyone else working on exploration, curriculum learning, or open-ended environments, leave a reply so I can follow you!

I'll be sharing some cool curriculum learning work in a few days, stay tuned!