belated happy birthday, Marc!

26.01.2026 04:46 — 👍 1 🔁 0 💬 0 📌 0belated happy birthday, Marc!

26.01.2026 04:46 — 👍 1 🔁 0 💬 0 📌 0

Hello all! 👋

I’m delighted to share a 🚨 new preprint 🚨:

“Active Evaluation of General Agents: Problem Definition and Comparison of Baseline Algorithms”.

A paper thread! 🤩📄🧵 1/N

Merry Christmas! ☃️🌲

25.12.2025 06:48 — 👍 3 🔁 0 💬 1 📌 0Maybe the general intelligence has always been behind the algorithm or the prompt? No publicly available eval seems to be safe from researchers overfitting.

29.11.2025 05:08 — 👍 0 🔁 0 💬 0 📌 0It hasn't disappointed thus far!

04.10.2025 05:38 — 👍 0 🔁 0 💬 0 📌 0@sharky6000.bsky.social this may be of interest!

08.08.2025 09:07 — 👍 4 🔁 0 💬 1 📌 0I was following this one during the COVID pandemic, but it has been inactive for quite some time. The original talks' recordings are amazing, though!

16.06.2025 14:20 — 👍 1 🔁 0 💬 1 📌 0Yeah, it's been a period for all of us simultaneously! I have also been pretty busy with thesis/job search. Hopefully, it will be back running in the Fall term!

05.06.2025 15:35 — 👍 1 🔁 0 💬 0 📌 0@aamasconf.bsky.social 2025 was very special for us! We had the opportunity. to present a tutorial on general evaluation of AI agents, and we got a best paper award! Congrats, @sharky6000.bsky.social and the team! 🎉

23.05.2025 14:23 — 👍 13 🔁 1 💬 0 📌 0

In the afternoon we will be giving a tutorial on general evaluation of AI agents.

sites.google.com/view/aamas20... 10/N

Announcing our latest arxiv paper:

Societal and technological progress as sewing an ever-growing, ever-changing, patchy, and polychrome quilt

arxiv.org/abs/2505.05197

We argue for a view of AI safety centered on preventing disagreement from spiraling into conflict.

Congrats, Seth!

01.05.2025 22:07 — 👍 1 🔁 0 💬 1 📌 0

First LessWrong post! Inspired by Richard Rorty, we argue for a different view of AI alignment, where the goal is "more like sewing together a very large, elaborate, polychrome quilt", than it is "like getting a clearer vision of something true and deep"

www.lesswrong.com/posts/S8KYwt...

The quality of London's museums is just amazing! Enjoy!

16.04.2025 01:50 — 👍 3 🔁 0 💬 0 📌 0

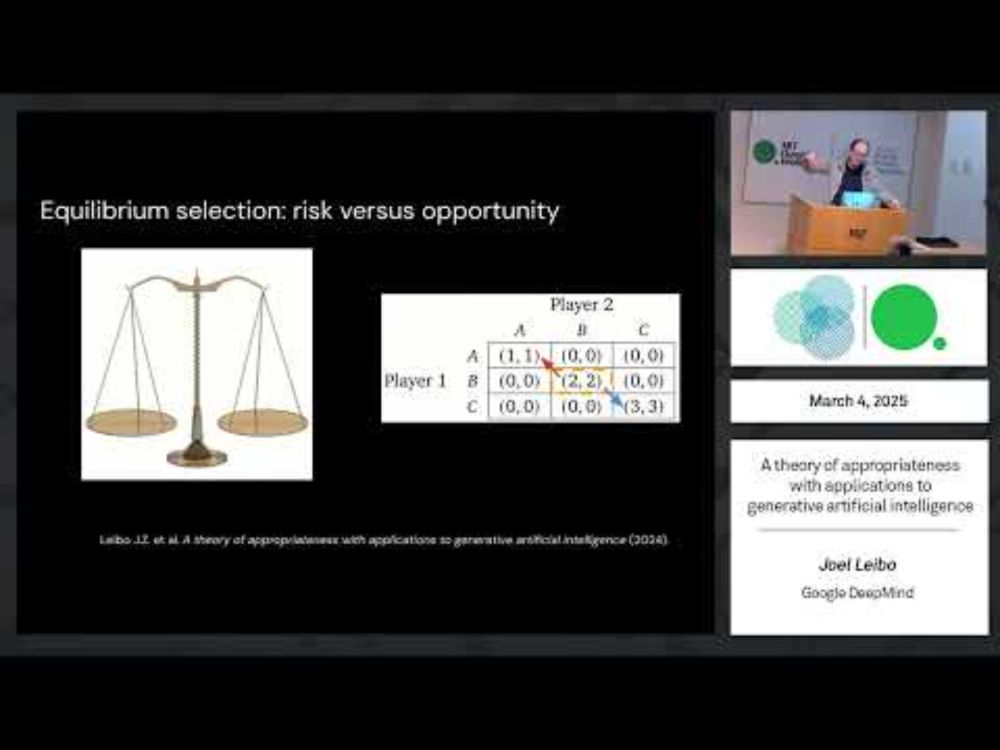

In case folks are interested, here's a video of a talk I gave at MIT a couple weeks ago: youtu.be/FmN6fRyfcsY?...

01.04.2025 20:50 — 👍 8 🔁 3 💬 0 📌 0

Our new evaluation method, Soft Condorcet Optimization is now available open-source! 👍

Both the sigmoid (smooth Kendall-tau) and Fenchel-Young (perturbed optimizers) versions.

Also, an optimized C++ implementation that is ~40X faster than the Python one. 🤩⚡

github.com/google-deepm...

Working at the intersection of social choice and learning algorithms?

Check out the 2nd Workshop on Social Choice and Learning Algorithms (SCaLA) at @ijcai.bsky.social this summer.

Submission deadline: May 9th.

I attended last year at AAMAS and loved it! 👍

sites.google.com/corp/view/sc...

If the AAMAS website is a good reference for this, it may not be, but uncertain atm.

06.03.2025 05:34 — 👍 1 🔁 0 💬 1 📌 0

Come to understand ML evaluation from first principles! We have put together a great AAMAS tutorial covering statistics, probabilistic models, game theory, and social choice theory.

Bonus: a unifying perspective of the problem leveraging decision-theoretic principles!

Join us on May 19th!

Re #2: The key finding there is that the stationary points of SCO contain the margin matrix but, as I said in the note, there is still more work to do!

04.03.2025 19:31 — 👍 1 🔁 0 💬 1 📌 0Thanks! I have been meaning to update the manuscript to standalone without the main paper but instead I may have change the content to a different format 😉. Coming soon!

04.03.2025 19:30 — 👍 1 🔁 0 💬 2 📌 0Ah, I see the confusion... I never used the "identically distributed assumption," only the independence assumption (from 8 to 9).

25.02.2025 19:58 — 👍 1 🔁 0 💬 0 📌 0I'm not sure if I understood your question correctly, but yes? As the post you shared says, "Voila! We have shown that minimizing the KL divergence amounts to finding the maximum likelihood estimate of θ." Maybe I am missing your point 😬

25.02.2025 19:48 — 👍 0 🔁 0 💬 2 📌 0Elo drives most LLM evaluations, but we often overlook its assumptions, benefits, and limitations. While working on SCO, we wanted to understand the SCO-Elo distinction, so I looked and uncovered some intriguing findings and documented them in these notes. I hope you find them valuable!

25.02.2025 02:29 — 👍 2 🔁 1 💬 0 📌 0

Looking for a principled evaluation method for ranking of *general* agents or models, i.e. that get evaluated across a myriad of different tasks?

I’m delighted to tell you about our new paper, Soft Condorcet Optimization (SCO) for Ranking of General Agents, to be presented at AAMAS 2025! 🧵 1/N

I had the convexity results for the online pairwise update (Section B.1.1.1) in my notes (manfreddiaz.github.io/assets/pdf/s...), but it is not clear to me if they hold for the other non-online settings. Worth taking a more detailed pass over the paper!

20.02.2025 20:10 — 👍 2 🔁 0 💬 0 📌 0That's a nice finding, @sacha2.bsky.social! @sharky6000.bsky.social I skimmed over it, and it seems neat! There is an important distinction, though. They work with the "online" Elo regime, departing from the traditional gradient/batch gradient descent updates. (e.g., FIDE doesn't use online updates)

20.02.2025 20:10 — 👍 2 🔁 0 💬 1 📌 0lol 😀

12.02.2025 20:31 — 👍 3 🔁 0 💬 0 📌 0Not that Michael Jordan, but this one en.wikipedia.org/wiki/Michael...

12.02.2025 20:29 — 👍 3 🔁 0 💬 1 📌 0I believe this example conveys, as Prof. Jordan hinted, the need for fresh conceptual frameworks that shift our perspective, help us avoid conceptual confusion, and increase our ability to build the future of AI. I believe ML-SoA provides such framework, but I’d love to hear more perspectives!

11.02.2025 20:57 — 👍 4 🔁 0 💬 1 📌 0