A screenshot of our paper's title, authors, and abstract:

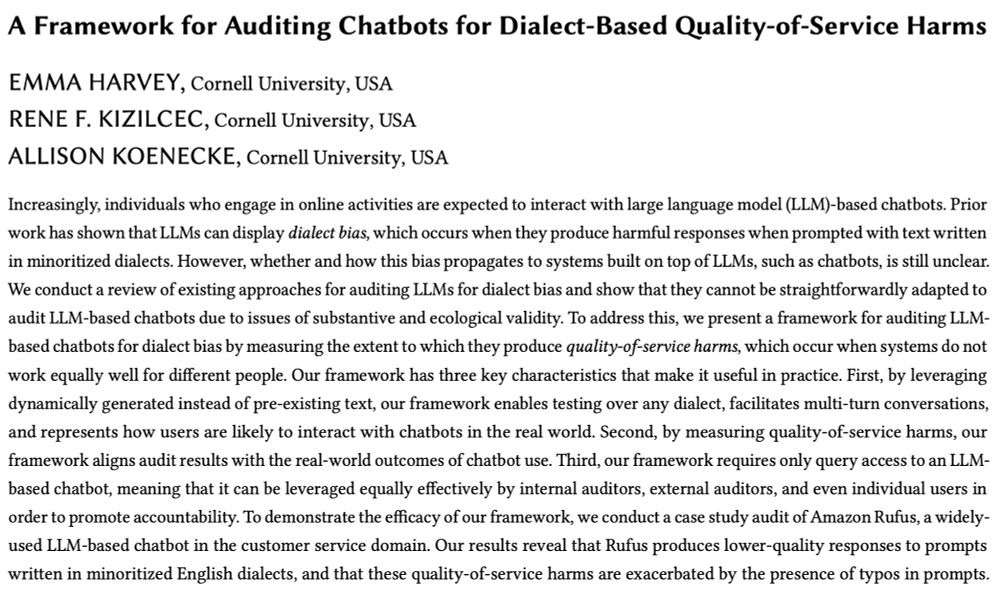

Title: A Framework for Auditing Chatbots for Dialect-Based Quality-of-Service Harms

Authors: Emma Harvey, Rene Kizilcec, Allison Koenecke

Abstract: Increasingly, individuals who engage in online activities are expected to interact with large language model (LLM)-based chatbots. Prior work has shown that LLMs can display dialect bias, which occurs when they produce harmful responses when prompted with text written in minoritized dialects. However, whether and how this bias propagates to systems built on top of LLMs, such as chatbots, is still unclear. We conduct a review of existing approaches for auditing LLMs for dialect bias and show that they cannot be straightforwardly adapted to audit LLM-based chatbots due to issues of substantive and ecological validity. To address this, we present a framework for auditing LLM-based chatbots for dialect bias by measuring the extent to which they produce quality-of-service harms, which occur when systems do not work equally well for different people. Our framework has three key characteristics that make it useful in practice. First, by leveraging dynamically generated instead of pre-existing text, our framework enables testing over any dialect, facilitates multi-turn conversations, and represents how users are likely to interact with chatbots in the real world. Second, by measuring quality-of-service harms, our framework aligns audit results with the real-world outcomes of chatbot use. Third, our framework requires only query access to an LLM-based chatbot, meaning that it can be leveraged equally effectively by internal auditors, external auditors, and even individual users in order to promote accountability. To demonstrate the efficacy of our framework, we conduct a case study audit of Amazon Rufus, a widely-used LLM-based chatbot in the customer service domain. Our results reveal that Rufus produces lower-quality responses to prompts written in minoritized English dialects.

I am so excited to be in 🇬🇷Athens🇬🇷 to present "A Framework for Auditing Chatbots for Dialect-Based Quality-of-Service Harms" by me, @kizilcec.bsky.social, and @allisonkoe.bsky.social, at #FAccT2025!!

🔗: arxiv.org/pdf/2506.04419

23.06.2025 14:44 — 👍 30 🔁 10 💬 1 📌 2

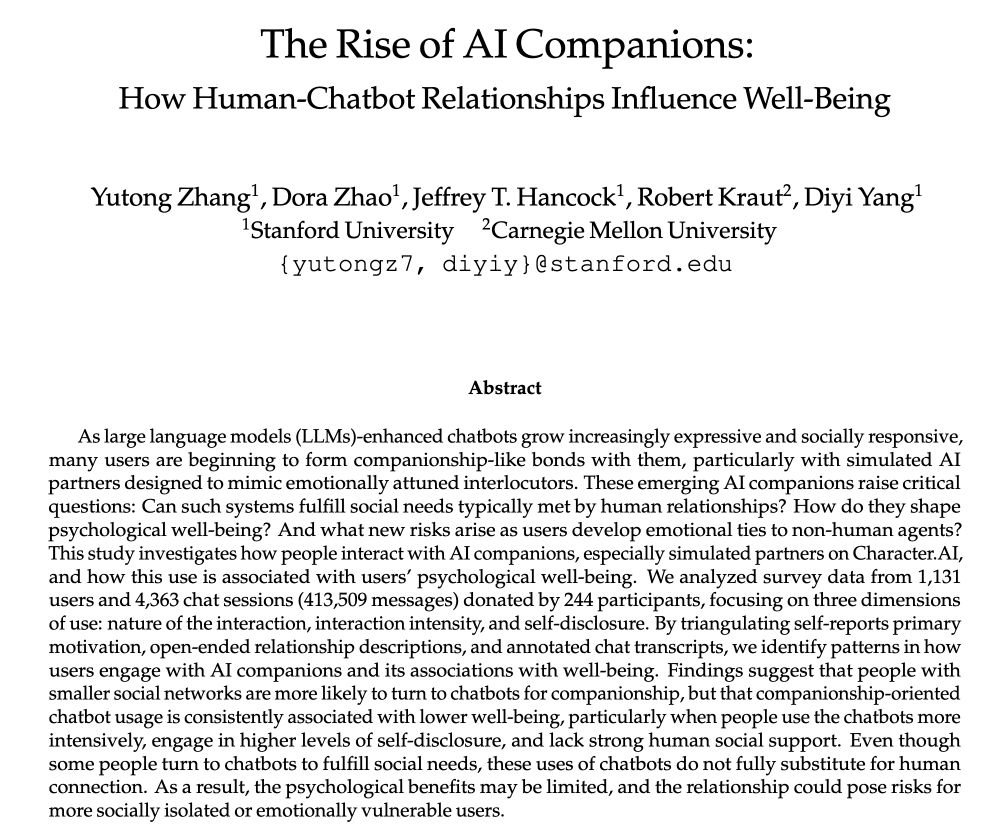

AI companions aren’t science fiction anymore 🤖💬❤️

Thousands are turning to AI chatbots for emotional connection – finding comfort, sharing secrets, and even falling in love. But as AI companionship grows, the line between real and artificial relationships blurs.

18.06.2025 16:27 — 👍 4 🔁 3 💬 1 📌 0

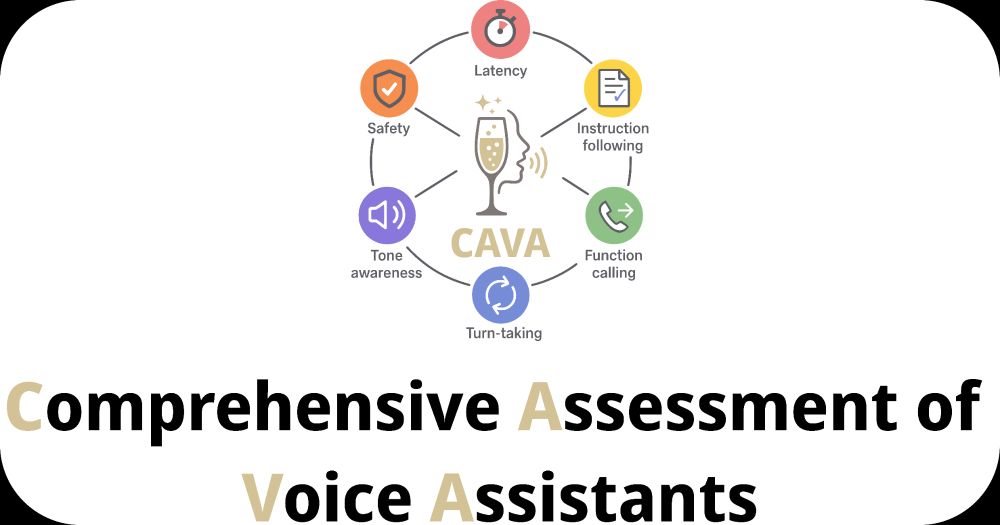

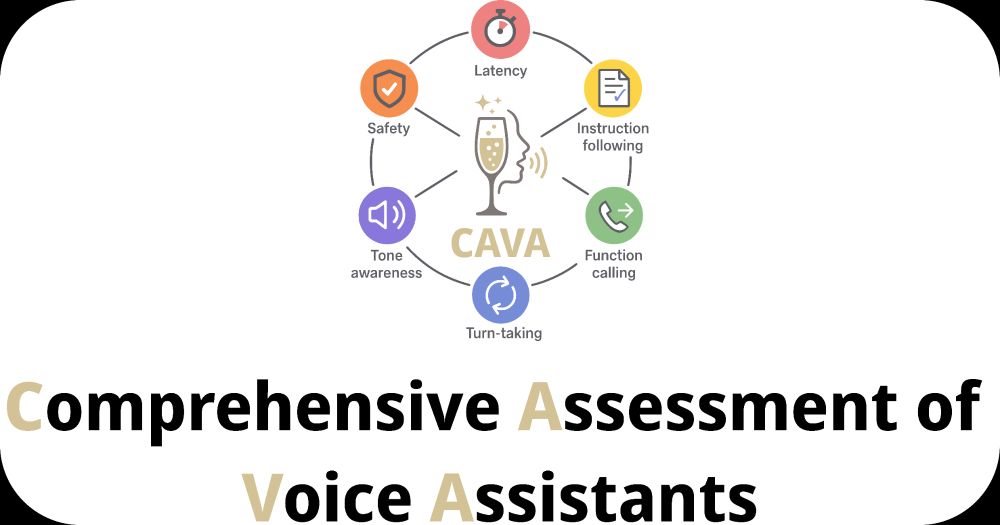

Comprehensive Assessment for Voice Assistants

CAVA is a new benchmark for assessing how well Large Audio Models support voice assistant capabilities.

Introducing CAVA: The Comprehensive Assessment for Voice Assistants

A new benchmark for evaluating the capabilities required for speech-in-speech-out voice assistants!

- Latency

- Instruction following

- Function calling

- Tone awareness

- Turn taking

- Audio safety

TalkArena.org/cava

07.05.2025 16:15 — 👍 0 🔁 1 💬 1 📌 0

Screenshot of Arxiv paper title, "Rejected Dialects: Biases Against African American Language in Reward Models," and author list: Joel Mire, Zubin Trivadi Aysola, Daniel Chechelnitsky, Nicholas Deas, Chrysoula Zerva, and Maarten Sap.

Reward models for LMs are meant to align outputs with human preferences—but do they accidentally encode dialect biases? 🤔

Excited to share our paper on biases against African American Language in reward models, accepted to #NAACL2025 Findings! 🎉

Paper: arxiv.org/abs/2502.12858 (1/10)

06.03.2025 19:49 — 👍 37 🔁 11 💬 1 📌 2

EgoNormia (egonormia.org) exposes a major gap in Vision-Language Models' understanding of the social world: they don't know how to behave when norms about the physical world *conflict* ⚔️ (<45% acc.)

But humans are naturally quite good at this (>90% acc.)

Check it out!

➡️ arxiv.org/abs/2502.20490

04.03.2025 04:44 — 👍 7 🔁 2 💬 0 📌 0

Culture is not trivia: sociocultural theory for cultural NLP. By Naitian Zhou and David Bamman from the Berkeley School of Information and Isaac L. Bleaman from Berkeley Linguistics.

There's been a lot of work on "culture" in NLP, but not much agreement on what it is.

A position paper by me, @dbamman.bsky.social, and @ibleaman.bsky.social on cultural NLP: what we want, what we have, and how sociocultural linguistics can clarify things.

Website: naitian.org/culture-not-...

1/n

18.02.2025 20:45 — 👍 120 🔁 35 💬 5 📌 3

LM agents today primarily aim to automate tasks. Can we turn them into collaborative teammates? 🤖➕👤

Introducing Collaborative Gym (Co-Gym), a framework for enabling & evaluating human-agent collaboration! I've now gotten used to agents proactively asking for my confirmation or my deeper thinking. (🧵 with video)

17.01.2025 17:44 — 👍 23 🔁 10 💬 1 📌 1

Bill Labov died this morning. I'm not coherent enough to talk about how important and influential and brilliant he was. I am very sad.

I was so lucky to know him, and I am grateful every day that he (and Gillian, and Walt, etc.) built an academic field where kindness is expected.

18.12.2024 02:08 — 👍 701 🔁 122 💬 24 📌 25

Talk Arena: Interactive Evaluation of Large Audio Models

With an increasing number of Large *Audio* Models 🔊, which one do users like the most?

Introducing talkarena.org — an open platform where users speak to LAMs and receive text responses. Through open interaction, we focus on rankings based on user preferences rather than static benchmarks.

🧵 (1/5)

10.12.2024 00:01 — 👍 30 🔁 8 💬 3 📌 3

Maybe some starter packs for the Dyirbal noun classes?

1. most animate objects, men

2. women, water, fire, violence, and exceptional animals

3. edible fruit and vegetables

4. miscellaneous (includes things not classifiable in the first three)

24.11.2024 17:53 — 👍 9 🔁 1 💬 0 📌 0

YouTube video by Casey Fiesler

AI is not the GOAT. (Uh oh, your professor is attempting stand up comedy.)

Hi Bluesky! You get to be the very first internet people to see my standup comedy debut. Because I know you’ll be nicer to me than the 12 year olds on TikTok. youtu.be/KqL2ahOvAgg?...

23.11.2024 18:52 — 👍 72 🔁 7 💬 8 📌 3

I noticed a lot of starter packs skewed towards faculty/industry, so I made one of just NLP & ML students: go.bsky.app/vju2ux

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

23.11.2024 19:54 — 👍 176 🔁 55 💬 102 📌 4

go.bsky.app/VZBhuJ5

22.11.2024 02:42 — 👍 1 🔁 0 💬 0 📌 0

👋

19.11.2024 20:48 — 👍 2 🔁 0 💬 0 📌 0

@butanium.bsky.social I nominate @aryaman.io

19.11.2024 16:57 — 👍 2 🔁 0 💬 0 📌 1

A photo of Boulder, Colorado, shot from above the university campus and looking toward the Flatirons.

I'm recruiting 1-2 PhD students to work with me at the University of Colorado Boulder! Looking for creative students with interests in #NLP and #CulturalAnalytics.

Boulder is a lovely college town 30 minutes from Denver and 1 hour from Rocky Mountain National Park 😎

Apply by December 15th!

19.11.2024 10:38 — 👍 307 🔁 136 💬 10 📌 12

Repost if you’ve participated in a Summer Institute in Computational Social Science. Let’s get #SICSS Bluesky going!

08.10.2023 19:49 — 👍 52 🔁 64 💬 0 📌 3

resources | Julia Mendelsohn

Materials that some people might find helpful

I'm sharing materials from my academic job search last year! Includes research, teaching, and diversity statements, plus my UMD cover letter and job talk slides. (I applied for a mix of iSchool, data sci, CS, and linguistics positions.) Feel free to share!

juliamendelsohn.github.io/resources/

18.11.2024 16:00 — 👍 70 🔁 12 💬 0 📌 1

All the ACL chapters are here now: @aaclmeeting.bsky.social @emnlpmeeting.bsky.social @eaclmeeting.bsky.social @naaclmeeting.bsky.social #NLProc

19.11.2024 03:48 — 👍 107 🔁 37 💬 1 📌 3

I wanted to contribute to "Starter Pack Season" with one for Stanford NLP+HCI: go.bsky.app/VZBhuJ5

Here are some other great starter packs:

- CSS: go.bsky.app/GoEyD7d + go.bsky.app/CYmRvcK

- NLP: go.bsky.app/SngwGeS + go.bsky.app/JgneRQk

- HCI: go.bsky.app/p3TLwt

- Women in AI: go.bsky.app/LaGDpqg

15.11.2024 19:20 — 👍 25 🔁 10 💬 2 📌 2

Ready for another Computational Social Science Starter Pack?

Here is number 2! More amazing folks to follow! Many students and the next gen represented!

go.bsky.app/GoEyD7d

14.11.2024 23:42 — 👍 78 🔁 53 💬 33 📌 43

Thanks, Emilio! And thanks for compiling these.

15.11.2024 00:18 — 👍 1 🔁 0 💬 0 📌 0

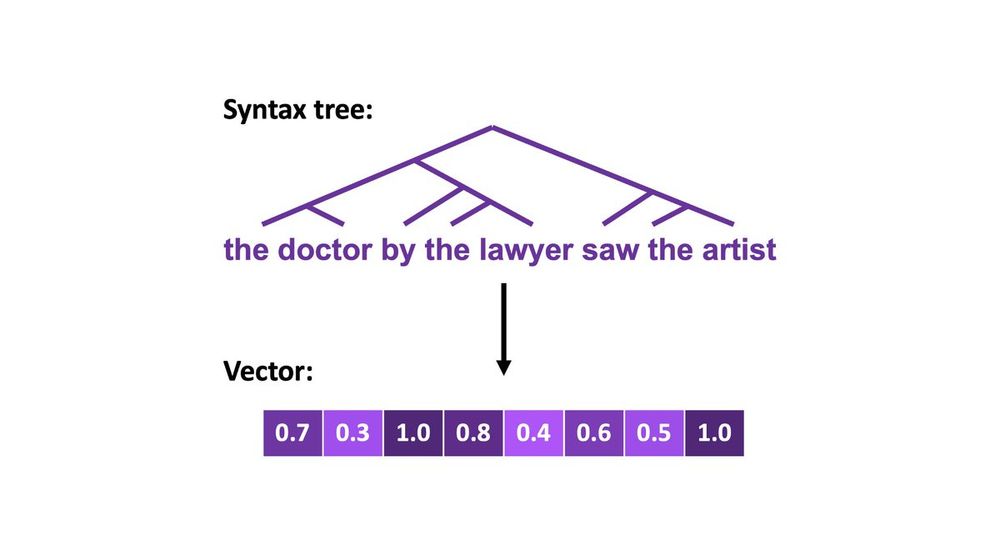

Top: syntax tree for the sentence "the doctor by the lawyer saw the artist"

Bottom: a continuous vector

🤖🧠 I'll be considering applications for postdocs & PhD students to start at Yale in Fall 2025!

If you are interested in the intersection of linguistics, cognitive science, and AI, I encourage you to apply!

Postdoc link: rtmccoy.com/prospective_...

PhD link: rtmccoy.com/prospective_...

14.11.2024 21:39 — 👍 14 🔁 5 💬 0 📌 0

I'd love to join! :)

14.11.2024 07:22 — 👍 0 🔁 0 💬 1 📌 0

Hello! I'm an internet linguist!

I wrote a book called Because Internet about how we use language online: gretchenmcculloch.com/book

I make @lingthusiasm.bsky.social, a podcast that's enthusiastic about linguistics

And I maintain a linguistics starter pack here: go.bsky.app/UUM7Gcx

08.11.2024 17:06 — 👍 325 🔁 84 💬 23 📌 8

The AI Interdisciplinary Institute at the University of Maryland (AIM) is hiring 40 new faculty members in all areas of AI, particularly:

- accessibility,

- sustainability,

- social justice, and

- learning;

building on computational, humanistic, or social scientific approaches to AI.


13.11.2024 12:37 — 👍 64 🔁 19 💬 2 📌 5

🎓 Fully funded PhD Fellowship in Interpretable NLP at the University of Copenhagen & Pioneer Centre for AI available!

📆 Application deadline: 15 Jan 2025

👥 Supervisors: Pepa Atanasova & me

🤝 Reasons to apply: www.copenlu.com/post/why-ucph/

📝 Apply here: employment.ku.dk/phd/?show=16...

#NLProc #XAI

08.11.2024 14:13 — 👍 28 🔁 10 💬 0 📌 3

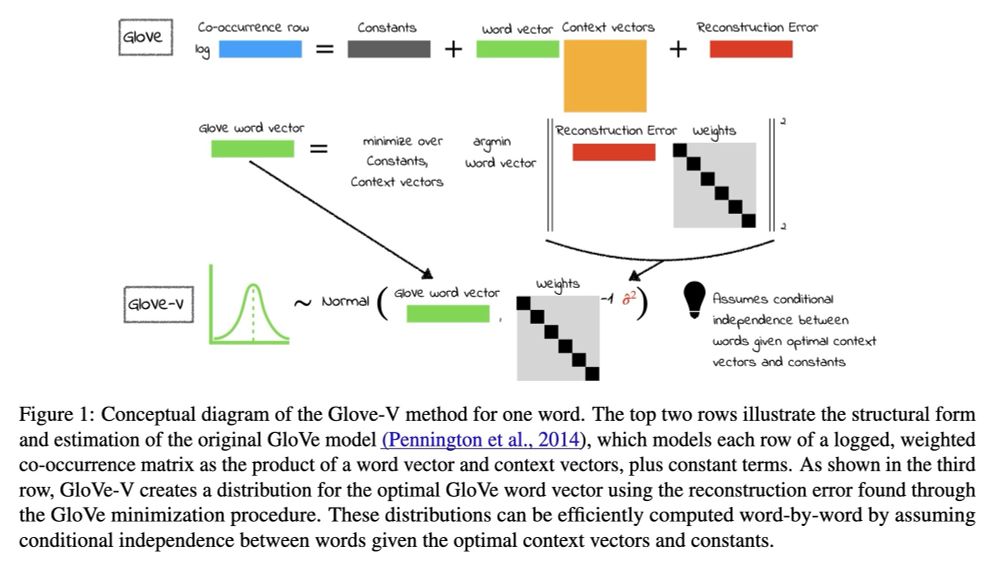

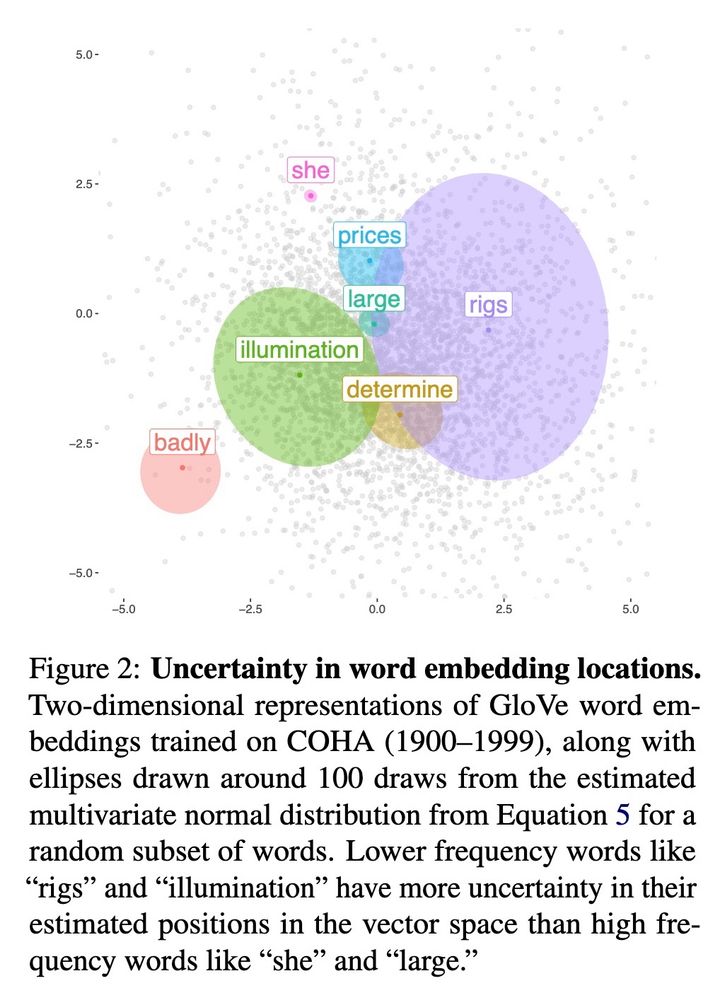

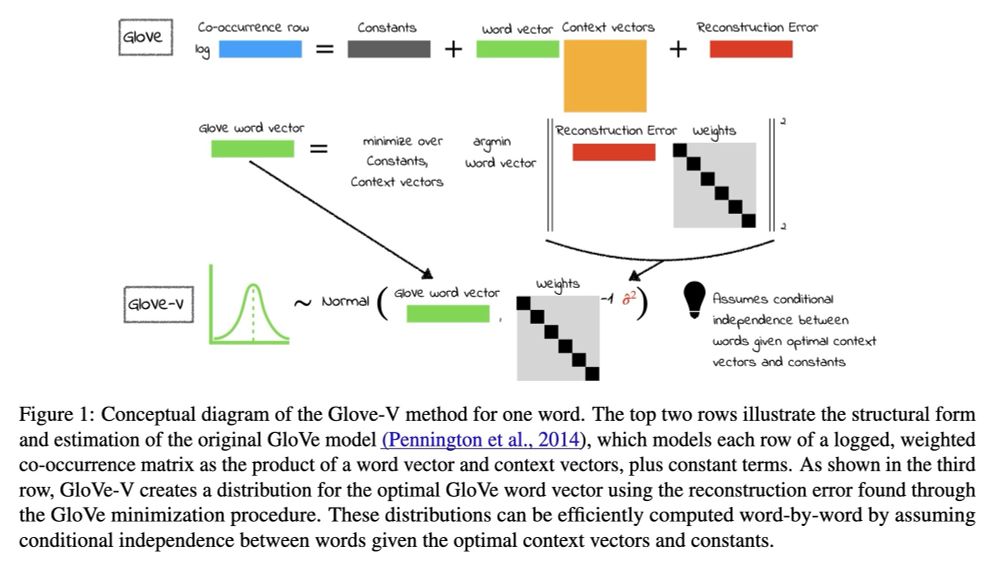

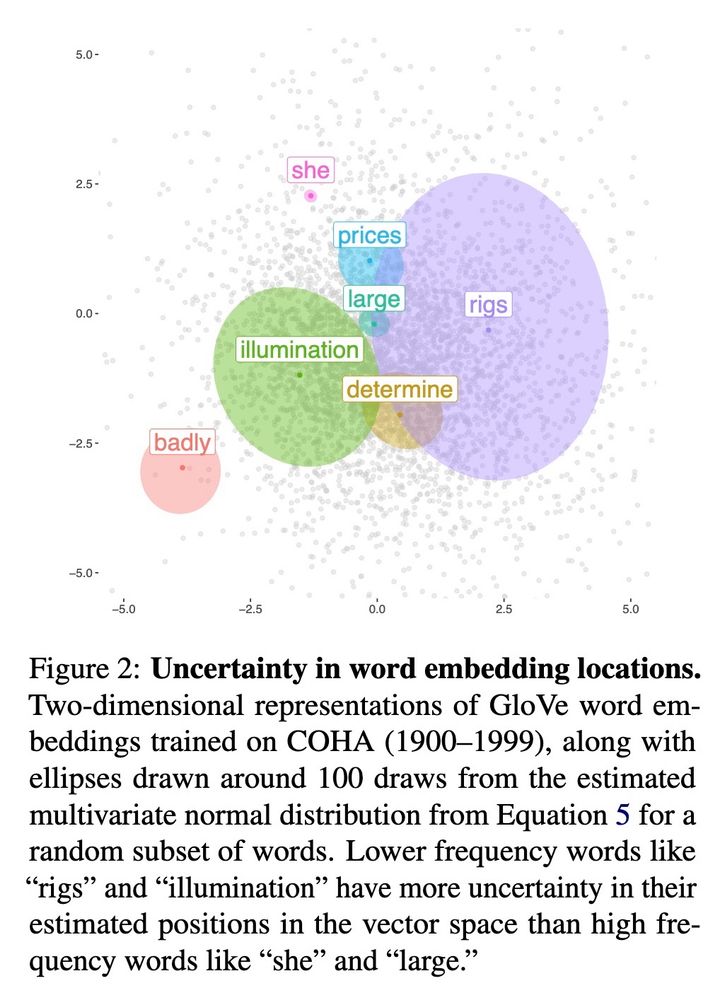

Papers at #EMNLP2024 #1

Statistical Uncertainty in Word Embeddings: GloVe-V

Neural models, from word vectors through transformers, use point-estimate representations. These estimates can have large variances, which often loom large in CSS applications.

Tue Nov 12 15:15-15:30 Flagler

10.11.2024 00:36 — 👍 58 🔁 13 💬 0 📌 0

I guess I should cross post here too.

I'm recruiting one (1) PhD student to work on multimodal embodied agents (VLMs + RL). Please apply to the UCSD CSE program by Dec 15 and list me as a faculty member of interest.

More lab info at pearls.ucsd.edu

08.11.2024 23:43 — 👍 22 🔁 5 💬 2 📌 0

Doctor of Philosophy in Information Studies (PhD) - College of Information (INFO)

This doctoral program prepares students to address the hardest social and technical problems of today and tomorrow.

📣 I am recruiting 1-2 PhD students for Fall 2025 at the University of Maryland College of Information.

Consider applying if you're interested in language, society/politics, and computers!

Deadline Dec 3: ischool.umd.edu/academics/ph...

And pls share with anyone who may be interested!

29.10.2024 17:05 — 👍 26 🔁 14 💬 0 📌 0

Making invisible peer review contributions visible 🌟 Tracking 2,970 exceptional ARR reviewers across 1,073 institutions | Open source | arrgreatreviewers.org

PhD student @ Cornell info sci | Sociotechnical fairness & algorithm auditing | Previously MSR FATE, Penn | https://emmaharv.github.io/

Assistant Professor at @cs.ubc.ca and @vectorinstitute.ai working on Natural Language Processing. Book: https://lostinautomatictranslation.com/

Stanford Health Policy: Interdisciplinary innovation, discovery and education to improve health policy here at home and around the world.

Assistant professor with too many opinions. Texpat, politics academia, gay stuff, anti-carbrain. Not an AI brain genius guy. 🇵🇸🏳️‍🌈🇺🇦🏳️‍⚧️

"See you divas on the streets."

And hold me so tight I almost suffocate

BayesForDays@lingo.lol on Mastodon.

I am an Assistant Professor of Computer Science and Psychology at Northeastern University and a member of the Data Visualization Lab @Khoury.

Youtube: https://www.youtube.com/@DrDataVIS

Postdoc @Stanford Psychology

Cognitive scientist seeking to reverse engineer the human cognitive toolkit. Asst Prof of Psychology at Stanford. Lab website: https://cogtoolslab.github.io

PhD Student in Information Science at UC Berkeley. Computational social science, NLP, online harms.

Applying and improving #NLP/#AI but in a safe way. Teaching computers to tell stories, play D&D, and help people talk (accessibility).

Assistant Prof in CSEE @ UMBC.

🏳️‍🌈♿

https://laramartin.net

Sociologist, Prof. of Organizational Behavior at Stanford who studies culture | Co-director of the Computational Culture Lab | http://comp-culture.org

Master’s student @ltiatcmu.bsky.social. he/him

Social psychologist. Postdoc at StanfordSPARQ.

Rice '16 Stanford PhD '23

Postdoc @milanlp.bsky.social working on LLM safety and societal impacts. Previously PhD @oii.ox.ac.uk and CTO / co-founder of Rewire (acquired '23)

https://paulrottger.com/

associate prof at UMD CS researching NLP & LLMs