There are countless websites that generate color palettes, but I needed a reusable package for my own apps. So I built dittoTones. 🟣

It mimics the perceptual DNA (Lightness/Chroma curves) of popular systems and blends them with your target hue → meodai.github.io/dittoTones/
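Here is a rough sketch of the idea in OKLCH: keep a reference ramp's lightness/chroma curve and swap in a new hue. This is not dittoTones' code or API. `REFERENCE_RAMP` holds made-up placeholder values standing in for a real design system's ramp. `fit_ramp` is a hypothetical helper that assumes the step closest in lightness to your color anchors the ramp, with every chroma value scaled to match that step.

```python
import math

# Placeholder OKLCH (lightness, chroma) pairs for a 10-step ramp (50..900).
# Illustrative numbers only; they do not come from any real design system.
REFERENCE_RAMP = [
    (0.97, 0.02), (0.93, 0.05), (0.86, 0.09), (0.77, 0.14), (0.68, 0.17),
    (0.60, 0.18), (0.52, 0.16), (0.44, 0.13), (0.37, 0.10), (0.30, 0.07),
]


def fit_ramp(reference, target_l, target_c, target_h):
    """Reuse a reference L/C curve for a new color (hypothetical helper).

    The step closest in lightness to the target anchors the ramp. Every
    chroma value is scaled so that this step matches the target chroma,
    and every step takes the target hue.
    """
    anchor = min(range(len(reference)), key=lambda i: abs(reference[i][0] - target_l))
    ref_c = reference[anchor][1]
    scale = target_c / ref_c if ref_c > 0 else 1.0
    ramp = [(l, c * scale, target_h % 360.0) for (l, c) in reference]
    return anchor, ramp


def oklch_to_oklab(l, c, h_deg):
    """Convert polar OKLCH to Cartesian OKLab."""
    h = math.radians(h_deg)
    return (l, c * math.cos(h), c * math.sin(h))
```

For example, `fit_ramp(REFERENCE_RAMP, 0.61, 0.09, 250)` anchors at the sixth step (L = 0.60). Because that step's reference chroma is 0.18, the call halves every chroma value. A real implementation would also need gamut mapping before converting the ramp to sRGB.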

29.12.2025 00:29

In Conversation: Tyler Hobbs & Matt DesLauriers - Unit

Tyler Hobbs and Matt DesLauriers, two pioneers of generative art, sit down together to discuss the intricacies of their chosen artistic medium.

Really enjoyed this conversation with Tyler Hobbs during his recent book signing event at Unit London, where we talk about generative art & our creative process. 🤗

Full 1hr+ recording (!!) in the link below:

→ unitlondon.com/2025-07-09/i...

09.07.2025 21:08

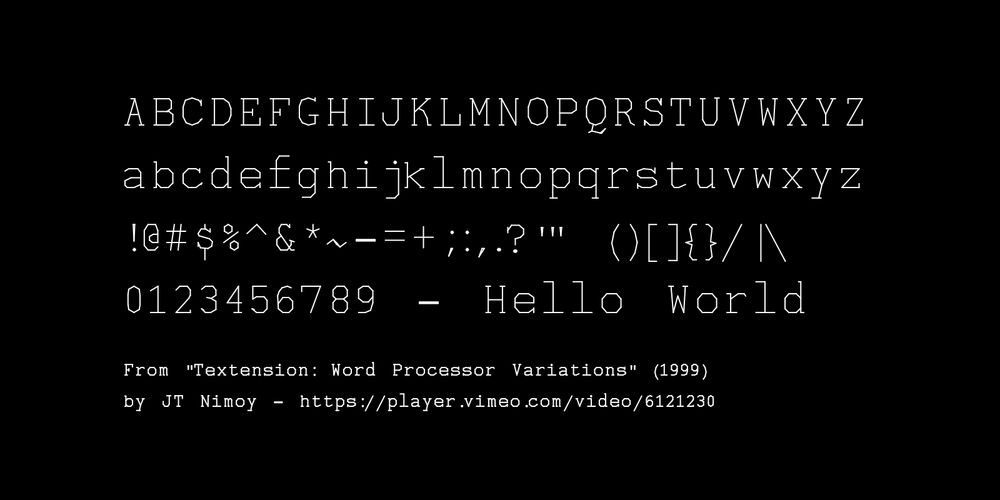

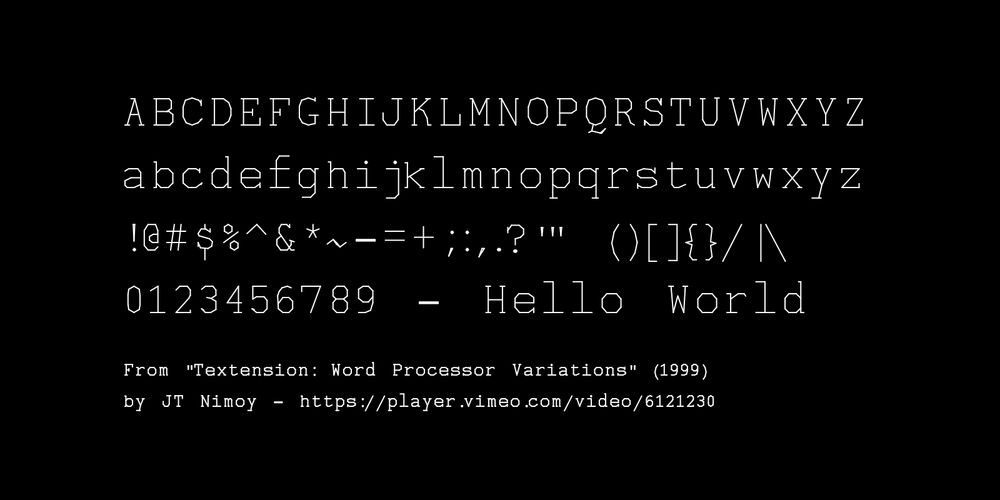

JT Nimoy's monoline font from Textension (1999)

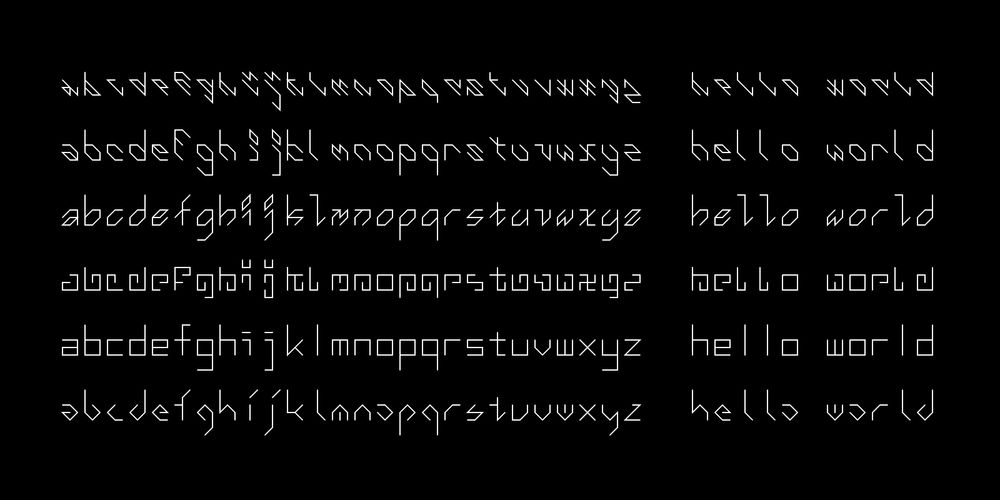

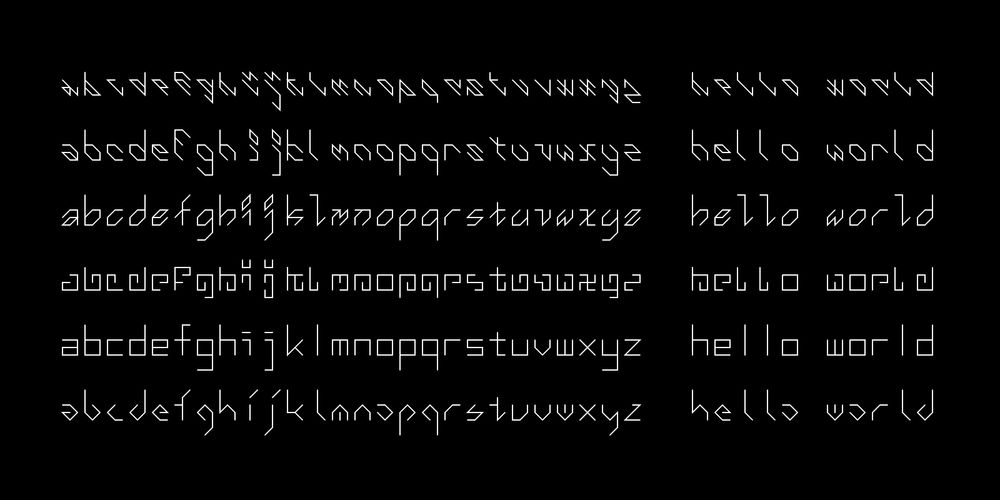

Some of Douglas Hofstadter's "Letter Spirit" fonts (1987-1996)

For some super arcane deep cuts, I've ported JT Nimoy's "Textension" vector font (1999) and Douglas Hofstadter's "Letter Spirit" gridfonts (c.1987-1996) to p5.js, now available in my archive of p5-single-line-font-resources: github.com/golanlevin/p...

04.06.2025 12:08

About Colors 色について

kyndinfo.notion.site/About-Colors...

#p5js #math #physics #code #art

06.06.2025 22:15

What I’m building is a bit more homebrew, tailored specifically to one of my projects. The pigment curves are optimized to match physically measured spectral data, and the mixing model is quite different from spectraljs’ approach.

05.05.2025 10:58

still image—

07.04.2025 18:34

using an evolutionary algorithm to paint the Mona Lisa in 200 rectangles—

🔧 source code in JS:

github.com/mattdesl/snes
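The general shape of such a loop can be sketched as a (1+1) evolutionary hill-climb: mutate one parameter of one rectangle, and keep the change only if the image error does not increase. This is a simplified stand-in, not the code in the linked repo. The 12×12 synthetic target, the fixed 0.5 alpha, and the mutation size are all assumptions, chosen so the example runs quickly in pure Python.

```python
import random

W, H = 12, 12  # tiny canvas so the pure-Python loop stays fast


def make_target():
    """Synthetic grayscale target (stand-in for a real image): dark square on a light ground."""
    return [[0.2 if 3 <= x < 9 and 3 <= y < 9 else 0.9 for x in range(W)] for y in range(H)]


def random_rect(rng):
    """A rectangle as [x0, y0, x1, y1, gray] in normalized [0, 1] coordinates."""
    x0, x1 = sorted((rng.random(), rng.random()))
    y0, y1 = sorted((rng.random(), rng.random()))
    return [x0, y0, x1, y1, rng.random()]


def render(rects, alpha=0.5):
    """Composite rectangles in order over a white canvas with a fixed alpha."""
    img = [[1.0] * W for _ in range(H)]
    for x0, y0, x1, y1, gray in rects:
        for y in range(H):
            if not (y0 <= (y + 0.5) / H < y1):
                continue
            row = img[y]
            for x in range(W):
                if x0 <= (x + 0.5) / W < x1:
                    row[x] = row[x] * (1 - alpha) + gray * alpha
    return img


def loss(img, target):
    """Mean squared error between two grayscale images."""
    return sum((a - b) ** 2 for ra, rb in zip(img, target) for a, b in zip(ra, rb)) / (W * H)


def mutate(rects, rng, sigma=0.1):
    """Copy the genome and nudge one parameter of one rectangle."""
    child = [r[:] for r in rects]
    r = rng.choice(child)
    i = rng.randrange(5)
    r[i] = min(1.0, max(0.0, r[i] + rng.gauss(0.0, sigma)))
    r[0], r[2] = sorted((r[0], r[2]))  # keep x0 <= x1
    r[1], r[3] = sorted((r[1], r[3]))  # keep y0 <= y1
    return child


def evolve(target, n_rects=8, iters=1500, seed=0):
    """(1+1) hill-climb: accept a mutation only if it does not increase the error."""
    rng = random.Random(seed)
    best = [random_rect(rng) for _ in range(n_rects)]
    best_loss = initial = loss(render(best), target)
    for _ in range(iters):
        child = mutate(best, rng)
        child_loss = loss(render(child), target)
        if child_loss <= best_loss:
            best, best_loss = child, child_loss
    return best, initial, best_loss
```

`evolve(make_target())` returns the evolved rectangles along with the starting and final mean squared error. Because only non-worsening mutations are accepted, the error never goes up.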

07.04.2025 18:34

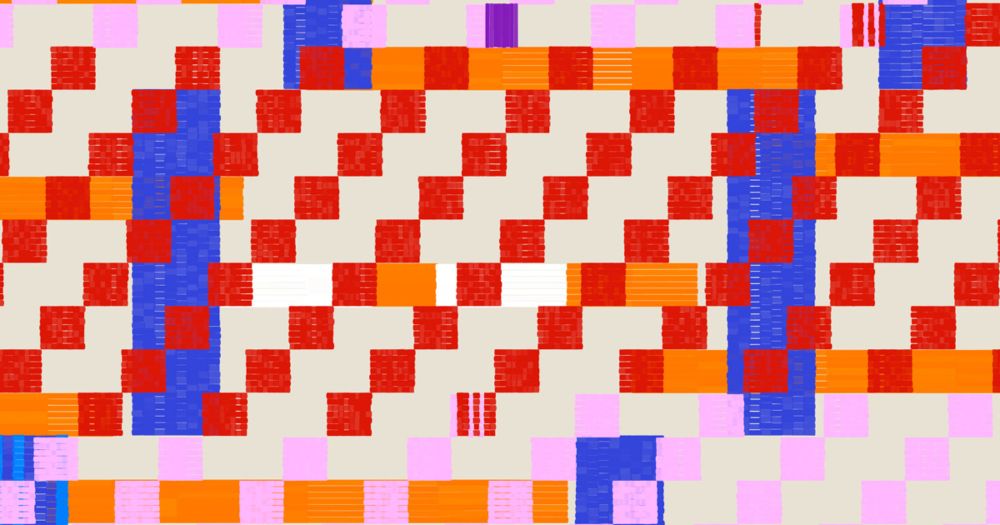

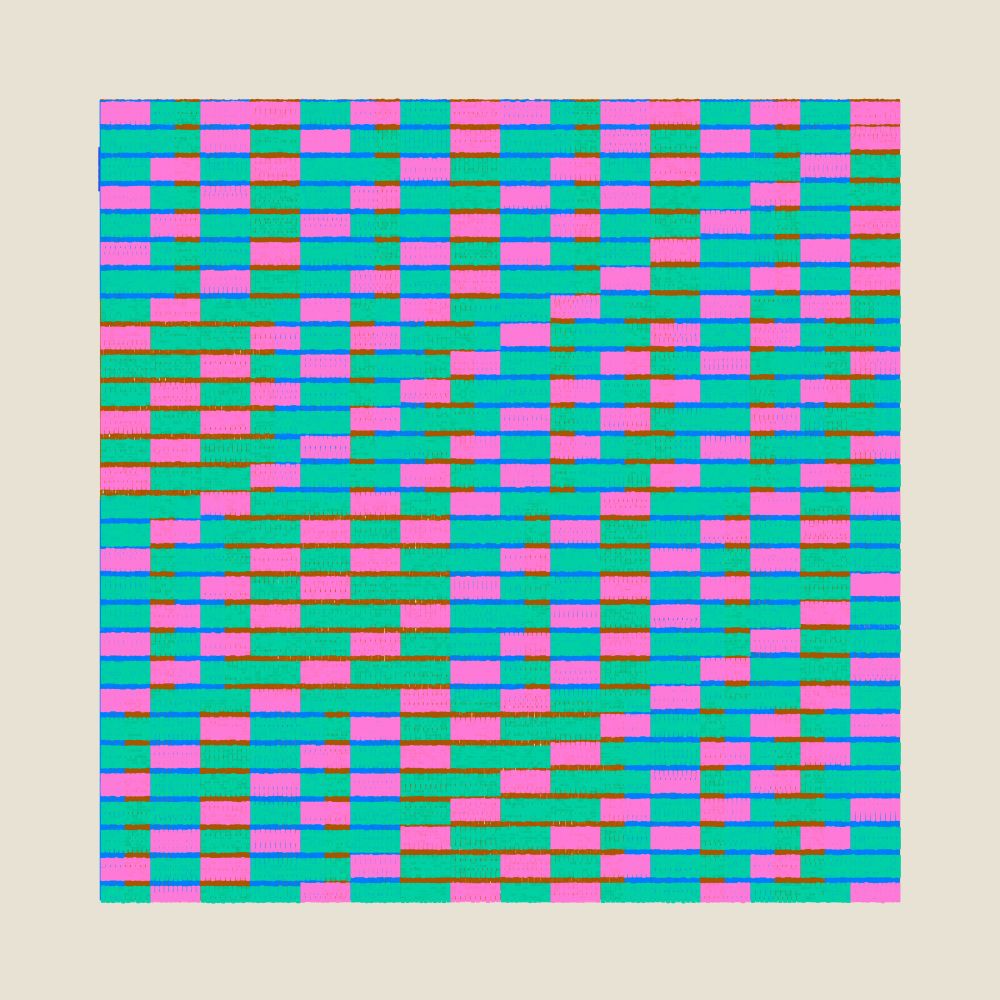

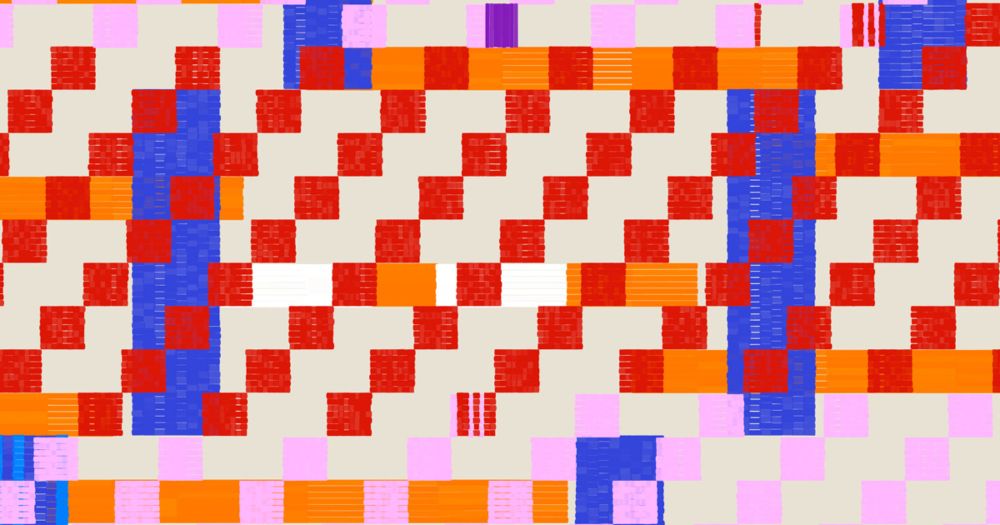

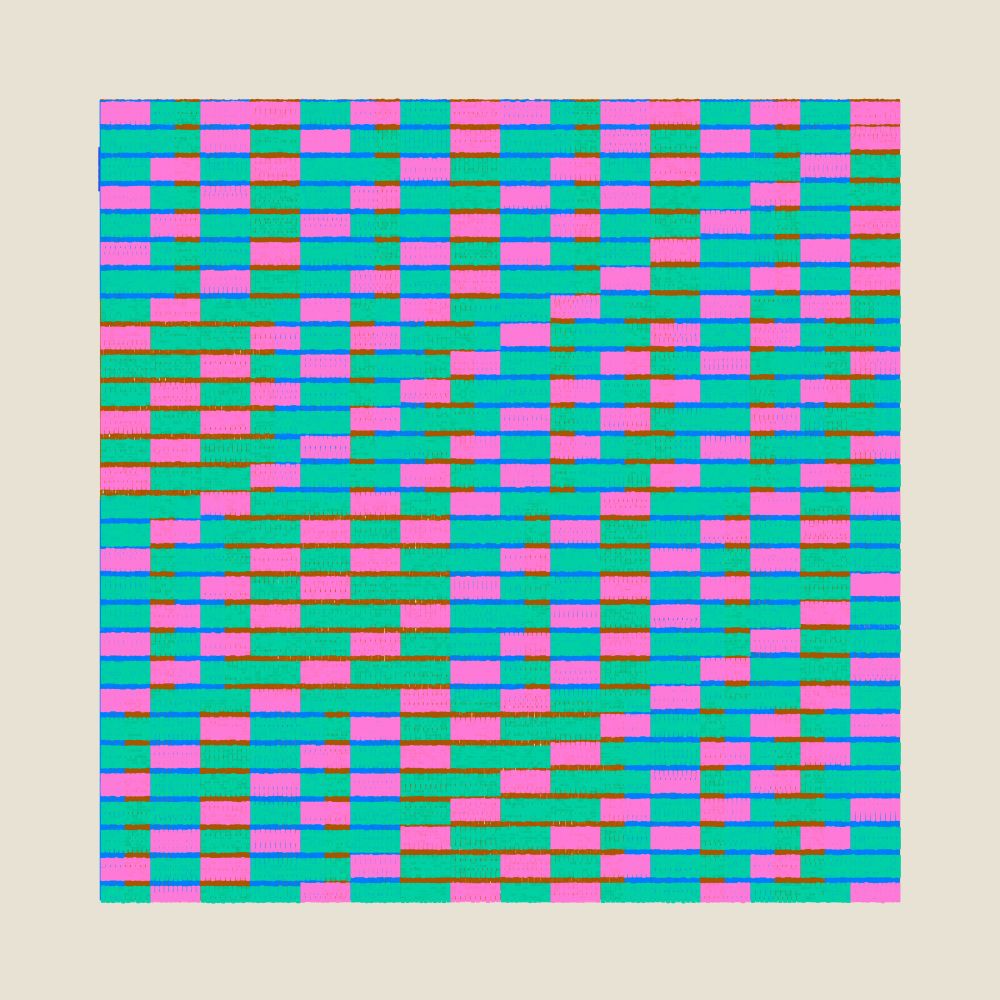

Here is another selection, using combinations of three distinct pigments instead of two. This is equivalent to uniform sampling within a 2-simplex, i.e. the triangle of three concentrations that sum to 1.

It reduces the purity of any single ink and gives slightly lower overall saturation.

(GIFs don’t work on Bluesky, I guess?)
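Uniform sampling of concentrations that sum to 1 can be done by normalizing independent exponential draws, which gives a flat Dirichlet distribution. The sketch below assumes the five primaries named in the Kubelka-Munk palette post. The helper names are hypothetical.

```python
import math
import random

# The five primaries named in the Kubelka-Munk palette post.
PIGMENTS = ["blue", "yellow", "red", "white", "black"]


def sample_simplex(k, rng):
    """Uniform sample of k non-negative weights that sum to 1.

    Normalizing independent Exp(1) draws yields a flat Dirichlet(1, ..., 1)
    sample, which is uniform over the simplex. (Normalizing plain uniform
    draws would not be uniform.)
    """
    draws = [rng.expovariate(1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def sample_recipe(rng, k=3):
    """Pick k distinct pigments and a uniformly random mix of them (hypothetical helper)."""
    names = rng.sample(PIGMENTS, k)
    return list(zip(names, sample_simplex(k, rng)))
```

With `k=2` this reduces to the two-pigment routine: a random pair plus a random split between them. Raising `k` samples higher-dimensional simplices with the same code.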

19.02.2025 10:58

Generating vibrant palettes with Kubelka-Munk pigment mixing, using 5 primaries (blue, yellow, red, white, black). The routine selects 2 pigments and a random concentration of the two, although it can extend to higher dimensions by sampling the N-dimensional pigment simplex.
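The mixing step can be sketched with the two-constant Kubelka-Munk model. In this model, absorption K and scattering S add linearly by concentration, and the reflectance of an opaque layer follows from the mixture's K/S ratio. The four-band K/S tables below are invented placeholders, not real pigment measurements. Converting reflectance to a display color (via CIE color matching functions) is left out.

```python
import math
import random

# Hypothetical absorption (K) and scattering (S) coefficients at four coarse
# bands (about 450, 520, 580 and 640 nm). Placeholder values, not measurements.
PIGMENTS = {
    "blue":   {"K": [0.1, 0.3, 2.5, 3.0],     "S": [1.0, 1.0, 1.0, 1.0]},
    "yellow": {"K": [3.0, 0.8, 0.05, 0.05],   "S": [1.0, 1.0, 1.0, 1.0]},
    "red":    {"K": [2.0, 3.0, 1.5, 0.05],    "S": [1.0, 1.0, 1.0, 1.0]},
    "white":  {"K": [0.01, 0.01, 0.01, 0.01], "S": [2.0, 2.0, 2.0, 2.0]},
    "black":  {"K": [5.0, 5.0, 5.0, 5.0],     "S": [0.5, 0.5, 0.5, 0.5]},
}
BANDS = 4


def km_reflectance(ks):
    """Reflectance of an opaque layer from its K/S ratio (Kubelka-Munk)."""
    return 1.0 + ks - math.sqrt(ks * ks + 2.0 * ks)


def mix(recipe):
    """Two-constant KM mix: K and S add by concentration, then R follows from K/S."""
    reflectance = []
    for band in range(BANDS):
        k = sum(c * PIGMENTS[name]["K"][band] for name, c in recipe.items())
        s = sum(c * PIGMENTS[name]["S"][band] for name, c in recipe.items())
        reflectance.append(km_reflectance(k / s))
    return reflectance


def random_two_pigment_recipe(rng):
    """Pick 2 distinct pigments and a random concentration split between them."""
    a, b = rng.sample(sorted(PIGMENTS), 2)
    t = rng.random()
    return {a: 1.0 - t, b: t}
```

With these placeholder values, a 50/50 blue and yellow mix peaks in the green band, which is the kind of subtractive behavior a linear RGB blend cannot reproduce.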

19.02.2025 10:57

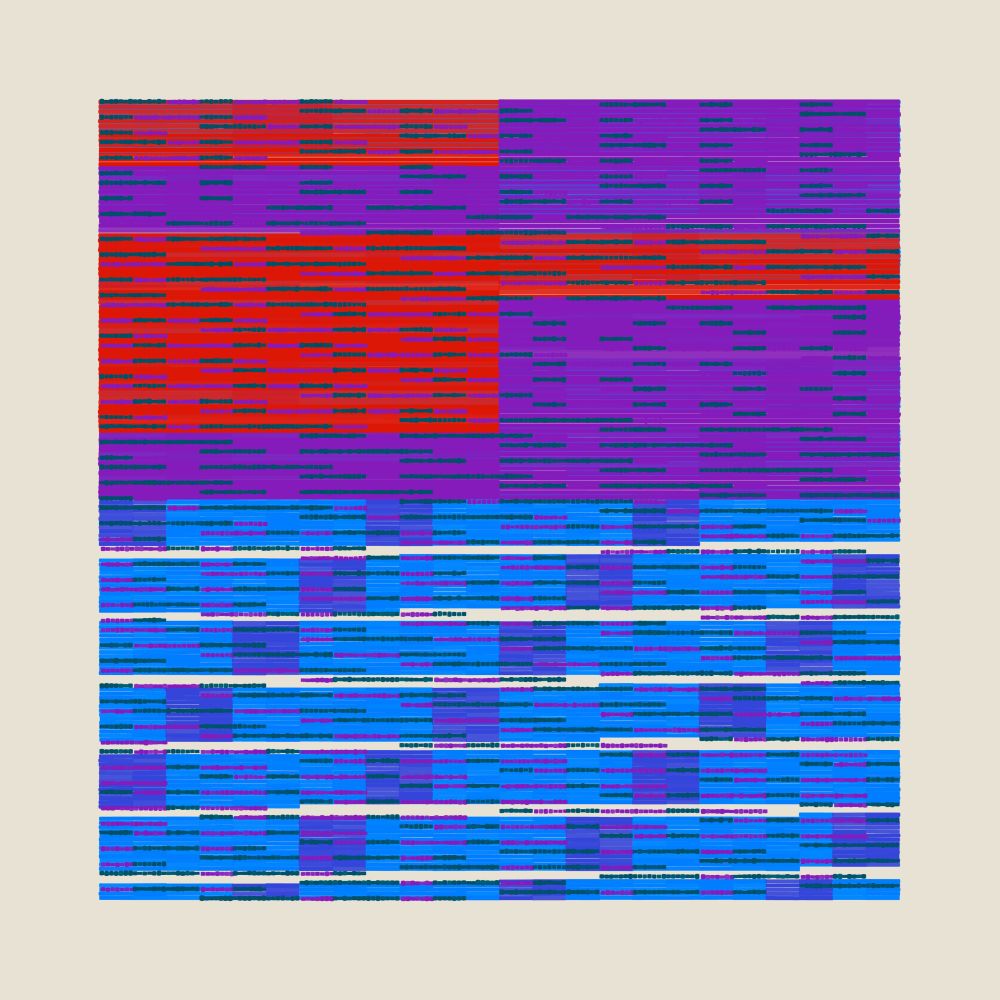

Adding another pigment (dimension) is quite easy with a neural network. Now it predicts concentrations for CMY + white + black, allowing for smooth grayscale ramps and giving us a bit of a wider pigment gamut.
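Adding a pigment is cheap mainly because only the output layer grows. The forward pass below is a sketch under several assumptions: a 3-value color input (e.g. OKLab), two ReLU hidden layers of 16, and a softmax head so the predicted concentrations are non-negative and sum to 1. The weights are untrained and random. None of this is the actual model.

```python
import math
import random

PIGMENTS = ["cyan", "magenta", "yellow", "white", "black"]


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for a dense layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer, activation=None):
    """Affine map, optionally followed by an elementwise activation."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [activation(v) for v in out] if activation else out


def relu(v):
    return max(0.0, v)


def softmax(z):
    top = max(z)
    e = [math.exp(v - top) for v in z]
    total = sum(e)
    return [v / total for v in e]


def make_net(rng, hidden=16):
    """3 inputs -> two hidden layers -> one logit per pigment."""
    return [
        init_layer(3, hidden, rng),
        init_layer(hidden, hidden, rng),
        init_layer(hidden, len(PIGMENTS), rng),
    ]


def predict_concentrations(net, color):
    """Map an input color to a {pigment: concentration} dict summing to 1."""
    h = dense(color, net[0], relu)
    h = dense(h, net[1], relu)
    return dict(zip(PIGMENTS, softmax(dense(h, net[2]))))
```

Because the softmax head already guarantees valid concentrations, supporting a fifth pigment only adds one output row (16 weights and a bias), followed by retraining.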

04.02.2025 20:17

A couple of things aren’t clear to me yet: how Mixbox achieves such vibrant saturation during interpolation, and how it handles black and achromatic ramps specifically. I may be struggling to achieve the same because of my “imaginary” spectral coefficients.

03.02.2025 22:13

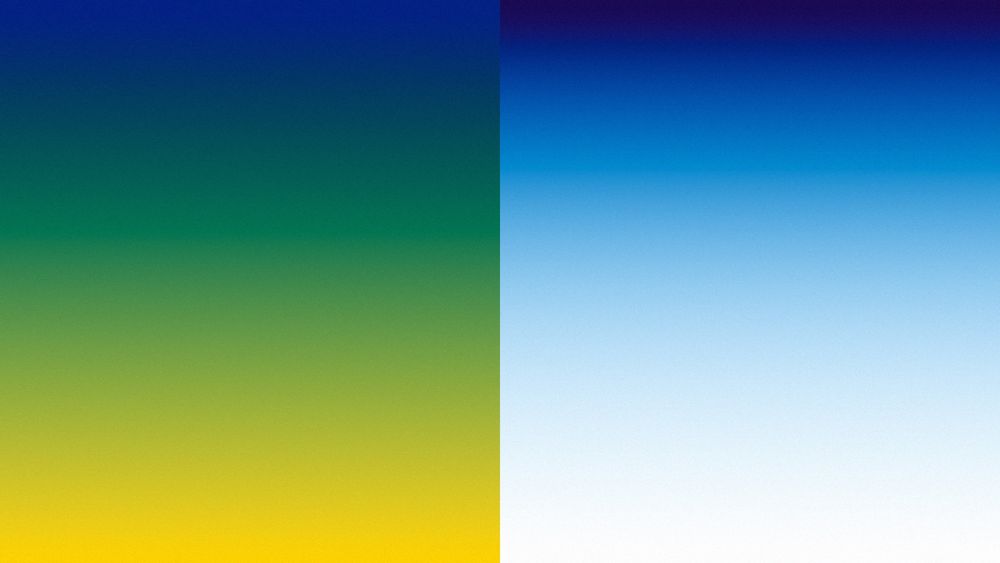

I like the idea of a neural net because it's continuous, adapts to arbitrary input, and is fast to load and light on memory. However, as you can see, it struggles to capture the green that the LUT produces at the beginning (although it doesn’t exhibit the artifacts seen with the saturated purple input).

03.02.2025 22:13

Research and experiments toward an OSS implementation of practical, real-time Kubelka-Munk pigment mixing. Not yet as good as Mixbox, but getting closer. Comparing a LUT (32x32x32, stored in a PNG) against a small neural net (2 hidden layers, 16 neurons).
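A 32×32×32 LUT is usually read with trilinear interpolation between the eight surrounding grid entries. The sketch below assumes the table maps an RGB-like input in [0, 1]³ to a tuple of pigment concentrations. It also assumes the table lives in a flat list with the red axis varying fastest. Decoding the table from the PNG (for instance as 32 tiled 32×32 slices) is omitted, and the function names are hypothetical.

```python
import math

N = 32  # grid resolution per axis


def build_lut(fn, n=N):
    """Sample fn(r, g, b) -> tuple on an n x n x n grid over [0, 1]^3 (red varies fastest)."""
    lut = []
    for bi in range(n):
        for gi in range(n):
            for ri in range(n):
                lut.append(fn(ri / (n - 1), gi / (n - 1), bi / (n - 1)))
    return lut


def lookup(lut, r, g, b, n=N):
    """Trilinear interpolation between the 8 grid entries surrounding (r, g, b)."""
    def split(v):
        x = min(max(v, 0.0), 1.0) * (n - 1)
        i = min(int(x), n - 2)  # lower cell index, so i + 1 stays in range
        return i, x - i

    (r0, fr), (g0, fg), (b0, fb) = split(r), split(g), split(b)
    dims = len(lut[0])
    out = [0.0] * dims
    for db in (0, 1):
        wb = fb if db else 1.0 - fb
        for dg in (0, 1):
            wg = fg if dg else 1.0 - fg
            for dr in (0, 1):
                wr = fr if dr else 1.0 - fr
                entry = lut[((b0 + db) * n + (g0 + dg)) * n + (r0 + dr)]
                w = wr * wg * wb
                for d in range(dims):
                    out[d] += w * entry[d]
    return out
```

For scale, assume 16 neurons per hidden layer and four outputs. Such a net has 3·16+16 + 16·16+16 + 16·4+4 = 404 parameters, versus 32,768 LUT entries, each holding several values.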

03.02.2025 22:13

I think certain dev tools could get away with it, like Vite or my own canvas-sketch. The UX of an Electron app may be better for the average user, but it also hurts experienced devs: not just install time, but also the lack of browser diversity, which is crucial when building for the web.

25.01.2025 08:48

Looks amazing Scott. 👏

25.01.2025 08:40

GitHub - scrtwpns/mixbox: Mixbox is a library for natural color mixing based on real pigments.

It's closer to Mixbox's implementation: it uses four primary pigments (each with a K and an S curve), then uses numerical optimization to find the best concentration of pigments for a given OKLab input color. It only runs in Python at the moment, but a LUT is possible.

github.com/scrtwpns/mix...
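The optimization step can be sketched as follows. The four concentrations are parameterized through a softmax, which keeps them positive and summing to 1, and the search looks for the mix whose predicted OKLab color is closest to the target. Everything here is a simplified stand-in: the three-band K/S values are invented, and reflectance is treated directly as linear sRGB rather than integrated against color matching functions. A plain compass search also replaces whatever optimizer the Python prototype actually uses. The OKLab conversion uses Björn Ottosson's published linear-sRGB matrices.

```python
import math

# Hypothetical three-band (R, G, B) K/S data for four primaries. Treating the
# bands directly as linear sRGB is a big simplification of spectral rendering.
PIGMENTS = [
    {"name": "cyan",    "K": [2.0, 0.1, 0.05],   "S": [1.0, 1.0, 1.0]},
    {"name": "magenta", "K": [0.05, 2.0, 0.1],   "S": [1.0, 1.0, 1.0]},
    {"name": "yellow",  "K": [0.05, 0.1, 2.0],   "S": [1.0, 1.0, 1.0]},
    {"name": "white",   "K": [0.02, 0.02, 0.02], "S": [2.0, 2.0, 2.0]},
]


def km_reflectance(ks):
    return 1.0 + ks - math.sqrt(ks * ks + 2.0 * ks)


def mix_rgb(conc):
    """Two-constant Kubelka-Munk mix per band -> linear RGB reflectance."""
    out = []
    for band in range(3):
        k = sum(c * p["K"][band] for c, p in zip(conc, PIGMENTS))
        s = sum(c * p["S"][band] for c, p in zip(conc, PIGMENTS))
        out.append(km_reflectance(k / s))
    return out


def linear_srgb_to_oklab(r, g, b):
    """Björn Ottosson's OKLab transform from linear sRGB."""
    l = 0.4122214708 * r + 0.5363325363 * g + 0.0514459929 * b
    m = 0.2119034982 * r + 0.6806995451 * g + 0.1073969566 * b
    s = 0.0883024619 * r + 0.2817188376 * g + 0.6299787005 * b
    l, m, s = (math.copysign(abs(v) ** (1.0 / 3.0), v) for v in (l, m, s))
    return (
        0.2104542553 * l + 0.7936177850 * m - 0.0040720468 * s,
        1.9779984951 * l - 2.4285922050 * m + 0.4505937099 * s,
        0.0259040371 * l + 0.7827717662 * m - 0.8086757660 * s,
    )


def softmax(z):
    top = max(z)
    e = [math.exp(v - top) for v in z]
    total = sum(e)
    return [v / total for v in e]


def error(logits, target_lab):
    """Euclidean OKLab distance between the mixed color and the target."""
    return math.dist(linear_srgb_to_oklab(*mix_rgb(softmax(logits))), target_lab)


def fit_concentrations(target_lab, step=1.0, tol=1e-6, max_sweeps=20000):
    """Compass search over softmax logits; halve the step when no move helps."""
    z = [0.0] * len(PIGMENTS)  # start from an even mix
    best = error(z, target_lab)
    sweeps = 0
    while step > tol and sweeps < max_sweeps:
        sweeps += 1
        improved = False
        for i in range(len(z)):
            for delta in (step, -step):
                trial = z[:]
                trial[i] += delta
                e = error(trial, target_lab)
                if e < best:
                    z, best, improved = trial, e, True
                    break
        if not improved:
            step *= 0.5
    return softmax(z), best
```

In practice, a general-purpose optimizer such as SciPy's `scipy.optimize.minimize` would replace the hand-rolled search. Running the fit over a grid of input colors is one way a LUT could then be built.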

23.01.2025 13:40

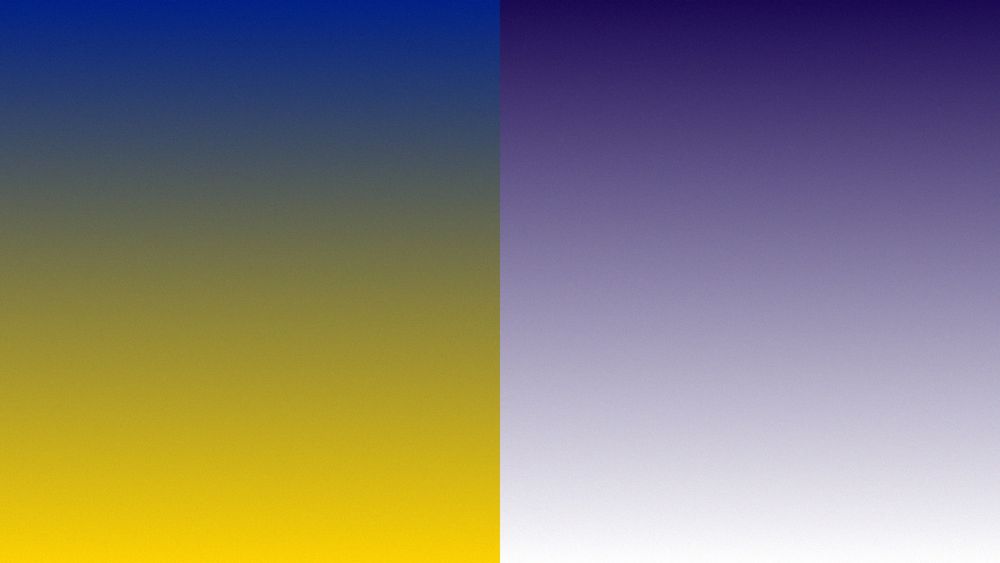

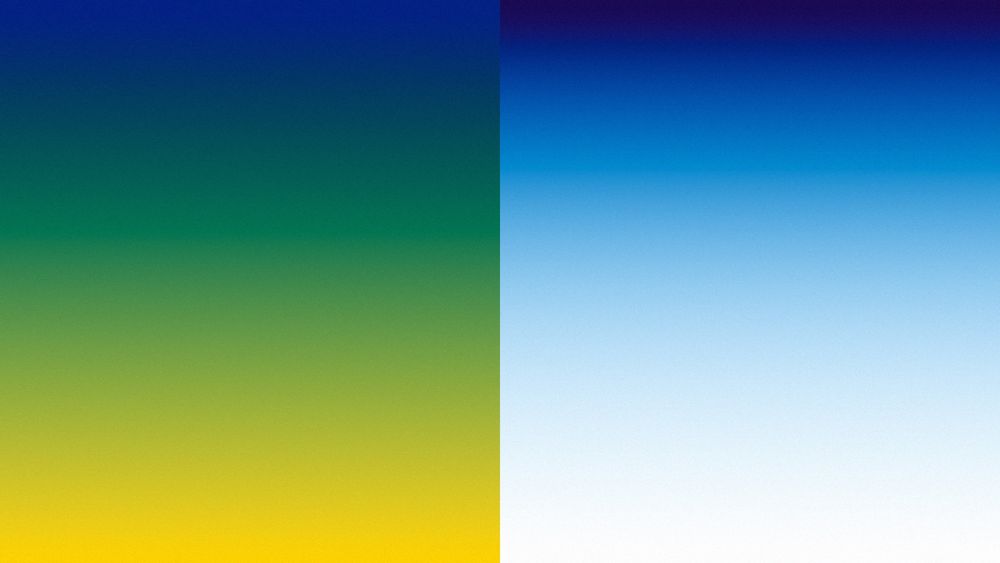

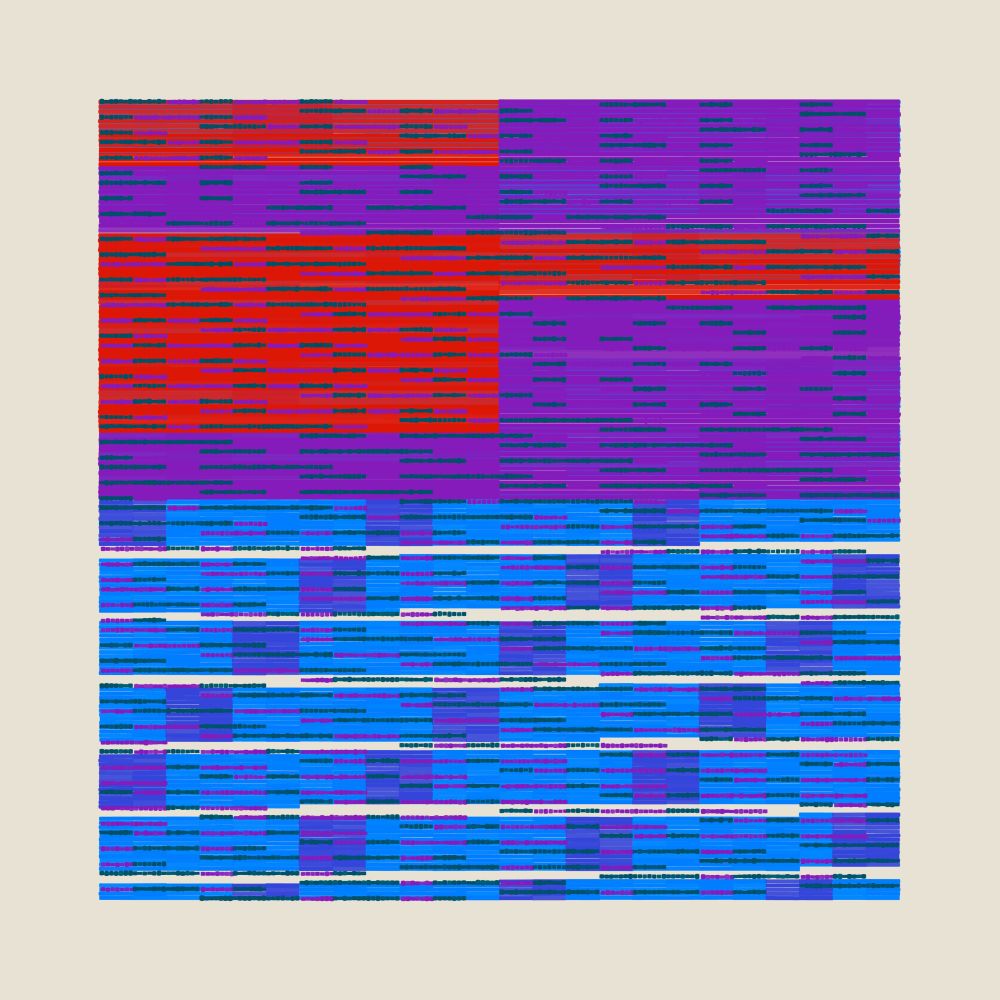

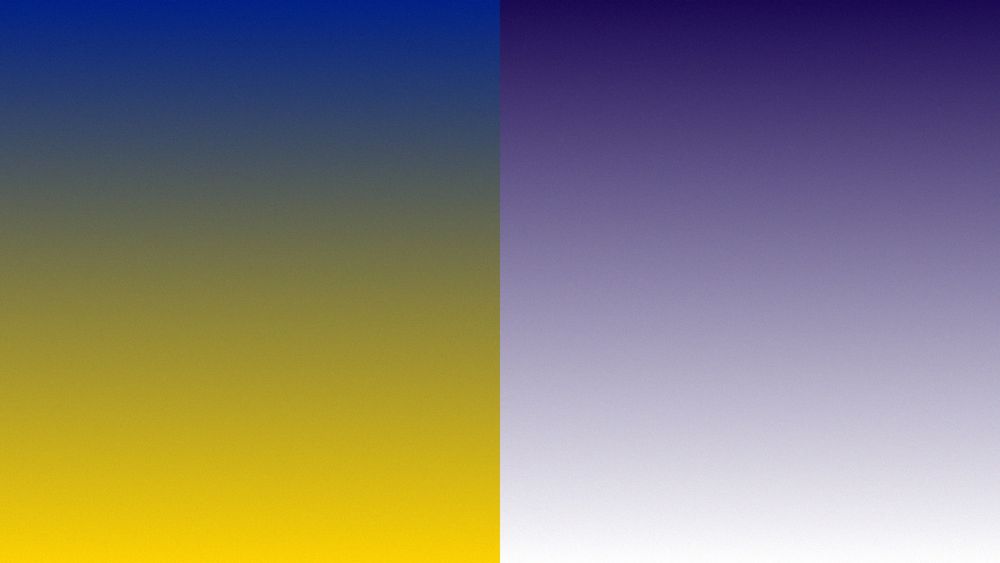

a late #genuary—"gradients only"

working on an open source pigment mixing library, based on Kubelka-Munk theory.

left: before KM mixing

right: after KM mixing

23.01.2025 12:56

It just sets the background fill to none at the moment. It would be nice to have more options, like per-layer exports, though.

20.12.2024 13:11

Final few hours to mint a Bitframes before the crowdfund closes and edition size is locked. 100% of net proceeds are being directed to a documentary on the history of generative art. 📽️

Closes today at 5PM GMT (UK time).

→ bitframes.io

20.12.2024 13:08

Added some plotter and high-res print tools to the open source Bitframes GitHub repo:

Tools—

print-bitframes.surge.sh

Code—

github.com/mattdesl/bit...

19.12.2024 13:08

Last week to mint and contribute to the Bitframes crowdfund! 100% of net proceeds are going to the production of a documentary film on the history of generative & computer art. 🎬

→ bitframes.io

18.12.2024 16:04

COMPUTER ART IN THE MAINFRAME ERA—

A ~40 min interview with professor and computer art history scholar Grant D. Taylor that I conducted during R&D for Bitframes.

Listen → bitframes.io/episodes/1

12.12.2024 17:05

BITFRAMES

A generative art project and documentary film crowdfund by artist Matt DesLauriers.

Bitframes - an NFT project / crowdfunding initiative by @mattdesl.bsky.social to produce a documentary on #generativeart

bitframes.io

03.12.2024 21:08

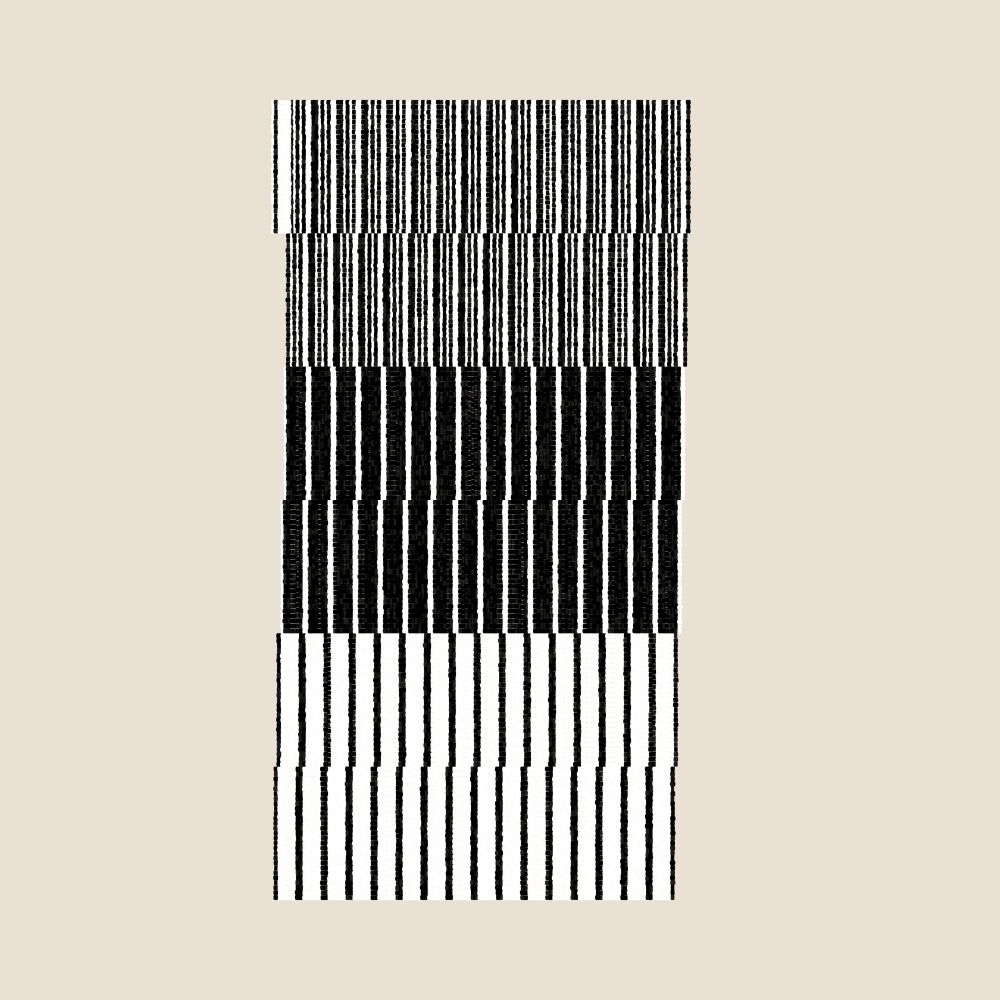

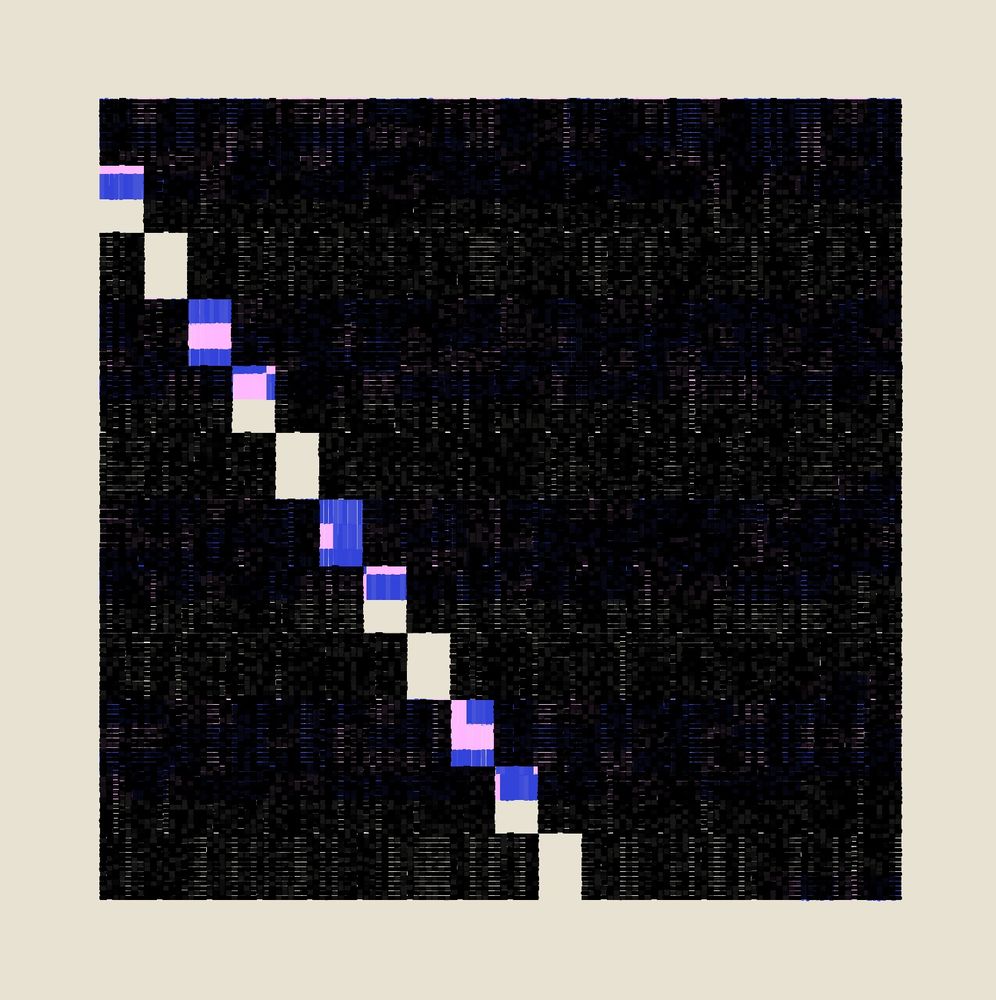

Bitframes #1603

03.12.2024 08:08

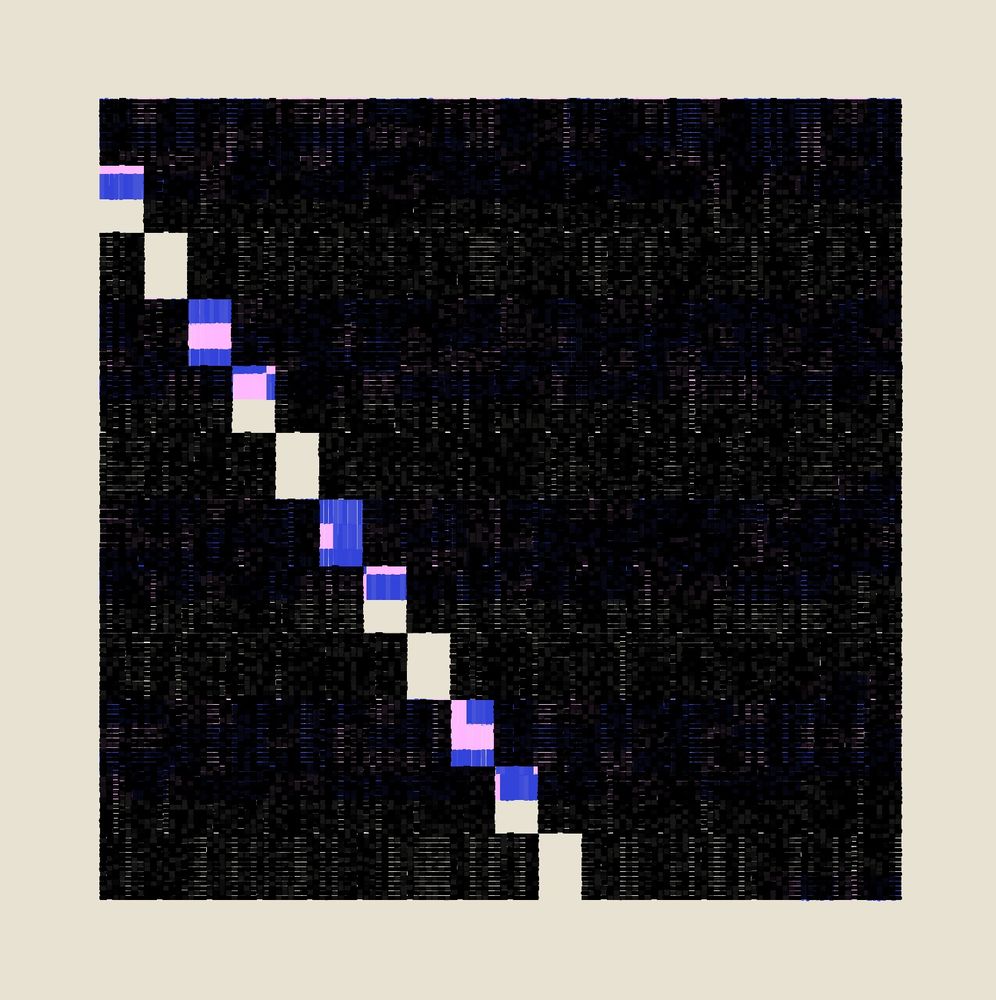

Abstract image of a square composed of individual black hatched squares with a series of clear squares running diagonally across the matrix which reveal colored squares underneath.

My contribution to @mattdesl.bsky.social's awesome Bitframes project. Token ID 1226.

28.11.2024 20:49

happy to be part of bitframes.io by @mattdesl.bsky.social and support generativefilm.io :)

bitframes #1188 and #1189

28.11.2024 11:59

Thank you Marcin for supporting the project & film! ❤️

28.11.2024 14:06

Whoa! This is a fantastic output. Nice one. 👏

28.11.2024 11:10

Bitframes #1153

(bitframes.io by @mattdesl.bsky.social)

27.11.2024 23:30