YouTube video by Friday Talks Tübingen

Strategic Hypothesis Testing - [Yatong Chen]

A new recording of our FridayTalks@Tübingen series is online!

Strategic Hypothesis Testing

by

@yatongchen.bsky.social

Watch here: youtu.be/VcKpRuUi4cQ

22.10.2025 10:11 — 👍 0 🔁 0 💬 1 📌 0

Principal Investigator Positions (m/f/d) as Hector Endowed Fellows of the ELLIS Institute Tübingen

🚀 The new call for Principal Investigators as Hector Endowed Fellows at the ELLIS Institute Tübingen is now open!

These positions offer the exciting possibility of co-appointments with the @mpi-is.bsky.social and the @tuebingen-ai.bsky.social.

📌 Apply here: institute-tue.ellis.eu/en/jobs/PI-c...

17.10.2025 09:41 — 👍 3 🔁 5 💬 0 📌 0

The Curse of Depth in Large Language Models - [Shiwei Liu]

A new recording of our FridayTalks@Tübingen series is online!

The Curse of Depth in Large Language Models

by

@shiweiliu.bsky.social

Watch here: youtu.be/knVOH3oM_-I

26.09.2025 06:37 — 👍 1 🔁 0 💬 1 📌 0

ALT: a man wearing a white shirt and tie smiles in front of a window

I've been waiting some years to make this joke and now it’s real:

I conned somebody into giving me a faculty job!

I’m starting as a W1 Tenure-Track Professor at Goethe University Frankfurt in a week (lol), in the Faculty of CS and Math

and I'm recruiting PhD students 🤗

23.09.2025 12:58 — 👍 186 🔁 31 💬 30 📌 3

AI Safety and Alignment - [Maksym Andriushchenko]

A new recording of our FridayTalks@Tübingen series is online!

AI Safety and Alignment

by

@maksym-andr.bsky.social

Watch here: youtu.be/7WRW8MDQ8bk

11.09.2025 13:18 — 👍 6 🔁 1 💬 1 📌 0

Why LLM Benchmarks are Broken and How to Fix It? - [Guanhua Zhang]

A new recording of our FridayTalks@Tübingen series is online!

Why LLM Benchmarks are Broken and How to Fix It?

by

Guanhua Zhang

Watch here: youtu.be/X820NwnHu-c

01.09.2025 13:08 — 👍 0 🔁 0 💬 1 📌 0

Want to get started with theoretical analysis of probabilistic decision-making algos (bandits, BO, planning, etc.), but don't know where to start? Existing literature too dense to parse?

arxiv.org/abs/2508.21620

I've written a self-contained introductory monograph on this!

01.09.2025 11:15 — 👍 6 🔁 1 💬 0 📌 0

How much can we forget about Data Contamination? - [Sebastian Bordt]

A new recording of our FridayTalks@Tübingen series is online!

How much can we forget about Data Contamination?

by

@sbordt.bsky.social

Watch here: youtu.be/T9Y5-rngOLg

29.08.2025 07:05 — 👍 2 🔁 1 💬 1 📌 0

Adversarially Robust CLIP Models Can Induce Better (Robust) Perceptual Metrics

A new recording of our FridayTalks@Tübingen series is online!

Adversarially Robust CLIP Models Can Induce Better (Robust) Perceptual Metrics

by

@chs20.bsky.social & Naman Deep Singh

Watch here: www.youtube.com/watch?v=sK9Y...

19.08.2025 05:58 — 👍 0 🔁 0 💬 1 📌 0

Large Language Models Are Zero-Shot Problem Solvers—Just Like Modern Computers - [Tim Xiao]

A new recording of our FridayTalks@Tübingen series is online!

Large Language Models Are Zero-Shot Problem Solvers—Just Like Modern Computers

by

Tim Z. Xiao

Watch here: www.youtube.com/watch?v=ySHu...

11.08.2025 12:40 — 👍 3 🔁 0 💬 1 📌 0

Effortless, Simulation-Efficient Bayesian Inference using Tabular Foundation Models

A new recording of our FridayTalks@Tübingen series is online!

Effortless, Simulation-Efficient Bayesian Inference using Tabular Foundation Models

by

@vetterj.bsky.social & Manuel Gloeckler from @mackelab.bsky.social

Watch here: youtube.com/watch?v=Wx2p...

05.08.2025 07:07 — 👍 3 🔁 0 💬 1 📌 0

📣 [Openings] I'm now an Assistant Prof @westernu.ca CS dept. Funded PhD & MSc positions available! Topics: large probabilistic models, decision-making under uncertainty, and apps in AI4Science. More on agustinus.kristia.de/openings/

04.07.2025 22:55 — 👍 17 🔁 5 💬 1 📌 1

@unituebingen.bsky.social Tübingen has won 6 out of 9! Not too bad, is it? 👍 Congratulations to all winning teams!

22.05.2025 17:00 — 👍 4 🔁 1 💬 0 📌 0

The members of the Cluster of Excellence "Machine Learning: New Perspectives for Science" raise their glasses and celebrate securing another funding period.

We're super happy: Our Cluster of Excellence will continue to receive funding from the German Research Foundation @dfg.de! Here’s to 7 more years of exciting research at the intersection of #machinelearning and science! Find out more: uni-tuebingen.de/en/research/... #ExcellenceStrategy

22.05.2025 16:23 — 👍 74 🔁 20 💬 4 📌 5

The image shows a fountain in front of the Neue Aula. Below it, the text: Six Clusters of Excellence are being funded in Tübingen.

The University of Tübingen has been awarded six Clusters of Excellence (#Exzellenzcluster), which will be funded for seven years from 01.01.2026 as part of the Excellence Strategy (#Exzellenzstrategie)! Among them are three clusters that are already established and will receive renewed funding. uni-tuebingen.de/universitaet... #ExStra #Forschung #UniTübingen

22.05.2025 17:13 — 👍 70 🔁 29 💬 5 📌 4

Great idea! Are there any plans to do this for other subfields as well?

21.02.2025 12:54 — 👍 4 🔁 0 💬 1 📌 0

Unfortunately, voting at a German embassy is not an option.

21.02.2025 12:47 — 👍 0 🔁 0 💬 0 📌 0

I'm extremely disappointed with the organization of the upcoming German election. Voting is a fundamental right, yet my ballot letter arrived too late in Toronto. Now even the fastest delivery won't get it there until Monday, after the election. Unacceptable.

20.02.2025 14:46 — 👍 3 🔁 0 💬 2 📌 0

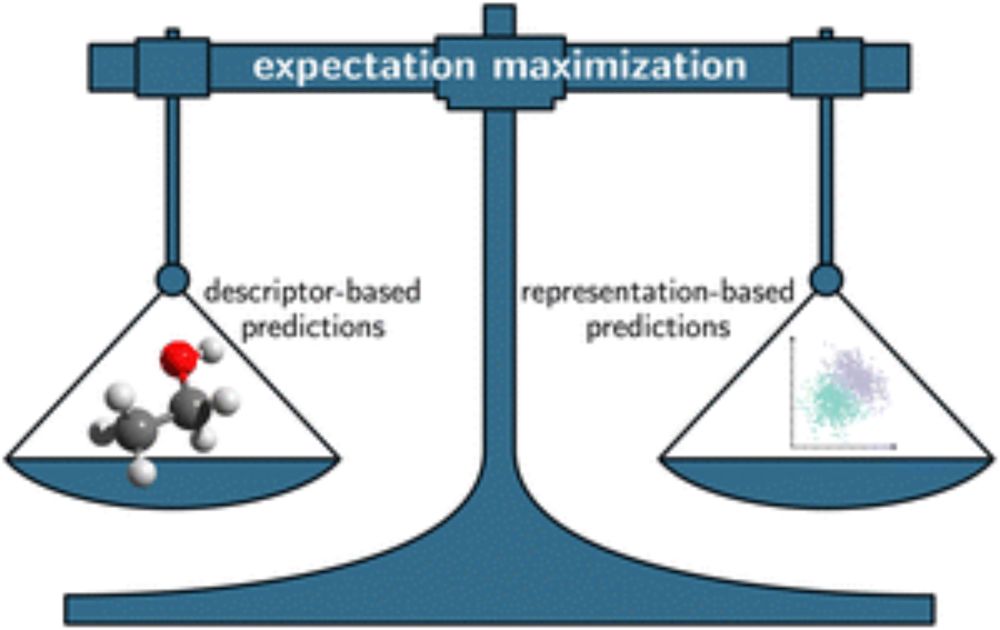

More generally, we believe that the variational expectation maximization algorithm can be a useful tool for many physico-chemical prediction problems, since it balances structure-based and representation-learning-based predictions by weighing their respective uncertainties.

31.01.2025 14:45 — 👍 0 🔁 0 💬 0 📌 0

We extend a probabilistic matrix factorization method (Jirasek et al., 2020) by learning priors from the chemical structure of the mixture components, using graph neural networks and the variational expectation maximization algorithm. This significantly improves predictive accuracy over the SOTA.

31.01.2025 14:45 — 👍 0 🔁 0 💬 1 📌 0

Predicting the physico-chemical properties of pure substances and mixtures is a central task in thermodynamics. We propose a method for combining molecular descriptors with representation learning for the prediction of activity coefficients of binary liquid mixtures at infinite dilution.

31.01.2025 14:45 — 👍 0 🔁 0 💬 1 📌 0

Research group leader @ Max Planck Institute working on theory & social aspects of CS. Previously @UCSC @GoogleDeepMind @Stanford @PKU1898

https://yatongchen.github.io/

Research Lead @parameterlab.bsky.social working on Trustworthy AI

Speaking 🇫🇷, English and 🇨🇱 Spanish | Living in Tübingen 🇩🇪 | he/him

https://gubri.eu

Prof. Uni Tübingen, Machine Learning, Robotics, Haptics

Research Scientist @Bioptimus. Previously at ETH Zürich, Max Planck Institute for Intelligent Systems, Google Research, EPFL, and RIKEN AIP.

aleximmer.github.io

Computational Statistics and Machine Learning (CSML) Lab | PI: Massimiliano Pontil | Webpage: csml.iit.it | Active research lines: Learning theory, ML for dynamical systems, ML for science, and optimization.

Assistant Professor at the University of Warwick.

I compute integrals for a living.

https://adriencorenflos.github.io/

Hi, I am a Royal Society Newton International Fellow @ Oxford.

machine learning researcher @ Apple machine learning research

PhD student in ML at Tübingen AI Center & International Max-Planck Research School for Intelligent Systems

Professor of Computer Vision and AI at TU Munich, Director of the Munich Center for Machine Learning mcml.ai and of ELLIS Munich ellismunich.ai

cvg.cit.tum.de

PhD student in AI at University of Tuebingen.

Dreaming of a better world.

https://andrehuang.github.io/

PhD student @ Uni Tübingen | @bethgelab.bsky.social | Computational Neuroscience & ML

Faculty at the ELLIS Institute Tübingen and Max Planck Institute for Intelligent Systems. Leading the AI Safety and Alignment group. PhD from EPFL supported by Google & OpenPhil PhD fellowships.

More details: https://www.andriushchenko.me/

PhD student at Uni Tübingen, fm.ls group. Banner pic: rare sight of sunshine in Ammergasse, Tübingen.

PhD student at the University of Tübingen, member of @bethgelab.bsky.social

PhD student @Max Planck Institute for Intelligent Systems | working on optimization & game-theoretic frameworks for robust and efficient ML | organizer @twiml.bsky.social | https://melisilaydabal.github.io

not affiliated with Y Combinator, running on a pi in my basement

Statistics, machine learning, numerics, and simulation at EURECOM.
