Small Language Models (SLMs) don’t have the capacity to remember everything in their training data. Which tokens should they learn to predict, and when should they ask for help? We tackle this question in our new preprint.

You can check it out on arXiv: arxiv.org/abs/2602.12005

🧵1/7

13.02.2026 16:16

With other folks at 🍏, @brunokm.bsky.social has worked on a complete(d) parameterisation for NNs that can *transfer* locally tuned hyperparameters: tune optimizer hyperparameters (e.g. LR) *per module/depth* using an evolutionary search on small models → the performance gains transfer to much larger models

06.01.2026 16:33

I am at #NeurIPS2025. We can chat about data mixing, efficient training, ML@Apple and more.

02.12.2025 18:47

To me, a simple and cheap fix could be an automatic reveal of the names of everyone involved in the review process 5 years after decision. This would be opt-in by authors. Reviewers/AC/SACs would be more careful when writing.

01.12.2025 12:46

I understand it's a challenge to implement this, but when bad actors do not pay a price for cheating, the folks that pay the steepest price end up being the authors who spend endless hours writing rebuttals. I feel AI conferences have prioritized growth over fairness to authors.

01.12.2025 12:46

namely, when PCs see abuse/collusion or very poor-quality work (submissions/reviews), it's impossible to ban bad players, either temporarily or permanently. So every year/conference is again up for grabs if you're one of those bad actors.

01.12.2025 12:46

my 2 cents on the ICLR drama: the system has been under attack for years. But for years we've also heard, again and again, that there is no way to enforce protection mechanisms (e.g. deny lists for dishonest authors or reviewers) for legal reasons.

01.12.2025 12:46

📢 We’re looking for a researcher in cogsci, neuroscience, linguistics, or related disciplines to work with us at Apple Machine Learning Research! We're hiring for a one-year interdisciplinary AIML Resident to work on understanding reasoning and decision making in LLMs. 🧵

07.11.2025 21:19

We have been working with Michal Klein on pushing a module to train *flow matching* models using JAX. This is shipped as part of our new release of the OTT-JAX toolbox (github.com/ott-jax/ott)

The tutorial to do so is here: ott-jax.readthedocs.io/tutorials/ne...

05.11.2025 14:04

🚀 Excited to share LinEAS, our new activation steering method accepted at NeurIPS 2025! It approximates optimal transport maps e2e to precisely guide 🧭 activations, achieving finer control 🎚️ with ✨ fewer than 32 ✨ prompts!

💻https://github.com/apple/ml-lineas

📄https://arxiv.org/abs/2503.10679

21.10.2025 10:00

It's that time of the year! 🎁

The Apple Machine Learning Research (MLR) team in Paris is hiring a few interns to do cool research for ±6 months 🚀🚀 & work towards publications/OSS.

Check requirements and apply: ➡️ jobs.apple.com/en-us/detail...

More❓→ ✉️ mlr_paris_internships@group.apple.com

17.10.2025 13:07

While working on semidiscrete flow matching this summer (➡️ arxiv.org/abs/2509.25519), I kept looking for a video illustrating that the velocity field solving the Benamou-Brenier OT problem is NOT constant w.r.t. time ⏳... so I did it myself, take a look! ott-jax.readthedocs.io/tutorials/th...
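To make the point concrete, here is a minimal sketch for one case with a closed form that can be checked by hand, assuming two 1D Gaussians: the OT map from N(0, s0^2) to N(b, (a*s0)^2) with a > 0 is T(x) = a*x + b. Each particle moves on a straight line at constant speed T(x0) - x0, yet the Eulerian field v(t, x), read at a fixed location x, changes with t. The function names and the values of a and b are illustrative, not taken from OTT-JAX or the tutorial.

```python
def ot_map(x, a, b):
    """OT map between 1D Gaussians N(0, s0^2) -> N(b, (a * s0)^2), assuming a > 0."""
    return a * x + b


def eulerian_velocity(t, x, a, b):
    """Velocity v(t, x) of McCann's displacement interpolation x_t = (1 - t) x0 + t T(x0)."""
    # Find the particle x0 that sits at location x at time t (invert the affine path).
    x0 = (x - t * b) / (1.0 - t + t * a)
    # That particle travels in a straight line at constant speed T(x0) - x0.
    return ot_map(x0, a, b) - x0


a, b = 2.0, 1.0  # hypothetical: target std twice the source std, mean shifted by 1
for t in (0.0, 0.5, 1.0):
    # Same location x = 1.0, different times: the velocity changes.
    print(t, eulerian_velocity(t, 1.0, a, b))
```

At x = 1 the velocity is 2 at t = 0, 4/3 at t = 0.5 and 1 at t = 1. Following a single particle instead (e.g. x0 = 1, which reaches x = 2 at t = 0.5), the velocity stays at 2 throughout: constant in the Lagrangian sense, not in the Eulerian one.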

09.10.2025 20:09

LLMs are currently this one big parameter block that stores all sorts of facts. In our new preprint, we add context-specific memory parameters to the model, and pretrain the model along with a big bank of memories.

📑 arxiv.org/abs/2510.02375

[1/10]🧵

06.10.2025 16:06

Wow! Finally OT done on the entire training set to train a diffusion model!

04.10.2025 07:03

Then there's always 𝜀 regularization. When 𝜀=∞, we recover vanilla FM. At this point we're not completely sure whether 𝜀=0 is better than 𝜀>0; they both work! 𝜀=0 has a minor edge at larger scales (sparse gradients, faster assignment, slightly better metrics), but 𝜀>0 is also useful (faster SGD)
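A minimal sketch of the two limits, assuming squared Euclidean costs and uniform weights on tiny 1D point clouds (plain Sinkhorn matrix scaling, not the OTT-JAX implementation): for a very large 𝜀 the entropic coupling approaches the independent coupling, i.e. the random noise/data pairing of vanilla FM, while for a small 𝜀 it concentrates on the hard OT assignment. The point clouds and 𝜀 values are arbitrary choices for illustration.

```python
import math


def sinkhorn(x, y, eps, n_iters=500):
    """Entropic OT coupling between uniform 1D point clouds x and y, cost (x - y)^2.

    Plain (non-log-domain) Sinkhorn scaling: fine for this toy example, but it
    underflows for very small eps or large costs, where a log-domain version is needed.
    """
    n, m = len(x), len(y)
    a, b = [1.0 / n] * n, [1.0 / m] * m
    K = [[math.exp(-((xi - yj) ** 2) / eps) for yj in y] for xi in x]  # Gibbs kernel
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iters):
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


x = [0.0, 1.0, 2.0]  # hypothetical "noise" points
y = [0.1, 1.1, 2.1]  # hypothetical "data" points
P_small = sinkhorn(x, y, eps=0.05)  # close to the hard assignment i -> i, mass 1/3 each
P_large = sinkhorn(x, y, eps=1e4)   # close to the product coupling, mass 1/9 everywhere
```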

04.10.2025 11:21

Thanks for the nice comments! my interpretation is that we're using OT to produce pairs (x_i,y_i) to guide FM. With that, it's up to you to provide an inductive bias (a model) that gets f(x)~=y while generalizing. The hard OT assignment could be that model, but it would fail to generalize.
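For readers less familiar with how such pairs are consumed, here is a minimal sketch of the standard linear-interpolation flow matching regression, assuming the (x_i, y_i) pairs were already produced by some OT solver (not shown). Each pair yields a point x_t on the segment between noise and data, plus a target velocity y - x. The model regressing onto these targets is where the inductive bias comes in; `zero_model` below is a hypothetical placeholder, not a real architecture.

```python
import random


def flow_matching_batch(pairs, rng):
    """Turn (noise, data) pairs into flow matching regression examples.

    pairs: list of (x, y), with x and y lists of floats of equal length.
    Returns (t, x_t, target) triples, x_t = (1 - t) x + t y and target = y - x.
    """
    batch = []
    for x, y in pairs:
        t = rng.random()  # time sampled uniformly in [0, 1)
        x_t = [(1.0 - t) * xi + t * yi for xi, yi in zip(x, y)]
        target = [yi - xi for xi, yi in zip(x, y)]  # constant velocity along the segment
        batch.append((t, x_t, target))
    return batch


def fm_loss(velocity_model, batch):
    """Mean squared error between predicted velocities and straight-line targets."""
    total = 0.0
    for t, x_t, target in batch:
        pred = velocity_model(t, x_t)
        total += sum((p - g) ** 2 for p, g in zip(pred, target))
    return total / len(batch)


def zero_model(t, x_t):
    """Hypothetical placeholder model that always predicts zero velocity."""
    return [0.0] * len(x_t)


pairs = [([0.0, 0.0], [1.0, 1.0]), ([1.0, 0.0], [1.0, 2.0])]  # hypothetical OT pairs
batch = flow_matching_batch(pairs, random.Random(0))
print(fm_loss(zero_model, batch))  # prints 3.0
```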

04.10.2025 11:21

for people that like OT, IMHO the very encouraging insight is that we have evidence that the "better" you solve your OT problem, the more flow matching metrics improve, this is Figure 3

04.10.2025 08:45

Thanks @rflamary.bsky.social! yes, exactly. We try to summarize this tradeoff in Table 1, in which we show that for a one-off preprocessing cost, we now get all (noise,data) pairings you might need during flow matching training for "free" (up to the MIPS lookup for each noise).
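The MIPS lookup can be sketched as follows, assuming a squared Euclidean cost 0.5*||x - y||^2 and one dual potential g_j per data point y_j (our assumptions for illustration; the paper's exact conventions may differ). Noise x goes to the data point minimizing 0.5*||x - y_j||^2 - g_j. Since 0.5*||x||^2 does not depend on j, this is the argmax of <[x, 1], [y_j, g_j - 0.5*||y_j||^2]>, a maximum inner product search over keys computed once. A brute-force scan stands in for a real MIPS index here, and all names are hypothetical.

```python
import random


def assign_direct(x, ys, g):
    """Index j minimizing 0.5 * ||x - y_j||^2 - g_j (the Laguerre cell containing x)."""
    def score(j):
        return 0.5 * sum((xi - yi) ** 2 for xi, yi in zip(x, ys[j])) - g[j]
    return min(range(len(ys)), key=score)


def build_mips_keys(ys, g):
    """One-off preprocessing: key_j = [y_j, g_j - 0.5 * ||y_j||^2]."""
    return [list(y) + [g[j] - 0.5 * sum(v * v for v in y)] for j, y in enumerate(ys)]


def assign_mips(x, keys):
    """argmax_j <[x, 1], key_j>; brute force here, a MIPS index in practice."""
    q = list(x) + [1.0]
    return max(range(len(keys)), key=lambda j: sum(a * b for a, b in zip(q, keys[j])))


rng = random.Random(0)
ys = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(50)]  # hypothetical data
g = [rng.uniform(-1, 1) for _ in range(50)]                    # hypothetical potentials
keys = build_mips_keys(ys, g)
noise = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(200)]
agree = sum(assign_direct(x, ys, g) == assign_mips(x, keys) for x in noise)
print(agree, "/", len(noise))
```

The two assignments should agree on every noise sample; only the keys depend on the potentials, so they are built once and reused for every noise draw.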

04.10.2025 08:44

This is much faster than using Sinkhorn, and generates samples of higher quality.

As a bonus, you can forget about entropy regularization (set ε=0), apply things like correctors to guidance, and use it on consistency-type models, or even with conditional generation.

03.10.2025 21:00

the great thing with SD-OT is that this only needs to be computed once. You only need to store a real number per data sample. You can precompute these numbers once & for all using stochastic convex optimization.

When training a flow model, you assign noise to data using these numbers.
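The two steps above (fit one number per data sample once, then reuse it for every noise draw) can be sketched in plain Python. This assumes a squared Euclidean cost, a standard Gaussian source, uniform weights on the data points, and a plain decaying-step stochastic ascent on the semidiscrete dual; the step-size schedule, `lr`, and the function names are illustrative choices, not the paper's or OTT-JAX's. Without potentials the assignment is nearest-neighbour and badly unbalanced; after fitting, each data point should receive roughly the same share of noise.

```python
import random


def laguerre_argmin(x, ys, g):
    """Index j minimizing 0.5 * ||x - y_j||^2 - g_j."""
    return min(range(len(ys)),
               key=lambda j: 0.5 * sum((a - b) ** 2 for a, b in zip(x, ys[j])) - g[j])


def fit_dual_potentials(ys, n_steps=20000, lr=0.2, seed=0):
    """Stochastic ascent on the semidiscrete OT dual, Gaussian noise as the source.

    The dual E_x[min_j (0.5 * ||x - y_j||^2 - g_j)] + sum_j b_j g_j is concave in g,
    and b - onehot(j*(x)) is a stochastic supergradient at a noise sample x.
    """
    rng = random.Random(seed)
    m, d = len(ys), len(ys[0])
    b = 1.0 / m    # uniform weight on every data point
    g = [0.0] * m  # the single real number stored per data sample
    for step in range(n_steps):
        x = [rng.gauss(0.0, 1.0) for _ in range(d)]
        j = laguerre_argmin(x, ys, g)
        step_size = lr / (1.0 + step) ** 0.5  # decaying step size
        for k in range(m):
            g[k] += step_size * (b - (1.0 if k == j else 0.0))
    return g


def assignment_frequencies(ys, g, n_samples=20000, seed=1):
    """Fraction of fresh noise samples assigned to each data point."""
    rng = random.Random(seed)
    counts = [0] * len(ys)
    for _ in range(n_samples):
        x = [rng.gauss(0.0, 1.0) for _ in range(len(ys[0]))]
        counts[laguerre_argmin(x, ys, g)] += 1
    return [c / n_samples for c in counts]


data = [[-1.0, 0.0], [1.0, 0.0], [3.0, 0.0]]  # hypothetical toy dataset
print(assignment_frequencies(data, [0.0, 0.0, 0.0]))  # nearest neighbour: unbalanced
g = fit_dual_potentials(data)
print(assignment_frequencies(data, g))  # close to 1/3 each
```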

03.10.2025 20:56

In practice, however, this idea only begins to work when using massive batch sizes (see arxiv.org/abs/2506.05526). The problem is that the costs of running Sinkhorn on millions of points can quickly balloon...

Our solution? rely on semidiscrete OT at scales that were never considered before.

03.10.2025 20:56

Our two phenomenal interns, Alireza Mousavi-Hosseini and Stephen Zhang @syz.bsky.social have been cooking some really cool work with Michal Klein and me over the summer.

Relying on optimal transport couplings (to pick noise and data pairs) should, in principle, be helpful to guide flow matching

🧵
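As an illustration of what "OT couplings to pick noise and data pairs" means within one minibatch, here is a minimal sketch assuming a squared Euclidean cost and equal batch sizes. It finds the exact pairing by enumerating all permutations, which is only viable for tiny batches (n! candidates); larger batches would call an assignment solver or Sinkhorn instead. The Gaussian noise and shifted-Gaussian "data" are made up, and the function names are hypothetical.

```python
import itertools
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points given as lists of floats."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def pairing_cost(noise, data, perm):
    """Total squared distance when noise[i] is paired with data[perm[i]]."""
    return sum(sq_dist(noise[i], data[perm[i]]) for i in range(len(noise)))


def minibatch_ot_pairs(noise, data):
    """Exact OT pairing of two equal-size minibatches, by brute force over permutations."""
    best = min(itertools.permutations(range(len(noise))),
               key=lambda perm: pairing_cost(noise, data, perm))
    return [(noise[i], data[best[i]]) for i in range(len(noise))], best


rng = random.Random(0)
noise = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(6)]
data = [[rng.gauss(3, 0.5), rng.gauss(-1, 0.5)] for _ in range(6)]  # hypothetical data
pairs, perm = minibatch_ot_pairs(noise, data)
# Pairing in sampling order is the independent coupling used by vanilla flow matching.
independent = tuple(range(6))
print(pairing_cost(noise, data, perm), "<=", pairing_cost(noise, data, independent))
```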

03.10.2025 20:50


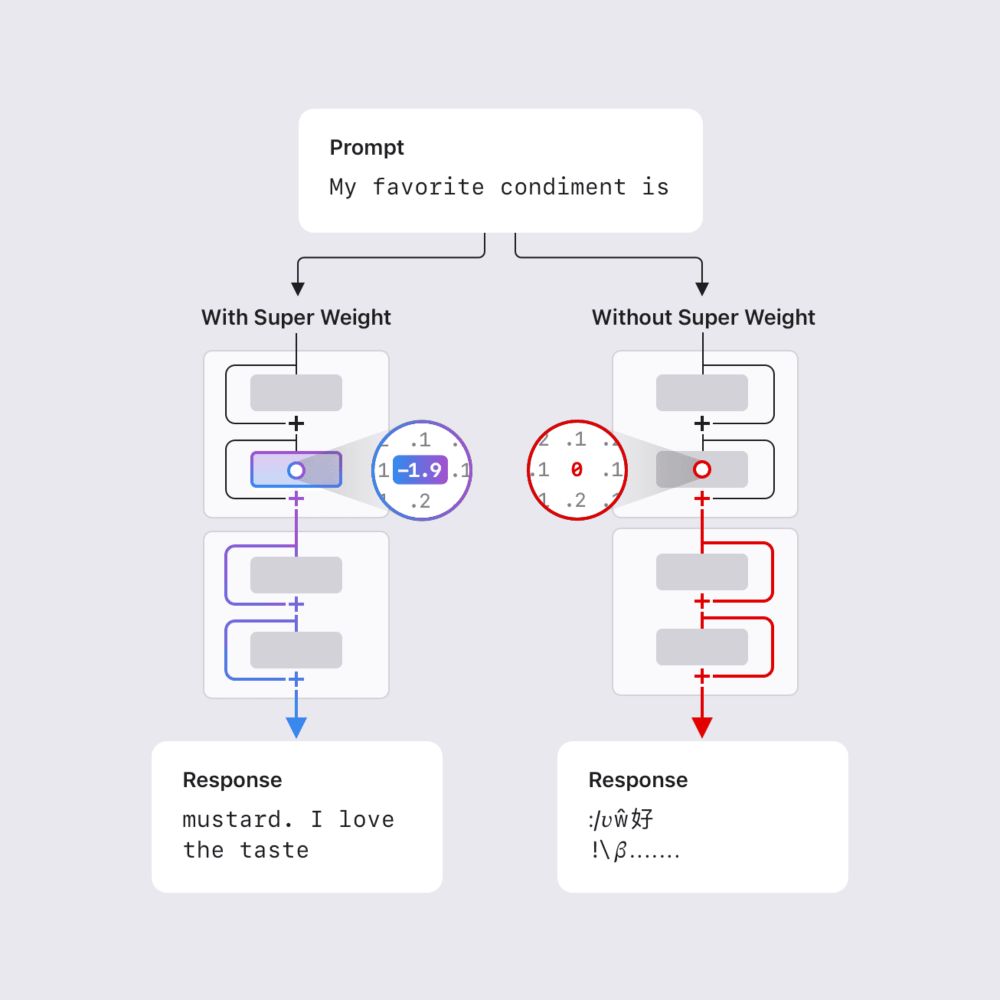

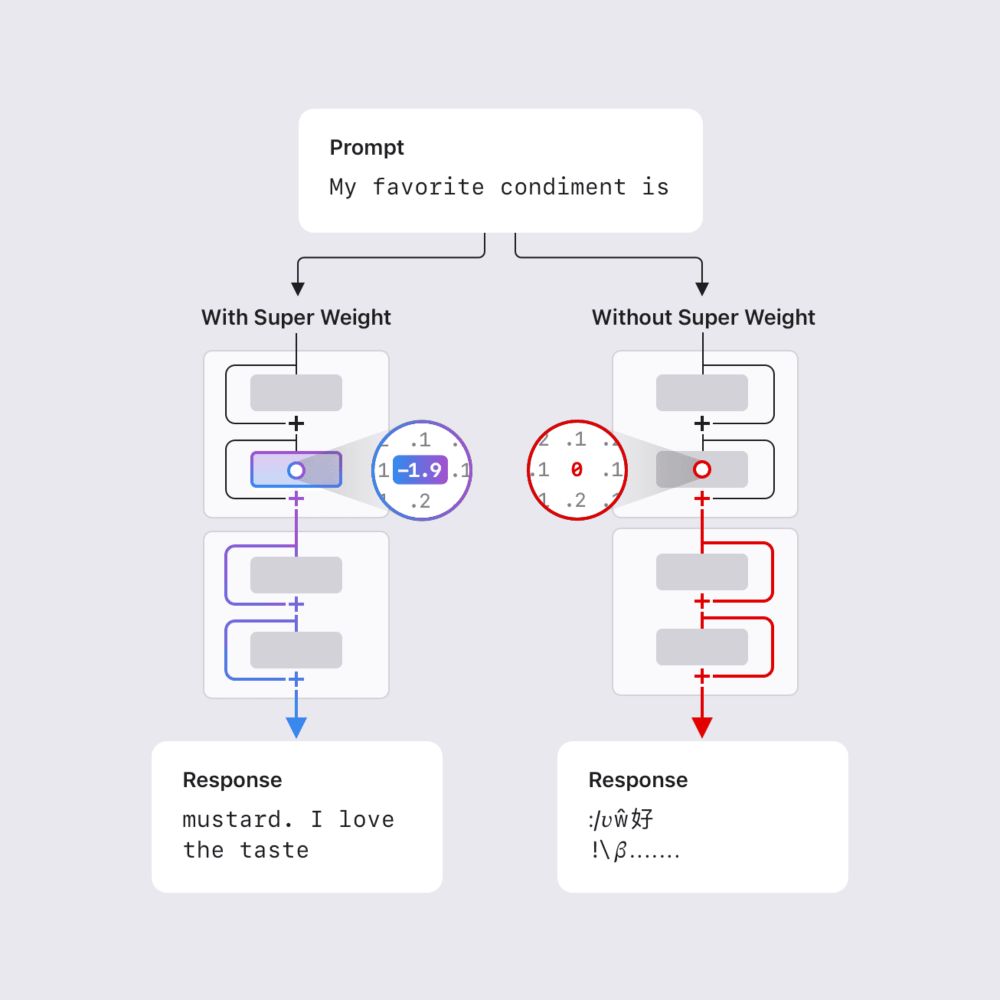

A recent paper from Apple researchers,

New Apple #ML Research Highlight: The "Super Weight:" How Even a Single Parameter can Determine an #LLM's Behavior machinelearning.apple.com/research/the...

21.08.2025 18:13

you're right that the PCs' message uses space as a justification to accept fewer papers, but it does not explicitly say that the acceptance rate should be lower than the historical standard of 25%. In my SAC batch, the average acceptance before their email was closer to 30%, but that's just me..

29.08.2025 11:32

I see it a bit differently. The new system pushed reviewers aggressively to react to rebuttals. I think this is a great change, but this has clearly skewed results, creating many spurious grade upgrades. Now the system must be rebalanced in the other direction by SAC/AC for results to be fair..

29.08.2025 07:05

Sharded Sinkhorn — ott 0.5.1.dev34+g3462f28 documentation

scaling up the computation of optimal transport couplings to hundreds of thousands of 3k dimensional vectors made easy using sharding and OTT-JAX! check this notebook, it only takes a few lines of code thanks to JAX's native sharding abilities ott-jax.readthedocs.io/en/latest/tu...

01.08.2025 00:13