FastTD3: Simple, Fast, and Capable Reinforcement Learning for Humanoid Control

A very fun project at @ucberkeleyofficial.bsky.social, led by the amazing Younggyo Seo, with Haoran Geng, Michal Nauman, Zhaoheng Yin, and Pieter Abbeel!

Page: younggyo.me/fast_td3/

arXiv: arxiv.org/abs/2505.22642

Code: github.com/younggyoseo/...

29.05.2025 17:49

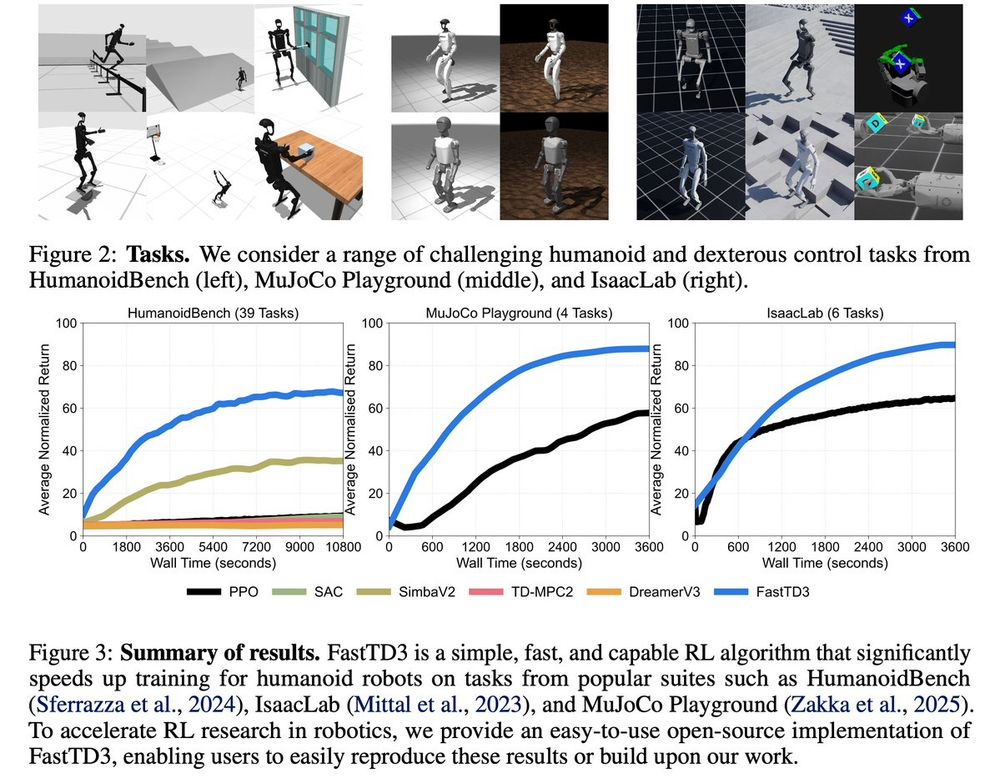

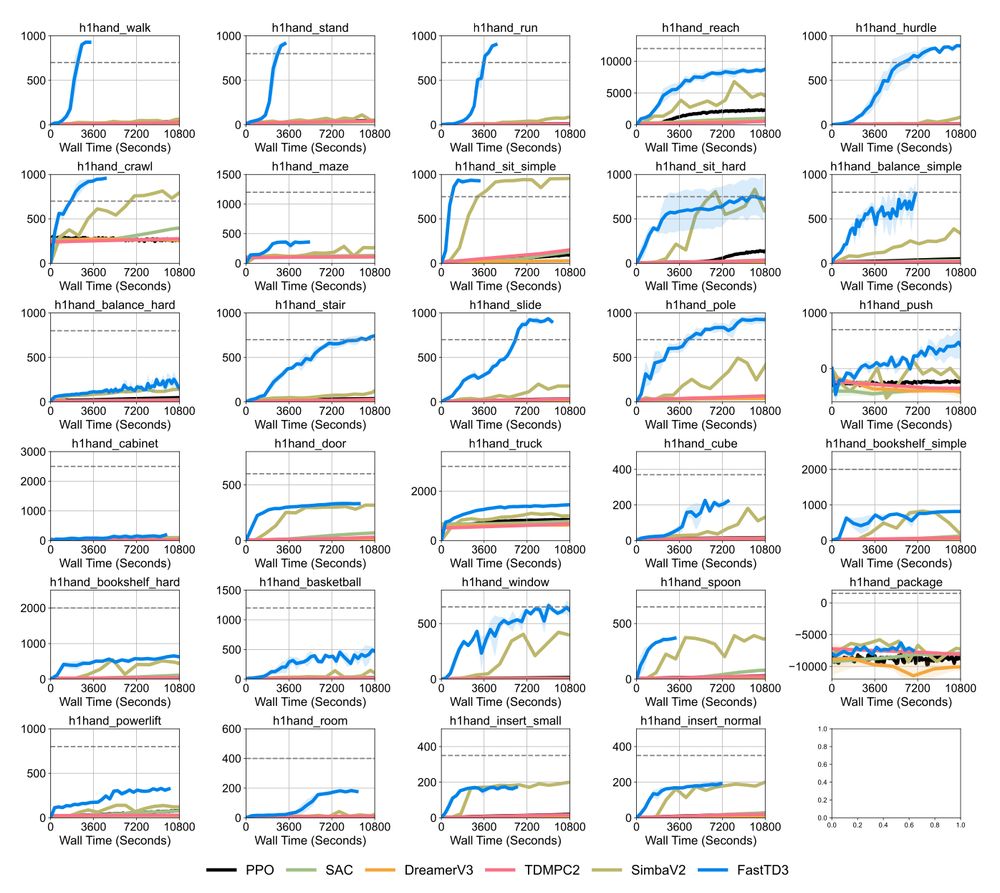

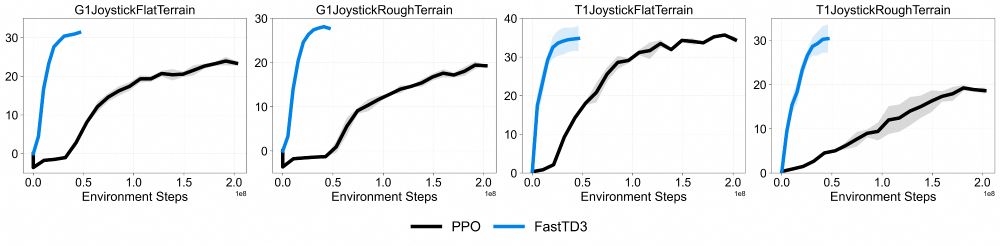

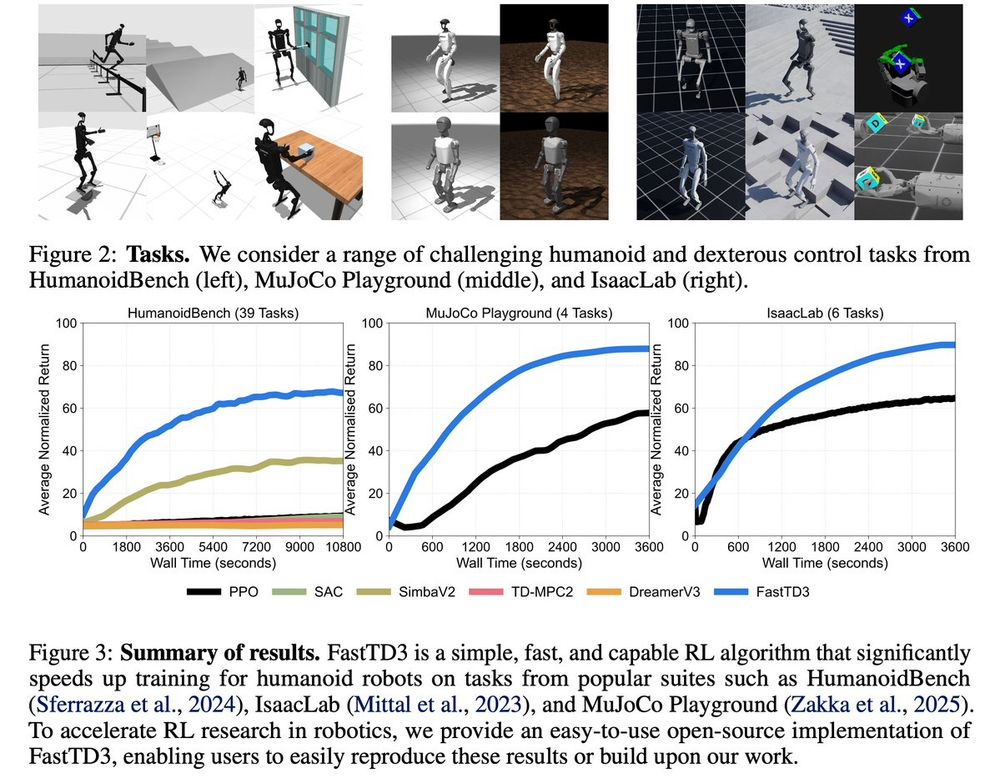

FastTD3 is open-source and compatible with popular sim-to-real robotics frameworks such as MuJoCo Playground and Isaac Lab. Recent advances in scaling off-policy RL are now readily available to the robotics community 🤖

29.05.2025 17:49

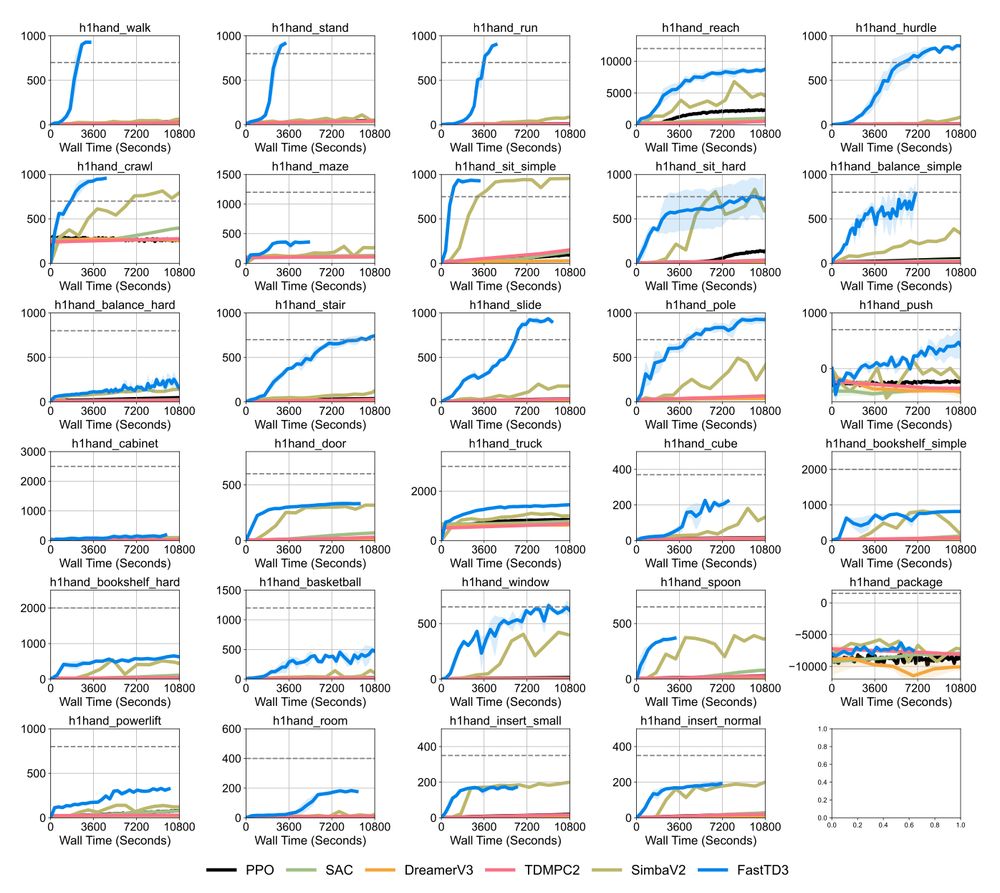

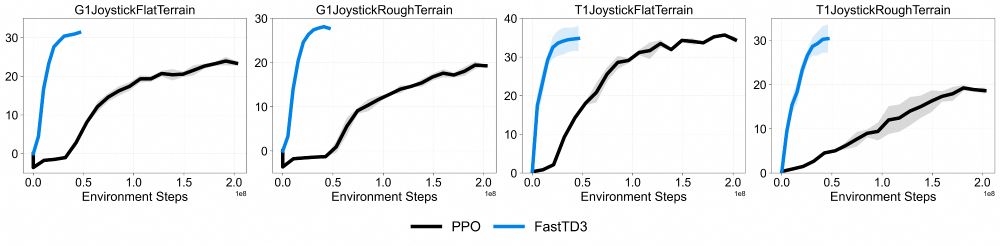

A very cool result: FastTD3 achieves state-of-the-art performance on most HumanoidBench tasks, even outperforming model-based algorithms. All it takes: 128 parallel environments and 1–3 hours of training 🤯

29.05.2025 17:49

Off-policy methods have pushed RL sample efficiency, but robotics still leans on massively parallel on-policy RL (PPO) for wall-clock speed. FastTD3 gets the best of both worlds!

29.05.2025 17:49
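To make the "best of both worlds" idea concrete, here is a minimal NumPy sketch of the two pieces the post contrasts: many parallel environments feeding one off-policy replay buffer, and the standard TD3 critic target (clipped double-Q with target policy smoothing). This is the textbook TD3 update, not the FastTD3 code; the class and function names are hypothetical, the noise defaults are TD3's commonly used values, and the paper and repository describe the further changes FastTD3 makes on top.

```python
import numpy as np


class ParallelReplayBuffer:
    """Ring buffer that stores one transition per parallel environment per step."""

    def __init__(self, capacity, obs_dim, act_dim, seed=0):
        self.capacity = capacity
        self.obs = np.zeros((capacity, obs_dim), dtype=np.float32)
        self.act = np.zeros((capacity, act_dim), dtype=np.float32)
        self.rew = np.zeros(capacity, dtype=np.float32)
        self.next_obs = np.zeros((capacity, obs_dim), dtype=np.float32)
        self.done = np.zeros(capacity, dtype=np.float32)
        self.ptr = 0   # next write position
        self.size = 0  # number of valid entries
        self.rng = np.random.default_rng(seed)

    def add_batch(self, obs, act, rew, next_obs, done):
        """Insert the transitions from all parallel environments in one call."""
        n = obs.shape[0]
        idx = (self.ptr + np.arange(n)) % self.capacity  # wrap around when full
        self.obs[idx] = obs
        self.act[idx] = act
        self.rew[idx] = rew
        self.next_obs[idx] = next_obs
        self.done[idx] = done
        self.ptr = (self.ptr + n) % self.capacity
        self.size = min(self.size + n, self.capacity)

    def sample(self, batch_size):
        """Uniformly sample a minibatch of stored transitions."""
        idx = self.rng.integers(0, self.size, size=batch_size)
        return (self.obs[idx], self.act[idx], self.rew[idx],
                self.next_obs[idx], self.done[idx])


def td3_target(rew, next_obs, done, actor_target, q1_target, q2_target,
               gamma=0.99, noise_std=0.2, noise_clip=0.5, rng=None):
    """Standard TD3 critic target; assumes actions are normalized to [-1, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    next_act = actor_target(next_obs)
    # Target policy smoothing: add clipped Gaussian noise to the target action.
    noise = np.clip(rng.normal(0.0, noise_std, size=next_act.shape),
                    -noise_clip, noise_clip)
    next_act = np.clip(next_act + noise, -1.0, 1.0)
    # Clipped double-Q: use the smaller of the two target critics' estimates.
    q_next = np.minimum(q1_target(next_obs, next_act),
                        q2_target(next_obs, next_act))
    # Bootstrapped target; no bootstrapping past terminal states.
    return rew + gamma * (1.0 - done) * q_next
```

With 128 parallel environments, each simulator step calls `add_batch` once and inserts 128 transitions, which is how parallel simulation can fill an off-policy buffer quickly while the critic keeps reusing old data.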

We just released FastTD3: a simple, fast, off-policy RL algorithm to train humanoid policies that transfer seamlessly from simulation to the real world.

younggyo.me/fast_td3

29.05.2025 17:49

Heading to @ieeeras.bsky.social RoboSoft today! I'll be giving a short Rising Star talk Thu at 2:30pm: "Towards Multi-sensory, Tactile-Enabled Generalist Robot Learning"

Excited for my first in-person RoboSoft after the 2020 edition went virtual mid-pandemic.

Reach out if you'd like to chat!

22.04.2025 17:59

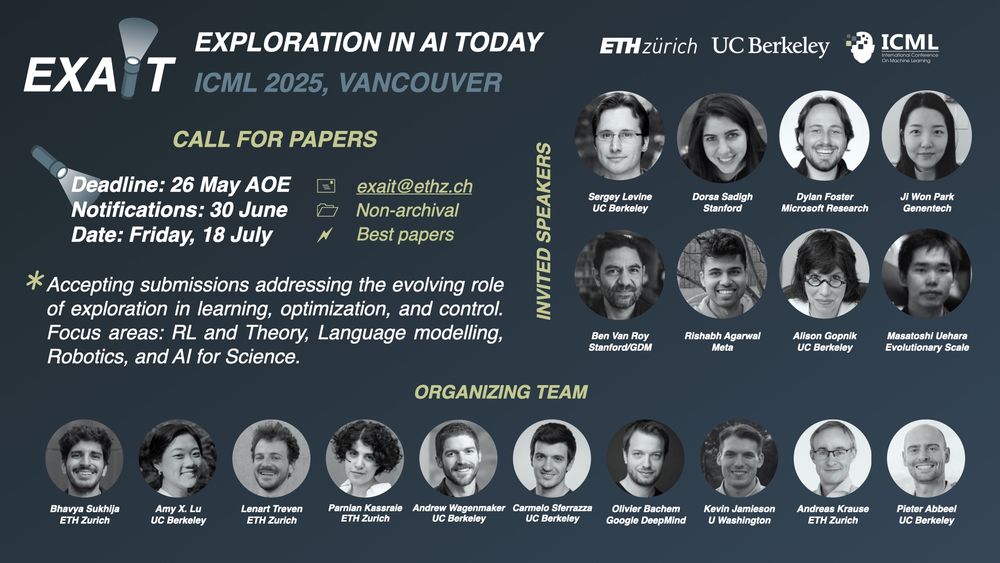

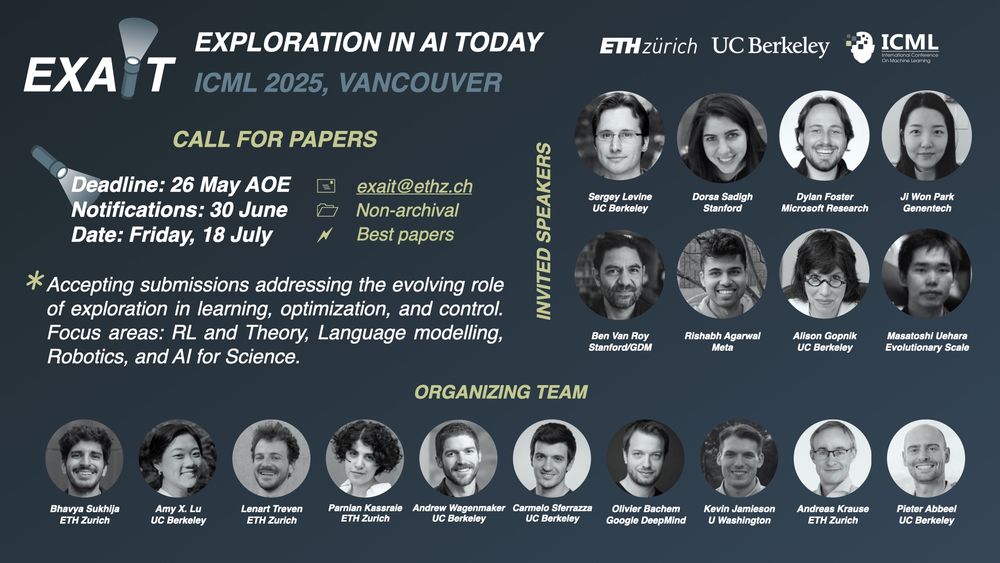

And co-organizers @sukhijab.bsky.social, @amyxlu.bsky.social, Lenart Treven, Parnian Kassraie, Andrew Wagenmaker, Olivier Bachem, @kjamieson.bsky.social, @arkrause.bsky.social, Pieter Abbeel

17.04.2025 05:53

With amazing speakers Sergey Levine, Dorsa Sadigh, @djfoster.bsky.social, @ji-won-park.bsky.social, Ben Van Roy, Rishabh Agarwal, @alisongopnik.bsky.social, Masatoshi Uehara

17.04.2025 05:53

What is the place of exploration in today's AI landscape, and in which settings can exploration algorithms address current open challenges?

Join us to discuss this at our exciting workshop at @icmlconf.bsky.social 2025: EXAIT!

exait-workshop.github.io

#ICML2025

17.04.2025 05:53

Many robots (and robot videos!), and many awesome collaborators at @ucberkeleyofficial.bsky.social @uoft.bsky.social @cambridgeuni.bsky.social @stanforduniversity.bsky.social @deepmind.google.web.brid.gy – huge shoutout to the entire team!

16.01.2025 22:27

Google Colab

Installation is very easy; it can even run in a single Python notebook: colab.research.google.com/github/googl...

Check out @mujoco.bsky.social’s thread above for all the details.

Can't wait to see the robotics community build on this pipeline and keep pushing the field forward!

16.01.2025 22:27

It was really amazing to work on this and see the whole project come together.

Sim-to-real is often an iterative process – Playground makes it seamless.

An open-source ecosystem is essential for integrating new features – check out Madrona-MJX for distillation-free visual RL!

16.01.2025 22:27

Big news for open-source robot learning! We are very excited to announce MuJoCo Playground.

The Playground is a reproducible sim-to-real pipeline that leverages the MuJoCo ecosystem and GPU acceleration to learn robot locomotion and manipulation in minutes.

playground.mujoco.org

16.01.2025 22:27

GitHub - fuse-model/FuSe

We open-source the code and the models, as well as the dataset, which comprises 27k (!) action-labeled robot trajectories with visual, inertial, tactile, and auditory observations.

Code: github.com/fuse-model/F...

Models and dataset: huggingface.co/oier-mees/FuSe

13.01.2025 18:51

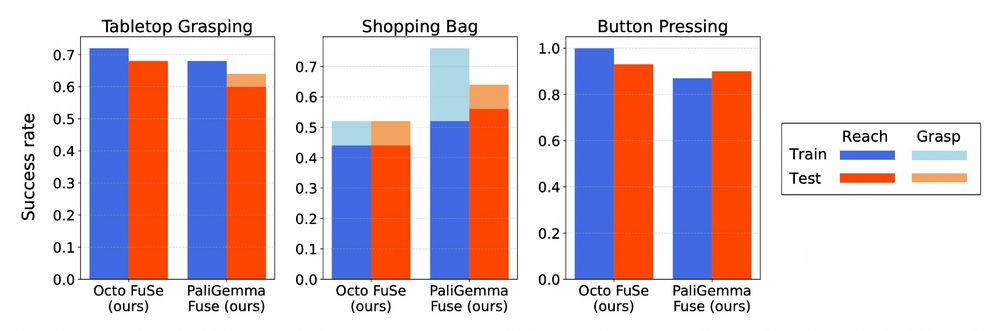

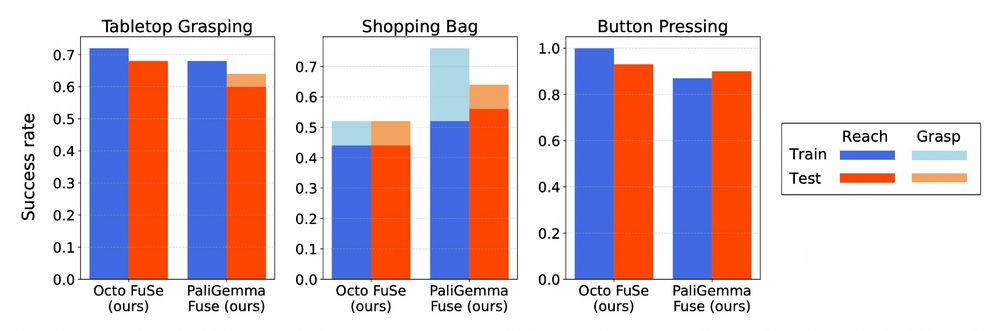

We find that the same general recipe is applicable to generalist policies with diverse architectures, including a large 3B VLA with a PaliGemma vision-language-model backbone.

13.01.2025 18:51

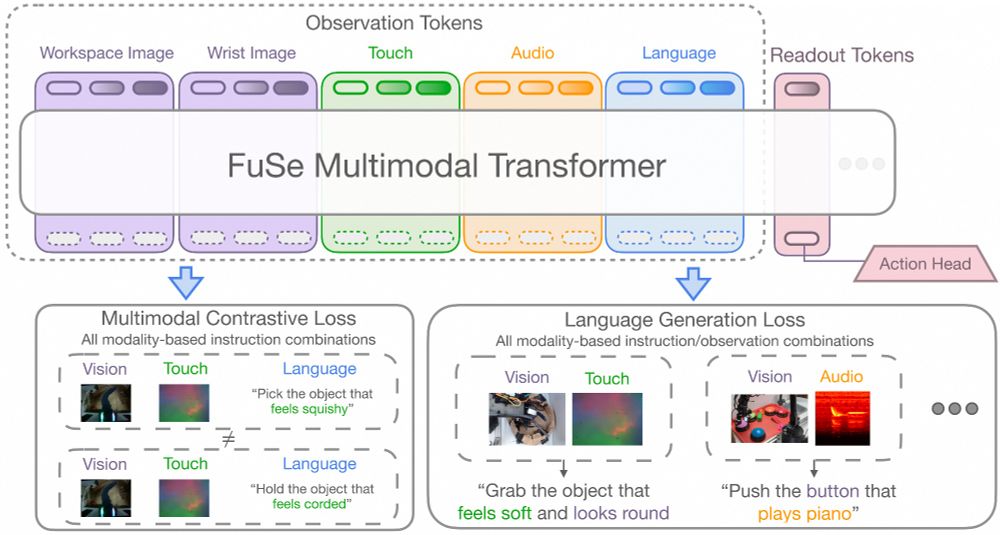

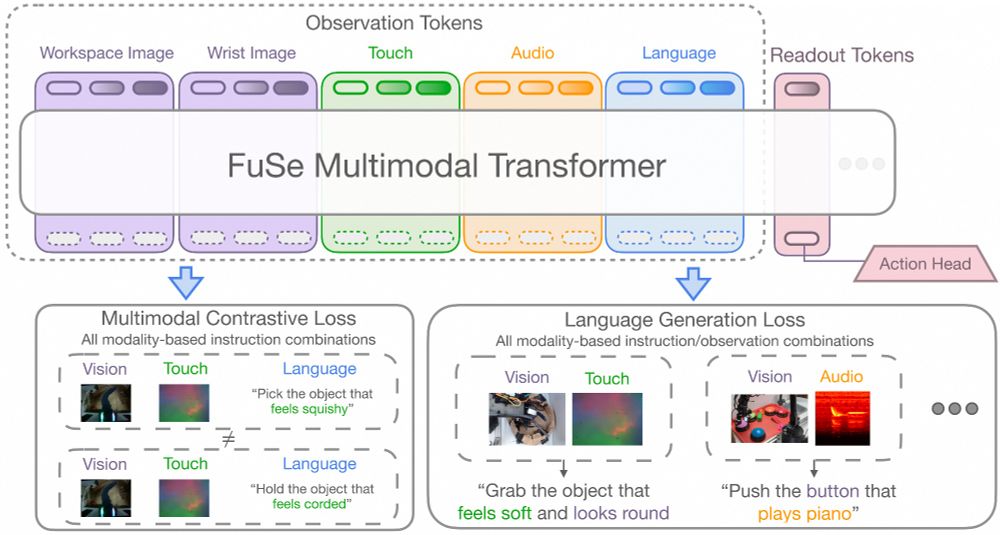

FuSe policies reason jointly over vision, touch, and sound, enabling tasks such as multimodal disambiguation, generation of object descriptions upon interaction, and compositional cross-modal prompting (e.g., “press the button with the same color as the soft object”).

13.01.2025 18:51

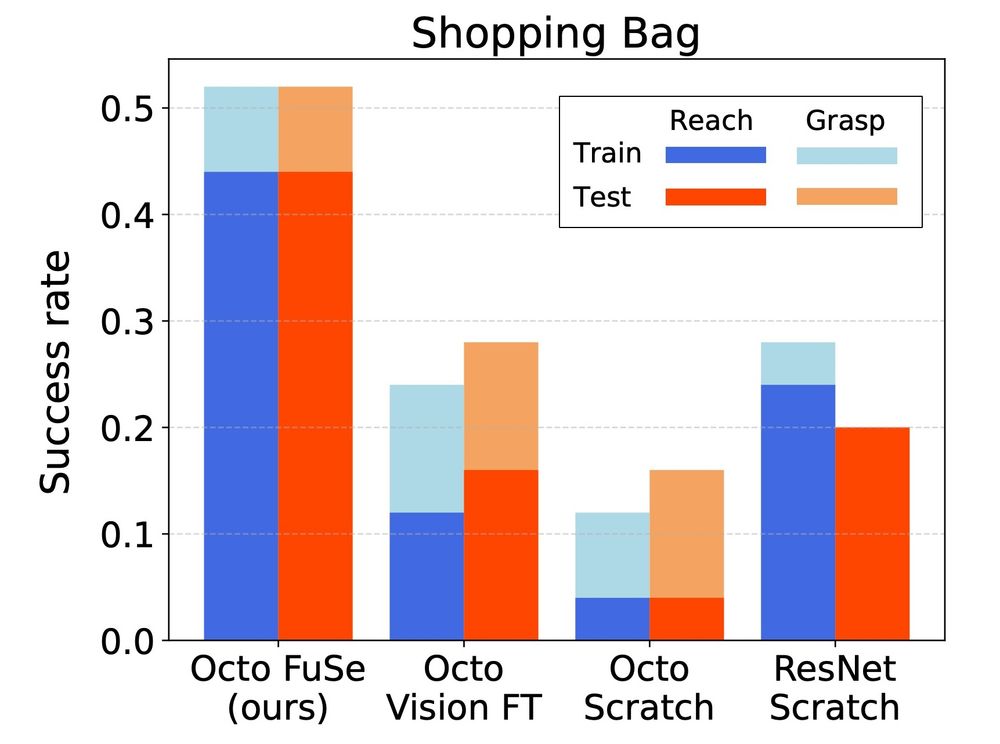

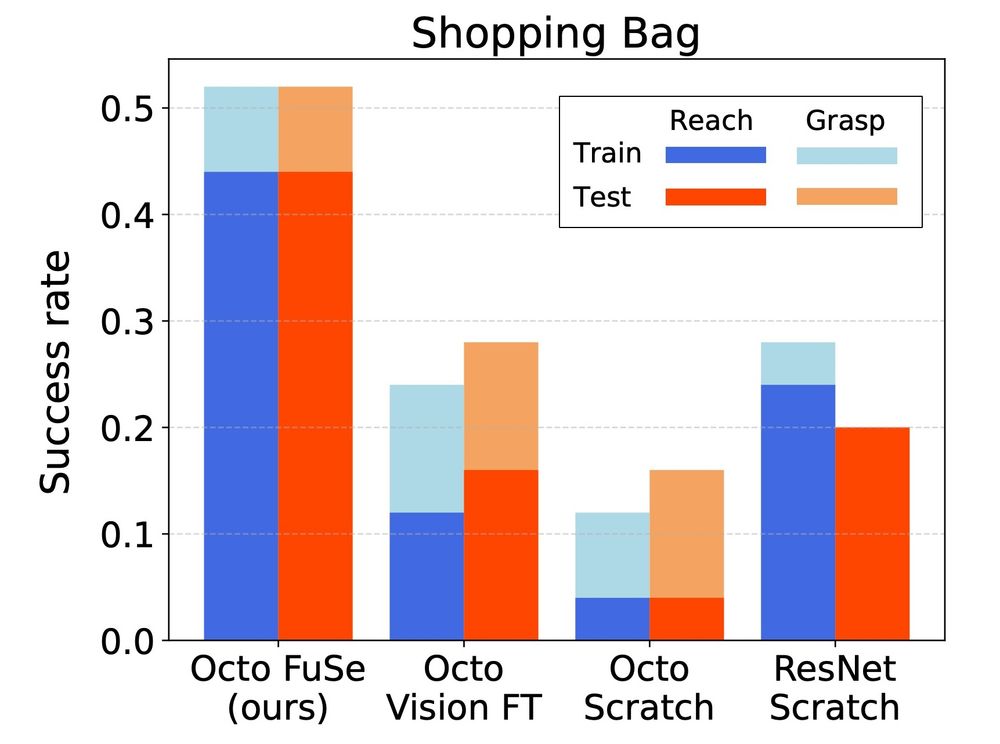

Pretrained generalist robot policies finetuned on multimodal data consistently outperform baselines finetuned only on vision data. This is particularly evident in tasks with partial visual observability, such as grabbing objects from a shopping bag.

13.01.2025 18:51

We use language instructions to ground all sensing modalities by introducing two auxiliary losses. These are needed because naively finetuning on a small-scale multimodal dataset leads the VLA to over-rely on vision and ignore the much sparser tactile and auditory signals.

13.01.2025 18:51
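The post does not spell out the two auxiliary losses. As one common way to ground a non-visual modality in language, the sketch below assumes a CLIP-style symmetric contrastive (InfoNCE) loss: each sample's tactile/audio embedding is pulled toward the embedding of its own language instruction and pushed away from the other instructions in the batch. This is an illustrative NumPy assumption, not the FuSe implementation; the function names and the temperature value are hypothetical, and the paper and repository define the actual losses.

```python
import numpy as np


def _log_softmax(x, axis):
    """Numerically stable log-softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def clip_style_contrastive_loss(obs_emb, lang_emb, temperature=0.07):
    """Symmetric InfoNCE (assumed form): row i of obs_emb matches row i of lang_emb.

    obs_emb: (B, K) embeddings of e.g. tactile or audio observations.
    lang_emb: (B, K) embeddings of the corresponding language instructions.
    """
    # L2-normalize so the logits are scaled cosine similarities.
    obs = obs_emb / np.linalg.norm(obs_emb, axis=1, keepdims=True)
    lang = lang_emb / np.linalg.norm(lang_emb, axis=1, keepdims=True)
    logits = obs @ lang.T / temperature  # (B, B) similarity matrix
    diag = np.arange(logits.shape[0])
    # Observation -> instruction: classify the right instruction for each observation.
    loss_obs_to_lang = -_log_softmax(logits, axis=1)[diag, diag].mean()
    # Instruction -> observation: classify the right observation for each instruction.
    loss_lang_to_obs = -_log_softmax(logits, axis=0)[diag, diag].mean()
    return 0.5 * (loss_obs_to_lang + loss_lang_to_obs)
```

In training, a term like this would be added to the action-prediction loss, so the policy is discouraged from minimizing the total loss by attending to vision alone.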

Ever wondered what robots 🤖 could achieve if they could not just see – but also feel and hear?

Introducing FuSe: a recipe for finetuning large vision-language-action (VLA) models with heterogeneous sensory data, such as vision, touch, sound, and more.

Details in the thread 👇

13.01.2025 18:51

Excited to share MaxInfoRL, a family of powerful off-policy RL algorithms! The core focus of this work was to develop simple, flexible, and scalable methods for principled exploration. Check out the thread below to see how MaxInfoRL meets these criteria while also achieving SOTA empirical results.

17.12.2024 17:48

GitHub - sukhijab/maxinforl_jax

We are also excited to share both JAX and PyTorch implementations, making it simple for RL researchers to integrate MaxInfoRL into their training pipelines.

JAX (built on jaxrl): github.com/sukhijab/max...

PyTorch (based on @araffin.bsky.social’s SB3): github.com/sukhijab/max...

17.12.2024 17:46

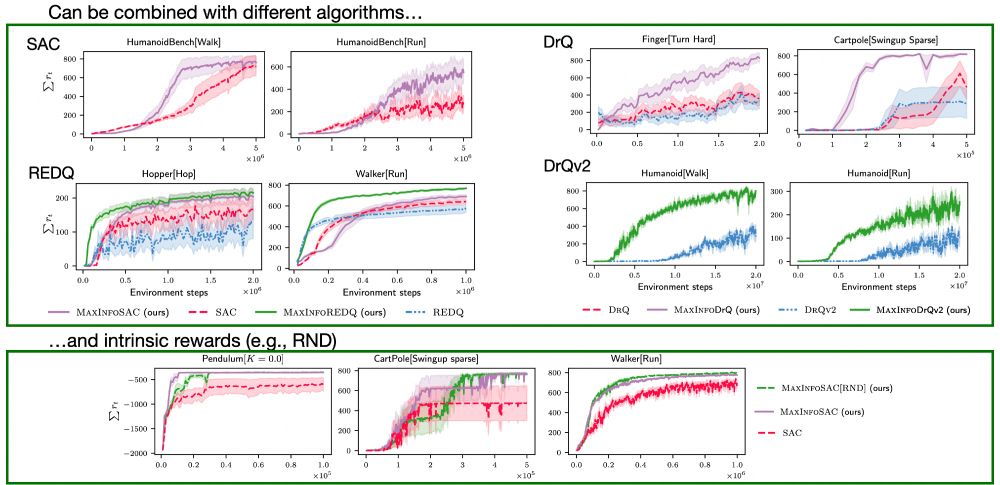

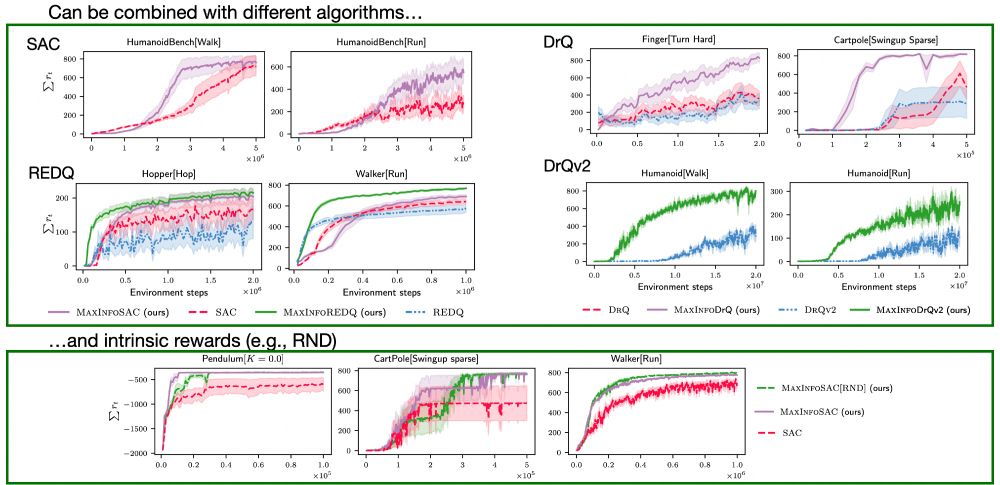

Combined with DrQv2 and DrM, MaxInfoRL achieves state-of-the-art model-free performance on hard visual control tasks such as the DMControl humanoid and dog tasks, improving both sample efficiency and steady-state performance.

17.12.2024 17:46

MaxInfoRL is a simple, flexible, and scalable add-on to most RL advancements. We combine it with various algorithms, such as SAC, REDQ, DrQv2, DrM, and more – consistently showing improved performance over the respective backbones.

17.12.2024 17:46

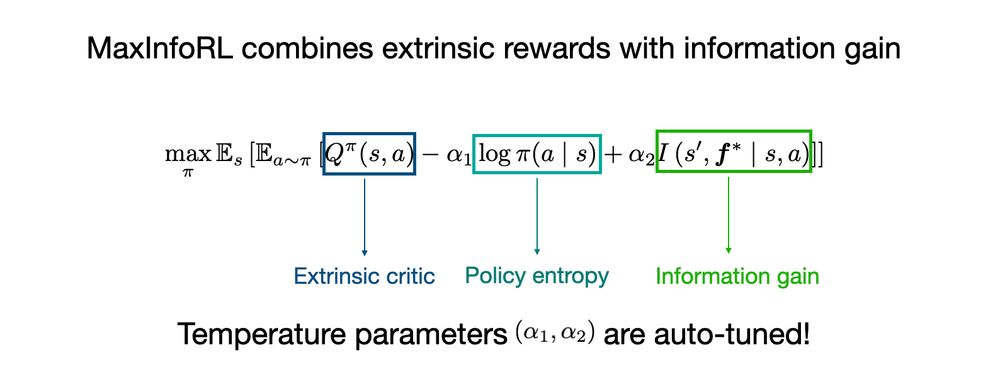

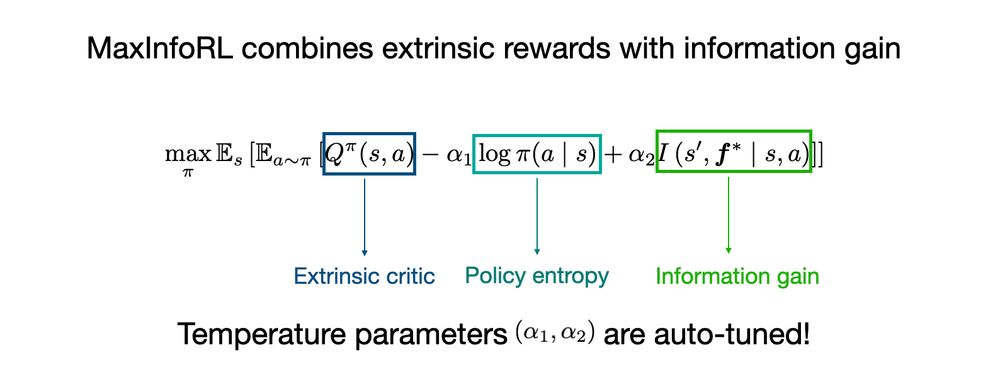

While standard Boltzmann exploration (e.g., SAC) focuses only on action entropy, MaxInfoRL maximizes entropy in both state and action spaces! This proves crucial in hard-exploration settings.

17.12.2024 17:46
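Schematically, and as our own reading of the post rather than the paper's exact formulation, the difference is one extra reward term. SAC-style Boltzmann exploration maximizes return plus policy entropy with temperature α; MaxInfoRL additionally rewards the information gain I about the unknown dynamics f from observing the next state, with its own weight β (α, β, and f are our notation):

```latex
J_{\text{SAC}}(\pi) = \mathbb{E}_{\pi}\Big[\sum_{t} \gamma^{t}\big(r(s_t,a_t) + \alpha\,\mathcal{H}(\pi(\cdot\mid s_t))\big)\Big]

J_{\text{MaxInfoRL}}(\pi) = \mathbb{E}_{\pi}\Big[\sum_{t} \gamma^{t}\big(r(s_t,a_t) + \alpha\,\mathcal{H}(\pi(\cdot\mid s_t)) + \beta\, I(s_{t+1}; f \mid s_t, a_t)\big)\Big]
```

The entropy term spreads probability over actions in states the agent already visits, while the information-gain term favors transitions the agent is still uncertain about, which is what pushes exploration in state space.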

The core principle is to balance extrinsic rewards with intrinsic exploration. MaxInfoRL achieves this by 1) using an ensemble of dynamics models to estimate information gain, and 2) incorporating this as an automatically-tuned exploration bonus in addition to policy entropy.

17.12.2024 17:46
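The two ingredients above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the released maxinforl_jax code: information gain is approximated from the disagreement of an ensemble of dynamics models under a Gaussian-noise model, the bonus is added to the extrinsic reward, and its weight is tuned with a SAC-style dual step toward a target bonus level. The function names, the noise variance, and the target are hypothetical.

```python
import numpy as np


def info_gain_bonus(ensemble_preds, noise_var=1e-2):
    """Exploration bonus from the disagreement of an ensemble of dynamics models.

    ensemble_preds: (E, B, D) next-state predictions of E models for a batch
    of B (state, action) pairs with D-dimensional states.
    Treating ensemble variance as epistemic uncertainty and assuming Gaussian
    noise with variance noise_var (a hypothetical value), each dimension
    contributes 0.5 * log(1 + var_d / noise_var), the Gaussian information gain.
    """
    var = ensemble_preds.var(axis=0)                      # (B, D) disagreement
    return 0.5 * np.log1p(var / noise_var).sum(axis=-1)  # (B,)


def augmented_reward(extrinsic, bonus, log_beta):
    """Extrinsic reward plus the weighted exploration bonus.

    The policy-entropy term is assumed to be handled by the base algorithm
    (e.g. SAC's own temperature), so it does not appear here.
    """
    return extrinsic + np.exp(log_beta) * bonus


def update_log_beta(log_beta, mean_bonus, target_bonus, lr=1e-3):
    """SAC-style dual step on log(beta), an assumed tuning rule.

    A bonus below target raises beta (more exploration pressure);
    a bonus above target lowers it.
    """
    return log_beta - lr * (mean_bonus - target_bonus)
```

Because the bonus in this sketch only changes the reward fed to the critic, the same wrapper could sit in front of SAC, REDQ, or DrQv2-style learners, in the spirit of the "add-on" description above.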

🚨 New reinforcement learning algorithms 🚨

Excited to announce MaxInfoRL, a class of model-free RL algorithms that solves complex continuous control tasks (including vision-based ones!) by steering exploration towards informative transitions.

Details in the thread 👇

17.12.2024 17:46
