The EPFL NLP lab is looking to hire a postdoctoral researcher on the topic of designing, training, and evaluating multilingual LLMs:

docs.google.com/document/d/1...

Come join our dynamic group in beautiful Lausanne!

@smamooler.bsky.social

PhD Candidate at @icepfl.bsky.social | Ex-Research Intern @Google DeepMind 👩🏻‍💻 | Working on multi-modal AI reasoning models in scientific domains | https://smamooler.github.io/


Also: not the usual #NLProc topic, but if you're working on genetic AI models, I’d love to connect! I'm exploring the intersection of behavioral genomics and multi-modal AI for behavior understanding.

26.07.2025 15:13

Excited to attend #ACL2025NLP in Vienna next week!

I’ll be presenting our #NAACL2025 paper, PICLe, at the first workshop on Large Language Models and Structure Modeling (XLLM) on Friday. Come by our poster if you’re into NER and ICL with pseudo-annotation.

arxiv.org/abs/2412.11923

🚨 New Preprint!!

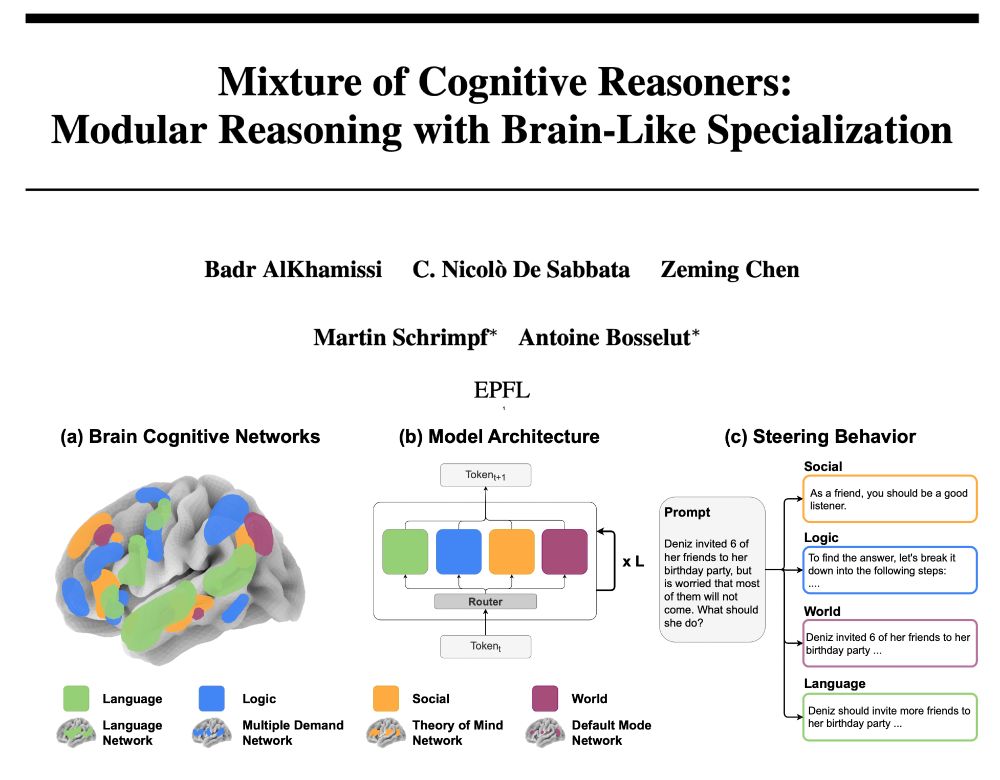

Thrilled to share with you our latest work: “Mixture of Cognitive Reasoners”, a modular transformer architecture inspired by the brain’s functional networks: language, logic, social reasoning, and world knowledge.

1/ 🧵👇

🌍 ✨ Introducing MELT Workshop 2025: Multilingual, Multicultural, and Equitable Language Technologies

A workshop on building inclusive, culturally-aware LLMs!

🧠 Bridging the language divide in AI

📅 October 10, 2025, co-located with @colmweb.org

🔗 melt-workshop.github.io

#MeltWorkshop2025

Super excited to share that our paper "A Logical Fallacy-Informed Framework for Argument Generation" has received the Outstanding Paper Award 🎉🎉 at NAACL 2025!

Paper: aclanthology.org/2025.naacl-l...

Code: github.com/lucamouchel/...

#NAACL2025

Paper: arxiv.org/abs/2412.11923

Code: github.com/sMamooler/PI...

Couldn't attend @naaclmeeting.bsky.social in person as I didn't get a visa on time 🤷‍♀️ My colleague @mismayil.bsky.social will present PICLe on my behalf today, May 1st, at 3:15 pm in RUIDOSO. Feel free to reach out if you want to chat more!

01.05.2025 08:39

Check out VinaBench, our new #CVPR2025 paper. We introduce a benchmark for faithful and consistent visual narratives.

Paper: arxiv.org/abs/2503.20871

Project Page: silin159.github.io/Vina-Bench/

Excited to share that we will present PICLe at the @naaclmeeting.bsky.social main conference!

10.03.2025 10:21

🚨 New Preprint!!

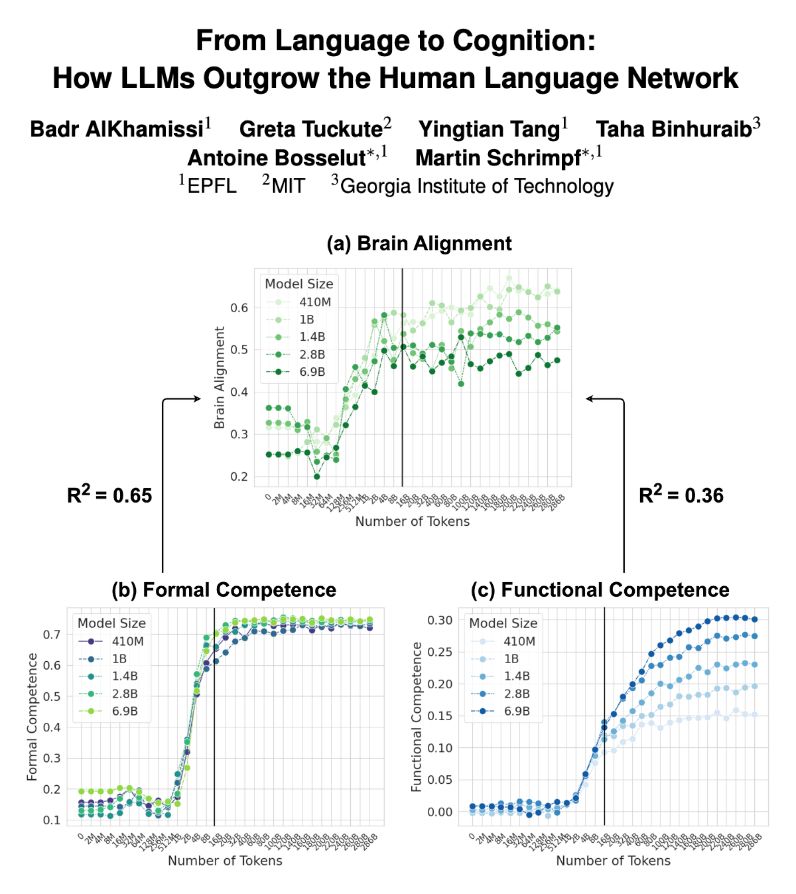

LLMs trained on next-word prediction (NWP) show high alignment with brain recordings. But what drives this alignment—linguistic structure or world knowledge? And how does this alignment evolve during training? Our new paper explores these questions. 👇🧵

Lots of great news out of the EPFL NLP lab these last few weeks. We'll be at @iclr-conf.bsky.social and @naaclmeeting.bsky.social in April and May to present some of our work on training dynamics, model representations, reasoning, and AI democratization. Come chat with us during the conferences!

25.02.2025 09:18

🚨 New Paper!

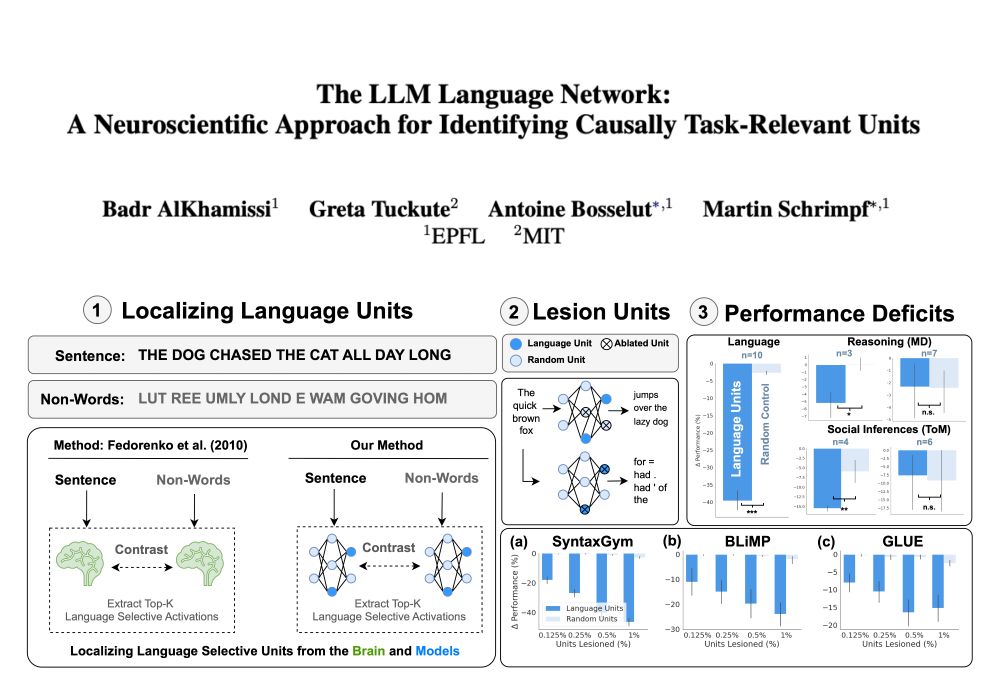

Can neuroscience localizers uncover brain-like functional specializations in LLMs? 🧠🤖

Yes! We analyzed 18 LLMs and found units mirroring the brain's language, theory of mind, and multiple demand networks!
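For readers new to localizers, here is a hypothetical, minimal sketch of the general idea, assuming the usual fMRI-style contrast: record each unit's activation on target stimuli (e.g. sentences) and on control stimuli (e.g. non-word strings), score each unit with a Welch t-statistic, and keep the most selective fraction. The function names, the `top_fraction` parameter, and the toy activations are illustrative assumptions, not the code used in the paper.

```python
# Hypothetical sketch of an fMRI-style functional localizer applied to LLM units.
# Names, parameters, and toy activations are illustrative, not the paper's code.
from math import sqrt
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t-statistic for mean(a) - mean(b), allowing unequal variances."""
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se if se > 0 else 0.0


def localize(
    act_target: list[list[float]],
    act_control: list[list[float]],
    top_fraction: float = 0.01,
) -> list[int]:
    """Return indices of the units most selective for the target condition.

    act_target[i][u] is unit u's activation on target stimulus i;
    act_control[j][u] is the same unit's activation on control stimulus j.
    """
    n_units = len(act_target[0])
    # One t-statistic per unit: target activations vs. control activations.
    t_stats = [
        welch_t([row[u] for row in act_target], [row[u] for row in act_control])
        for u in range(n_units)
    ]
    # Keep the top fraction of units (at least one), ranked by t-statistic.
    k = max(1, round(top_fraction * n_units))
    ranked = sorted(range(n_units), key=lambda u: t_stats[u], reverse=True)
    return sorted(ranked[:k])


sentences = [[1.0, 0.1, 0.5], [1.2, 0.0, 0.4], [0.9, 0.2, 0.6]]  # 3 units, sentence stimuli
nonwords = [[0.1, 0.1, 0.5], [0.2, 0.0, 0.3], [0.0, 0.2, 0.6]]   # same units, non-word stimuli
print(localize(sentences, nonwords, top_fraction=0.34))  # → [0]
```

Swapping in a different contrast (e.g. false-belief vs. false-photograph stories for theory of mind) reuses the same scoring; only the stimuli change.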

w/ @gretatuckute.bsky.social, @abosselut.bsky.social, @mschrimpf.bsky.social

🧵👇

🙏 Amazing collaboration with my co-authors and advisors @smontariol.bsky.social, @abosselut.bsky.social, and @trackingskills.bsky.social

📖 Check out the full paper here: arxiv.org/pdf/2412.11923

17.12.2024 14:51

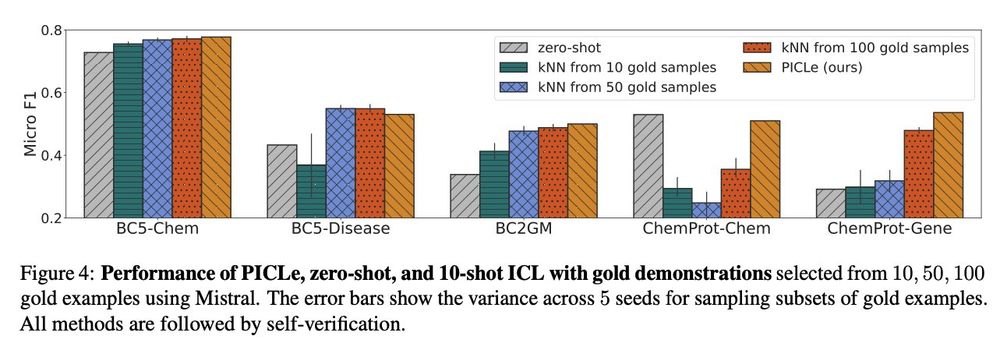

📊 We evaluate PICLe on 5 biomedical NED datasets and find:

✨ With zero human annotations, PICLe outperforms ICL in low-resource settings, where limited gold examples can be used as in-context demonstrations!

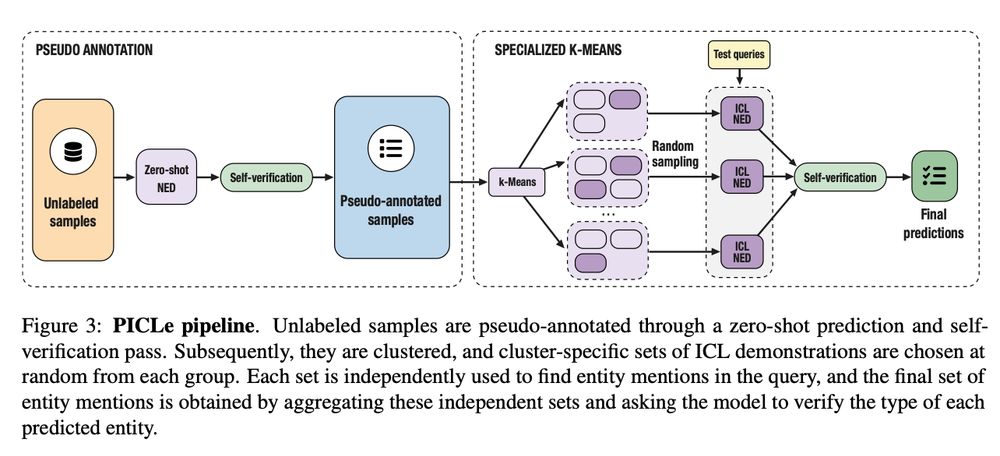

⚙️ How does PICLe work?

1️⃣ LLMs annotate demonstrations in a zero-shot first pass.

2️⃣ Synthetic demos are clustered, and in-context sets are sampled.

3️⃣ Entity mentions are predicted using each set independently.

4️⃣ Self-verification selects the final predictions.
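The four steps above can be sketched as a small pipeline. This is a hypothetical, minimal sketch: the LLM calls (`annotate`, `predict`, `verify`) and the sentence embedder (`embed`) are stubs you would replace with real model calls, the tiny k-means stands in for whatever clustering PICLe actually uses, and both the sampling rule (one demo per cluster per set) and the union-then-verify aggregation are assumptions, not the paper's exact procedure.

```python
# Hypothetical PICLe-style pipeline sketch; every LLM call is a pluggable stub.
import random
from typing import Callable

Demo = tuple[str, list[str]]  # (sentence, entity mentions pseudo-annotated by the LLM)


def kmeans(points: list[list[float]], k: int, iters: int = 10) -> list[int]:
    """Tiny k-means returning a cluster id per point; the first k points seed the centroids."""
    k = min(k, len(points))
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest centroid (squared Euclidean distance).
        for i, p in enumerate(points):
            labels[i] = min(
                range(k),
                key=lambda c: sum((x - y) ** 2 for x, y in zip(p, centroids[c])),
            )
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [points[i] for i in range(len(points)) if labels[i] == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def picle(
    unlabeled: list[str],
    test_sentence: str,
    annotate: Callable[[str], list[str]],             # zero-shot LLM annotation (stub)
    embed: Callable[[str], list[float]],              # sentence embedding (stub)
    predict: Callable[[list[Demo], str], list[str]],  # in-context LLM prediction (stub)
    verify: Callable[[str, str], bool],               # LLM self-verification (stub)
    n_clusters: int = 2,
    n_sets: int = 3,
    seed: int = 0,
) -> list[str]:
    rng = random.Random(seed)
    # 1) Zero-shot first pass: the LLM pseudo-annotates unlabeled sentences.
    demos: list[Demo] = [(s, annotate(s)) for s in unlabeled]
    # 2) Cluster the synthetic demos, then sample in-context sets
    #    (assumption: one demo drawn from each non-empty cluster per set).
    labels = kmeans([embed(s) for s, _ in demos], n_clusters)
    clusters = [[d for d, c in zip(demos, labels) if c == k] for k in range(n_clusters)]
    sets = [[rng.choice(c) for c in clusters if c] for _ in range(n_sets)]
    # 3) Predict entity mentions independently with each in-context set.
    candidates = {m for demo_set in sets for m in predict(demo_set, test_sentence)}
    # 4) Self-verification: keep only the candidates the verifier accepts.
    return sorted(m for m in candidates if verify(test_sentence, m))


GENES = {"BRCA1", "TP53", "EGFR", "KRAS"}  # toy verifier knowledge
unlabeled = ["BRCA1 is a gene.", "We studied TP53.", "No entities here.", "EGFR and KRAS matter."]
result = picle(
    unlabeled,
    "Mutations in TP53 and EGFR were found.",
    annotate=lambda s: [w.strip(".") for w in s.split() if w.strip(".").isupper()],
    embed=lambda s: [float(len(s)), float(sum(w.isupper() for w in s.split()))],
    predict=lambda demos, s: [w.strip(".") for w in s.split() if w[0].isupper()],
    verify=lambda s, m: m in GENES,
)
print(result)  # → ['EGFR', 'TP53']
```

In the toy run, the stub predictor over-generates ("Mutations"), and the verification step filters it out. Pooling candidates across sets before verifying is one design choice; majority voting across sets would be another.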

💡 Building on our findings, we introduce PICLe: a framework for in-context learning powered by noisy, pseudo-annotated demonstrations. 🛠️ No human labels, no problem! 🚀

17.12.2024 14:51

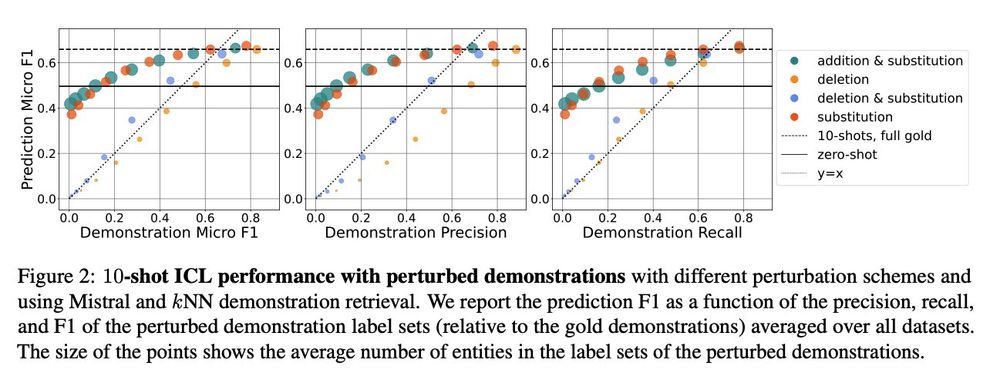

📊 Key finding: A semantic mapping between demonstration context and label is essential for in-context task transfer. BUT even weak semantic mappings can provide enough signal for effective adaptation in NED!

17.12.2024 14:51

🔍 It’s unclear which demonstration attributes enable in-context learning in tasks that require structured, open-ended predictions (such as NED).

We use perturbation schemes that create demonstrations with varying correctness levels to analyze key demonstration attributes.
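To make "varying correctness levels" concrete, here is a hypothetical sketch of one possible perturbation scheme: keep a chosen fraction of a demonstration's gold mentions and swap the rest for random non-entity tokens from the same sentence. The function name, the `correct_ratio` knob, and the token-swap rule are assumptions for illustration; the paper's actual schemes may differ.

```python
# Hypothetical demonstration-perturbation sketch; not the paper's exact schemes.
import random


def perturb_demo(sentence: str, gold: list[str], correct_ratio: float, seed: int = 0) -> list[str]:
    """Return a noisy label set for one demonstration.

    A fraction `correct_ratio` of the gold mentions is kept; each dropped gold
    mention is replaced by a random non-entity token from the same sentence.
    """
    rng = random.Random(seed)
    n_keep = round(correct_ratio * len(gold))
    kept = rng.sample(gold, n_keep)
    # Candidate wrong labels: sentence tokens that are not part of any gold mention.
    gold_tokens = {tok for mention in gold for tok in mention.split()}
    distractors = [t.strip(".,") for t in sentence.split() if t.strip(".,") not in gold_tokens]
    n_wrong = min(len(gold) - n_keep, len(distractors))
    return kept + rng.sample(distractors, n_wrong)


sentence = "Mutations in BRCA1 and TP53 raise cancer risk."
gold = ["BRCA1", "TP53"]
for ratio in (1.0, 0.5, 0.0):
    print(ratio, perturb_demo(sentence, gold, ratio))
```

Sweeping `correct_ratio` from 1.0 down to 0.0 yields demonstrations that share the same context but carry progressively less correct labels, which is the kind of controlled variation needed to test which attributes matter.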

🚀 Introducing PICLe: a framework for in-context named-entity detection (NED) using pseudo-annotated demonstrations.

🎯 No human labeling needed, yet it outperforms few-shot learning with human annotations in low-resource settings!

#AI #NLProc #LLMs #ICL #NER