A meta-point of this paper is that category theory has utility for reasoning about current problems of interest in mainstream machine learning. The theory is predictive, not just descriptive. 🧵(1/6)

06.03.2025 04:38 — 👍 5 🔁 1 💬 1 📌 0

For future work, we're excited about exploring the design space of SymDiff for improving molecular/protein diffusion models

My co-authors and I will also be at ICLR presenting this work, so feel free to come and chat with us about SymDiff!

04.03.2025 15:30 — 👍 0 🔁 0 💬 1 📌 0

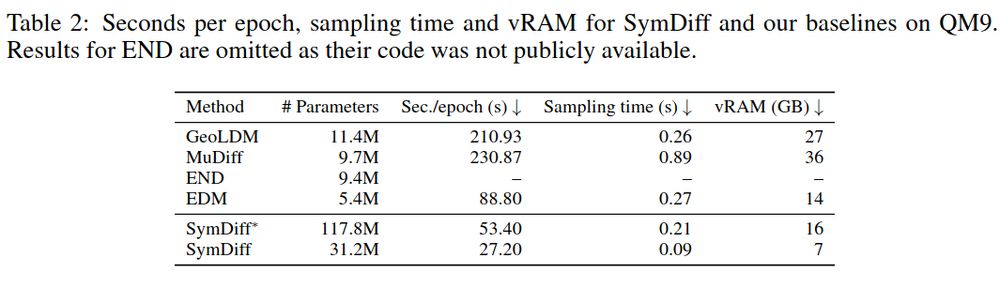

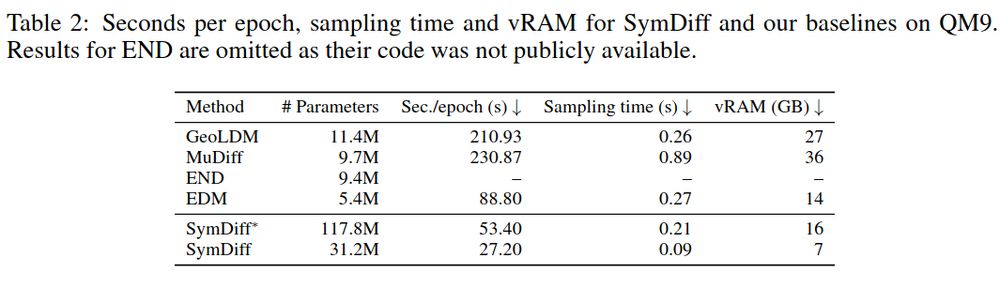

In addition, thanks to the flexibility SymDiff allows, we report much lower computational costs than message-passing-based EGNNs

(8/9)

We note that the generality of our methodology should also allow SymDiff to serve as a drop-in replacement within these other methods

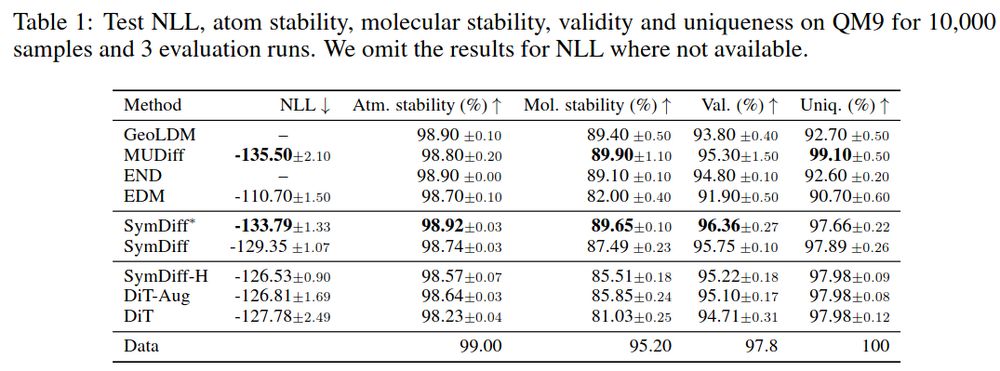

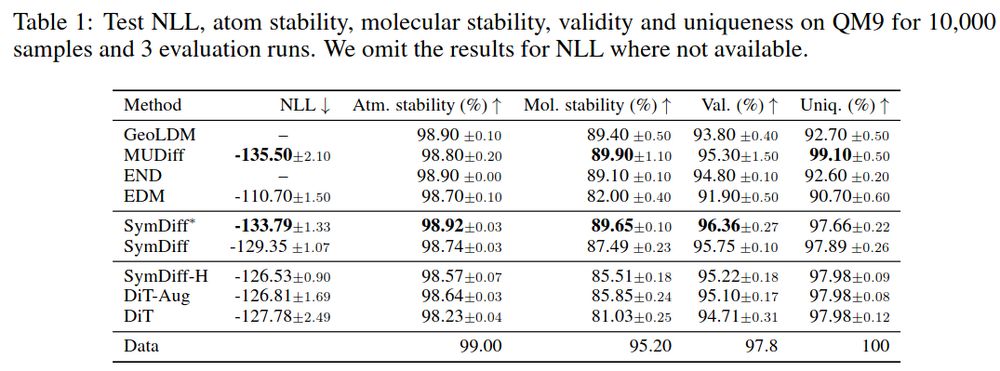

We show substantial improvements over EDM on QM9 and GEOM-Drugs, and competitive performance against much more sophisticated recent baselines (all of which use intrinsically equivariant architectures)

To validate our methodology, we apply SymDiff to molecular generation with E(3)-equivariance

We take the basic molecule generation framework of EDM (Hoogeboom et al. 2022) and use SymDiff with Diffusion Transformers as a drop-in replacement for the EGNN used in its reverse process

✅ We show how to bypass the previous requirement of an intrinsically equivariant sub-network by using the Haar measure, for even more flexibility

✅ We also sketch how to extend SymDiff to score-based and flow-based models

✅ We derive a novel objective to train our new model

✅ We overcome previous issues with symmetrisation: the pathologies of canonicalisation, and the computational cost and errors involved in evaluating frame averaging/probabilistic symmetrisation

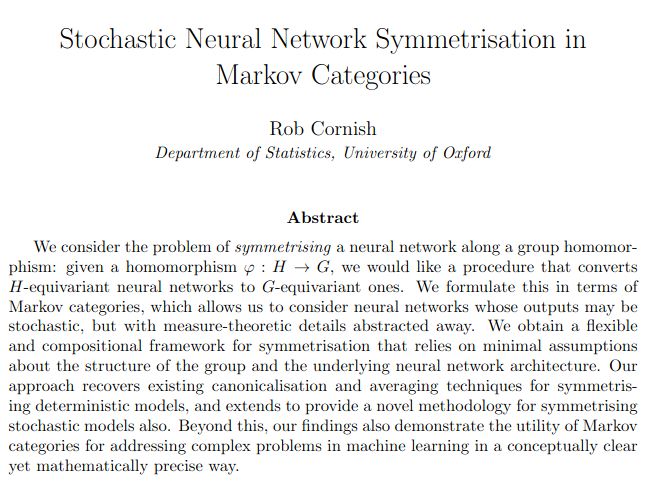

This provides us with the mathematical foundations to build SymDiff

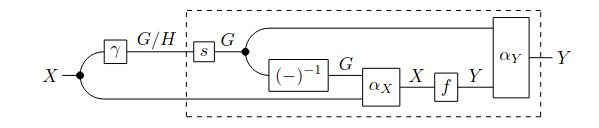

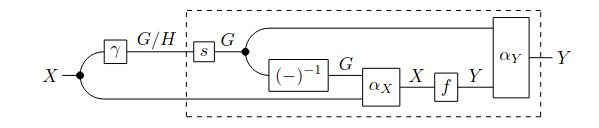

Our key idea is to "symmetrise" the reverse kernels of a diffusion model to build equivariance with unconstrained architectures
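
To make the idea concrete, here is a minimal NumPy sketch of one reverse step made E(3)-equivariant by symmetrisation. It assumes (i) translations are handled with EDM's zero centre-of-mass subspace and (ii) the group element is drawn from the Haar measure on O(3), the variant this thread mentions, rather than from a learned equivariant distribution. `toy_base_kernel` and all helper names are hypothetical stand-ins, not SymDiff's actual network or code.

```python
import numpy as np

def haar_orthogonal(rng: np.random.Generator, dim: int = 3) -> np.ndarray:
    """Draw a Haar-distributed (uniformly random) matrix from O(dim) via QR of a Gaussian matrix."""
    q, r = np.linalg.qr(rng.standard_normal((dim, dim)))
    return q * np.sign(np.diag(r))  # sign correction makes the draw exactly Haar

def centre(x: np.ndarray) -> np.ndarray:
    """Project positions of shape (n_atoms, 3) onto the zero centre-of-mass subspace."""
    return x - x.mean(axis=0, keepdims=True)

def toy_base_kernel(x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical stand-in for an unconstrained network (e.g. a Transformer) defining one
    stochastic reverse step. The fixed offset makes it deliberately NOT rotation-equivariant."""
    offset = np.array([0.1, 0.0, 0.0])
    return 0.9 * x + offset + 0.05 * rng.standard_normal(x.shape)

def symmetrised_step(x, base_kernel, rng, g=None):
    """One E(3)-equivariant reverse step built from a non-equivariant base kernel.

    Translations: work in the zero centre-of-mass subspace (as in EDM).
    Rotations/reflections: sample g ~ Haar(O(3)), run the base kernel on g^{-1}.x,
    then map the sample back with g. A group element acts on rows as x @ g.T.
    Passing g explicitly is only for testing.
    """
    if g is None:
        g = haar_orthogonal(rng)
    y = base_kernel(centre(x) @ g, rng)  # centre(x) @ g == g^{-1}.centre(x) since g is orthogonal
    return centre(y) @ g.T              # act with g on the re-centred output
```

Because the Haar measure is invariant, replacing g by h·g leaves its distribution unchanged, so the step is equivariant in distribution. Coupling the randomness (using h·g and the same base-kernel noise) turns this into an exact identity that can be checked directly.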

This is where recent work by Cornish (2024) (arxiv.org/abs/2406.11814) comes in. It generalises all previous work and extends it to the stochastic case using category-theoretic arguments, under the name "stochastic symmetrisation"

A key limitation of this line of work, however, is that it only considers deterministic functions, i.e. it cannot make the stochastic kernels involved in generative modelling equivariant

This decouples prediction from the equivariance constraint, so the approach benefits from the flexibility of an arbitrary base model that is not subject to the constraints of intrinsically equivariant architectures.

As an alternative, people have proposed canonicalisation/frame averaging/probabilistic symmetrisation, which make arbitrary networks equivariant through (possibly learned) group averaging
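
Canonicalisation is the simplest of these: instead of averaging, it picks one input-dependent group element. A minimal sketch, assuming a PCA-based frame with a third-moment sign convention (a common heuristic, not the specific method of any paper cited here); `pca_frame`, `canonicalise` and the toy map `f` are hypothetical. The frame is only well defined when the covariance eigenvalues are distinct and the sign statistics are non-zero, which illustrates the kind of canonicalisation pathology this thread refers to.

```python
import numpy as np

def pca_frame(x: np.ndarray) -> np.ndarray:
    """Orthogonal 3x3 frame whose columns are the principal axes of the centred point cloud x (n, 3)."""
    xc = x - x.mean(axis=0)
    _, vecs = np.linalg.eigh(xc.T @ xc)  # eigenvalues ascending; eigenvectors only defined up to sign
    # Fix each axis's sign so the third moment of the projections is positive.
    signs = np.sign(((xc @ vecs) ** 3).sum(axis=0))
    signs[signs == 0] = 1.0  # degenerate case: the convention breaks down here
    return vecs * signs

def canonicalise(f, x: np.ndarray) -> np.ndarray:
    """O(3)-equivariant wrapper around an arbitrary map f: x -> g_x . f(g_x^{-1} . x)."""
    g = pca_frame(x)
    return f(x @ g) @ g.T  # x @ g expresses x in the canonical frame; @ g.T maps back
```

For generic inputs the frame satisfies g_{h·x} = h·g_x, so any `f` (even a non-equivariant one) becomes O(3)-equivariant. Near degenerate eigenvalues, however, the frame can jump discontinuously, which is one motivation for averaging-based and stochastic alternatives.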

One common criticism of (intrinsically) equivariant architectures is that, because of the architectural constraints required to ensure equivariance, they suffer from reduced expressivity, greater computational cost and higher implementation complexity

There's been a lot of recent interest in whether equivariant models are really needed for data containing symmetries (think AlphaFold 3)

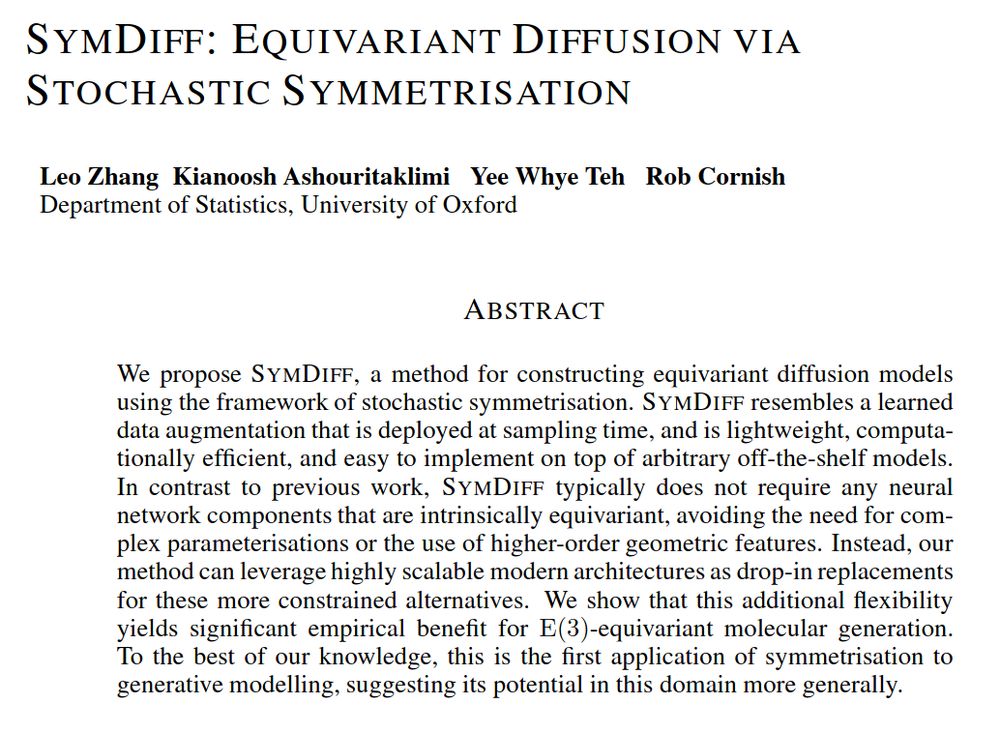

In our new paper (accepted at ICLR), we propose the first framework for constructing equivariant diffusion models via symmetrisation

For molecular generation, this allows us to guarantee E(3)-equivariance using highly scalable standard architectures such as Diffusion Transformers instead of EGNNs
